Artificial intelligence (AI) applications like ChatGPT run on artificial neural networks, which mimic brain cells and are trained on huge data sets at a high energy cost. Spiking neurons, which use less energy, could ease this problem, but training them has been challenging. A recent study by University of Bonn researchers offers a solution that could lead to more energy-efficient AI. The researchers describe their methods and findings in a paper published in Physical Review Letters.

The human brain is remarkable: it uses little energy yet can perform complex tasks. AI, while powerful, consumes vast amounts of energy. Like the brain, AI relies on networks of neurons, but artificial neurons exchange signals continuously, whereas biological neurons send short pulses, or spikes.

Making artificial neural networks more brain-like

“Biological neurons do things differently,” explains researcher Raoul-Martin Memmesheimer in a press release issued by the University of Bonn. “They communicate with the help of short voltage pulses, known as action potentials or spikes. These occur fairly rarely, so the networks get by on much less energy.”

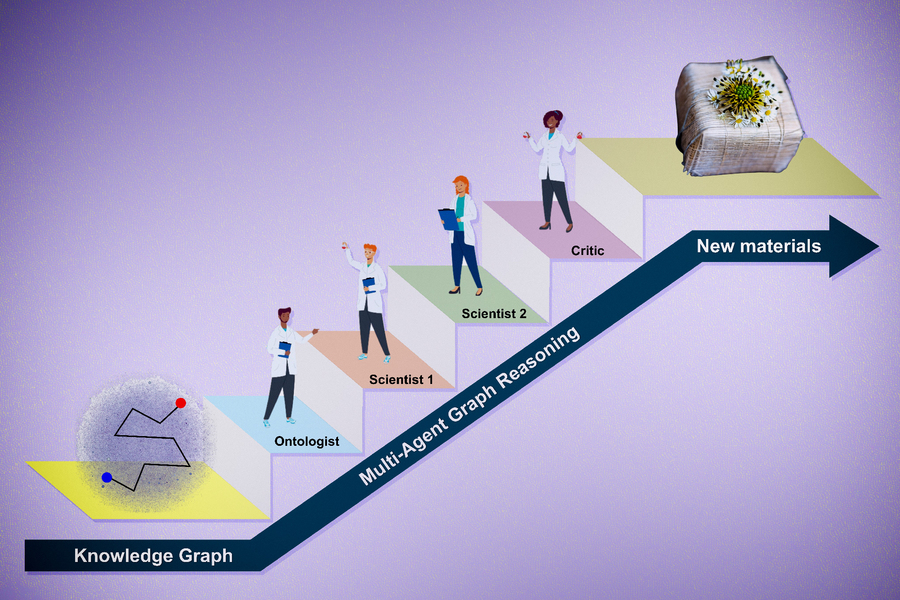

Training a neural network involves showing it examples, such as photos, so that it learns to recognize objects. Standard networks adjust their connections gradually, but spiking networks cannot change their output gradually in the same way, because a neuron either spikes or doesn’t. The researchers explain that in these networks spikes can only shift in time, not in intensity.

Because spike times shift gradually, the way spiking neurons influence each other can still be adjusted much as in traditional networks, through both the strengths of the connections and the timing of the spikes. These adjustments can be made continuously, so the effective training methods developed for non-spiking networks carry over.
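
To make the idea concrete, here is a minimal Python sketch of how a spike time can be tuned by gradient descent. It is a toy illustration only, not the neuron model or training procedure from the Bonn study: it assumes a single non-leaky integrate-and-fire neuron driven by a constant input of strength `w`, and the names `THRESHOLD`, `spike_time`, `spike_time_grad` and `train` are hypothetical. In this toy model the spike time changes smoothly with the connection strength, which is what makes gradual adjustment possible.

```python
# Toy model (an assumption for illustration, not the model used in the paper):
# a non-leaky integrate-and-fire neuron driven by a constant input of strength w.
# Its voltage grows as V(t) = w * t, and it spikes when V reaches THRESHOLD.

THRESHOLD = 1.0  # hypothetical firing threshold


def spike_time(w: float) -> float:
    """Time of the first spike for an input strength w > 0."""
    return THRESHOLD / w


def spike_time_grad(w: float) -> float:
    """Derivative of the spike time with respect to w."""
    return -THRESHOLD / (w * w)


def train(w: float, target: float, lr: float = 0.05, steps: int = 200) -> float:
    """Gradient descent on the squared error between spike time and target."""
    for _ in range(steps):
        error = spike_time(w) - target
        # Chain rule: d(error^2)/dw = 2 * error * d(spike_time)/dw
        w -= lr * 2.0 * error * spike_time_grad(w)
    return w


if __name__ == "__main__":
    w = train(w=0.4, target=1.25)
    print(f"weight={w:.3f}, spike time={spike_time(w):.3f}")
```

In this sketch, a stronger input makes the neuron spike earlier and a weaker one makes it spike later, so a small change in `w` produces a small shift in the spike time rather than an abrupt jump.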

The Bonn researchers have shown that this approach works by teaching a spiking network to identify handwritten numbers. They plan to scale up, with speech recognition as the next goal. The approach could make AI training more energy-efficient and bring it closer to how biological brains operate.

