NASA’s Europa Clipper spacecraft launched successfully on October 14, 2024, from Kennedy Space Center in Florida.

The mission is dedicated to exploring Europa, one of Jupiter’s moons, which scientists believe hides a vast ocean beneath its icy surface.

The spacecraft took off on a SpaceX Falcon Heavy rocket, which is one of the most powerful rockets available today.

Instead of taking a direct path, Europa Clipper will use gravity assists from Mars and Earth to gain speed and adjust its trajectory. This journey will take about five and a half years, with the spacecraft expected to arrive at Jupiter in April 2030.

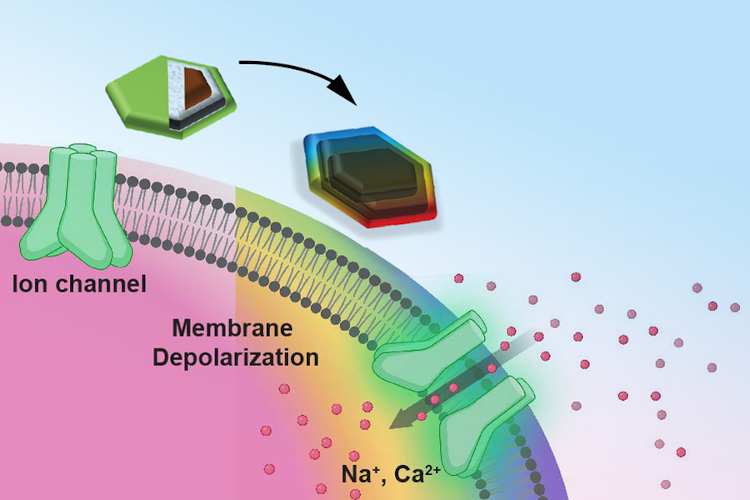

Europa Clipper carries nine science instruments to learn more about Europa. These include cameras to take detailed images and spectrometers to study the moon’s surface composition. An ice-penetrating radar will look beneath the ice, and a magnetometer will measure the moon’s magnetic field.

The mission plans to fly by Europa nearly 50 times, passing as close as 16 miles (about 25 kilometers) above the surface and gathering data from many different regions of the moon. This information will help plan future missions, possibly even one that could land on Europa or explore its ocean directly.

Life on Europa?

One of the mission’s main goals is to determine whether Europa has conditions suitable for life. Scientists think the ocean beneath Europa’s icy surface could possibly support life.

They are curious whether life forms could exist in that ocean, similar to those found around hydrothermal vents on Earth’s ocean floor. The mission won’t search for life directly; instead, it will assess the moon’s habitability.

“This launch isn’t just the next chapter in our exploration of the solar system,” said project manager Jordan Evans. “It’s a leap toward uncovering the mysteries of another ocean world, driven by our shared curiosity and continued search to answer the question, ‘Are we alone?’”

Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.
