John Hopfield and Geoffrey Hinton have won the Nobel Prize in Physics 2024 for foundational discoveries and inventions that enable machine learning with artificial neural networks.

John J. Hopfield is a Professor at Princeton University. Geoffrey E. Hinton is a Professor at the University of Toronto.

Two documents, titled “Popular science background: They used physics to find patterns in information” and “Scientific background: For foundational discoveries and inventions that enable machine learning with artificial neural networks,” give detailed explanations of the work of Hopfield and Hinton.

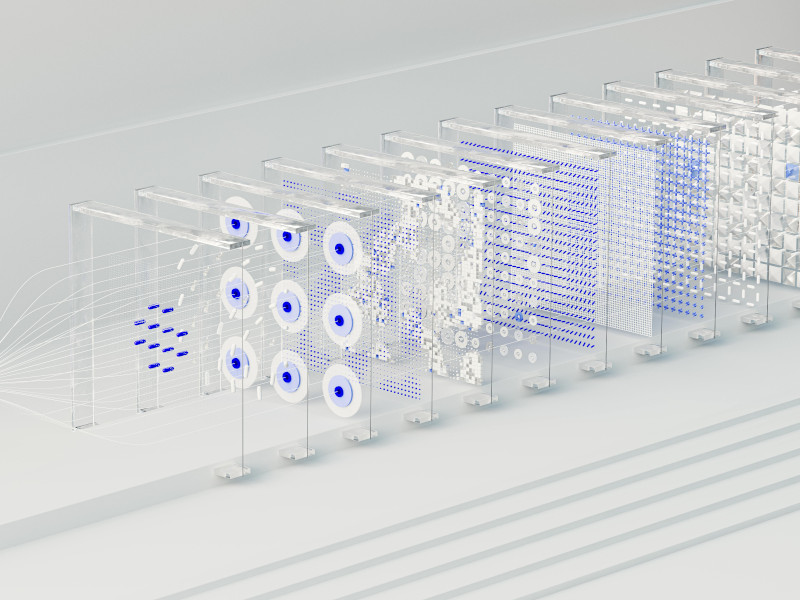

Artificial neural networks

Artificial neural networks (ANNs) are computer systems based on large collections of “neurons,” or nodes, connected by “synapses.” After suitable training, ANNs can perform certain tasks, such as recognizing patterns in data.

In the 1980s, Hopfield developed an associative memory based on a simple ANN. Then Hinton co-developed a stochastic extension of Hopfield’s model called the Boltzmann machine. Hinton also used backpropagation methods to successfully implement examples of deep and dense networks, a milestone toward what is now known as deep learning.
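To give a rough sense of what an associative memory does, here is a minimal Python sketch of a Hopfield-style network. It assumes the usual textbook formulation (nodes that take the values +1 or -1, weights set by a Hebbian rule); the function names and example patterns are made up for illustration and are not taken from the Nobel documents.

```python
# Minimal Hopfield-style associative memory (illustrative sketch only).
# Patterns are lists of +1/-1 values; all names here are hypothetical.

def train(patterns):
    """Build a symmetric weight matrix with the Hebbian outer-product rule."""
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections
                    weights[i][j] += p[i] * p[j] / n
    return weights


def recall(weights, state, max_sweeps=10):
    """Update one node at a time until the state stops changing."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            # Weighted input that node i receives from all other nodes.
            field = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


# Two stored "memories" (chosen to be orthogonal so they do not interfere).
stored = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]
weights = train(stored)

noisy = list(stored[0])
noisy[0] = -noisy[0]  # corrupt one value of the first memory
recovered = recall(weights, noisy)
```

In this toy setup, the network is given a corrupted version of a stored pattern and settles back onto the original, which is the sense in which the memory is "associative."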

The video of the announcement includes a short phone interview with Hinton. Hinton commented on the influence that his discoveries, and in particular his work on backpropagation, have had on later developments up to GPT-4, of which he is a frequent user.

Physics and beyond

Hopfield and Hinton “have used tools from physics to develop methods that are the foundation of today’s powerful machine learning,” notes the Nobel Foundation in a press release.

“In physics we use artificial neural networks in a vast range of areas, such as developing new materials with specific properties,” adds Ellen Moons, Chair of the Nobel Committee for Physics.

These words explain why two of the main developers of the neural networks used in Machine Learning (ML) and Artificial Intelligence (AI) at large have won the Nobel Prize in Physics.

But this year’s award also makes a lot of sense in view of the spectacular impact that the work of Hopfield and Hinton is having on today’s rapid and pervasive development of AI technology. AI could deeply change society and the world at large. And future AIs could even discover new breakthrough physics.

