Chinese scientists have taken a major step forward in clean energy technology, the South China Morning Post reports (unpaywalled copy).

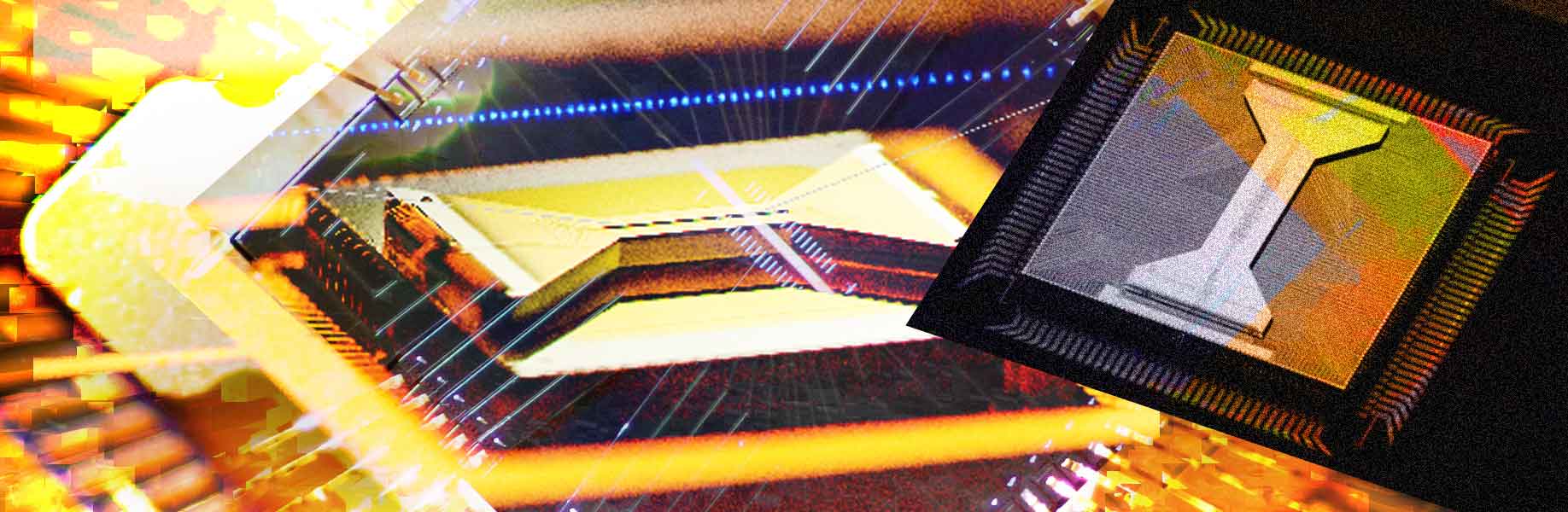

The scientists added fresh fuel to a thorium molten salt reactor while it was running. The reactor is located in the Gobi Desert in western China. It uses thorium, a radioactive element found in the Earth's crust, as fuel. Molten salt, a liquid that both carries the fuel and cools the reactor, is central to its design. The reactor produces 2 megawatts of thermal power.

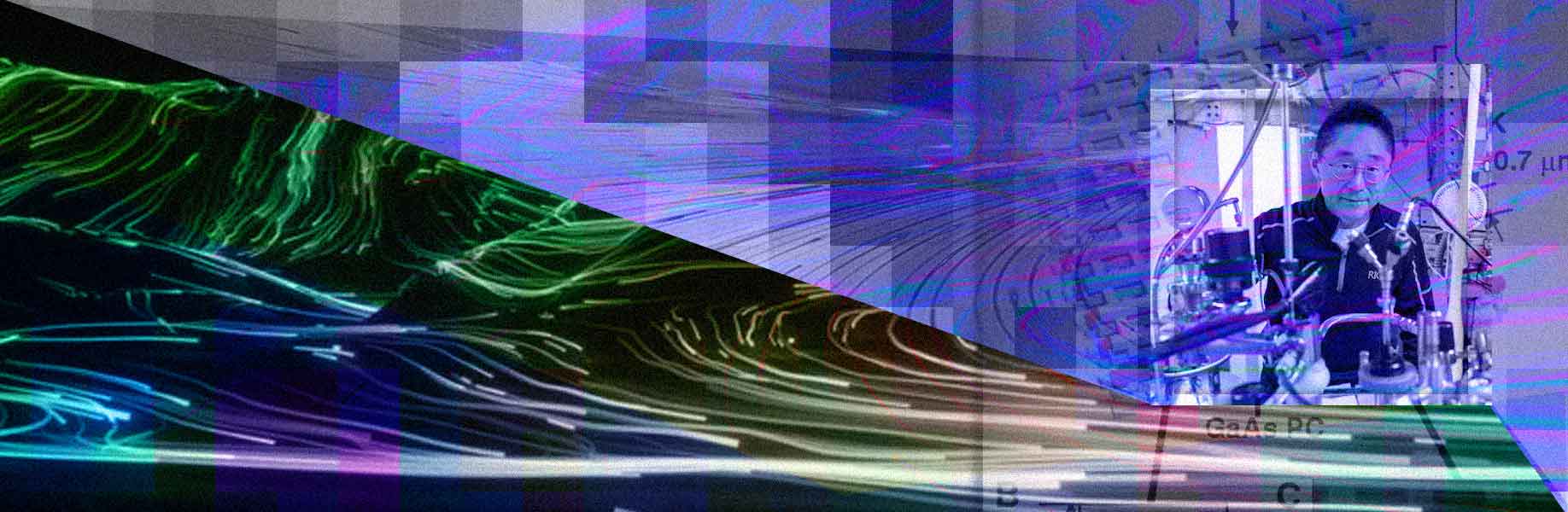

State media reported the achievement, saying China now leads the world in this technology. Thorium is described as safer and more abundant than uranium. Experts say a single thorium mine in Inner Mongolia could meet China's energy needs for thousands of years, and thorium reactors are said to produce very little radioactive waste.

Xu Hongjie, the project's chief scientist, announced the milestone at a meeting at the Chinese Academy of Sciences on April 8. He compared China's steady progress to the tortoise beating the lazy hare in Aesop's fable. The United States pioneered this technology in the 1960s but abandoned it in the 1970s in favor of uranium.

A new era of nuclear power

Xu said the U.S. had left its research publicly available. Chinese researchers studied those American documents and built on the ideas. China began its own thorium research in the 1970s. In 2009, the Chinese Academy of Sciences tasked Xu with turning the technology into a working reactor. Within two years, the team grew to more than 400 researchers. They faced many challenges, such as developing new materials that could withstand extreme heat.

Construction of the reactor began in 2018. In October 2023, the reactor achieved criticality, meaning it sustained a stable nuclear chain reaction. In June 2024, it reached full power. Four months later, the team added new thorium fuel while the reactor was running. It is now the only operating thorium molten salt reactor in the world.

Xu described this as a difficult but correct path. A larger thorium reactor, designed to generate 10 megawatts of electricity, is now under construction and is expected to be completed by 2030. China also plans to use thorium reactors to power ships, enabling emission-free maritime transport. The U.S. wants to revive the technology but has not yet built a reactor.

“China creates history,” reads an MSN headline.

Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.