Researchers have developed a new way to see organs within a body by rendering overlying tissues transparent to visible light.

The counterintuitive process—a topical application of food-safe dye—was reversible in tests with animal subjects, and may ultimately apply to a wide range of medical diagnostics, from locating injuries to monitoring digestive disorders and identifying cancers.

Stanford University researchers published the research in the Sept. 6, 2024 issue of Science.

Many uses

“Looking forward, this technology could make veins more visible for the drawing of blood, make laser-based tattoo removal more straightforward, or assist in the early detection and treatment of cancers,” said Stanford University assistant professor of materials science and engineering Guosong Hong, a U.S. National Science Foundation CAREER grantee who helped lead this work, in a statement.

“For example, certain therapies use lasers to eliminate cancerous and precancerous cells, but are limited to areas near the skin’s surface. This technique may be able to improve that light penetration.”

How it works

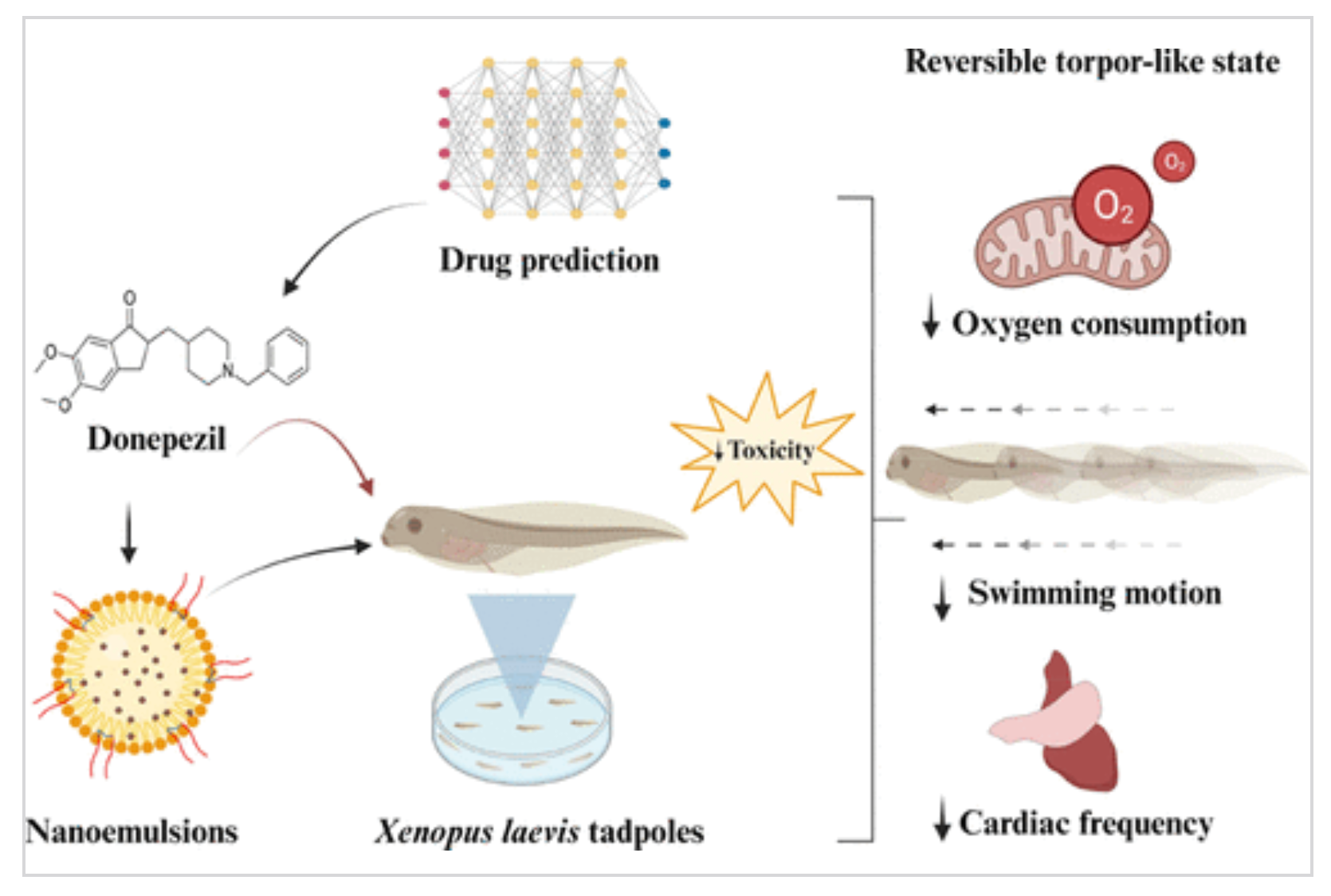

To master the new technique, the researchers developed a way to predict how light interacts with dyed biological tissues. This involves both light scattering and refraction, where light changes speed and bends as it travels from one material into another.

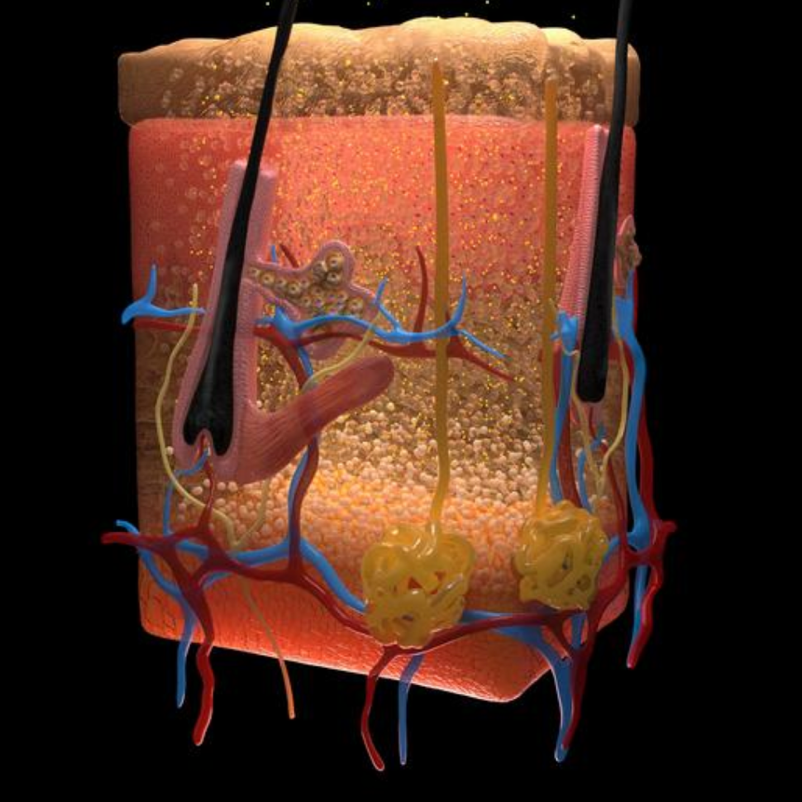

Light scattering is the reason we cannot see through our body: Fats, fluids within cells, proteins, and other materials each have a different refractive index, a property that dictates how much an incoming light wave will bend.

In most tissues, those materials are packed closely together, so the varied refractive indices cause light to scatter as it passes through. It is this scattering that our eyes interpret as opaque, colored biological material.

The researchers realized if they wanted to make biological material transparent, they had to find a way to match the different refractive indices so light could travel through unimpeded.
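To illustrate why index matching matters, here is a minimal Python sketch using the standard Fresnel formula for light hitting a flat boundary head-on. The refractive index values are illustrative assumptions (roughly water-like and lipid-like), not measurements from the study, and the "dyed" value is hypothetical.

```python
def normal_incidence_reflectance(n1: float, n2: float) -> float:
    """Fraction of light reflected at a flat boundary between two media
    (Fresnel equation at normal incidence)."""
    return ((n1 - n2) / (n1 + n2)) ** 2


# Illustrative values only (assumptions, not data from the paper).
N_WATER = 1.33  # typical value for a watery fluid
N_LIPID = 1.45  # assumed value for a fatty component
N_DYED = 1.45   # hypothetical fluid whose index has been raised to match

mismatched = normal_incidence_reflectance(N_WATER, N_LIPID)
matched = normal_incidence_reflectance(N_DYED, N_LIPID)

print(f"mismatched boundary reflects {mismatched:.4%} of the light")
print(f"matched boundary reflects {matched:.4%} of the light")
```

Each mismatched boundary redirects only a small fraction of the light, but tissue contains a vast number of such boundaries, so the effect accumulates. When the indices match, the boundary redirects nothing in this simple model.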

The researchers realized that the dyes most effective at absorbing light can also be highly effective at directing light uniformly through a wide range of refractive indices.

They found that molecules of tartrazine (a food dye more commonly known as FD&C Yellow 5), when dissolved in water and absorbed into tissues, are well structured to match refractive indices and prevent light from scattering, resulting in transparency.

The researchers gently rubbed a temporary tartrazine solution on mice. First, they applied the solution to the scalp, rendering the skin transparent to reveal blood vessels crisscrossing the brain. They then applied it to the abdomen, where the skin faded within minutes to show contractions of the intestine and movements caused by heartbeats and breathing.

The technique resolved features at the scale of microns (millionths of a meter) and enhanced microscope observations. The tartrazine did not appear to have long-term effects, and any excess was excreted in waste within 48 hours.

The researchers suspect that injecting the dye should lead to even deeper views within organisms, with implications for both biology and medicine.

Old formulas yield new window into medicine

Supported by a range of federal and private grants, the project began as an investigation into how microwave radiation interacts with biological tissues.

In exploring optics textbooks from the 1970s and 1980s, the researchers found two key concepts: mathematical equations called Kramers-Kronig relations and a phenomenon called Lorentz oscillation, where electrons and atoms resonate within molecules as photons pass through.
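For readers who want the math, one common textbook form of the Kramers-Kronig relations ties a material's refractive index $n$ to its absorption, written here as the extinction coefficient $\kappa$. The notation is a standard convention, not taken from the paper:

$$
n(\omega) - 1 = \frac{2}{\pi}\,\mathcal{P}\!\int_0^{\infty} \frac{\omega'\,\kappa(\omega')}{\omega'^{2} - \omega^{2}}\,d\omega'
$$

Here $\omega$ is the angular frequency of the light and $\mathcal{P}$ denotes the Cauchy principal value. For light at a frequency below an absorption band (so $\omega' > \omega$ wherever $\kappa$ is large), the integrand is positive. Strong absorption at shorter wavelengths therefore raises the refractive index at longer wavelengths.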

Well studied for more than a century, yet not applied to medicine in this way, the tools proved ideal for predicting how a given dye can raise the refractive index of biological fluids to perfectly match surrounding fats and proteins.
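As a rough illustration of this kind of prediction, the Python sketch below uses a single Lorentz oscillator, a textbook model, to show how a dye that absorbs strongly in the blue can raise the refractive index seen by red light. The resonance wavelength (about 428 nm, near tartrazine's reported absorption peak), the damping, and the oscillator strengths are all illustrative assumptions. This is not the authors' model or fitted data.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def lorentz_index(wavelength_nm: float, resonance_nm: float, strength: float,
                  damping_frac: float = 0.1, n_background: float = 1.33) -> complex:
    """Complex refractive index (n + i*kappa) of a medium with one Lorentz
    oscillator added to a water-like background.

    strength     -- dimensionless oscillator strength (stands in for dye
                    concentration; hypothetical values)
    damping_frac -- damping rate as a fraction of the resonance frequency
                    (assumed, not fitted)
    """
    omega = 2 * math.pi * C / (wavelength_nm * 1e-9)
    omega0 = 2 * math.pi * C / (resonance_nm * 1e-9)
    gamma = damping_frac * omega0
    eps = n_background ** 2 + strength * omega0 ** 2 / (
        omega0 ** 2 - omega ** 2 - 1j * gamma * omega
    )
    return cmath.sqrt(eps)


RESONANCE_NM = 428.0  # assumed absorption peak in the blue
RED_NM = 600.0        # wavelength where we want the tissue to look clear

for strength in (0.0, 0.05, 0.1):
    idx = lorentz_index(RED_NM, RESONANCE_NM, strength)
    print(f"strength={strength:.2f}: n={idx.real:.3f}, kappa={idx.imag:.4f} at {RED_NM:.0f} nm")
```

In this toy model, increasing the oscillator strength raises the real index at 600 nm while absorption there stays comparatively weak. That is the qualitative behavior the researchers exploited to bring watery fluids closer to the index of fats and proteins.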

They realized that the same modifications that make materials transparent to microwaves could be tailored to impact the visible spectrum, with potential applications in medicine.

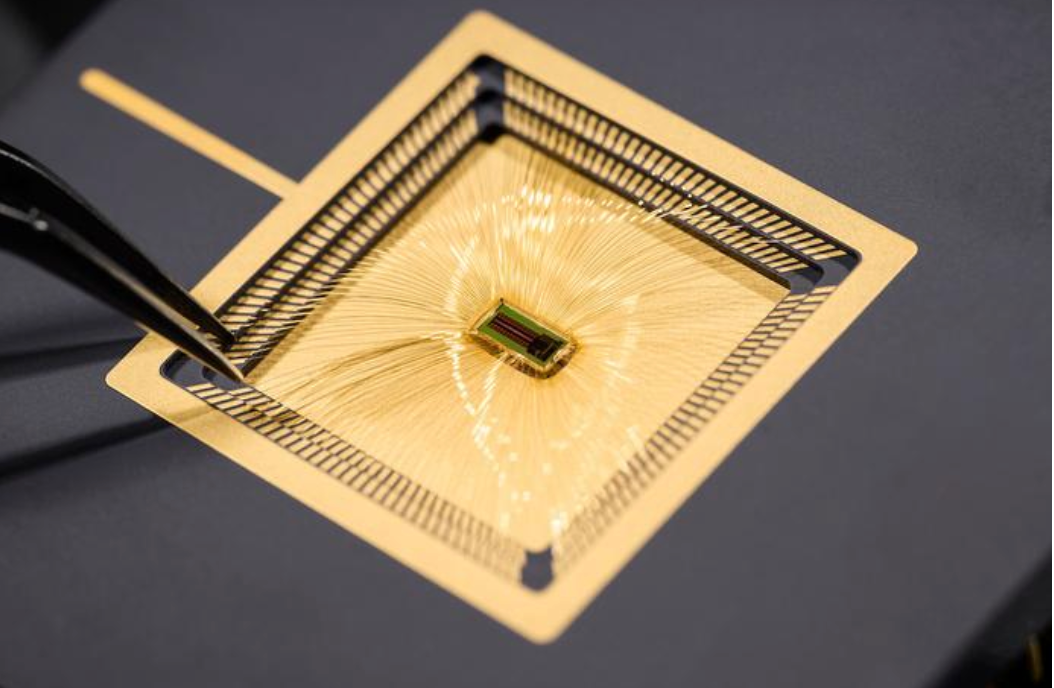

One tool that proved critical was a decades-old ellipsometer, an instrument familiar to semiconductor manufacturing, not biology. In a possible first for medicine, the researchers realized it was also well suited to predicting the optical properties of their target dyes.

With methods grounded in fundamental physics, the researchers hope their approach will launch a new field of study: matching dyes to biological tissues based on optical properties, potentially leading to a wide range of medical applications.

This research was supported by the U.S. National Science Foundation, the U.S. National Institutes of Health, the U.S. Air Force Office of Scientific Research, the U.S. Army Long Term Health Education and Training program, and a range of private foundations and institutions.

Warning by researchers: The technique described above has not been tested on humans. Dyes may be harmful. Always exercise caution with dyes and do not consume directly, apply to people or animals, or otherwise misuse.

Citation: Ou, Z., Duh, S., Rommelfanger, N. J., Keck, C. H. C., Jiang, S., Brinson Jr, K., Zhao, S., Schmidt, E. L., Wu, X., Yang, F., Cai, B., Cui, H., Qi, W., Wu, S., Tantry, A., Roth, R., Ding, J., Chen, X., Kaltschmidt, J. A., . . . Hong, G. (2024). Achieving optical transparency in live animals with absorbing molecules. Science. https://doi.org/adm6869 (open-access)
