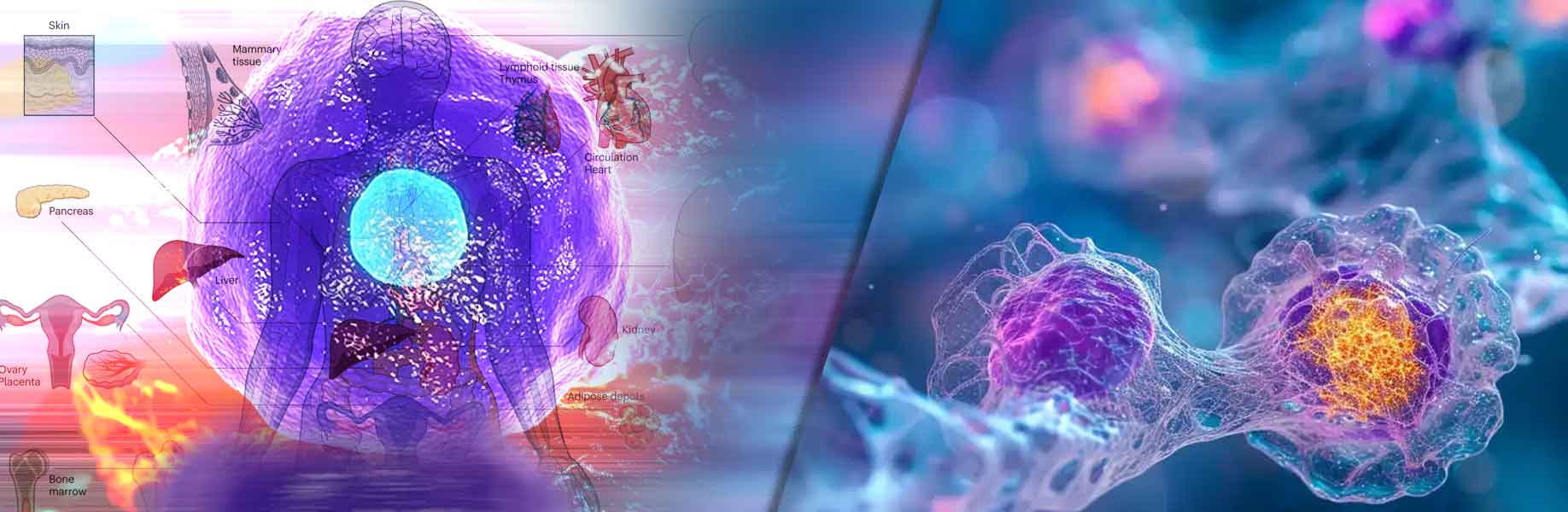

A new artificial intelligence (AI) tool analyzes data from millions of cells taken from patients. The tool, called NicheCompass, quickly predicts changes in tissues and could help identify the best treatments for diseases such as cancer. It was built by researchers at the Wellcome Sanger Institute and collaborating institutions. The work is part of the Human Cell Atlas Initiative, a large project to map every cell in the human body and understand it better.

NicheCompass uses generative AI. It builds a visual map of cells from spatial genomic data, which records the cell types in a tissue, where each cell sits, and how cells communicate with one another. The tool examines cell networks: groups of cells that interact. From these it identifies distinct cellular neighborhoods, areas where cells work together. According to the researchers, no other AI tool does this as well.
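NicheCompass itself is a generative deep-learning model, and the sketch below is not its method. It only illustrates, under simplifying assumptions, what a "cellular neighborhood" means in spatial data: each cell is described by the mix of cell types among its nearest cells, and cells with matching mixes are grouped together. The toy coordinates, cell-type labels, the choice of k, and the helper functions `neighborhood_profile` and `group_by_profile` are all hypothetical.

```python
import math
from collections import Counter

# Hypothetical toy tissue: (x, y) position and cell-type label for each cell.
# Real spatial genomic data would also hold gene expression for every cell.
cells = [
    (0.0, 0.0, "tumor"),
    (1.0, 0.0, "tumor"),
    (0.0, 1.0, "T cell"),
    (5.0, 5.0, "fibroblast"),
    (6.0, 5.0, "fibroblast"),
    (5.0, 6.0, "T cell"),
]


def neighborhood_profile(cells, index, k=3):
    """Fraction of each cell type among the k cells closest to cells[index].

    The cell itself (distance 0) counts as part of its own neighborhood.
    """
    x0, y0, _ = cells[index]
    # Sort every cell by Euclidean distance to the query cell.
    by_distance = sorted(
        (math.dist((x0, y0), (x, y)), label) for x, y, label in cells
    )
    counts = Counter(label for _, label in by_distance[:k])
    return {label: n / k for label, n in counts.items()}


def group_by_profile(cells, k=3):
    """Group cells whose neighborhood profiles match after rounding.

    A toy stand-in for clustering: real methods group similar,
    not identical, profiles.
    """
    groups = {}
    for i in range(len(cells)):
        profile = neighborhood_profile(cells, i, k)
        key = tuple(sorted((label, round(frac, 2)) for label, frac in profile.items()))
        groups.setdefault(key, []).append(i)
    return groups


for key, members in group_by_profile(cells).items():
    print(members, key)
```

With k=3, the three cells near the origin share a tumor-dominated neighborhood and the three cells around (5, 5) share a fibroblast-dominated one, even though each group contains a T cell. The neighborhood, not the single cell, is what gets labeled.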

The researchers published their work in Nature Genetics. They tested NicheCompass on data from breast and lung cancer patients, where it identified tissue changes in just one hour. This speed could help doctors plan treatments tailored to each person, and the tool shows how some patients respond differently to treatment. Every cell communicates with its surroundings, forming networks. Cells carry features, such as proteins on their surface, that indicate which other cells they connect with.
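The idea that surface proteins reveal which cells connect can be illustrated with a common simplification from cell-communication analysis: a sending cell expresses a signaling molecule, a receiving cell expresses the matching surface receptor, and the pair is scored only when the two cells are close together. This is a hypothetical sketch; the cells, expression values, `radius`, and the product-based score are assumptions for illustration, not NicheCompass's model.

```python
import math

# Hypothetical toy cells: position plus expression levels of one signaling
# molecule ("ligand") and its matching surface receptor. Values are invented.
cells = {
    "a": {"pos": (0.0, 0.0), "ligand": 2.0, "receptor": 0.0},
    "b": {"pos": (1.0, 0.0), "ligand": 0.0, "receptor": 3.0},
    "c": {"pos": (9.0, 9.0), "ligand": 0.0, "receptor": 5.0},
}


def communication_scores(cells, radius=2.0):
    """Score sender->receiver signaling for every pair of nearby cells.

    Toy rule: score = ligand level in the sender * receptor level in the
    receiver, counted only when the two cells lie within `radius`.
    """
    scores = {}
    for s_id, sender in cells.items():
        for r_id, receiver in cells.items():
            if s_id == r_id:
                continue  # a cell does not signal to itself in this toy model
            if math.dist(sender["pos"], receiver["pos"]) > radius:
                continue  # too far apart to interact
            score = sender["ligand"] * receiver["receptor"]
            if score > 0:
                scores[(s_id, r_id)] = score
    return scores


print(communication_scores(cells))  # prints {('a', 'b'): 6.0}
```

Cell "c" has the highest receptor level but no nearby sender, so it forms no link. Location matters as much as which proteins a cell carries, which is why spatial data is needed.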

Single-cell and spatial genomic technologies let scientists study cells in fine detail. These tools produce cell atlases, or maps, of tissues and organs. The atlases show cell types, their locations, and how genetic changes affect them. Understanding cells helps explain diseases and find new drugs. But interpreting the networks that cells form is hard. NicheCompass tackles this by learning how cells communicate.

How it works

NicheCompass is a deep-learning AI model trained on large amounts of data. It studies cell-to-cell communication and matches similar networks across samples. This lets doctors ask questions of the data; for example, they can see how lung cancer cells communicate with nearby cells. The researchers tested it on data from 10 lung cancer patients, and it revealed what the patients had in common and how they differed. The similarities add to general knowledge about cancer, while the differences point toward personalized treatments.
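The comparison across patients can be pictured with a much simpler stand-in: once every cell carries a niche label, tally how common each niche is in each patient, then separate niches found in every patient from those found in only some. This is a hypothetical sketch with invented patient data and niche names; it is not how NicheCompass performs its comparison.

```python
from collections import Counter

# Hypothetical per-cell niche labels for two patients (invented toy data).
patient_niches = {
    "patient_1": ["tumor core"] * 6 + ["immune border"] * 4,
    "patient_2": ["tumor core"] * 6 + ["fibrotic"] * 4,
}


def niche_fractions(labels):
    """Fraction of a patient's cells assigned to each niche."""
    counts = Counter(labels)
    total = len(labels)
    return {niche: n / total for niche, n in counts.items()}


def compare(patients):
    """Split niches into those shared by all patients and those seen in only some."""
    fractions = {p: niche_fractions(labels) for p, labels in patients.items()}
    niche_sets = [set(f) for f in fractions.values()]
    shared = set.intersection(*niche_sets)
    patient_specific = set.union(*niche_sets) - shared
    return fractions, shared, patient_specific


fractions, shared, specific = compare(patient_niches)
print(sorted(shared))    # prints ['tumor core']
print(sorted(specific))  # prints ['fibrotic', 'immune border']
```

In this toy setup the shared niche plays the role of the "similarities" and the patient-specific niches the role of the "differences" described above.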

The tool also worked on breast cancer data and on a mouse brain dataset of 8.4 million cells. It mapped the brain quickly, showing that it can handle large projects. Doctors can add new patient data and get results fast, which could guide treatment decisions and improve care. By revealing how cell networks are organized, NicheCompass could help in treating disease.

Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.
