How to make AI more energy-efficient

Jul. 29, 2024.

AI energy consumption could be reduced by a factor of 1,000 or more with a new design

The International Energy Agency (IEA) issued a global energy use forecast in March 2024 warning that global energy consumption for AI is likely to more than double, from 460 terawatt-hours (TWh) in 2022 to 1,000 TWh in 2026. That is roughly equivalent to the electricity consumption of the entire country of Japan.
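As a rough check on those figures, the short Python sketch below works out the growth factor and the annual growth rate it would imply. The two TWh values come from the forecast quoted above; the assumption of smooth compound growth between 2022 and 2026 is ours, for illustration only, and is not part of the IEA forecast.

```python
# Figures quoted from the IEA forecast cited in the article.
consumption_2022_twh = 460.0
consumption_2026_twh = 1000.0
years = 2026 - 2022

# Overall growth between the two endpoints.
growth_factor = consumption_2026_twh / consumption_2022_twh

# Assumption (ours): smooth compound growth between the two endpoints.
annual_growth = growth_factor ** (1 / years) - 1

print(f"Growth factor 2022-2026: {growth_factor:.2f}x")
print(f"Implied compound annual growth: {annual_growth:.1%}")
```

The growth factor comes out a little above 2, which is why the increase is slightly more than a doubling.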

The reason: machine-learning and AI processes constantly transfer data between logic (where information is processed within a system) and memory (where the data is stored), consuming a large amount of energy in the process.

Significant energy savings

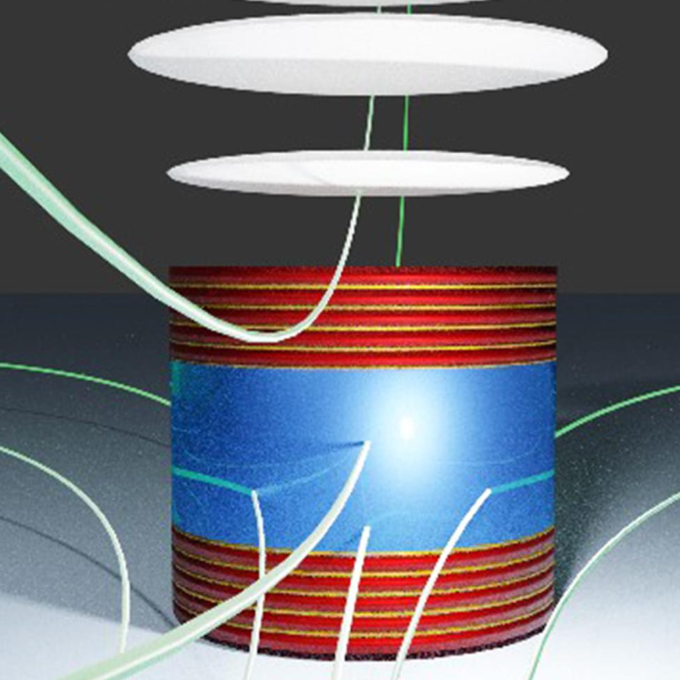

To address this, engineering researchers at the University of Minnesota Twin Cities have developed a state-of-the-art hardware device in which data never leaves computational random-access memory (CRAM): it is processed entirely within the memory array, so the energy-hungry transfers between logic and memory are avoided. Compared to traditional methods, the new CRAM-based machine-learning inference accelerator can achieve energy savings of 1,700 to 2,500 times, the researchers estimate.
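To put those multipliers in more familiar terms, the sketch below converts a savings factor into the share of energy saved. It assumes that "N times energy savings" means the accelerator uses 1/N of the baseline energy for the same task. That reading is ours, and the helper name `energy_reduction` is hypothetical, not something taken from the paper.

```python
def energy_reduction(savings_factor: float) -> float:
    """Fraction of baseline energy saved, assuming usage drops to 1/savings_factor."""
    if savings_factor <= 0:
        raise ValueError("savings_factor must be positive")
    return 1.0 - 1.0 / savings_factor


# Factors quoted in the article: 1,000 (headline) and the 1,700 to 2,500 estimate.
for factor in (1_000, 1_700, 2_500):
    print(f"{factor}x savings -> {energy_reduction(factor):.3%} less energy than baseline")
```

Under that reading, every factor quoted here corresponds to a cut of more than 99.9% relative to the baseline.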

The research is published in npj Unconventional Computing, a peer-reviewed scientific journal published by Nature. The work was supported by grants from the U.S. Defense Advanced Research Projects Agency (DARPA), the National Institute of Standards and Technology (NIST), the National Science Foundation (NSF) and Cisco Inc.

Citation: Lv, Y., Zink, B. R., Bloom, R. P., Cılasun, H., Khanal, P., Resch, S., Chowdhury, Z., Habiboglu, A., Wang, W., Sapatnekar, S. S., Karpuzcu, U., & Wang, J. (2024). Experimental demonstration of magnetic tunnel junction-based computational random-access memory. npj Unconventional Computing, 1(1), 1-10. https://doi.org/10.1038/s44335-024-00003-3 (open access)

2 thoughts on “How to make AI more energy-efficient”

Addressing the energy consumption of AI systems is crucial for sustainable technological advancement. Improving AI's energy efficiency not only reduces environmental impact but also makes these systems more accessible and scalable. Techniques such as optimizing algorithms, utilizing energy-efficient hardware, and employing smarter data processing methods are pivotal steps in this direction. It's encouraging to see a focus on sustainable AI development, as it ensures that the benefits of artificial intelligence can be enjoyed without compromising our planet's health.

A great article
