The Impact of AI-Generated Media: Understanding Deepfake Technology

Nov. 26, 2024. 7 mins. read.

Deepfakes are blurring the line between fact and fiction, reshaping trust in media. Can society balance innovation with ethics in the face of AI-generated deception?

In this article, we explore the growing influence of AI-generated content, focusing on deepfake technology and its impact on media and public trust. Deepfakes have made it harder to tell real from fake, challenging media literacy and raising concerns about misinformation, ethical issues, and societal risks. In a paper published in July 2024 in Devotion, Journal of Community Service, Riski Septiawan examines the technology behind deepfakes, their potential for misuse, and the steps needed to tackle the challenges they create. Here, we reflect on the key points of this study.

Introduction

AI-generated media is changing how people consume and evaluate information. Deepfakes, in particular, produce realistic but fabricated content that can manipulate public opinion and spread falsehoods. Septiawan’s research highlights how this technology can undermine trust and emphasizes the importance of media literacy to help people identify and critically analyze manipulated content. The study also discusses the need for ethical guidelines and regulations to address these challenges and ensure AI is used responsibly.

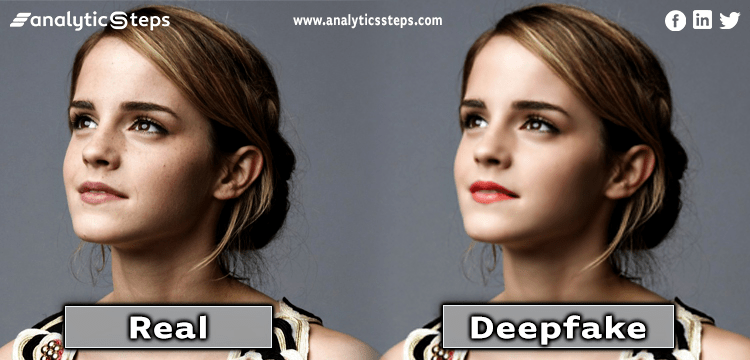

What is Deepfake Technology?

Deepfake technology uses advanced AI methods such as generative adversarial networks (GANs) and convolutional neural networks (CNNs) to create lifelike but artificial images, videos, or audio. It comes in three main forms:

- Face Swap Deepfakes: AI replaces one person’s face with another in an image or video.

- Lip Sync Deepfakes: AI matches lip movements to audio using deep learning models, creating videos that look natural.

- Audio Deepfakes: Neural networks replicate a person’s voice, allowing someone to appear to say things they never did or even sing in another person’s voice.

The process behind a face-swap deepfake typically involves five steps:

- Data Collection: It starts with gathering extensive images or videos of the target face. The more diverse and high-quality the data, the better the AI model captures the nuances of the subject’s expressions, angles, and lighting.

- Facial Recognition: Algorithms analyze the collected data to identify unique facial landmarks, such as the shape of the eyes, nose, and mouth. This data forms the foundation for creating realistic facial movements and expressions.

- Face Replacement: Using GANs, the system replaces the original face with the target face. The generator creates synthetic images, while the discriminator critiques them, refining the output until it mimics reality.

- Synchronization: Deep learning models align the replaced face’s lip and eye movements with the original speech or actions. Sequence models such as LSTMs and transformers help keep consecutive frames temporally coherent, so the output looks seamless.

- Editing and Finalization: The final step blends the manipulated face into the original media, adjusting details like skin tone, lighting, and shadows to produce content that withstands scrutiny.
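The generator-versus-discriminator loop in the Face Replacement step can be illustrated with a small sketch. It is not taken from Septiawan's paper or from any particular deepfake tool. It only computes the standard binary cross-entropy losses used in a basic GAN, and the discriminator scores in `early` and `later` are made-up numbers chosen for illustration.

```python
import math

def bce(prediction: float, target: float, eps: float = 1e-12) -> float:
    """Binary cross-entropy for a single probability."""
    p = min(max(prediction, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real images scored 1 and generated images scored 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants its fakes scored 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs: probability that an image is real.
early = {"real": [0.9, 0.8], "fake": [0.1, 0.2]}  # fakes are easy to spot
later = {"real": [0.6, 0.5], "fake": [0.5, 0.4]}  # fakes are harder to spot

for name, scores in (("early", early), ("later", later)):
    print(name,
          "D loss:", round(discriminator_loss(scores["real"], scores["fake"]), 3),
          "G loss:", round(generator_loss(scores["fake"]), 3))
```

As the generated faces become harder to tell apart from real ones, the generator's loss falls and the discriminator's loss rises. In a real system both networks are updated by gradient descent on these losses over many iterations.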

Beyond face swaps, deepfake technology enables lip-syncing and audio deepfakes. Lip-sync deepfakes use AI to synchronize lip movements with new audio inputs, allowing actors’ dialogue to be dubbed into multiple languages without losing natural synchronization. Meanwhile, audio deepfakes mimic voices using neural networks, enabling applications such as AI-generated voiceovers and voice modifications.

Positive Applications: Harnessing the Power of Deepfakes

Despite their risks, deepfakes hold immense potential for positive applications:

- Entertainment and Creativity: Filmmakers can recreate historical figures, enhance special effects, and localize content for global audiences, pushing the boundaries of storytelling.

- Education: Deepfakes enable immersive learning experiences, such as simulating historical events or creating realistic scenarios for medical training.

- Healthcare: In therapy, virtual recreations of lost loved ones are being explored as tools for grief counseling. Deepfake avatars also simplify patient-doctor communication by presenting complex medical procedures in relatable formats.

These examples demonstrate that deepfake technology, when used responsibly, can amplify human creativity and problem-solving.

Hyper-Personalization Meets Manipulation

Deepfake technology is revolutionizing personalized marketing. Imagine advertisements tailored to an individual’s preferences, with the celebrity’s voice, accent, or setting adjusted to suit a viewer’s cultural context. While this enhances consumer engagement, it also opens the door to darker applications.

How Deepfakes are Shaping Celebrity Realities

Many well-known deepfake examples feature public figures and celebrities, often demonstrating the technology in a seemingly harmless way. For example, a video of soccer star David Beckham speaking nine different languages showcases the impressive capabilities of deepfake technology, while comedian Jordan Peele created a “public service announcement” by overlaying his mouth and jaw onto footage of former president Barack Obama using accessible apps. Additionally, several deepfake videos on TikTok convincingly portray actor Tom Cruise. The technology itself is not illegal, but the content it generates can cross legal boundaries.

Although these examples may seem harmless or entertaining, they show how easy it is to use this technology. When applied without consent, deepfakes can invade privacy and sometimes violate laws related to data protection or personal rights.

Ethical Dilemmas: Creativity or Exploitation?

The duality of deepfake technology becomes evident when its ethical challenges are scrutinized. While it empowers filmmakers, educators, and marketers, it also provides tools for exploitation. The same tools used to create relatable content can fabricate fake, harmful media that damages reputations or spreads misinformation, raising questions about consent and accountability. One of the gravest concerns is the creation of non-consensual explicit content, disproportionately targeting women. Such violations not only harm individuals but also highlight the lack of safeguards to prevent misuse.

Deepfakes also threaten the integrity of information ecosystems. In an age of fake news, deepfakes add a potent weapon to the arsenal of disinformation campaigns. Videos of political figures making inflammatory statements or fabricated footage of global events can manipulate public opinion, incite unrest, and undermine trust in institutions.

Deepfakes also enable fraud outside the media sphere. Scammers may find deepfake technology a potent tool for targeting vulnerable individuals, particularly the elderly, by creating hyper-realistic audio or video manipulations that mimic the voices and appearances of trusted family members or friends. Using deepfake audio, criminals can place convincing phone calls that imitate a loved one’s voice and fabricate urgent scenarios, such as a request for financial help in an emergency or a grandchild in distress. The same technology can generate fake video calls, reinforcing the illusion and exploiting emotional trust. Because the impersonation appears authentic, this tactic makes victims easier to deceive and bypasses traditional verification methods. Such scams highlight the urgent need for public awareness and technological safeguards to protect against deepfake-enabled fraud.

The question of accountability looms large. Should the creators of deepfake tools bear responsibility for their misuse? While some advocate for strict regulations, others argue for self-regulation within the industry. Septiawan’s research emphasizes the urgent need for a balanced approach that protects individuals without stifling innovation.

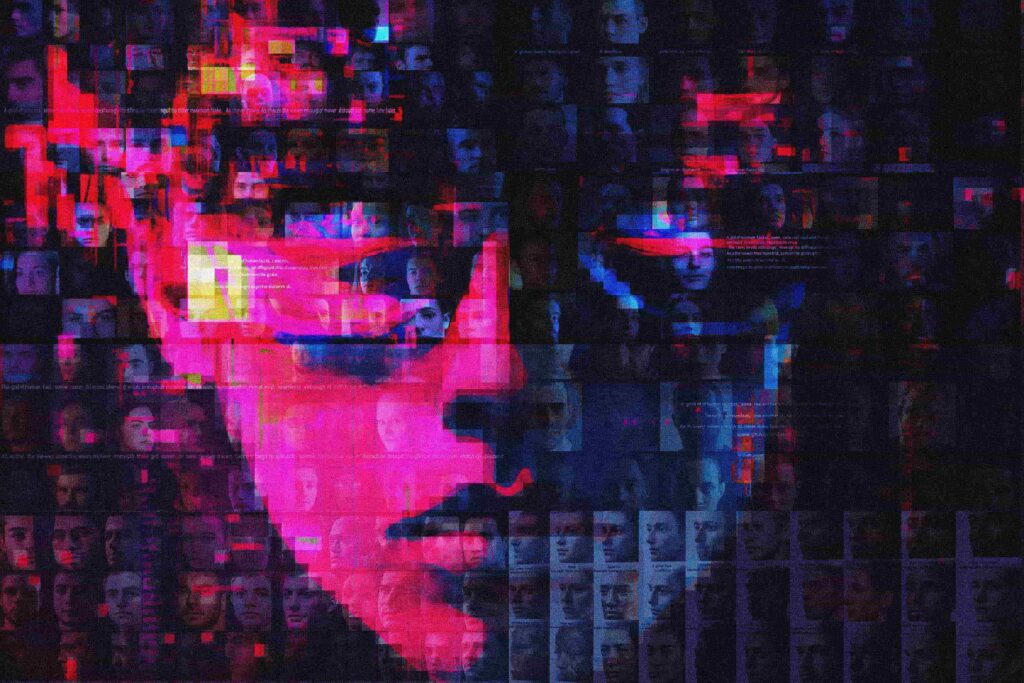

Societal Implications: The Erosion of Trust in Media

Deepfake technology has profound implications for society, particularly in its ability to erode trust. The mere existence of deepfakes fuels skepticism, giving rise to the “liar’s dividend”—the phenomenon where authentic content can be dismissed as fake. This undermines evidence-based discourse, making it easier for bad actors to deny accountability.

The burden of verifying authenticity falls heavily on journalists, fact-checkers, and media platforms, straining resources and complicating the dissemination of reliable information. Furthermore, constant exposure to fabricated realities affects public perception, fostering cynicism and confusion.

Septiawan’s research highlights the psychological toll of deepfakes on individuals and communities. As people struggle to discern fact from fiction, the resulting mistrust can divide societies and exacerbate conflicts.

Legal and Regulatory Responses

The legal landscape surrounding deepfakes remains fragmented. While some countries have criminalized malicious deepfakes, enforcement remains a challenge, especially in cross-border contexts. Laws often lag behind the rapid evolution of AI, leaving gaps that bad actors exploit.

Tech companies are stepping in to address these gaps. Platforms like Facebook and Twitter have implemented policies to identify and remove harmful content, while companies like Microsoft are developing tools to detect AI-generated manipulations. Yet, these efforts are reactive, highlighting the need for proactive solutions like embedding digital watermarks in authentic media and educating users about deepfake detection.
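To make the idea of embedding a digital watermark concrete, here is a deliberately simple sketch that hides a bit pattern in the least-significant bits of 8-bit pixel values. It is an illustrative assumption, not a method described in the article or used by the companies named above. The function names and sample values are hypothetical, and a mark this simple would not survive compression or editing.

```python
def embed_watermark(pixels, bits):
    """Return a copy of 8-bit pixel values with `bits` stored in their lowest bit."""
    if len(bits) > len(pixels):
        raise ValueError("watermark is longer than the image data")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the least-significant bit, then set it to the watermark bit.
        marked[i] = (marked[i] & 0xFE) | (bit & 1)
    return marked

def extract_watermark(pixels, n_bits):
    """Read back the first `n_bits` least-significant bits."""
    return [p & 1 for p in pixels[:n_bits]]

# Hypothetical grayscale pixel values (0-255) and an 8-bit mark.
original = [200, 13, 54, 255, 0, 97, 128, 31]
mark = [1, 0, 1, 1, 0, 0, 1, 0]

marked = embed_watermark(original, mark)
recovered = extract_watermark(marked, len(mark))
print("mark recovered:", recovered == mark)
print("largest pixel change:", max(abs(a - b) for a, b in zip(original, marked)))
```

Each pixel changes by at most one intensity level, which is why such a mark is invisible to viewers. Production provenance schemes generally rely on more robust watermarks or cryptographically signed metadata.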

Why Media Literacy Matters

As deepfakes become more common, the ability to recognize manipulated media is critical. Septiawan stresses that media literacy is essential for helping people evaluate what they see and hear. For instance, Meta has introduced “Made with AI” tags to label AI-generated content, aiming to improve transparency. However, such measures need broader education efforts to be effective.

Data literacy is another important area. Understanding how AI works and how data is used can help hold creators accountable and prevent the misuse of this technology.

Conclusion

The rapid growth of deepfake technology highlights the need for stronger media and data literacy, clear ethical standards, and regulations to manage its risks. Deepfakes have the power to influence opinions, erode trust, and harm individuals, making it essential to act quickly to address these challenges. By promoting awareness and accountability, society can better manage the impacts of AI-generated media while also benefiting from its positive applications.

Reference

Septiawan, Riski. “Critical Analysis of AI-Produced Media: A Study of the Implications of Deepfake Technology.” Devotion Journal of Community Service 5, no. 7 (July 2024): 735–741. https://doi.org/10.59188/devotion.v5i7.747.

3 Comments

3 thoughts on “The Impact of AI-Generated Media: Understanding Deepfake Technology”

Deepfake technology shows how AI can be both amazing and risky. It opens up creative possibilities but also creates big challenges like spreading misinformation and making it hard to trust what we see and hear.

"Deepfakes: the new plot twist we didn’t ask for but can’t stop talking about! This tech is both jaw-dropping and eyebrow-raising—bringing Hollywood-level effects to everyday life but also raising some serious trust issues. From swapping faces to mimicking voices, it’s like the internet’s ultimate magic trick. But as fun as it sounds, this article nails the need for balance—creativity’s cool, but let’s not let manipulation steal the show.

What do you think—tech marvel or ethical minefield?"