DeepSeek LLM released – 671 billion parameters ✔, outperforms OpenAI on benchmarks ✔, MIT-licensed ✔

Jan. 23, 2025.

DeepSeek has released the R1 set of AI models. They are open-source and score on par with, and in places above, OpenAI's o1 on tests of mathematical and coding ability.

DeepSeek has announced the release of its R1 family of models under the open-source MIT license, along with details of the impressive test scores the models achieved.

The main models – DeepSeek-R1-Zero and DeepSeek-R1 – have 671 billion parameters each, and were released with six smaller siblings – the DeepSeek-R1-Distill versions ranging from 1.5 billion to 70 billion parameters.

The R1 models belong to the headline-grabbing class of ‘simulated reasoning’ models, which work through a step-by-step chain of reasoning before committing to an answer. This paradigm has advanced quickly in recent months. Other examples include OpenAI’s o1, which debuted in September 2024, and its o3, which was announced in December 2024 but was not yet available to the public at the time of writing.

This chain-of-reasoning method increases compute time, but greatly improves performance on maths, science, and engineering questions.
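To make the idea of a separate reasoning phase concrete, here is a minimal Python sketch that splits a model's raw output into its reasoning and its final answer. It assumes the reasoning is wrapped in `<think>...</think>` tags with the answer following the closing tag. The helper name `split_reasoning` and the sample text are hypothetical, written by hand for illustration and not taken from a real model run.

```python
import re

# Assumption: the model wraps its reasoning in <think>...</think> tags and
# writes the final answer after the closing tag.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(output: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a raw model output string."""
    match = THINK_BLOCK.search(output)
    if match is None:
        # No reasoning block found: treat the whole output as the answer.
        return "", output.strip()
    reasoning = match.group(1).strip()
    answer = output[match.end():].strip()
    return reasoning, answer


# Hand-written sample output, for illustration only (not a real model response).
sample = (
    "<think>\n"
    "17 * 6 = 102.\n"
    "102 + 5 = 107.\n"
    "</think>\n"
    "The result is 107."
)

reasoning, answer = split_reasoning(sample)
print("Reasoning steps:", reasoning.splitlines())
print("Final answer:", answer)
```

The extra text inside the reasoning block is where the additional compute time goes: the model generates all of it before producing the short final answer.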

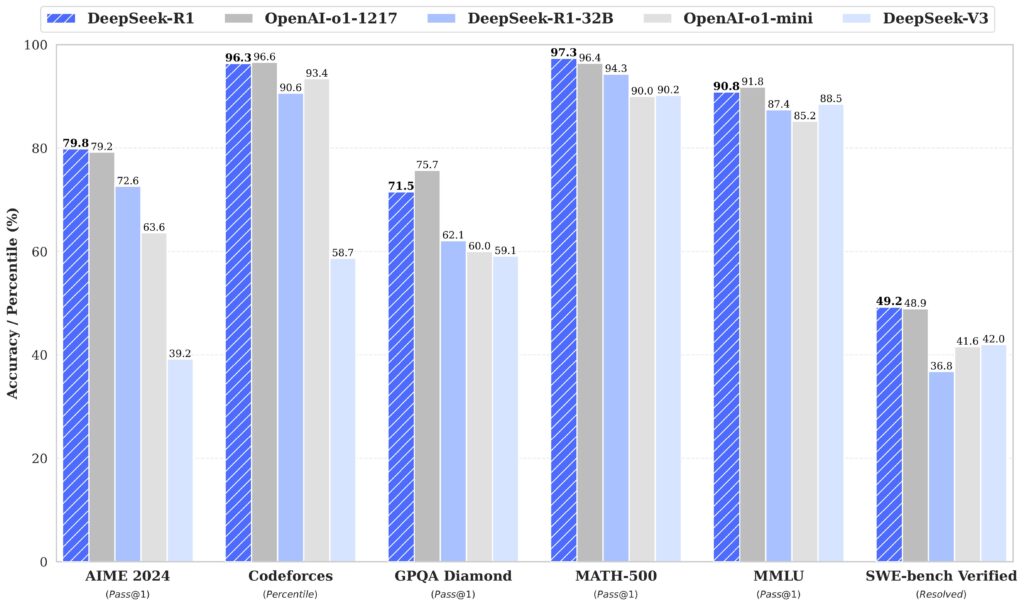

Benchmarking

As the chart above shows, the R1 models scored about the same as OpenAI's o1 on AIME (a maths test), MATH-500, and the coding benchmarks Codeforces and SWE-bench Verified. And unlike models from the inaccurately named OpenAI, DeepSeek releases its models under an open-source license. You can download the pre-trained weights, and the smaller distilled versions are practical to run on home computers.
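As a rough guide to which of these models could fit on a home computer, the sketch below estimates the memory needed just to hold the weights, using the parameter counts quoted earlier in this article. It is a back-of-the-envelope calculation under stated assumptions: parameters multiplied by bytes per parameter, ignoring activations, cache memory, and runtime overhead. The precision labels and the helper name `weight_gigabytes` are illustrative choices, not figures or code published by DeepSeek.

```python
# Assumption: bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"16-bit": 2.0, "8-bit": 1.0, "4-bit": 0.5}

# Parameter counts quoted in the article: smallest distill, largest distill, full R1.
MODELS = {
    "R1-Distill 1.5B": 1.5e9,
    "R1-Distill 70B": 70e9,
    "R1 671B": 671e9,
}


def weight_gigabytes(num_params: float, bytes_per_param: float) -> float:
    """Weights-only footprint in GB (1 GB = 1e9 bytes); excludes runtime overhead."""
    return num_params * bytes_per_param / 1e9


for name, params in MODELS.items():
    sizes = ", ".join(
        f"{precision}: {weight_gigabytes(params, nbytes):,.1f} GB"
        for precision, nbytes in BYTES_PER_PARAM.items()
    )
    print(f"{name:>16} -> {sizes}")
```

Under these assumptions the 1.5-billion-parameter model needs about 3 GB at 16-bit precision, while the full 671-billion-parameter model needs more than 1,300 GB, which is why the distilled versions are the realistic choice for home hardware.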

This is another small success for open-source AI, and for the simulated reasoning paradigm. Give it a few months (not years) and the ability of these models to answer technical questions will likely have climbed another level.
