Deep neural networks mimic human hearing

Dec. 15, 2023.

Could help researchers design better hearing aids, cochlear implants, and brain-machine interfaces

A new study from MIT has found that computational models derived from machine learning are moving closer to mimicking the structure and function of the human auditory system.

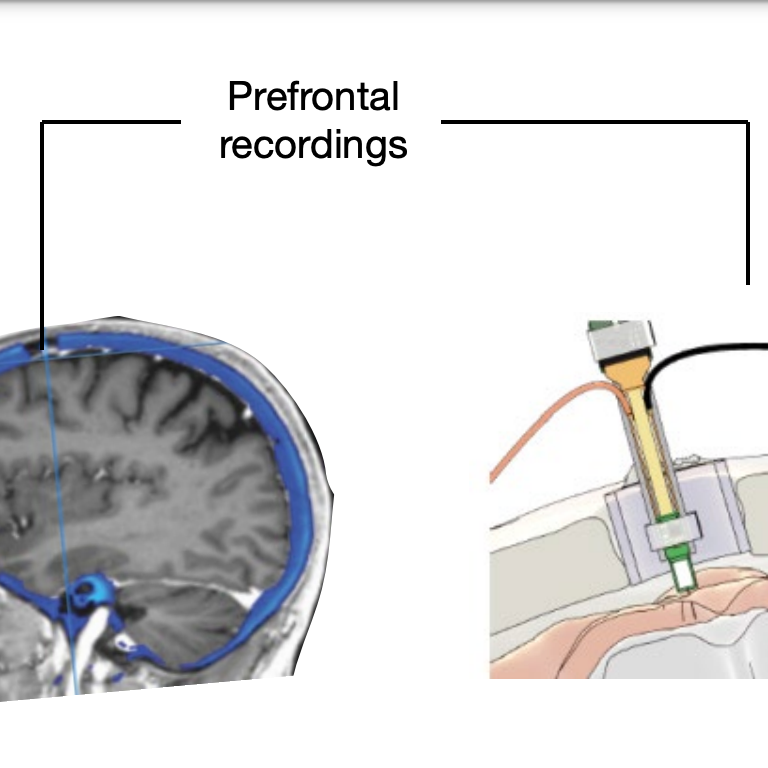

This could help researchers design better hearing aids, cochlear implants, and brain-machine interfaces, the researchers suggest.

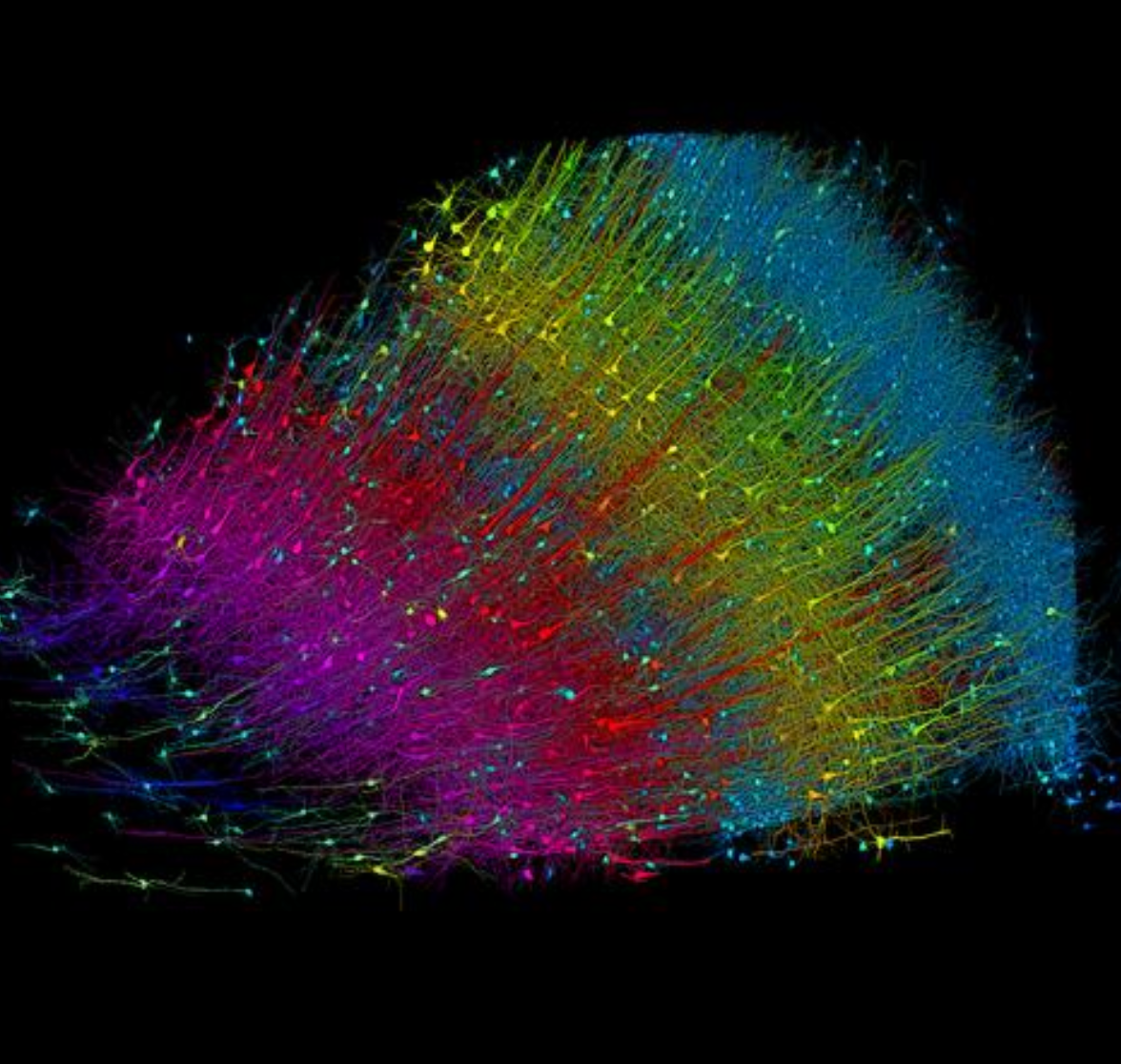

In the largest study yet of deep neural networks trained to perform auditory tasks, the MIT team showed that most of these models share properties seen in the human brain when people are listening to the same sounds.

Mimicking the human auditory system

When trained on auditory inputs, including background noise, the models closely mimic the activation patterns of the human auditory cortex, matching those seen in fMRI scans of people listening to the same sounds.

“This is the most comprehensive comparison of these kinds of models to the auditory system so far,” says Josh McDermott, an associate professor of brain and cognitive sciences at MIT, a member of MIT’s McGovern Institute for Brain Research and Center for Brains, Minds, and Machines, and the senior author of the study, which appears in PLOS Biology.

Auditory models

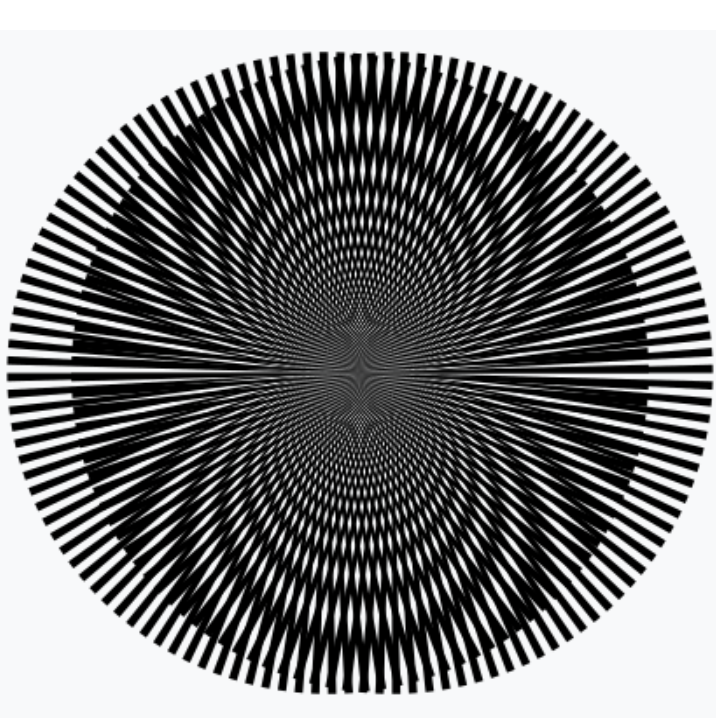

For this study, the researchers analyzed nine publicly available deep neural network models that had been trained to perform auditory tasks. They also created 14 models of their own, based on two different architectures.

Most of these models were trained to perform a single task (recognizing words, identifying the speaker, recognizing environmental sounds, or identifying musical genre), while two of them were trained to perform multiple tasks.

The researchers found that models trained on different tasks were better at replicating different aspects of audition. For example, models trained on a speech-related task more closely resembled speech-selective areas of the auditory cortex.

Better models

McDermott’s lab now plans to develop models that are even more successful at reproducing human brain responses. Such models could help scientists learn more about how the brain may be organized, and could also be used to help develop better hearing aids, cochlear implants, and brain-machine interfaces.

“A goal of our field is to end up with a computer model that can predict brain responses and behavior. We think that if we are successful in reaching that goal, it will open a lot of doors,” McDermott says.

The research was funded by the National Institutes of Health, an Amazon Fellowship from the Science Hub, an International Doctoral Fellowship from the American Association of University Women, an MIT Friends of McGovern Institute Fellowship, a fellowship from the K. Lisa Yang Integrative Computational Neuroscience (ICoN) Center at MIT, and a Department of Energy Computational Science Graduate Fellowship.

Citation: Greta Tuckute, Jenelle Feather, Dana Boebinger, Josh H. McDermott. December 13, 2023, PLOS Biology, https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.3002366 (open-access)
