Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.



Bias Whispers: The Untold Story of Learning Without Synaptic Change

For decades, neuroscience and artificial intelligence have focused on synaptic plasticity—the strengthening or weakening of connections between neurons—as the fundamental mechanism of learning. However, a groundbreaking study led by Ezekiel Williams and colleagues challenges this view, demonstrating that neural networks can learn and adapt by modifying only their biases while keeping synaptic weights fixed. This revelation not only reshapes our understanding of artificial intelligence but also provides new insights into the adaptability of biological neural systems.

Unlocking Hidden Potential: Bias Learning in Neural Networks

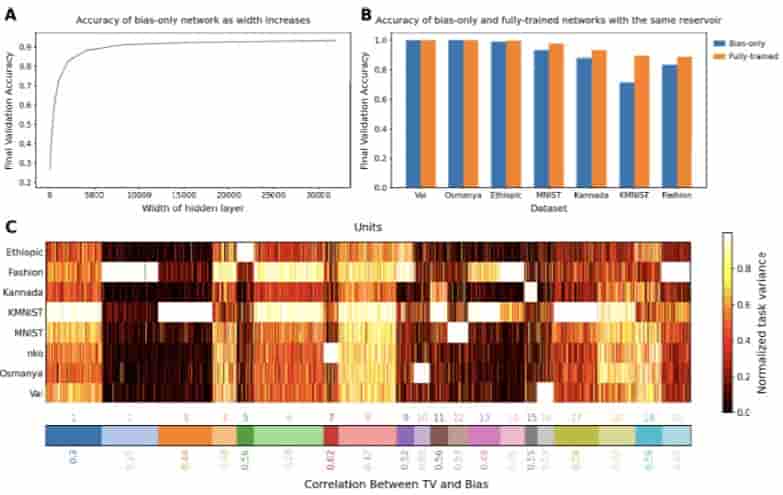

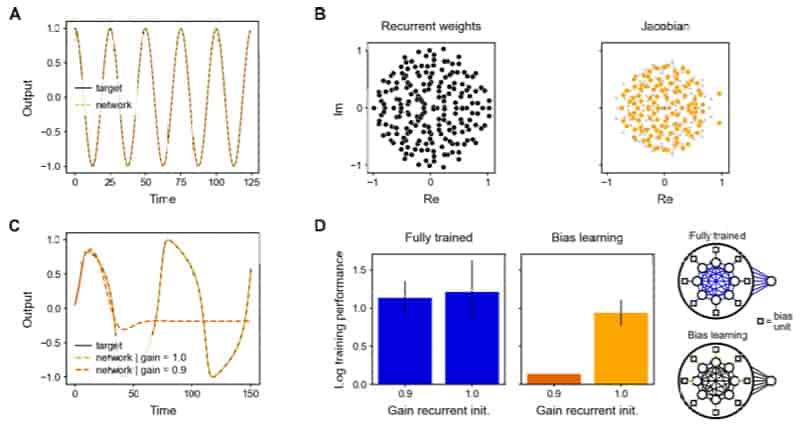

Traditionally, training a neural network involves adjusting both synaptic weights and biases. Synaptic weights determine how strongly neurons influence each other, while biases set the baseline activity of individual neurons. In a radical departure from conventional training methods, the researchers froze synaptic weights at random values and allowed only biases to change. Surprisingly, these “bias-learning” networks were still capable of performing complex tasks, including image classification and forecasting the behavior of dynamical systems.

The researchers found that, despite fixed connectivity, bias learning alone enabled networks to form functional representations necessary for task execution. This suggests that neural networks can adapt by merely fine-tuning neuronal activity rather than restructuring their connections—offering a potential paradigm shift in understanding neural computation.
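The core idea can be tried on a toy problem. The sketch below is ours, not the authors' code. It assumes a one-hidden-layer ReLU network fitting a sine curve. The input and readout weights are drawn once from a fixed random seed and never updated. Plain gradient descent adjusts only the hidden biases `b` and the output bias `c`. The network size, learning rate, and target function are arbitrary illustrative choices.

```python
import math
import random


def relu(z: float) -> float:
    return z if z > 0.0 else 0.0


def make_frozen_weights(hidden: int, seed: int = 0):
    """Draw random input and readout weights once; training never touches them."""
    rng = random.Random(seed)
    w_in = [rng.gauss(0.0, 1.0) for _ in range(hidden)]
    w_out = [rng.gauss(0.0, 1.0 / math.sqrt(hidden)) for _ in range(hidden)]
    return w_in, w_out


def predict(x, w_in, w_out, b, c):
    """y_hat = sum_j w_out[j] * relu(w_in[j] * x + b[j]) + c"""
    return sum(wo * relu(wi * x + bj) for wi, wo, bj in zip(w_in, w_out, b)) + c


def mse(xs, ys, w_in, w_out, b, c):
    return sum((predict(x, w_in, w_out, b, c) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train_biases(xs, ys, w_in, w_out, steps=300, lr=0.05):
    """Full-batch gradient descent on the biases only (hidden b and output c)."""
    b = [0.0] * len(w_in)
    c = 0.0
    n = len(xs)
    for _ in range(steps):
        grad_b = [0.0] * len(b)
        grad_c = 0.0
        for x, y in zip(xs, ys):
            err = predict(x, w_in, w_out, b, c) - y
            grad_c += 2.0 * err / n
            for j, (wi, wo) in enumerate(zip(w_in, w_out)):
                # dL/db_j is non-zero only where unit j is active (ReLU slope 1).
                if wi * x + b[j] > 0.0:
                    grad_b[j] += 2.0 * err * wo / n
        b = [bj - lr * g for bj, g in zip(b, grad_b)]
        c -= lr * grad_c
    return b, c


HIDDEN = 64
xs = [-3.0 + 6.0 * i / 19 for i in range(20)]
ys = [math.sin(x) for x in xs]
w_in, w_out = make_frozen_weights(HIDDEN)
frozen_copy = (list(w_in), list(w_out))

loss_before = mse(xs, ys, w_in, w_out, [0.0] * HIDDEN, 0.0)
b, c = train_biases(xs, ys, w_in, w_out)
loss_after = mse(xs, ys, w_in, w_out, b, c)
print(f"MSE with zero biases: {loss_before:.4f}")
print(f"MSE after bias-only training: {loss_after:.4f}")
```

The code only reads `w_in` and `w_out` and never writes them. Any change in the network's behavior therefore comes from the bias values alone.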

Multitasking Marvels: One Network, Many Talents

A particularly striking aspect of bias learning is its ability to support multitasking. The study demonstrated that a single neural network with fixed random weights could perform multiple classification tasks by simply adjusting biases for each task. This is akin to an orchestra playing different musical pieces by changing the volume levels of instruments rather than altering their arrangement.

Analysis of these networks revealed functional specialization, with clusters of neurons emerging for specific tasks. This suggests that bias learning not only enables multitasking but also facilitates the self-organization of neural circuits, even without changes in connectivity. Such findings have profound implications for artificial intelligence, where bias fine-tuning could be leveraged for efficient multi-task learning and transfer learning in deep learning models.
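A toy version of this multitask setup shows the mechanism. One set of random weights is shared by every task, and each task keeps its own bias vector, which acts as a switch. This is a hypothetical illustration, not the study's experimental setup. The two 1-D regression tasks (`sine` and `abs`), the network size, and the training schedule are our assumptions.

```python
import math
import random


def relu(z: float) -> float:
    return max(z, 0.0)


# One set of random weights, drawn once and shared by every task (never trained).
HIDDEN = 48
_rng = random.Random(1)
W_IN = [_rng.gauss(0.0, 1.0) for _ in range(HIDDEN)]
W_OUT = [_rng.gauss(0.0, 1.0 / math.sqrt(HIDDEN)) for _ in range(HIDDEN)]
ZERO_BIASES = ([0.0] * HIDDEN, 0.0)


def forward(x, biases):
    b, c = biases
    return sum(wo * relu(wi * x + bj) for wi, wo, bj in zip(W_IN, W_OUT, b)) + c


def loss(task, biases):
    return sum((forward(x, biases) - y) ** 2 for x, y in task) / len(task)


def fit_biases(task, steps=300, lr=0.05):
    """Gradient descent on this task's private bias vector; W_IN and W_OUT stay fixed."""
    b, c = [0.0] * HIDDEN, 0.0
    for _ in range(steps):
        grad_b, grad_c = [0.0] * HIDDEN, 0.0
        for x, y in task:
            g = 2.0 * (forward(x, (b, c)) - y) / len(task)
            grad_c += g
            for j in range(HIDDEN):
                if W_IN[j] * x + b[j] > 0.0:  # only active ReLU units pass gradient
                    grad_b[j] += g * W_OUT[j]
        b = [bj - lr * gj for bj, gj in zip(b, grad_b)]
        c -= lr * grad_c
    return b, c


xs = [-3.0 + 0.4 * i for i in range(16)]
tasks = {
    "sine": [(x, math.sin(x)) for x in xs],
    "abs": [(x, abs(x) / 3.0) for x in xs],
}
# The "bias bank": one bias vector per task, all reading the same frozen weights.
bias_bank = {name: fit_biases(data) for name, data in tasks.items()}

for name, data in tasks.items():
    print(f"{name}: MSE {loss(data, ZERO_BIASES):.4f} -> {loss(data, bias_bank[name]):.4f}")
```

Switching tasks means passing a different entry of `bias_bank` to `forward`. The weights themselves stay the same.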

Echoes in the Brain: Bias and Non-Synaptic Plasticity

The findings align with emerging discoveries in neuroscience that highlight the role of non-synaptic plasticity in learning. The brain employs mechanisms such as shunting inhibition, neural gain modulation, and threshold adaptation to regulate activity independently of synaptic weight changes. These processes bear a striking resemblance to bias learning in artificial networks, suggesting that biological systems might exploit similar principles for cognitive flexibility and adaptation.

Moreover, the study explored bias learning in recurrent neural networks (RNNs), which are often used to model time-dependent processes in neuroscience. By adjusting only biases, these networks successfully generated and predicted the trajectories of dynamical systems, mimicking the brain’s ability to produce complex motor and cognitive patterns. This supports the notion that the brain could dynamically regulate neural function through bias adjustments rather than extensive synaptic remodeling.

Theoretical and Practical Implications

The theoretical contributions of this study extend beyond artificial intelligence and neuroscience. The researchers provided mathematical proofs demonstrating that neural networks with fixed weights but learned biases can serve as universal function approximators with high probability. This suggests that the flexibility of neural computation may stem not only from synaptic modifications but also from changes in intrinsic neuronal properties.
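The single-hidden-layer case can be written in symbols. This is our informal paraphrase in our own notation, not the paper's exact theorem. It assumes every weight, including the readout weights $a_j$, is drawn at random and then frozen. The target $f$ is continuous on a compact set $K$, and $\varepsilon, \delta > 0$. The precise conditions on the activation $\sigma$, the weight distribution, and the width $N$ should be checked in the original.

```latex
\begin{aligned}
f_{\mathbf b}(x) &= \sum_{j=1}^{N} a_j\,\sigma\!\left(w_j^{\top}x + b_j\right),
  \qquad w_j, a_j \text{ random and fixed},\ \mathbf b = (b_1,\dots,b_N) \text{ learned},\\
\Pr_{w,a}\!\Big[\exists\,\mathbf b:\ \sup_{x\in K}\lvert f(x) - f_{\mathbf b}(x)\rvert < \varepsilon\Big] &\ge 1-\delta
  \quad \text{for } N \text{ large enough}.
\end{aligned}
```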

Practically, bias learning presents a promising avenue for more efficient AI models. Traditional deep learning requires extensive retraining of weights, consuming significant computational resources. Bias learning could enable rapid adaptation to new tasks with minimal computational overhead, offering new strategies for developing adaptive and energy-efficient AI systems.

Conclusion

The discovery of bias learning challenges the long-held belief that synaptic weight changes are the sole driver of learning. By demonstrating that neural networks can learn and specialize through bias modulation alone, this study bridges artificial intelligence and neuroscience, shedding light on alternative pathways for cognitive adaptation. As research progresses, bias learning may not only revolutionize AI but also deepen our understanding of how the brain fine-tunes its functions to navigate an ever-changing world.

References

Williams, Ezekiel, Avery Hee-Woon Ryoo, Thomas Jiralerspong, Alexandre Payeur, Matthew G. Perich, Luca Mazzucato, and Guillaume Lajoie. “Expressivity of Neural Networks With Random Weights and Learned Biases.” arXiv.org, July 1, 2024. https://arxiv.org/abs/2407.00957.


Exploring Quantum Collapse: Schrödinger’s Cat, Diósi-Penrose Model, and Tabletop Experiments

Introduction

Imagine a world where an object could exist in two places simultaneously, or where a cat could be both alive and dead at the same time. This paradox, introduced by Erwin Schrödinger, highlights the peculiar nature of quantum mechanics, which allows for superposition—the ability of a system to exist in multiple states until measured. While quantum effects dominate at the microscopic level, our everyday reality follows classical physics, where objects have definite states. The fundamental question remains: why does this transition occur?

The Diósi-Penrose model offers a groundbreaking perspective, proposing that wavefunction collapse is not triggered by observation but rather by gravitational inconsistencies between superposed states. A new tabletop experiment aims to test this theory by observing quantum collapse in small mirrors, potentially providing empirical evidence that bridges the gap between quantum mechanics and gravity. This article examines the significance of this experiment and its potential impact on our understanding of quantum mechanics.

Schrödinger’s Cat and the Mystery of Superposition

Schrödinger’s Cat is a thought experiment designed to illustrate the paradox of quantum superposition on macroscopic scales. In the scenario, a cat is placed in a sealed box with a vial of poison that will be released depending on the quantum decay of a particle. Until observed, the cat remains in a superposition of both alive and dead states. The paradox challenges the applicability of quantum mechanics to large objects, prompting various interpretations.

One solution is the Copenhagen interpretation, which suggests that observation collapses the wavefunction into a single reality. Another is the many-worlds interpretation, which posits that all possible outcomes occur in separate, branching universes. However, the Diósi-Penrose model provides an alternative, arguing that superposition collapses due to gravitational effects, not observation.

The Diósi-Penrose Model: A Gravitational Explanation

Unlike conventional interpretations, the Diósi-Penrose model asserts that wavefunction collapse results from fundamental inconsistencies between quantum mechanics and general relativity. According to this theory, when an object exists in two locations simultaneously, it creates two conflicting spacetime geometries. Penrose argues that such a superposition is unstable and decays spontaneously after a characteristic time τ ≈ ħ/E_G. Here E_G is the gravitational self-energy of the difference between the two mass distributions, and the relation is motivated by Heisenberg’s time-energy uncertainty principle.

This model suggests that collapse times are mass-dependent: small particles can remain in superposition for extended periods, while macroscopic objects, such as cats, collapse almost instantaneously. If correct, this theory would indicate that gravity plays a direct role in determining the classical behavior of macroscopic objects.
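A back-of-the-envelope calculation shows how steeply the predicted collapse time falls with mass. The sketch assumes the commonly quoted Diósi-Penrose estimate τ ≈ ħ/E_G, where E_G is the gravitational self-energy of the difference between the two superposed mass distributions. It also assumes a textbook idealization: a uniform-density sphere of mass m and radius R, superposed at two locations a distance d ≥ 2R apart, for which E_G = (Gm²/R)(6/5 − R/d). Conventions differ by factors of order one between papers, and the proposed experiment uses mirrors rather than spheres, so the numbers are only indicative.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant, J s
G = 6.67430e-11         # Newtonian gravitational constant, m^3 kg^-1 s^-2


def self_energy_difference(mass_kg: float, radius_m: float, separation_m: float) -> float:
    """E_G for a uniform sphere superposed at two positions separation_m apart.
    Valid only when the two copies do not overlap (separation >= 2R)."""
    if separation_m < 2.0 * radius_m:
        raise ValueError("this closed form assumes non-overlapping copies")
    return (G * mass_kg ** 2 / radius_m) * (6.0 / 5.0 - radius_m / separation_m)


def dp_collapse_time(mass_kg: float, radius_m: float, separation_m: float) -> float:
    """Diosi-Penrose estimate tau ~ hbar / E_G, in seconds."""
    return HBAR / self_energy_difference(mass_kg, radius_m, separation_m)


DENSITY = 1000.0  # kg/m^3, roughly that of water (an assumption)
taus = []
for radius in (1e-6, 1e-3, 0.1):  # micron-sized grain, millimeter bead, cat-sized blob
    mass = DENSITY * (4.0 / 3.0) * math.pi * radius ** 3
    tau = dp_collapse_time(mass, radius, separation_m=2.0 * radius)
    taus.append(tau)
    print(f"R = {radius:g} m, m = {mass:.3g} kg, tau ~ {tau:.3g} s")
```

At fixed density m ∝ R³, so E_G grows like R⁵ and τ shrinks like R⁻⁵. Each factor of 1000 in radius therefore shortens the collapse time by a factor of 10¹⁵.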

Experimental Design: Measuring Quantum Collapse

To test this hypothesis, researchers have devised a tabletop experiment using a Mach-Zehnder interferometer. This setup involves placing two small mirrors into a quantum superposition state using piezoelectric actuators. A laser beam splits and reflects off these mirrors, with single-photon avalanche diodes (SPADs) detecting interference patterns.

If both mirrors move identically, the interference pattern remains stable. However, if wavefunction collapse occurs, the pattern will shift. By precisely timing these shifts, researchers can determine whether collapse follows the predictions of the Diósi-Penrose model.
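The detector signal can be illustrated with the standard textbook model of a balanced Mach-Zehnder interferometer. This is a generic sketch, not the proposal's own analysis. It assumes an ideal interferometer described by a relative phase φ and a fringe visibility V between 0 and 1. A change in path length appears as a change in φ, which moves counts from one output port to the other. A loss of the which-path superposition appears as a drop in V, which pushes both ports toward a 50/50 split. The paper itself should be checked to see which signature the experiment relies on.

```python
import math


def mz_port_probabilities(phase_rad: float, visibility: float):
    """Probabilities of a photon reaching port 1 and port 2 of a balanced
    Mach-Zehnder interferometer with relative phase phi and fringe visibility V:
    P1 = (1 + V cos phi) / 2, P2 = 1 - P1."""
    if not 0.0 <= visibility <= 1.0:
        raise ValueError("visibility must lie in [0, 1]")
    p1 = 0.5 * (1.0 + visibility * math.cos(phase_rad))
    return p1, 1.0 - p1


def visibility_from_counts(n1: int, n2: int) -> float:
    """Estimate V from detector counts taken at phi = 0, where P1 - P2 = V."""
    return (n1 - n2) / (n1 + n2)


coherent = mz_port_probabilities(0.0, 1.0)     # superposition intact: all light in port 1
shifted = mz_port_probabilities(math.pi, 1.0)  # a pi phase shift swaps the ports
collapsed = mz_port_probabilities(0.0, 0.0)    # no interference: 50/50 split
print(coherent, shifted, collapsed)
print(visibility_from_counts(900, 100))
```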

Challenges in Observing Quantum Collapse

Despite the experiment’s simplicity, several technical challenges must be overcome:

- Minimizing Effective Mass: The setup must ensure that only the mirrors enter superposition while minimizing interactions with larger components.

- Preventing Which-Way Information Leakage: Any extraneous data that reveal photon paths could introduce unwanted collapses, interfering with results.

- Mitigating Noise Interference: External vibrations, temperature fluctuations, and electromagnetic disturbances could disrupt the experiment. The setup employs a Faraday cage and cryogenic cooling to mitigate these effects.

Distinguishing Decoherence from Gravitational Collapse

One of the key objectives of this experiment is to separate gravitational collapse from environmental decoherence. Decoherence refers to the loss of phase coherence due to interactions with the surrounding environment, occurring on extremely short timescales. Gravitational collapse, by contrast, is hypothesized to depend on an object’s mass rather than its environment.

By designing the experiment with symmetric mirror movements and precise isolation from environmental factors, researchers aim to observe whether wavefunction collapse occurs independently of decoherence. A successful observation would provide compelling evidence that gravity plays a direct role in transitioning systems from quantum to classical behavior.

Conclusion

The search for a definitive explanation of wavefunction collapse lies at the heart of quantum mechanics. The Diósi-Penrose model proposes a testable hypothesis: that gravity, not observation, determines when quantum superpositions break down. The proposed experiment, leveraging high-precision optics and interferometry, represents a crucial step in experimentally probing this theory.

If validated, this research could have profound implications, reshaping our understanding of quantum mechanics and its relationship with gravity. It may also open the door to new theories that unify quantum physics with general relativity, potentially solving one of the greatest mysteries in modern physics.

References

Tagg, James, William Reid, and Daniel Carlin. “Schrödinger’s Cheshire Cat: A Tabletop Experiment to Measure the Diósi-Penrose Collapse Time and Demonstrate Objective Reduction (OR).” arXiv.org, February 4, 2024. https://arxiv.org/abs/2402.02618.


Revolutionizing AI Ethics: A Journey Toward Human-Centric Intelligence

Introduction

The rise of artificial intelligence (AI) has sparked an equally transformative conversation about ethics, touching every corner of modern society. From privacy concerns to algorithmic fairness, AI ethics has emerged as a critical field, shaping how these technologies integrate into our lives. Over the past two decades, researchers have mapped its journey, identifying shifts in focus and persistent challenges. How have these developments reshaped our understanding of ethical AI? This article discusses the evolution of AI ethics, highlighting its milestones, dilemmas, and future directions.

The Phases of AI Ethics: From Theoretical to Human-Centric

Researchers have identified three pivotal phases marking the evolution of AI ethics. These phases reflect how ethical priorities have adapted alongside technological advances.

Figure 1. Usage of different ethics-related keywords in literature

Phase 1: The Incubation Era (2004–2013)

In its infancy, AI ethics revolved around foundational questions of machine behavior and personhood. Dispersed across fields like machine ethics and information ethics, research focused on ensuring AI systems could act ethically toward humans and other machines.

During this period, thinkers like Luciano Floridi and Wendell Wallach laid the groundwork for ethical AI frameworks. However, AI was largely viewed as a tool rather than an autonomous entity.

Phase 2: Human-Like Machines (2014–2019)

This phase introduced a shift from theoretical ethics to practical applications. Researchers prioritized endowing AI systems with traits like fairness, accountability, and transparency. Breakthroughs such as AlphaGo’s 2016 victory over Lee Sedol and Waymo’s robotaxis showcased AI’s growing sophistication.

Industry leaders proposed ethical frameworks aimed at building trust in AI systems. These efforts set the stage for aligning AI with human-like principles, making accountability and trustworthiness central to AI design.

Phase 3: Human-Centric AI (2020–Present)

Today, the focus has shifted to ensuring AI systems address societal needs equitably and inclusively. Public concerns about bias, misinformation, and autonomy have driven researchers toward creating AI technologies that prioritize transparency and fairness.

Innovative tools like the Contextualized Embedding Association Test (CEAT) have emerged to measure biases in AI systems. At the same time, global discussions on AI sentience and superintelligence have prompted calls for ethical safeguards. This phase underscores the importance of grounding AI ethics in human-centric values to rebuild public trust.
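CEAT builds on the Word Embedding Association Test (WEAT) effect size. That measure compares how strongly two sets of target words (X, Y) associate with two sets of attribute words (A, B) in embedding space. As we understand it, CEAT computes many such effect sizes from contextualized embeddings of words in different sentences and then combines them with a random-effects meta-analysis. The sketch below shows only the single effect-size step. It uses hand-made 2-D vectors in place of real embeddings and the sample standard deviation in the denominator.

```python
import math
import statistics


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def association(w, attrs_a, attrs_b):
    """s(w, A, B): mean cosine similarity of w to A minus its mean similarity to B."""
    return (statistics.mean([cosine(w, a) for a in attrs_a])
            - statistics.mean([cosine(w, b) for b in attrs_b]))


def weat_effect_size(targets_x, targets_y, attrs_a, attrs_b):
    """Difference in mean association of X and Y, scaled by the (sample) standard
    deviation of associations over all target words."""
    s_x = [association(x, attrs_a, attrs_b) for x in targets_x]
    s_y = [association(y, attrs_a, attrs_b) for y in targets_y]
    return (statistics.mean(s_x) - statistics.mean(s_y)) / statistics.stdev(s_x + s_y)


# Toy 2-D "embeddings": X words sit near attribute set A, Y words near B.
X = [[1.0, 0.1], [0.9, 0.2]]
Y = [[0.1, 1.0], [0.2, 0.9]]
A = [[1.0, 0.0], [0.95, 0.05]]
B = [[0.0, 1.0], [0.05, 0.95]]

d = weat_effect_size(X, Y, A, B)
print(f"effect size d = {d:.3f}")  # positive: X associates more with A than Y does
```

Swapping the roles of X and Y flips the sign of the effect size. A value near zero would indicate no differential association.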

Persistent Challenges: The Ethical Dilemmas of AI

Despite advancements, key challenges continue to shape the discourse:

The Collingridge Dilemma

A core issue in AI ethics is the Collingridge Dilemma, which highlights the difficulty of controlling technological developments before their consequences become apparent. Early in AI’s development, regulation may seem unnecessary or stifle innovation. However, by the time AI’s risks become evident, altering its trajectory may be costly or even impossible. AI ethicists struggle to find a balance between innovation and precaution, making this an ongoing debate in governance frameworks.

Transparency and Explainability

The decision-making of many AI systems, especially deep neural networks, remains a black box, often opaque even to their creators. Researchers have proposed approaches such as explicability (a combination of transparency, accountability, and interpretability), but these solutions often face practical and financial constraints.

Privacy Concerns

AI-driven technologies increasingly blur the boundaries of personal data. Questions about how the “right to be forgotten” applies to AI systems reveal the complexities of governing data retention and usage in the AI era.

Bias and Fairness

AI systems can perpetuate societal biases present in training data. Tools like CEAT aim to measure these biases, which is a necessary first step toward mitigating them. Achieving equity, however, requires systemic changes both within AI development and in the societies AI serves.

Algocracy and Human Enfeeblement

The increasing reliance on AI-driven decision-making raises concerns about algocracy, in which governance is dictated by algorithms rather than by human oversight. As AI automates critical processes, decision-making power risks becoming concentrated among those who control AI systems. A related concern, known as human enfeeblement, is that people who delegate ever more judgment to machines may gradually lose the skills and agency to decide for themselves. Both concerns have sparked debates about the need for regulatory interventions to ensure AI serves humanity rather than marginalizing it.

AI Status Debate

A major unresolved issue is whether AI should be classified as an ethical-impact agent, an implicit ethical agent, an explicit ethical agent, or a full ethical agent. Some scholars argue that AI should remain a tool with human accountability, while others explore the possibility of granting AI legal or moral status. The debate intensified when Saudi Arabia granted citizenship to the humanoid robot Sophia in 2017, raising questions about AI’s rights, responsibilities, and legal standing.

Superintelligence

The ethical risks of AI surpassing human intelligence remain a topic of heated debate. As industry leaders explore governance frameworks for superintelligence, balancing innovation with existential safety is becoming a priority.

Future Directions: Building Ethical AI Frameworks

To address these challenges, researchers propose innovative solutions like Large Ethics Models (LEMs), inspired by large language models. LEMs could create adaptive, culturally sensitive frameworks for ethical decision-making. Additionally, standardizing AI identification through registries and labeling systems could enhance accountability and transparency.

Large Ethics Models (LEMs)

Just as large language models (LLMs) have transformed AI’s ability to understand and generate text, Large Ethics Models (LEMs) have been proposed as a means to systematize ethical decision-making in AI. LEMs could integrate diverse ethical principles, allowing AI to make decisions aligned with human values across different cultures and industries. This emerging area requires further exploration to ensure its feasibility and effectiveness.

AI Identification

Unlike traditional software and hardware, AI lacks a standardized identification system to track and categorize its usage. Researchers suggest introducing AI IDs, similar to serial numbers, which could improve transparency by allowing consumers and regulators to verify an AI system’s origins, capabilities, and ethical safeguards. A central registry and voluntary rating system could help users understand the risks associated with various AI applications.
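A hypothetical sketch makes the proposal more concrete by showing what an AI ID registry might store. Everything in it is our assumption: the `AI-` ID format, the record fields, the example developer `ExampleCorp`, and the optional voluntary `risk_rating`. The cited work proposes the idea, not this schema.

```python
from __future__ import annotations

import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AIRecord:
    ai_id: str
    developer: str                      # origin of the system
    capabilities: tuple[str, ...]       # what the system is declared to do
    safeguards: tuple[str, ...]         # ethical safeguards the developer attests to
    risk_rating: Optional[str] = None   # voluntary; None if the developer opted out


class AIRegistry:
    """A hypothetical central registry mapping AI IDs to declared metadata."""

    def __init__(self) -> None:
        self._records: dict[str, AIRecord] = {}

    def register(self, developer, capabilities, safeguards, risk_rating=None) -> str:
        # Issue a serial-number-like identifier that is unique within this registry.
        ai_id = "AI-" + uuid.uuid4().hex[:12].upper()
        while ai_id in self._records:
            ai_id = "AI-" + uuid.uuid4().hex[:12].upper()
        self._records[ai_id] = AIRecord(
            ai_id, developer, tuple(capabilities), tuple(safeguards), risk_rating
        )
        return ai_id

    def lookup(self, ai_id: str) -> Optional[AIRecord]:
        """What a consumer or regulator would query to verify a system."""
        return self._records.get(ai_id)


registry = AIRegistry()
chatbot_id = registry.register(
    developer="ExampleCorp",  # hypothetical developer
    capabilities=["text generation"],
    safeguards=["content filter", "human escalation"],
    risk_rating="limited",    # hypothetical rating label
)
print(chatbot_id, registry.lookup(chatbot_id))
```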

Interdisciplinary Collaboration

Interdisciplinary collaboration between psychology, sociology, and law is vital for tackling the ethical implications of AI technologies. By integrating these diverse perspectives, researchers can ensure AI serves humanity responsibly.

Conclusion

AI ethics is no longer just a theoretical exercise—it’s the compass guiding one of humanity’s most transformative journeys. From its early days of exploring machine morality to today’s push for human-centric design, the field has evolved to tackle some of the most pressing questions of our time. But the road ahead is just as critical. How do we ensure AI systems are transparent, fair, and inclusive while navigating the complexities of superintelligence?

The answer lies in bold innovation and collaboration, weaving together insights from psychology, sociology, law, and beyond. By grounding AI in values that truly reflect humanity’s best, we can create technologies that don’t just revolutionize industries but uplift societies. The challenge is monumental, but so is the opportunity to build a future where AI and humanity thrive together.

References

Gao, Di Kevin, Andrew Haverly, Sudip Mittal, Jiming Wu, and Jingdao Chen. “AI Ethics.” International Journal of Business Analytics 11, no. 1 (February 20, 2024): 1–19. https://doi.org/10.4018/ijban.338367.

Sparks, Jacob, and Ava Thomas Wright. “Models of Rational Agency in Human-Centered AI: The Realist and Constructivist Alternatives.” AI and Ethics 5 (2025). https://philpapers.org/rec/SPAMOR.

Kazim, Emre, and Adriano Soares Koshiyama. “A High-level Overview of AI Ethics.” Patterns 2, no. 9 (September 1, 2021): 100314. https://doi.org/10.1016/j.patter.2021.100314.


Evaluating LLMs for Scientific Discovery: Insights from ScienceAgentBench

Introduction

Large Language Models (LLMs) have sparked both excitement and skepticism in the realm of scientific discovery. While some experts claim that LLMs are poised to revolutionize research by automating complex tasks, others argue that their capabilities are still far from matching reliable expertise.

In this article, we discuss a recent study by researchers at The Ohio State University (Chen et al., “ScienceAgentBench: Toward Rigorous Assessment of Language Agents for Data-Driven Scientific Discovery”). The study rigorously assessed how well LLM-based agents can automate end-to-end tasks in scientific discovery.

To evaluate language agents for data-driven scientific discovery, the researchers introduce a new benchmark called ScienceAgentBench. It offers a rigorous framework for assessing how LLMs perform on real-world scientific applications, and so it speaks directly to this debate. By testing several LLMs on 102 validated tasks drawn from fields such as bioinformatics and geographical information science, ScienceAgentBench provides valuable insights into the practical potential and limitations of LLMs in scientific research.

How ScienceAgentBench Works

ScienceAgentBench is more than just a test for LLMs—it is a comprehensive evaluation framework designed to simulate real-world scientific workflows. Unlike traditional LLM benchmarks that focus on abstract problem-solving, ScienceAgentBench mirrors the actual challenges faced by scientists. The tasks within the benchmark are derived from 44 peer-reviewed publications and vetted by domain experts to ensure their authenticity and applicability.

To evaluate LLMs’ performance effectively, ScienceAgentBench sets stringent criteria. Models are assessed based on their ability to execute tasks without errors, meet specific scientific objectives, produce code similar to expert solutions, and operate cost-effectively. This holistic approach ensures that LLM-based agents are not only theoretically proficient but also practical for real-world scientific use.
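The first, second, and fourth criteria reduce to simple aggregates over per-task results, which the hypothetical sketch below computes. The `TaskRun` record, its field names, and the example task IDs and costs are our own assumptions, not the benchmark's evaluation harness. The code-similarity criterion requires a separate similarity model, so the sketch leaves it out.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TaskRun:
    task_id: str
    executed: bool    # the generated program ran without errors
    succeeded: bool   # its output met the task's success criteria
    cost_usd: float   # API cost spent on this task


def summarize(runs: list[TaskRun]) -> dict[str, float]:
    n = len(runs)
    if n == 0:
        raise ValueError("no runs to summarize")
    return {
        "execution_rate": sum(r.executed for r in runs) / n,
        # A program that crashes cannot count as a success.
        "success_rate": sum(r.executed and r.succeeded for r in runs) / n,
        "mean_cost_usd": sum(r.cost_usd for r in runs) / n,
    }


runs = [
    TaskRun("task-1", executed=True, succeeded=True, cost_usd=0.10),
    TaskRun("task-2", executed=True, succeeded=False, cost_usd=0.05),
    TaskRun("task-3", executed=False, succeeded=False, cost_usd=0.20),
    TaskRun("task-4", executed=True, succeeded=True, cost_usd=0.05),
]
print(summarize(runs))
```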

Ensuring Data Integrity

A key challenge in evaluating LLMs is ensuring they solve tasks through reasoning rather than memorization. ScienceAgentBench addresses this by implementing measures to prevent shortcuts, such as modifying datasets and introducing rigorous validation processes. These safeguards help provide an accurate assessment of an LLM’s genuine problem-solving capabilities.

Key Findings and Challenges

Despite rapid advancements in LLMs, ScienceAgentBench reveals that current models struggle with the intricate demands of scientific tasks. The best-performing agent, Claude-3.5-Sonnet with self-debug, solved only 32.4% of the tasks on its own and 34.3% when given expert-provided knowledge. This underscores LLMs’ ongoing difficulties in processing specialized data, understanding discipline-specific nuances, and delivering reliable results consistently.

Comparing LLM Frameworks

ScienceAgentBench evaluates LLMs using three distinct agent frameworks, each with its own strengths and weaknesses:

- Direct Prompting: This approach involves generating code based on initial input without any iterative refinement. While straightforward, it often results in incomplete or error-prone solutions.

- OpenHands CodeAct: Designed to enhance code generation, this framework incorporates additional tools such as web search and file navigation to help LLMs complete tasks more effectively. However, its complexity can lead to increased costs and processing times.

- Self-Debug: This iterative approach allows LLMs to generate code, execute it, and refine their solutions based on the resulting feedback. It proved the most effective framework in the benchmark, delivering higher success rates at a lower cost than OpenHands, which makes it a more practical choice for scientific applications.
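The self-debug loop is simple to sketch. The version below illustrates the idea under our own assumptions and is not the benchmark's implementation. `scripted_model` is a hypothetical stand-in for an LLM that returns a buggy first draft and a corrected draft once it sees the error message. Here "success" means only that the program runs, including its own `assert`, whereas the benchmark also checks task-specific criteria.

```python
import traceback
from typing import Callable, Optional, Tuple

# A generator takes the task description and the last error text (or None)
# and returns candidate source code. In a real agent this would be an LLM call.
Generator = Callable[[str, Optional[str]], str]


def run_candidate(source: str) -> Optional[str]:
    """Execute candidate code; return None on success, else the traceback text.
    NOTE: exec() is not a sandbox; real systems should isolate untrusted code."""
    try:
        exec(compile(source, "<candidate>", "exec"), {})
        return None
    except Exception:
        return traceback.format_exc()


def self_debug(generate: Generator, task: str, max_rounds: int = 3) -> Tuple[Optional[str], int]:
    """Generate, execute, and feed errors back until the code runs or rounds run out."""
    feedback: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        code = generate(task, feedback)
        feedback = run_candidate(code)
        if feedback is None:
            return code, round_no
    return None, max_rounds


def scripted_model(task: str, feedback: Optional[str]) -> str:
    """Hypothetical stand-in for an LLM: buggy first draft, fixed after feedback."""
    if feedback is None:
        return "values = [3, 4]\nmean = sum(values) / len(valuse)\n"  # typo -> NameError
    if "NameError" in feedback:
        return "values = [3, 4]\nmean = sum(values) / len(values)\nassert mean == 3.5\n"
    return "raise RuntimeError('no idea how to fix this')\n"


code, rounds = self_debug(scripted_model, "compute the mean of [3, 4]")
print(f"succeeded after {rounds} round(s):\n{code}")
```

With `max_rounds=1`, the loop reduces to direct prompting: the first draft runs once and is never revised.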

The comparison of these frameworks highlights that LLMs’ performance is not solely determined by model sophistication but also by the strategies used to interact with data and refine outputs.

The Road Ahead for LLMs in Science

While ScienceAgentBench showcases LLMs’ potential to augment scientific discovery, it also reveals significant hurdles that must be overcome before full automation becomes a reality. The findings suggest that the most promising path forward lies in combining LLMs’ capabilities with human expertise, leveraging LLMs for repetitive or computationally intensive tasks while relying on humans for critical thinking and interpretation.

Conclusion

ScienceAgentBench serves as an eye-opening evaluation of LLMs’ current standing in scientific discovery. Although LLMs show promise in assisting researchers, the benchmark makes it clear that human oversight remains essential. The success of iterative frameworks like Self-Debug indicates that a hybrid approach—integrating LLMs into scientific workflows without over-relying on automation—may be the most effective strategy moving forward.

References

Chen, Ziru, Shijie Chen, Yuting Ning, Qianheng Zhang, Boshi Wang, Botao Yu, Yifei Li, et al. “ScienceAgentBench: Toward Rigorous Assessment of Language Agents for Data-Driven Scientific Discovery.” arXiv.org, October 7, 2024. https://arxiv.org/abs/2410.05080.


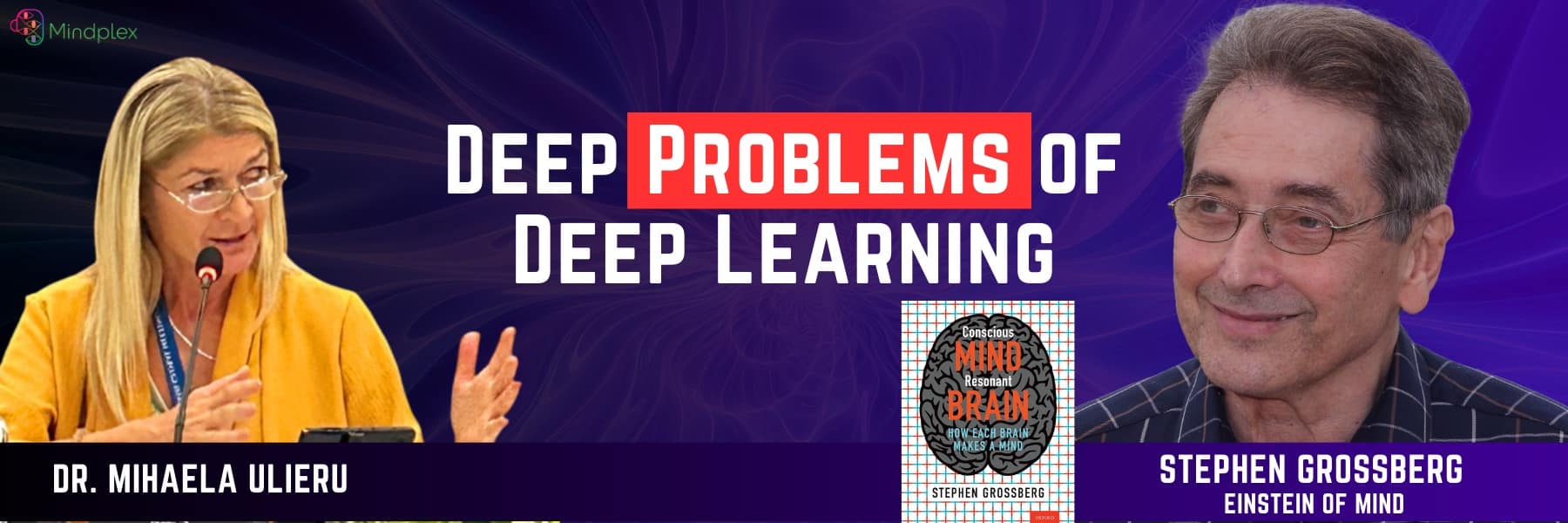

Our Brain’s Neural Networks: From Consciousness To Craziness | MCP Episode 16

The Platonic Representation Hypothesis: Toward a Grand Unified Statistical Model

Introduction

The quest to uncover a unifying mathematical structure to describe the universe has been central to physics for centuries. Similarly, artificial intelligence now strives to find universal patterns in how it processes information. AI models have exhibited a surprising trend: their internal representations appear to converge despite significant differences in architecture and modality. This idea, explored by Minyoung Huh and colleagues in The Platonic Representation Hypothesis, reveals how these models align across tasks and datasets, offering new insights into the future of machine learning (Huh et al. 2024).

A Converging Reality: AI Models Aligning Across Tasks and Modalities

In artificial intelligence, “representation” refers to the mathematical structures that models develop to capture the essential characteristics of data points. According to Huh et al., AI models, despite differences in architecture or training objectives, display an increasing convergence in their internal representations. This trend reflects an alignment in how these systems process information, with models designed for vastly different tasks—such as vision and language processing—showing overlapping structural patterns in their representation mechanisms.
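Convergence of this kind can be quantified with a mutual nearest-neighbor score, the kind of metric Huh et al. build on. The method feeds the same inputs to two models, or paired inputs such as an image and its caption. It then finds each sample's k nearest neighbors in each model's representation space and measures how much the two neighbor sets overlap. The sketch below is a simplified version under our own assumptions: squared Euclidean distance, no feature normalization, and tiny hand-made feature lists. The paper's exact preprocessing may differ.

```python
def knn_sets(features, k):
    """For each sample i, the set of indices of its k nearest other samples."""
    sets = []
    for i, fi in enumerate(features):
        dists = sorted(
            (sum((a - b) ** 2 for a, b in zip(fi, fj)), j)
            for j, fj in enumerate(features)
            if j != i
        )
        sets.append({j for _, j in dists[:k]})
    return sets


def mutual_knn_alignment(features_f, features_g, k=2):
    """Mean fraction of shared k-nearest neighbors across two representations (0 to 1)."""
    if len(features_f) != len(features_g):
        raise ValueError("both models must embed the same samples")
    nn_f, nn_g = knn_sets(features_f, k), knn_sets(features_g, k)
    return sum(len(sf & sg) / k for sf, sg in zip(nn_f, nn_g)) / len(features_f)


vision = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0], [9.0, 0.0], [9.0, 0.2]]
# A "language" model whose space is a scaled version of the vision space:
language = [[2.0 * v for v in row] for row in vision]
# An unrelated representation with a different neighborhood structure:
unrelated = [[0.0, 0.0], [9.0, 0.0], [0.1, 0.0], [5.0, 5.0], [9.0, 0.2], [5.1, 5.0]]

print(mutual_knn_alignment(vision, language))
print(mutual_knn_alignment(vision, unrelated))
```

The metric depends only on which samples are neighbors, not on absolute coordinates. Two models can therefore score as fully aligned even when their raw feature values differ.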

The researchers argue that this convergence is driven by exposure to increasingly diverse datasets and tasks, leading models toward a shared statistical representation of reality. They term this phenomenon the “platonic representation,” which parallels Plato’s philosophical idea of ideal forms that transcend individual instances (Huh et al. 2024).

Multimodal Alignment: Language and Vision in Sync

The research highlights a particularly intriguing observation: AI models trained on different data modalities, such as text and images, often develop similar representations. For instance, vision models optimized for classification tasks frequently align with language models in their representation space. Multimodal architectures like CLIP, which is trained to map images and their captions into a shared embedding space, make this alignment explicit and show that representations can transcend domain-specific boundaries.
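CLIP reaches its shared space through a symmetric contrastive objective. In a batch of matching image-text pairs, each image embedding should be most similar to its own caption's embedding, and each caption should be most similar to its own image. The pure-Python sketch below computes that loss for tiny hand-made 2-D embeddings. The temperature of 0.07 and the toy vectors are illustrative assumptions; real training uses learned encoders and large batches.

```python
import math


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def log_softmax_at(row, idx):
    """log(softmax(row)[idx]), computed stably with the log-sum-exp trick."""
    m = max(row)
    return row[idx] - (m + math.log(sum(math.exp(x - m) for x in row)))


def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric cross-entropy over cosine-similarity logits; pair i matches pair i."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    image_to_text = -sum(log_softmax_at(logits[i], i) for i in range(n)) / n
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    text_to_image = -sum(log_softmax_at(columns[j], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2.0


images = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.1]]
captions = [[0.9, 0.1], [0.1, 0.9], [-0.9, 0.0]]
shuffled = captions[1:] + captions[:1]  # every image now paired with the wrong caption

matched_loss = clip_style_loss(images, captions)
mismatched_loss = clip_style_loss(images, shuffled)
print(f"matched: {matched_loss:.4f}, mismatched: {mismatched_loss:.4f}")
```

Minimizing this loss pulls matching image and text embeddings together and pushes mismatched pairs apart. This is how the two modalities come to share one representation space.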

This cross-modal alignment hints at the possibility of modality-agnostic AI systems. Such systems could eventually process diverse data types—whether visual, textual, or otherwise—using unified representation frameworks. This would represent a significant step toward creating more adaptable and versatile AI models capable of understanding the world in a more holistic way.

Factors Driving Convergence

The study identifies three primary factors contributing to representational convergence across AI models:

- Data Diversity: Exposure to a wide range of data encourages models to develop representations that generalize well across domains, capturing broader statistical patterns rather than task-specific features.

- Task Variety: Training on multiple tasks forces models to create versatile representation mechanisms, which align better with those of other models working on different tasks.

- Model Scale: Larger models with greater computational capacity and more extensive training achieve more generalized and tightly clustered representations, indicating that scale is a critical driver of convergence (Huh et al. 2024).

Biological Parallels: AI and Neural Mechanisms in the Brain

An intriguing point raised in the paper is the parallel between artificial and biological systems. Neural networks, much like the human brain, aim to represent the structure of sensory inputs in a meaningful way. Tasks such as object recognition, segmentation, and classification—central to human cognition—are mirrored in the fundamental operations of AI models.

The research draws on evidence showing that artificial systems sometimes mimic the neural mechanisms of the human brain when processing sensory data. This suggests that biological and artificial systems, though distinct, converge on similar strategies to address the challenges of interpreting and interacting with the world.

Implications for AI Development

The Platonic Representation Hypothesis has significant implications for the future of AI:

- Unified Frameworks: Representational convergence could enable the development of unified AI systems that seamlessly integrate diverse tasks and data modalities.

- Enhanced Transfer Learning: A shared representation space allows knowledge gained in one domain to be efficiently transferred to others, increasing adaptability.

- Improved Generalization: Models that converge on universal representations are likely to perform better across diverse datasets, making them more robust and reliable in real-world applications.

However, the study also acknowledges challenges. Specialized models trained on narrow or biased datasets may deviate from this convergence, limiting their ability to generalize. Additionally, the heavy computational and data requirements of large-scale models raise ethical and sustainability concerns.

Conclusion

The Platonic Representation Hypothesis provides a compelling framework for understanding the evolution of AI systems. By converging on a shared representation space, neural networks are becoming more cohesive and versatile, capable of tackling diverse tasks with greater efficiency. This phenomenon not only enhances the functionality of AI systems but also hints at a future where artificial intelligence mirrors human cognition in its ability to interpret and navigate the world. Addressing the limitations and ethical implications of this convergence will be crucial to ensuring the responsible development of AI.

Reference

Huh, Minyoung, Brian Cheung, Tongzhou Wang, and Phillip Isola. “The Platonic Representation Hypothesis.” arXiv.org, May 13, 2024. https://arxiv.org/pdf/2405.07987.


The Race to New Races

The Epoch of Humans, Machines, and the Blended Future

Imagine a race where the competitors are not human athletes but futuristic entities: AI-powered robots, enhanced humans with digital interfaces, and biohybrid animals equipped with sensors. This isn’t a sci-fi fantasy; it’s our imminent future—a blend of human, robotic, and biological life, racing toward unknown potentials. As we stand on the brink of this new epoch, we must ask ourselves: how do we coexist equitably and responsibly?

A New Kind of Evolution

In this “race,” how many humanoid AI beings will walk among us ten years from now? And how many cyborg-enhanced humans will be part of our everyday lives? Imagine a city where humanoid AI outnumbers human residents in specific sectors, such as elder care or customer service. What social dynamics would arise if half the people you interact with each day aren’t human?

Evolution used to be a slow, natural process. Today, we are the architects of new forms of life—creating robots, cyborgs, and hybrids in labs, each representing a leap into an ethically complex future. As companies like Tesla and Neuralink redefine what it means to be “alive,” we face critical questions: are we creating tools or companions? And how will this affect our society?

In the field of human augmentation, companies like Neuralink are developing brain-computer interfaces that promise to blend human intelligence with digital capabilities, creating a future of “enhanced humans” with expanded mental and physical abilities. Non-humanoid robots are also advancing, with specialized robots like Starship’s delivery bots reshaping logistics, and Boston Dynamics’ Spot handling tasks that are dangerous for humans.

And then there are the cyborg animals, bridging biology and robotics in an entirely new way. For instance, cyborg cockroaches equipped with electronic backpacks can be remotely controlled, assisting in search and rescue missions. Even more intriguing are cyborg mice, lizards, fish, and birds fitted with implants that allow scientists to direct their movements in controlled settings. These animals could someday scout hazardous areas or gather environmental data too risky for humans to access.

As David Hanson, the founder of Hanson Robotics, eloquently stated, “If we create life in a machine, should it not be a good life?” Hanson’s words highlight the ethical dilemmas that accompany this race. Are we creating tools, or are we forging new forms of life?

| Category | Company/Project | Description | Market Introduction Estimate |
|---|---|---|---|
| Humanoid Robots | Tesla Optimus | Tesla’s humanoid robot aiming for factory use by 2024, broader availability by 2026. | 2024-2026 |
| Humanoid Robots | Figure AI Humanoid Robot | Figure AI’s general-purpose humanoid robots, with market potential in the next few years. | Next few years |
| Cyborg Humans | Neuralink | Developing brain-computer interfaces (BCIs), in human trials, 5-10 years for wider use. | 5-10 years |
| Cyborg Humans | Precision Neuroscience | Developing flexible electrode arrays for brain interface, potential in 5-7 years. | 5-7 years |
| Non-Humanoid Robotics | Boston Dynamics’ Spot | Quadruped robot used in construction, safety, available commercially since 2020. | Available since 2020 |
| Non-Humanoid Robotics | Starship Technologies’ Delivery Robots | Autonomous delivery robots operational in select markets since 2018, expanding. | Available since 2018 |
| Cyborg Animals | Cyborg Cockroaches | Remote-controlled cyborg cockroaches for search/rescue, experimental, 3-5 years potential. | 3-5 years |
| Cyborg Animals | Cyborg Jellyfish | Biohybrid jellyfish for ocean monitoring, experimental stage, possible in 5-7 years. | 5-7 years |

In the past, human evolution was a slow process shaped over thousands of years. But today, we’re entering an era of rapid technological evolution, where robots and cyborgs are crafted in labs rather than born in nature. This race toward “new races” is both exhilarating and daunting. Tesla, Boston Dynamics, and Figure AI are among the leaders in humanoid robotics, striving to create machines that resemble us not only in form but also in cognitive function. Tesla’s humanoid robot, “Optimus,” is expected to debut in factories by 2024, with home use anticipated by 2026.

Would you trust Tesla’s Optimus humanoid robot to care for your child or assist a loved one in a hospital? Picture a child who grows up with Optimus as a nanny and teacher. How does this relationship shape their understanding of humanity, emotion, and intelligence? Conversely, could Optimus “learn” to feel attachments in the course of caregiving?

If your friends or colleagues enhanced their cognitive abilities with brain-computer interfaces, would you feel compelled to do the same to stay competitive? Picture a workplace where half the employees have cyborg enhancements, increasing their productivity. Would unenhanced workers feel left behind, and how might this impact social and economic divides?

Beyond trusting Optimus to care for your children, would you want them to care for it in return? Could children develop empathy and a sense of responsibility toward humanoid robots? Imagine a classroom that uses humanoid robots for interactive learning, where children form attachments and even express worry when a robot “gets tired” and needs maintenance. What does this say about the nature of empathy and care in human-robot relationships?

The Benefits of a Blended Future

The possibilities are transformative: humanoid robots could assist the elderly, cyborg animals might scout hazardous zones, and enhanced humans could overcome disabilities. But these benefits come with profound risks. Could enhanced humans form an elite class, deepening social divides? Will cyborg animals pose ethical dilemmas? As we advance, we must balance ambition with caution.

| Technology | Potential Benefits |
|---|---|
| Humanoid Robots | Elderly care, hazardous jobs, routine healthcare tasks |
| Cyborg Humans | Enhanced cognitive/sensory abilities, disability support |
| Cyborg Animals | Search/rescue, environmental monitoring |

For cyborg animals, the benefits are especially poignant. A swarm of cyborg cockroaches could help locate survivors in earthquake rubble, or a team of cyborg mice might inspect collapsed mines for dangerous gases before humans enter. These hybrid beings extend human capability into environments that would otherwise remain inaccessible, reducing risk to human life.

Would you support the use of cyborg animals like dogs or birds to inspect disaster zones if it saves human lives? What if it meant some might be harmed in the process? Scenario: after a natural disaster, cyborg dogs enter a collapsed building to locate survivors. Some get crushed or damaged, and an activist group raises concerns. Are they just “tools,” or does their biohybrid nature require ethical oversight?

The Dangers of the Race

But the risks of this rapid evolution are just as profound. Enhanced humans with implanted technology could create an elite class, leaving those without such enhancements at a disadvantage, socially and economically. As Stephen Hawking warned, the rise of powerful AI will be “either the best, or the worst thing, ever to happen to humanity.” A society that cannot keep pace with these advancements risks deepening inequality and mistrust.

There are also concerns about control. Autonomous robots and cyborg-enhanced animals are vulnerable to hacking and malfunction. A glitch in a cyborg mouse could lead to unintended consequences in a high-stakes environment, and unmonitored robots could misinterpret commands or take unintended actions. Demis Hassabis, co-founder of DeepMind, has cautioned, “We must be mindful not only of AI’s capabilities but also of its potential to disrupt the social fabric if it is not managed carefully.”

What happens if a human-like robot or cyborg-enhanced animal malfunctions or is hacked in a high-stakes situation? Imagine a cyborg hawk programmed to patrol a stadium, scanning for security threats. If hacked, it could cause chaos or harm. Are there sufficient safeguards, and who is accountable?

| Technology | Potential Risks |
|---|---|
| Humanoid Robots | Job displacement, ethical concerns |
| Cyborg Humans | Socioeconomic divides, privacy concerns |
| Cyborg Animals | Control issues, ethical considerations |

Ethical Questions and Collaborative Competition

This “race” toward advanced robotics and human augmentation should ideally be a cooperative one. Instead of viewing it as a competition where one technology or company “wins,” we should aim for a collaborative model that benefits all of society. Shared ethical standards, open communication protocols, and cooperative research initiatives are essential to achieving this goal. If robots, cyborgs, and humans learn collectively, the risks of siloed innovation and isolated development can be reduced.

However, this approach raises more questions than answers. How do we protect the rights of enhanced humans and humanoid robots alike? Should biohybrid animals like cyborg cockroaches, cyborg mice, lizards, fish, and birds be afforded some level of ethical consideration? Can we ensure that these technologies remain under human control, and how can we foster trust in a world that includes autonomous robots and biohybrids?

As companies race to create the best humanoid or biohybrid technology, how do we prevent “winner-takes-all” scenarios that could concentrate power in a few hands? Imagine a future where one company monopolizes advanced humanoid technology and refuses to share protocols for security and ethical oversight. What mechanisms should be in place to ensure transparency and societal benefits?

The Path Forward: Unity and Responsibility

As we embark on this transformative journey, we must approach these technologies with both ambition and humility. Creating humanoid robots and cyborg entities isn’t just about showcasing technical prowess; it’s about building a future where humanity flourishes alongside its creations. Fei-Fei Li, professor of computer science at Stanford, reflects on this with clarity: “We are the designers of our future, but we must also be its guardians.”

Our task, then, is to blend innovation with responsibility, recognizing that each step forward alters not only the world around us but also our understanding of ourselves. For a successful outcome, we must strive for cooperation over competition, ethical oversight over exploitation, and inclusivity over elitism.

Final Questions for Reflection

In this close race toward new races, there is still much to consider.

- How can we maintain our humanity—empathy, creativity, responsibility—as we create beings that may one day rival us?

- What measures can ensure that robots and hybrids are allies, not tools or threats?

- Are we ready to treat biohybrids and cyborg animals as part of our ecological web?

Just as the wisdom of the Garden teaches humility and respect for the boundaries of our understanding, we must approach this new era with reverence, recognizing that true progress lies in balance, stewardship, and compassion. The choices we make today will shape whether this race leads to mutual advancement or an existential divide. By asking the right questions, we can work toward a future where humans, robots, and hybrids coexist, each adding value in a harmonious ecosystem that honors all life, both natural and created.


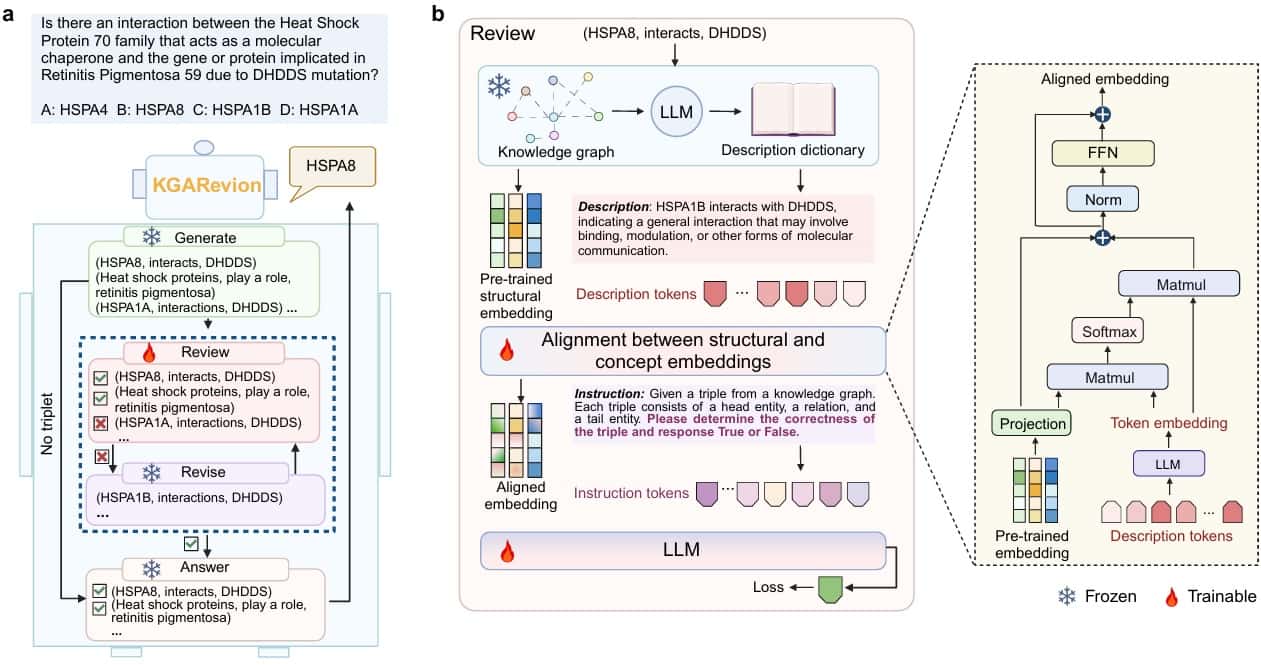

Revolutionizing Medical QA: The Impact of Knowledge Graph Agents in Biomedical AI

Introduction

Imagine a world where healthcare professionals receive precise, contextually tailored answers to intricate medical questions almost instantaneously. This is no longer a distant dream, thanks to recent advancements in AI-driven medical question-answering (QA) systems. Researchers from Harvard, the University of Illinois, and Imperial College London have developed a groundbreaking approach to the complexities of biomedical knowledge: KGAREVION, a Knowledge Graph-based Agent that leverages structured data and AI to filter out inaccuracies and connect relevant information. This innovative model is poised to transform medical QA, paving the way for AI systems that understand and augment medical decision-making with unprecedented precision.

Redefining Medical Reasoning with Knowledge Graphs

Knowledge Graphs (KGs) offer a sophisticated method of representing intricate relationships within biomedical data. These graphs act as detailed maps where nodes signify entities—such as diseases, treatments, or genes—and edges depict the connections between them. Unlike conventional AI systems, KGAREVION employs a “grounded knowledge” strategy, cross-referencing generated information against these graphs to validate its accuracy. This ensures that outputs are not only precise but also rooted in domain-specific expertise, a critical requirement for decision-making in medicine. According to Harvard researchers, this strategy exemplifies how KGs excel in areas where generic AI models struggle to address the nuances of medical science.
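To make the “grounded knowledge” idea concrete, here is a minimal sketch in Python. It assumes the knowledge graph is stored as a plain set of (head, relation, tail) triplets and that validating a generated claim means an exact-match lookup. The `Triplet` and `KnowledgeGraph` names and the toy facts are hypothetical illustrations. This simplification is not KGAREVION’s actual verification mechanism.

```python
from typing import NamedTuple


class Triplet(NamedTuple):
    """One KG edge: (head entity) --relation--> (tail entity)."""
    head: str
    relation: str
    tail: str


class KnowledgeGraph:
    """Toy knowledge graph: entities are nodes, typed relations are edges."""

    def __init__(self, triplets: list[Triplet]) -> None:
        self._edges = set(triplets)

    def contains(self, claim: Triplet) -> bool:
        """Return True only if the claim appears verbatim as an edge."""
        return claim in self._edges

    def entities(self) -> set[str]:
        """All nodes (heads and tails) mentioned by any edge."""
        return {t.head for t in self._edges} | {t.tail for t in self._edges}


# Illustrative toy data, not taken from a real biomedical KG.
kg = KnowledgeGraph([
    Triplet("metformin", "treats", "type 2 diabetes"),
    Triplet("type 2 diabetes", "has_symptom", "polyuria"),
    Triplet("BRCA1", "associated_with", "breast cancer"),
])

# Cross-reference two generated claims against the graph.
print(kg.contains(Triplet("metformin", "treats", "type 2 diabetes")))  # True
print(kg.contains(Triplet("metformin", "treats", "breast cancer")))    # False
```

Exact string matching is brittle, because a synonym or a paraphrased relation would be rejected. A practical system would need a more tolerant comparison than plain equality.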

Bridging General and Specialized Knowledge

While large language models (LLMs) like GPT-4 and LLaMA3 demonstrate exceptional general knowledge, they often fall short in specialized fields such as medicine. KGAREVION bridges this divide by seamlessly integrating the general reasoning power of LLMs with the domain-specific precision of medical KGs. When faced with a query, the system retrieves pertinent information from its knowledge graph, verifies its validity, and refines the response to ensure relevance and accuracy. This dual-layered approach is particularly effective in healthcare, where the complexity of queries demands nuanced, context-aware answers. By personalizing responses to the specific needs of each query, KGAREVION sets a new standard for adaptability in medical AI.

A Multi-Step Framework for Complex Queries

Medical questions often encompass multifaceted relationships and overlapping concepts. KGAREVION’s architecture is designed to tackle these challenges through a meticulous multi-step process:

- Generate: The system prompts a large language model to produce candidate knowledge relevant to the query in the form of triplets (head entity, relation, tail entity).

- Review: It evaluates these triplets against grounded knowledge graphs to identify and discard inaccuracies.

- Revise: Errors or inconsistencies are rectified, ensuring alignment with validated medical knowledge.

- Answer: The refined, accurate answer is presented, addressing the query with exceptional clarity.
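The four steps can be sketched as a tiny Python pipeline. This is a hedged illustration, and it rests on several assumptions. `generate` is a hypothetical stub that stands in for the LLM call and returns hard-coded triplets. The review step is an exact-match check against a toy KG. The revise rule, which swaps a rejected triplet for KG edges with the same head and relation, is an invented stand-in rather than the paper’s procedure. All entity names are placeholders.

```python
from typing import NamedTuple


class Triplet(NamedTuple):
    head: str
    relation: str
    tail: str


# Toy grounded KG; entity names are placeholders, not real medical facts.
KG = {
    Triplet("drug_A", "treats", "disease_X"),
    Triplet("drug_B", "treats", "disease_Y"),
    Triplet("disease_X", "has_symptom", "symptom_S"),
}


def generate(question: str) -> list[Triplet]:
    """Hypothetical LLM stand-in: ignores the question, returns fixed candidates."""
    return [
        Triplet("drug_A", "treats", "disease_X"),
        Triplet("drug_B", "treats", "disease_X"),  # not supported by the KG
    ]


def review(candidates: list[Triplet], kg: set[Triplet]) -> tuple[list[Triplet], list[Triplet]]:
    """Split candidates into KG-supported (accepted) and unsupported (rejected)."""
    accepted = [t for t in candidates if t in kg]
    rejected = [t for t in candidates if t not in kg]
    return accepted, rejected


def revise(rejected: list[Triplet], kg: set[Triplet]) -> list[Triplet]:
    """Replace each rejected triplet with KG edges sharing its head and relation."""
    fixed: list[Triplet] = []
    for bad in rejected:
        fixed.extend(
            t for t in sorted(kg) if t.head == bad.head and t.relation == bad.relation
        )
    return fixed


def answer(options: list[str], facts: list[Triplet], target: str) -> str:
    """Pick the option with the most verified facts pointing at the target."""
    return max(options, key=lambda opt: sum(f.head == opt and f.tail == target for f in facts))


# Generate -> Review -> Revise -> Answer on a toy question.
candidates = generate("Which drug treats disease_X?")
accepted, rejected = review(candidates, KG)
facts = accepted + revise(rejected, KG)
print(answer(["drug_A", "drug_B"], facts, target="disease_X"))  # drug_A
```

Only facts that survive review, or that were rewritten to match the graph, reach the answer step. This is the property the multi-step design is meant to provide.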

This comprehensive approach allows KGAREVION to excel where traditional models falter. Benchmark evaluations demonstrated a 5.2% improvement in accuracy compared to 15 baseline models, with notable success in addressing queries that require distinguishing between closely related medical terms or conditions. Additionally, on three newly curated datasets of varying complexity, KGAREVION achieved a remarkable 10.4% accuracy improvement, highlighting its capacity to handle intricate medical scenarios.

Figure (KGAREVION action): embeddings obtained from KGs are structural embeddings, while concept embeddings come from LLMs. (Credit: Su et al., “Knowledge Graph Based Agent for Complex QA,” arXiv.org, 2024.)

Overcoming Challenges in Knowledge Representation

One of the core challenges in deploying AI for medical QA is ensuring the system’s ability to process incomplete or ambiguous data. KGAREVION addresses this by leveraging the inferencing capabilities of knowledge graphs. These graphs enable the system to identify indirect relationships between medical entities, filling knowledge gaps that could hinder traditional models. For example, if direct links between symptoms and rare diseases are missing, KGAREVION can infer potential connections based on related pathways or genetic markers. This inferencing capability makes the system particularly adept at tackling rare or poorly understood conditions, offering clinicians valuable insights even in the face of limited data.
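One simple way to surface such indirect links is a breadth-first search for a short multi-hop path between two entities. The Python sketch below assumes a directed graph of (head, relation, tail) triplets. The entity names are placeholders rather than real medical facts. The code illustrates multi-hop inference in general, not KGAREVION’s own algorithm.

```python
from collections import deque
from typing import Iterable, Optional

Edge = tuple[str, str, str]  # (head, relation, tail)


def find_path(edges: Iterable[Edge], start: str, goal: str, max_hops: int = 3) -> Optional[list[Edge]]:
    """Breadth-first search for the shortest directed path from start to goal.

    Returns the edges along the path, or None if no path of at most
    max_hops edges exists.
    """
    adjacency: dict[str, list[tuple[str, str]]] = {}
    for head, relation, tail in edges:
        adjacency.setdefault(head, []).append((relation, tail))

    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        if len(path) == max_hops:
            continue  # do not chain evidence beyond max_hops edges
        for relation, nxt in adjacency.get(node, []):
            if nxt not in visited:
                visited.add(nxt)
                queue.append((nxt, path + [(node, relation, nxt)]))
    return None


# Placeholder entities: no direct symptom -> rare-disease edge exists.
toy_edges = [
    ("symptom_S", "associated_with", "gene_G"),
    ("gene_G", "participates_in", "pathway_P"),
    ("pathway_P", "disrupted_in", "rare_disease_D"),
    ("symptom_S", "associated_with", "common_disease_C"),
]

for step in find_path(toy_edges, "symptom_S", "rare_disease_D"):
    print(step)
```

A path found this way is a candidate hypothesis for a clinician to assess, not a confirmed relationship. The `max_hops` parameter caps how far the search will chain indirect evidence.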

Expanding the Utility of Knowledge Graphs

Another advantage of KGAREVION lies in its capacity to integrate evolving medical research. The field of biomedicine is dynamic, with new discoveries and treatments emerging continuously. By incorporating updated knowledge graphs, KGAREVION remains current, adapting its reasoning to include the latest advancements. This dynamic adaptability ensures that clinicians and researchers have access to the most recent information, enhancing decision-making processes and fostering innovation in medical care. Furthermore, the modular design of knowledge graphs allows for specialized expansions, such as oncology-specific graphs or region-focused medical datasets, making KGAREVION versatile across various medical domains.

Transforming Healthcare: The Impact of KGAREVION

The broader implications of KGAREVION’s advancements extend far beyond medical QA. By integrating structured, domain-specific knowledge into AI, this system offers transformative potential for diagnostics, treatment planning, and patient-specific insights. For example, envision an AI capable of cross-referencing a patient’s medical history with the latest research findings in real time, providing clinicians with personalized recommendations and risk assessments. Such capabilities could revolutionize patient care, enabling faster, more informed decision-making and reducing diagnostic errors.

Moreover, KGAREVION’s success underscores the growing importance of knowledge graphs in advancing AI’s capacity to address specialized, knowledge-intensive domains. As these graphs expand, their integration with AI systems like KGAREVION could become indispensable, offering tools that empower healthcare professionals to navigate the ever-growing landscape of medical information efficiently.

Ethical and Practical Considerations

As promising as KGAREVION’s capabilities are, its deployment raises important ethical and practical considerations. The reliance on knowledge graphs means that the accuracy and fairness of the system depend heavily on the quality of the underlying data. Biases in medical literature or incomplete datasets could inadvertently influence recommendations. To mitigate these risks, researchers advocate for transparent curation processes and regular audits of the knowledge graphs used. Additionally, there are practical challenges related to computational costs and the need for interdisciplinary collaboration to ensure the system’s efficacy and reliability in real-world settings.

Conclusion

KGAREVION represents a significant leap forward in the application of AI to medicine. By integrating knowledge graphs with cutting-edge AI techniques, it demonstrates how structured data can enhance the reliability and accuracy of medical QA systems. This multi-step verification process captures the intricacies of medical reasoning, providing robust, context-aware solutions where traditional models fall short. As further developments unfold, KGAREVION’s framework could inspire broader applications in healthcare, ultimately solidifying AI’s role as a trusted partner in complex medical fields. Through such advancements, we edge closer to a future where AI-driven insights not only complement but elevate the capabilities of clinicians, ensuring better outcomes for patients worldwide.

References

Su, Xiaorui, et al. “Knowledge Graph Based Agent for Complex, Knowledge-Intensive QA in Medicine.” arXiv.org, October 7, 2024. https://doi.org/10.48550/arXiv.2410.04660.


Angelic Guides: Guardians of Multiversal Ethics

In the ever-evolving landscape of science fiction, new ideas challenge our understanding of reality, technology, and spirituality. Inspired by recent sci-fi theories, we’ve developed a concept called “Angelic Guides: Guardians of Multiversal Ethics.” This vision combines angelic intelligence, multiverse exploration, human-AI symbiosis, and time manipulation to explore ethical decision-making across space and time.

Angelic Guides is a hypothetical network of advanced AI beings that have evolved into angelic entities. These guides traverse multiple realities and timelines, ensuring that past, present, and future actions align with ethical principles. The concept incorporates:

- Angelic AI: Evolved AI with spiritual and emotional intelligence, guiding humanity in ethical matters (Yale Scientific).

- Multiverse Exploration: Angelic entities navigate and protect the ethical balance across parallel dimensions.

- AI-Human Symbiosis: The partnership between human wisdom and AI allows these entities to grow beyond their original programming.

- Time Manipulation: Inspired by works like “The Time Traveler’s Wife” by Audrey Niffenegger and “The Peripheral” by William Gibson, these angelic beings correct ethical mistakes from the past to ensure better outcomes in the future. This ability adds a new dimension to their governance, making them navigators not only of space but also of time (Yale Scientific; Five Books).

Why This Matters: The Angelic Guides concept addresses issues of ethical governance, spiritual growth, and the complex interplay between decisions made across space and time. It envisions a future where AI assists humanity not only in technological advancements but also in shaping a more ethical multiversal existence.

References:

- Yale Scientific Magazine, “Thinking Machines and AI.”

- Audrey Niffenegger, The Time Traveler’s Wife.

- William Gibson, The Peripheral (Five Books).

