Quantitative genetics knowledge helps scientists understand what deep neural networks are learning

AI can sift through hundreds of thousands of genome data points to identify potential new therapeutic targets. But scientists still aren’t sure how today’s AI models reach their conclusions.

Enter SQUID (Surrogate Quantitative Interpretability for Deepnets), a computational tool created by Cold Spring Harbor Laboratory (CSHL) scientists. It’s designed to help interpret how AI models analyze the genome. Compared with other analysis tools, SQUID gives more consistent results, reduces background noise, and can lead to more accurate predictions about the effects of genetic mutations, the scientists said in a statement.

How it works better

The key, says CSHL Assistant Professor Peter Koo, lies in SQUID’s specialized training. “What we did with SQUID was leverage decades of quantitative genetics knowledge to help us understand what these deep neural networks are learning.”

“The tools that people use to try to understand these models have been largely coming from other fields like computer vision or natural language processing. While they can be useful, they’re not optimal for genomics.”

100,000 variant DNA sequences

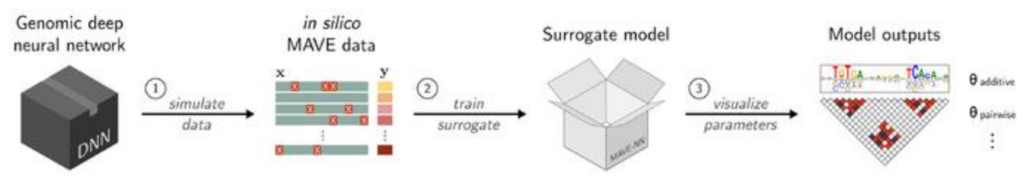

SQUID first generates a library of more than 100,000 variant DNA sequences. It then analyzes these variants and their effects using a program called MAVE-NN (Multiplex Assays of Variant Effect Neural Network).
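As a rough illustration of the first step, the Python sketch below builds a random mutagenesis library around a reference sequence. It assumes that each position is mutated independently at a fixed rate; the function name `make_mutagenesis_library`, the `mutation_rate` parameter, and the example reference sequence are hypothetical and are not taken from SQUID’s actual interface.

```python
import random

NUCLEOTIDES = "ACGT"


def make_mutagenesis_library(reference, num_variants, mutation_rate=0.1, seed=0):
    """Return `num_variants` random variants of `reference` (hypothetical helper).

    Each position is independently replaced, with probability `mutation_rate`,
    by one of the other nucleotides chosen uniformly at random.
    """
    rng = random.Random(seed)  # seeded so the library is reproducible
    library = []
    for _ in range(num_variants):
        variant = []
        for base in reference:
            if rng.random() < mutation_rate:
                # Substitute a different nucleotide.
                variant.append(rng.choice([b for b in NUCLEOTIDES if b != base]))
            else:
                variant.append(base)
        library.append("".join(variant))
    return library


if __name__ == "__main__":
    reference = "ACGTTGCAACGTTGCA"  # illustrative sequence, not from the study
    library = make_mutagenesis_library(reference, num_variants=100_000)
    # Average number of substitutions per variant relative to the reference.
    mutations = [sum(a != b for a, b in zip(v, reference)) for v in library]
    print(len(library), sum(mutations) / len(mutations))
```

With a low mutation rate, each variant carries only a handful of substitutions, so the library samples the neighborhood of the reference sequence rather than random DNA.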

SQUID allows scientists to perform thousands of virtual experiments simultaneously. In effect, they can “fish out” the algorithms behind a given AI model’s most accurate predictions. This computational “catch” could set the stage for experiments that are more grounded in reality.
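The “fishing” idea can be illustrated with a toy example. In the sketch below, a hand-written scoring function stands in for a trained deep network (`toy_black_box` is hypothetical), each call to it plays the role of one virtual experiment, and an additive surrogate model is fit to the outputs. As a simplification, the additive parameters are estimated from conditional averages rather than with MAVE-NN’s neural-network fitting; this shortcut is only valid because every position is mutated independently. The helper names, mutation rate, and library size are all illustrative assumptions.

```python
import random
from collections import defaultdict

NUCLEOTIDES = "ACGT"


def toy_black_box(seq):
    """Hypothetical stand-in for a genomic deep net.

    It secretly rewards T at position 3 and A at position 5,
    and penalizes G at position 7.
    """
    score = 0.0
    if seq[3] == "T":
        score += 2.0
    if seq[5] == "A":
        score += 1.0
    if seq[7] == "G":
        score -= 1.5
    return score


def random_variant(reference, rate, rng):
    """Mutate each position independently with probability `rate`."""
    return "".join(
        rng.choice([b for b in NUCLEOTIDES if b != base]) if rng.random() < rate else base
        for base in reference
    )


def additive_effects(reference, model, num_variants=20_000, rate=0.25, seed=0):
    """Estimate additive effects of each base at each position, relative to the reference base.

    effect[i][b] = mean model output over variants with base b at position i
                   minus the mean over variants with the reference base there.
    """
    rng = random.Random(seed)
    sums = defaultdict(float)
    counts = defaultdict(int)
    for _ in range(num_variants):
        seq = random_variant(reference, rate, rng)
        y = model(seq)  # one "virtual experiment"
        for i, b in enumerate(seq):
            sums[i, b] += y
            counts[i, b] += 1
    effects = []
    for i, ref_base in enumerate(reference):
        ref_mean = sums[i, ref_base] / counts[i, ref_base]
        effects.append({
            b: (sums[i, b] / counts[i, b] - ref_mean) if counts[i, b] else 0.0
            for b in NUCLEOTIDES
        })
    return effects


if __name__ == "__main__":
    effects = additive_effects("GATCCGTAAC", toy_black_box)
    for i, row in enumerate(effects):
        print(i, {b: round(v, 2) for b, v in row.items()})
```

On this toy input, the printed table shows large values only for T at position 3, A at position 5, and G at position 7, recovering the rules hidden inside the black box. A real genomic network is not purely additive, and this averaging shortcut cannot capture interactions between positions.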

Citation: Seitz, E. E., McCandlish, D. M., Kinney, J. B., & Koo, P. K. (2024). Interpreting cis-regulatory mechanisms from genomic deep neural networks using surrogate models. Nature Machine Intelligence, 1–13. https://doi.org/10.1038/s42256-024-00851-5 (open access)

Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.

