Scientists at Oak Ridge National Laboratory have found a way to track quick changes in magnetic materials at the nanoscale.

The scientists have described the methods and results of this study in a paper published in Nano Letters.

The findings could help advance both conventional computing and emerging quantum computing technologies.

Materials often undergo phase transitions, in which key properties change in steps as the temperature varies. Understanding how a material behaves near its critical transition temperature, where the largest changes occur, helps researchers design technologies with tailored properties. The scientists used a nanoscale quantum sensor, a tool that detects tiny quantum signals, to observe spin fluctuations in a magnetic thin film. Spin fluctuations occur when a material’s magnetic orientation keeps switching instead of staying fixed. Thin films, which are extremely thin layers of material, matter for data storage and electronic devices because scientists can readily tune their magnetic properties.

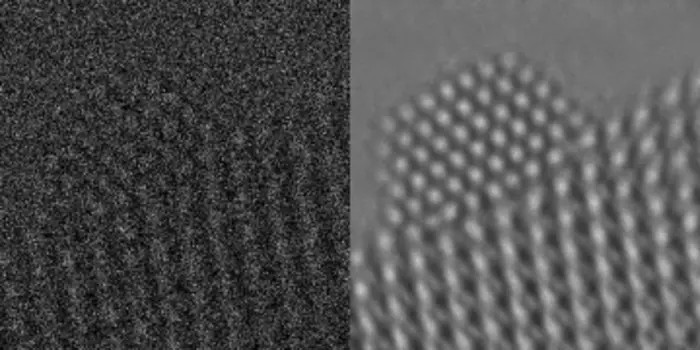

The scientists relied on a scanning nitrogen-vacancy center microscope at ORNL’s Center for Nanophase Materials Sciences. A nitrogen-vacancy center is an atomic-scale defect in diamond in which a nitrogen atom replaces a carbon atom and a neighboring carbon atom is missing. This arrangement creates a distinctive set of quantum spin states; spin is the basic unit of magnetism in quantum technologies. The microscope senses both static and fluctuating magnetic fields, letting researchers resolve fine spin details in nanoscale structures.

Toward new spintronics for advanced computing

In an ORNL press release, the scientists explain that the nitrogen-vacancy center acts as both a qubit and a sensitive detector. They scanned it over the thin film and tracked spin fluctuations as the film switched between magnetic states with changing temperature. The measurements showed how local spin changes connect across the material near phase transitions.

These discoveries could lead to new spin-based technologies, known as spintronics, that improve data storage and computing power. Quantum computing with spins might also solve hard problems beyond the reach of conventional computers, provided scientists learn to control spin interactions.

This work ties together ORNL’s expertise in quantum information science and condensed matter physics. Using today’s quantum tools to understand materials could lead to better quantum devices for networking, sensing, and computing.
