MIT researchers have fully 3D-printed active electronics. They produced working logic gates, the basic building blocks that computers and other electronic devices need to function.

The MIT team described the methods and results of this study in a research paper titled “Semiconductor-free, monolithically 3D-printed logic gates and resettable fuses,” published in Virtual and Physical Prototyping.

Traditional electronics use semiconductors (like silicon) to control electrical signals. The MIT team created logic gates without using semiconductors. Instead, they used a special conductive polymer, a large molecule that can carry electricity.

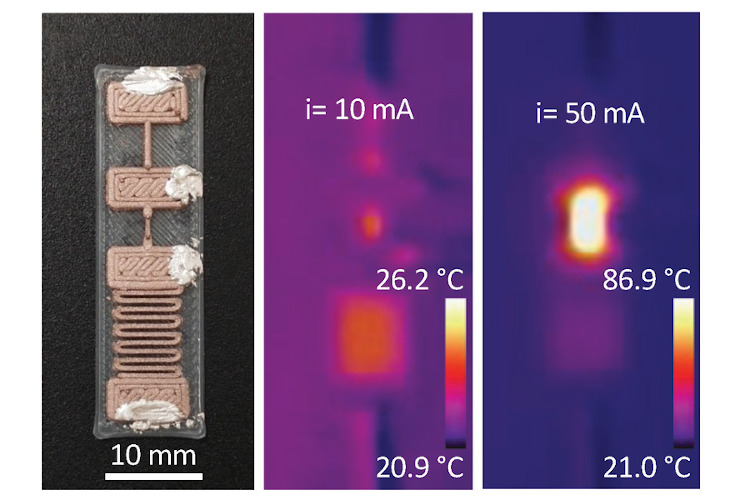

The researchers used polymer filaments doped with copper nanoparticles to 3D-print resettable fuses that still work after a fault. These fuses are the key enabler of the study's encouraging results.

3D printing could make electronic devices simpler and faster to produce, which could lead to cheaper and more accessible electronics. It also makes it easier to customize electronics for specific needs.

The materials and methods used are also more environmentally friendly than traditional electronics manufacturing, which often involves toxic chemicals and complex processes.

The MIT team also tested alternatives to the copper-doped filaments, including polymers doped with carbon, carbon nanotubes, and graphene, but could not find another printable material that could function as a resettable fuse.

Democratizing electronics

“This technology has real legs,” says lead researcher Luis Fernando Velásquez-García in an MIT press release. “While we cannot compete with silicon as a semiconductor, our idea is not to necessarily replace what is existing, but to push 3D printing technology into uncharted territory.”

The ability to 3D print an entire, active electronic device without the need for semiconductors could bring electronics fabrication to businesses, labs, and homes across the globe.

Velásquez-García adds that this is really about democratizing technology. “This could allow anyone to create smart hardware far from traditional manufacturing centers,” he says.
