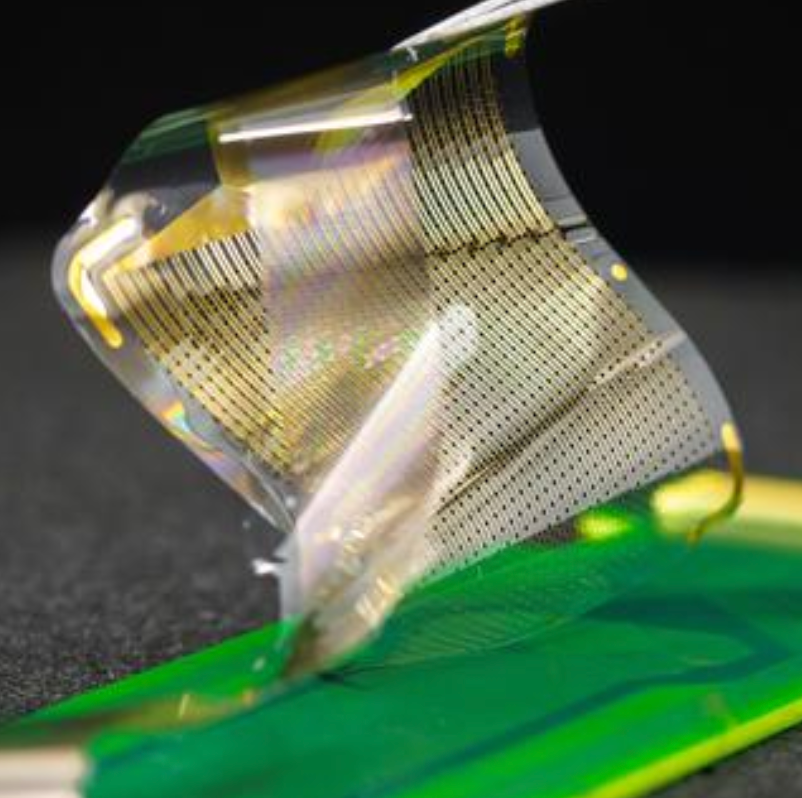

An innovative “Hydropanel” device that distills water directly from atmospheric water vapor, powered only by sunlight, has been developed by Source, a company based in Scottsdale, Arizona.

After distillation, the water is mineralized to reach an ideal pH and TDS (total dissolved solids) level, resulting in “safe, premium-quality drinking water,” the company says. The added minerals include essential magnesium and calcium, which are intended to improve absorption by the body and to refine alkalinity and taste.

“Each Hydropanel can produce up to three liters of drinking water a day, about the average daily intake for one person,” according to Cody Friesen, an Associate Professor at the School for Engineering of Matter, Transport and Energy at Arizona State University.

Other, larger solar-powered devices for producing water are also commercially available.

The company also plans to offer a canned version.

