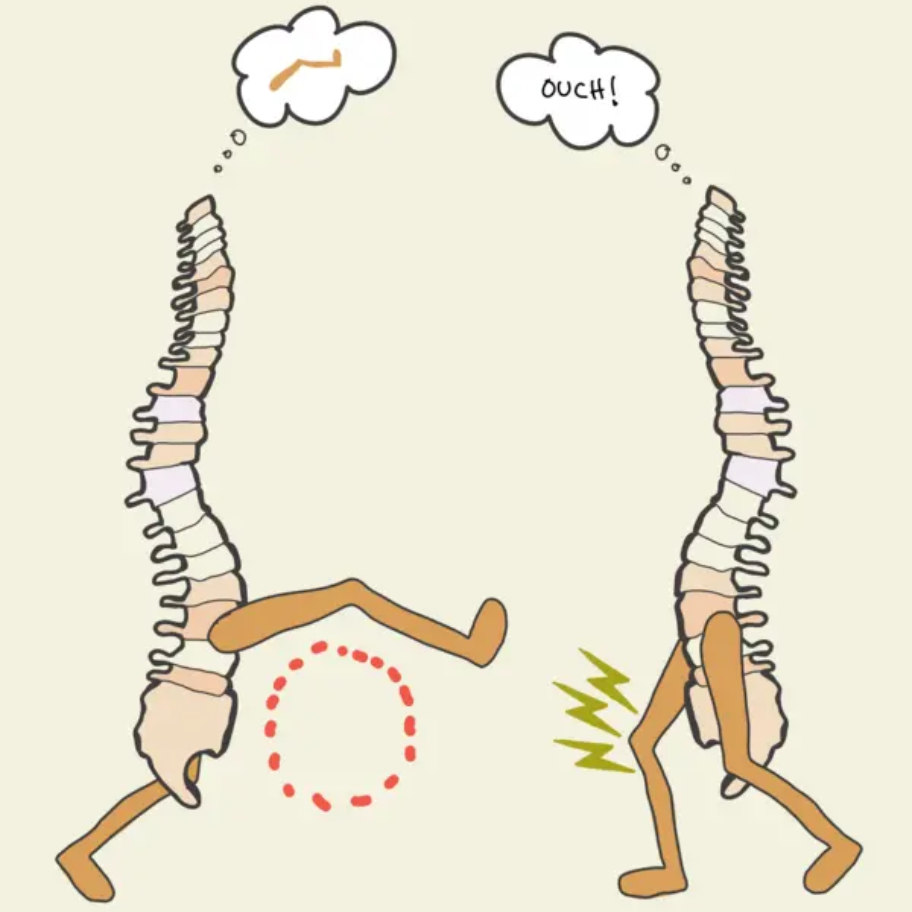

In a new study involving thousands of simulated cases of patients with chest pain, GPT-4 provided inconsistent conclusions, returning different heart-risk assessment levels for the same patient data.

Despite its reported ability to pass medical exams, GPT-4 also failed to match the traditional methods physicians use to judge a patient’s cardiac risk.

These findings were published in the journal PLOS ONE.

“ChatGPT was not acting in a consistent manner,” said lead author Dr. Thomas Heston, a researcher with Washington State University’s Elson S. Floyd College of Medicine. “Given the exact same data, ChatGPT would give a score of low risk, then next time an intermediate risk, and occasionally, it would go as far as giving a high risk.”

Weakness: Built-in randomness

The authors believe the problem is likely due to the level of randomness built into the current version of the software, which helps it vary its responses to simulate natural language. However, this same randomness does not work well for healthcare uses that require a single, consistent answer, Heston said.
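As a rough illustration of why built-in randomness can produce different answers from identical input, here is a minimal sketch of temperature sampling, a common way language models vary their output. It is a toy model, not the study's method and not GPT-4's actual internals: the three scores, the `sample_risk` and `tally` helpers, and the temperature values are all hypothetical.

```python
import math
import random
from collections import Counter

RISK_LEVELS = ["low", "intermediate", "high"]


def sample_risk(scores, temperature, rng):
    """Pick one risk level from hypothetical model scores.

    A temperature of 0 always returns the top-scoring level;
    higher temperatures sample from softmax(scores / temperature).
    """
    if temperature <= 0:
        # Greedy choice: the same input always yields the same answer.
        best = max(range(len(scores)), key=scores.__getitem__)
        return RISK_LEVELS[best]
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(RISK_LEVELS, weights=weights, k=1)[0]


def tally(scores, temperature, runs=1000, seed=0):
    """Count the answers given across repeated runs on the same input."""
    rng = random.Random(seed)
    return Counter(sample_risk(scores, temperature, rng) for _ in range(runs))


if __name__ == "__main__":
    # Hypothetical scores for one patient: the toy model leans toward "low".
    scores = [2.0, 1.2, 0.1]
    for temperature in (0.0, 1.0):
        print(temperature, dict(tally(scores, temperature)))
```

In this sketch, a temperature of 0 returns the same level on every run, while a temperature of 1 spreads the answers across several levels even though the input never changes.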

“We found there was a lot of variation, and that variation in approach can be dangerous,” he said. “It can be a useful tool, but I think the technology is going a lot faster than our understanding of it, so it’s critically important that we do a lot of research, especially in these high-stakes clinical situations.”

Chest pain is a common complaint in emergency rooms, requiring doctors to rapidly assess the urgency of a patient’s condition. Some very serious cases are easy to identify by their symptoms, but lower-risk ones can be trickier, Heston notes, especially when determining whether someone should be hospitalized for observation or sent home to receive outpatient care.

Potential in healthcare

Despite the negative findings of this study, Heston sees great potential for generative AI in healthcare. “ChatGPT could be excellent at creating a differential diagnosis and that’s probably one of its greatest strengths,” he said.

“If you don’t quite know what’s going on with a patient, you could ask it to give the top five diagnoses and the reasoning behind each one. So it could be good at helping you think through a problem, but it’s not good at giving the answer.”

Citation: Thomas F. Heston, Lawrence M. Lewis. April 16, 2024. ChatGPT provides inconsistent risk-stratification of patients with atraumatic chest pain. PLOS ONE. 10.1371/journal.pone.0301854 (open access)
