Google DeepMind has contributed data on nearly 400,000 new compounds to the Materials Project at the Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab), for use by researchers.

The data could help researchers design future materials such as lighter alloys that improve fuel economy in cars, more efficient solar cells to boost renewable energy, and faster transistors for the next generation of computers.

The Materials Project, an open-access database founded at Berkeley Lab in 2011, computes the properties of both known and predicted materials for use in future technologies. The data for each new compound include how the atoms of the material are arranged (the crystal structure) and how stable it is (the formation energy).
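As a rough illustration of what a formation energy measures, the short Python sketch below subtracts the energies of the separated elements from the total energy of a compound and divides by the number of atoms. The function name, the element labels "A" and "B", and all energy values are hypothetical placeholders chosen for the arithmetic; they are not Materials Project data and this is not the project's API.

```python
from typing import Dict


def formation_energy_per_atom(
    total_energy: float,
    composition: Dict[str, int],
    reference_energies: Dict[str, float],
) -> float:
    """Return the formation energy per atom of a compound.

    total_energy: computed energy of one formula unit (eV).
    composition: number of atoms of each element in the formula unit.
    reference_energies: energy per atom of each pure element (eV).
    """
    n_atoms = sum(composition.values())
    if n_atoms == 0:
        raise ValueError("composition must contain at least one atom")
    # Energy the same atoms would have as separate pure elements.
    reference_total = sum(
        count * reference_energies[element]
        for element, count in composition.items()
    )
    return (total_energy - reference_total) / n_atoms


# Hypothetical compound AB2 with made-up energies, for illustration only.
example = formation_energy_per_atom(
    total_energy=-10.0,
    composition={"A": 1, "B": 2},
    reference_energies={"A": -2.0, "B": -3.0},
)
print(f"{example:.3f} eV/atom")
```

A negative result means the compound is lower in energy than its separated elements. Lower formation energies generally point toward greater stability.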

“We have to create new materials if we are going to address the global environmental and climate challenges,” said Kristin Persson, the founder and director of the Materials Project at Berkeley Lab and a professor at UC Berkeley. “With innovation in materials, we can potentially develop recyclable plastics, harness waste energy, make better batteries, and build cheaper solar panels that last longer, among many other things.”

Deep learning tool

To generate the new data, Google DeepMind developed a deep learning tool called Graph Networks for Materials Exploration, or GNoME. Researchers trained GNoME using workflows and data that were developed over a decade by the Materials Project, and improved the GNoME algorithm through active learning.

GNoME researchers ultimately produced 2.2 million crystal structures. Of these, they predict that 380,000 are stable, making them potentially useful in future technologies, and they are adding those to the Materials Project. The new results from Google DeepMind are published today in the journal Nature.

Quick discovery

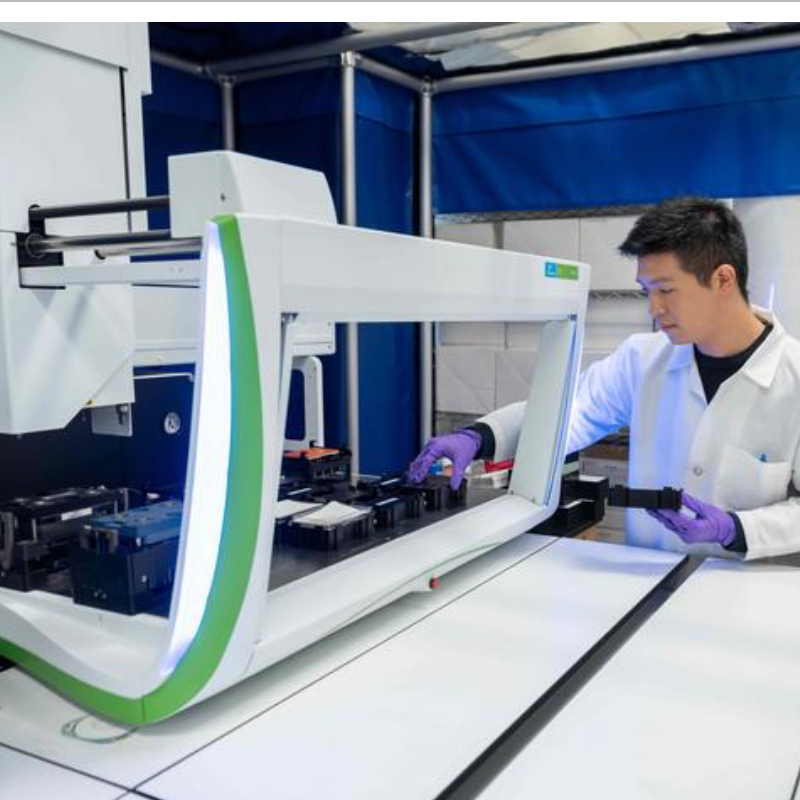

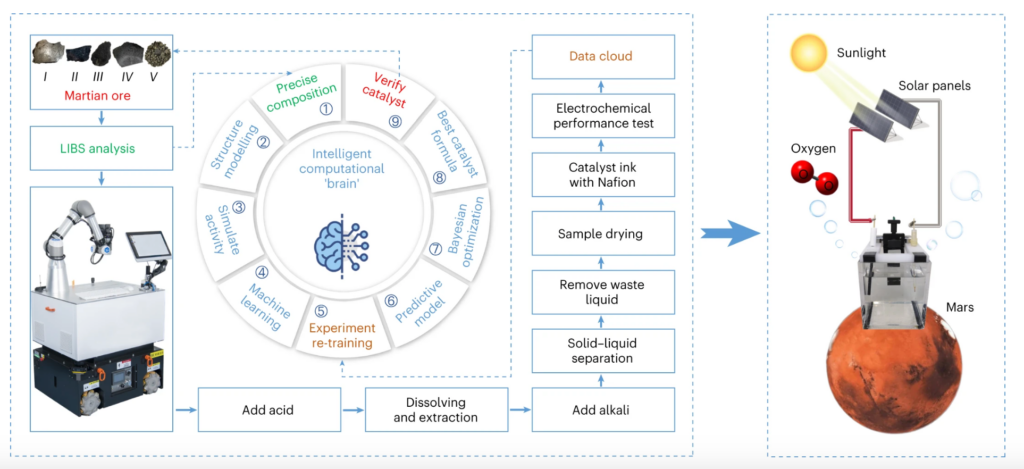

Some of the computations from GNoME were used alongside data from the Materials Project to test A-Lab, a facility at Berkeley Lab where artificial intelligence guides robots in making new materials. A-Lab’s first paper, also published today in Nature, showed that the autonomous lab can quickly discover novel materials with minimal human input.

To make the novel compounds predicted by the Materials Project, A-Lab’s AI created new recipes by combing through scientific papers and using active learning to make adjustments. Data from the Materials Project and GNoME were used to evaluate the materials’ predicted stability.

The Materials Project is the most widely used open-access repository of information on inorganic materials in the world. The database holds millions of properties for hundreds of thousands of structures and molecules, information primarily processed at Berkeley Lab’s National Energy Research Scientific Computing Center (NERSC).

More than 400,000 people are registered as users of the site and, on average, more than four papers citing the Materials Project are published every day. The contribution from Google DeepMind is the biggest addition of structure-stability data from a group since the Materials Project began.

New data freely available

The Materials Project is now processing the compounds from Google DeepMind and adding them to the online database. The new data will be freely available to researchers and will also feed into projects such as A-Lab that partner with the Materials Project.

By following promising leads from data in the Materials Project over the past decade, researchers have experimentally confirmed useful properties in new materials across several areas.

These include carbon capture (pulling carbon dioxide from the atmosphere), photocatalysts (materials that speed up chemical reactions in response to light and could be used to break down pollutants or generate hydrogen), thermoelectrics (materials that could help harness waste heat and turn it into electrical power), and transparent conductors (which might be useful in solar cells, touch screens, or LEDs).

Citation: Szymanski, N. J., Rendy, B., Fei, Y., Kumar, R. E., He, T., Milsted, D., McDermott, M. J., Gallant, M., Cubuk, E. D., Merchant, A., Kim, H., Jain, A., Bartel, C. J., Persson, K., Zeng, Y., & Ceder, G. (2023). An autonomous laboratory for the accelerated synthesis of novel materials. Nature, 1-6. https://doi.org/10.1038/s41586-023-06734-w (open access)
