Seeing inside the black box of deep neural networks

Dec. 16, 2024.

Researchers have found a way to visualize how deep neural networks organize information, which helps spot errors.

Deep neural networks work by passing data through several layers, starting with an input layer that takes in raw data. Hidden layers then analyze this data, with early layers spotting basic features and deeper layers recognizing more complex patterns.

For example, in a deep neural network for image recognition, early layers spot basic features such as edges, and deeper layers recognize more complex patterns, such as a cat or a dog.
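The layering idea can be illustrated with a toy, hand-written sketch. This is not a trained network: the functions `edge_layer` and `contains_bump` are hypothetical stand-ins, assuming a 1D signal instead of an image, and they only show how a later stage can build a more complex pattern out of simple features found by an earlier one.

```python
def edge_layer(signal):
    """'Early layer': difference between neighboring values; nonzero means an edge."""
    return [b - a for a, b in zip(signal, signal[1:])]


def contains_bump(edges):
    """'Deeper layer': a bump is a rising edge followed later by a falling edge."""
    rising = [i for i, e in enumerate(edges) if e > 0]
    falling = [i for i, e in enumerate(edges) if e < 0]
    return bool(rising) and bool(falling) and min(rising) < max(falling)


signal = [0, 0, 1, 1, 0, 0]          # hypothetical input with one bump
edges = edge_layer(signal)           # basic features
has_bump = contains_bump(edges)      # pattern built from those features
flat_has_bump = contains_bump(edge_layer([0, 0, 0]))
```

In a real network both stages are learned from data rather than written by hand, which is part of why their inner workings are hard to inspect.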

But the hidden layers are like a black box: we see the inputs and outputs, but not what happens inside. This opacity becomes a problem when AI makes errors, sometimes because of just a tiny change in the input.

Researchers at Kyushu University have found a way to visualize how these networks organize information and described it in a paper published in IEEE Transactions on Neural Networks and Learning Systems.

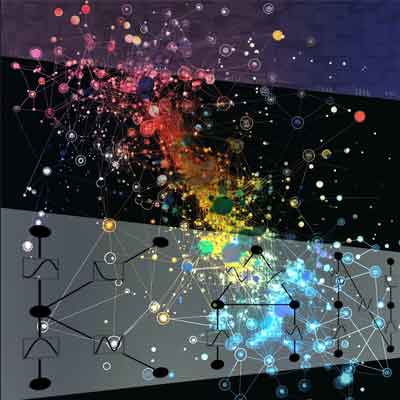

Traditional visualization methods reduce high-dimensional data to 2D or 3D, which loses detail and makes it hard to compare different neural networks or data classes.
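A small sketch can show how reducing dimensions loses detail. It assumes the crudest possible reduction, simply dropping one coordinate, rather than any method the researchers compared against. The points, labels, and the helper `min_between_class_distance` are all made up for illustration.

```python
import math


def min_between_class_distance(points, labels):
    """Smallest distance between any two points that carry different labels."""
    return min(
        math.dist(p, q)
        for p, lp in zip(points, labels)
        for q, lq in zip(points, labels)
        if lp != lq
    )


# Hypothetical features: the two classes differ only along the third axis.
points_3d = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 0.0, 5.0), (1.0, 1.0, 5.0)]
labels = ["cat", "cat", "dog", "dog"]

# Crude 2D "projection": keep x and y, discard the third coordinate.
points_2d = [(x, y) for x, y, _ in points_3d]

gap_3d = min_between_class_distance(points_3d, labels)  # classes are 5.0 apart
gap_2d = min_between_class_distance(points_2d, labels)  # classes now coincide: 0.0
```

In the full space the classes are cleanly separated, while in the flattened view they sit on top of each other, so a 2D picture alone would wrongly suggest the data is mixed.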

Organizing the data

The new method, called the k* distribution method, keeps the data in higher dimensions, preventing information loss. It assigns each data point a k* value that reflects how far the point is from unrelated data, meaning data from other classes. A high k* value means good separation, like cats far from dogs, while a low value indicates overlap, making classification errors more likely. Looking at how these values are distributed reveals whether the data is clustered, with similar items grouped together, or fractured or overlapping, with items scattered or mixed, which can lead to classification mistakes.
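The idea of a per-point separation score can be sketched as follows. This is a simplified reading, not the paper's implementation: it assumes that a point's k* value is the number of same-class neighbors encountered before the first neighbor from another class, with distances measured in the full feature space. The function name `k_star_values` and the sample points are hypothetical.

```python
import math


def k_star_values(points, labels):
    """Count, per point, the same-class neighbors met before the first other-class one."""
    values = []
    for i, (p, label) in enumerate(zip(points, labels)):
        # Rank every other point by Euclidean distance, without reducing dimensions.
        neighbors = sorted(
            (j for j in range(len(points)) if j != i),
            key=lambda j: math.dist(p, points[j]),
        )
        count = 0
        for j in neighbors:
            if labels[j] != label:
                break  # first "unrelated" neighbor reached
            count += 1
        values.append(count)
    return values


labels = ["cat", "cat", "cat", "dog", "dog", "dog"]

# Well-sorted case: each class forms its own tight group.
clustered = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (10, 10, 10), (11, 10, 10), (10, 11, 10)]

# Overlapping case: one "dog" sits in the middle of the cats.
overlapping = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (10, 10, 10), (11, 10, 10), (0.4, 0.4, 0)]

k_clustered = k_star_values(clustered, labels)      # [2, 2, 2, 2, 2, 2]
k_overlapping = k_star_values(overlapping, labels)  # [0, 0, 0, 1, 1, 0]
```

Under this assumption, the clustered set gives every point the highest score possible with three items per class, while a single misplaced item drags the scores of all its neighbors down to zero.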

In a Kyushu University press release, researcher Danilo Vasconcellos Vargas explains this like organizing a warehouse: if items are well sorted, finding them is easy; if not, errors occur.

This approach is crucial for AI used in safety-critical areas like self-driving cars or medical diagnosis. It helps evaluate AI’s decision-making, identify weaknesses, and improve reliability, especially when dealing with incomplete or unclear data. The goal is to make AI systems that are not only accurate but also adaptable to real-world challenges.

2 thoughts on “Seeing inside the black box of deep neural networks”

Pics or didn't happen :))

There's a picture in the press release.
