The Future of Lifelike Audio-Driven Talking Faces: Microsoft Research Asia and VASA-1

Jul. 08, 2024. 2 mins. read.

63 Interactions

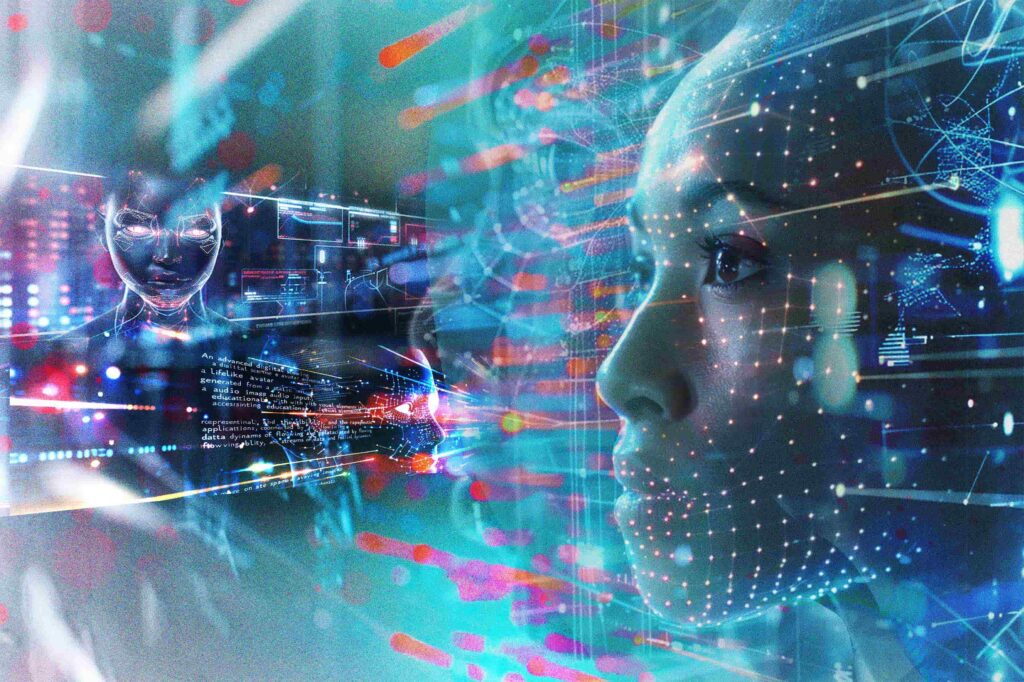

Microsoft Research Asia has introduced VASA-1, a groundbreaking framework for generating lifelike talking faces with appealing visual affective skills (VAS) given a single static image and a speech audio clip.

In the digital age where multimedia and communication technologies continue to impress the masses with their dramatic advancement, Microsoft Research Asia introduces VASA-1, a transformative model designed to generate real-time, lifelike talking faces from a single static image and a speech audio clip. This technology pushes the boundaries of audio-visual synchronization and enhances the realism and effectiveness of human-computer interactions across various domains.

Comprehensive Overview of VASA-1 Technology

VASA-1 stands out for its ability to produce synchronized lip movements, natural facial expressions, and head movements.

Core Innovations:

- Holistic Facial Dynamics Modeling: Unlike traditional methods that treat different facial features separately, VASA-1 models all aspects of facial dynamics—including lip movements, eye gaze, and other expressions—as a single latent variable. This approach ensures seamless integration and fluid motion, contributing to the model’s lifelike outputs.

- Diffusion Transformer Model: At the heart of VASA-1’s capability is a Diffusion Transformer model that enhances the generative process. This model is trained on a vast dataset of face videos, allowing it to accurately replicate human-like nuances in facial dynamics and head movements based on audio inputs alone.

Expanding the Horizons of Digital Communication

VASA-1’s application potential is vast and varied:

- Enhanced Accessibility: VASA-1 can facilitate more expressive interactions for individuals with communicative impairments, providing a platform for clearer and more empathetic communication.

- Education and Learning: In educational settings, VASA-1 can serve as an interactive tool for AI-driven tutoring, capable of delivering instructional content with engaging and responsive facial expressions that mimic human tutors.

- Therapeutic Use: The technology also holds promise in healthcare, particularly in therapeutic settings where lifelike avatars can offer social interaction and emotional support to patients.

Technical Specifications and Performance Metrics

VASA-1 delivers high-resolution videos (512×512 pixels) at up to 40 frames per second, with negligible starting latency, making it ideal for real-time applications. The model’s efficiency and quality are evidenced by its performance across several newly developed metrics for evaluating lifelike digital animations, where it significantly outperforms existing methods.

Future Directions and Ethical Considerations

Looking ahead, the development team aims to refine VASA-1’s capabilities by:

- Broadening Emotional Range: Incorporating a wider array of emotions and talking styles to cover more nuanced interactions.

- Full-Body Dynamics: Expanding the model to include full-body dynamics for complete digital persona creation.

- Multi-Lingual and Non-Speech Sounds: Enhancing the model’s responsiveness to a broader spectrum of audio inputs, including multiple languages and non-verbal sounds.

The ongoing development will focus on safeguarding against misuse, particularly in impersonation or deceptive uses.

Conclusion

VASA-1 by Microsoft Research Asia represents a significant step forward in the convergence of AI and human interaction. By delivering real-time, high-fidelity talking faces, VASA-1 opens new pathways for making digital interactions as rich and engaging as face-to-face conversations. It promises not only to transform user experiences but also to foster connections that transcend the digital divide.

Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.

.png)

.png)

.png)

26 Comments

26 thoughts on “The Future of Lifelike Audio-Driven Talking Faces: Microsoft Research Asia and VASA-1”

Bringing realistic talking faces to digital interactions could be a game-changer for bridging the gap between online and real-life communication.

🟨 😴 😡 ❌ 🤮 💩

Microsoft Research Asia's innovation in generating lifelike talking faces from a single image and audio clip is truly groundbreaking. This technology promises to revolutionize digital communication and enhance user interactions across various fields.

🟨 😴 😡 ❌ 🤮 💩

VASA-1's marriage of holistic facial dynamics and diffusion transformers presents a promising avenue for enhanced realism in AI-powered communication. The potential applications in accessibility and therapeutic settings are particularly intriguing, fostering more inclusive and engaging interactions. Addressing ethical considerations throughout development is commendable, ensuring this technology serves as a force for good.

🟨 😴 😡 ❌ 🤮 💩

VASA-1's ability to generate real-time talking faces is a significant contribution to audio-visual communication technology. The holistic facial dynamics modeling and utilization of a diffusion transformer model are particularly noteworthy advancements. Further exploration of VASA-1's potential impact on human-computer interaction in educational settings, particularly its use in AI-driven tutoring with lifelike avatars, would be insightful.

🟨 😴 😡 ❌ 🤮 💩

It's incredible how a single static image and speech audio clip can generate such realistic and expressive interactions. The future of digital communication looks incredibly promising!

🟨 😴 😡 ❌ 🤮 💩

Your article offers a thorough look at its innovative approach to generating lifelike talking faces from a single image and audio. It might be useful to include more details on the ethical safeguards in place to prevent misuse, as this is a crucial aspect of deploying such powerful technology.

🟨 😴 😡 ❌ 🤮 💩

The future directions for VASA-1, including broadening emotional range, full-body dynamics, and multi-lingual capabilities, show the immense potential for this technology. Ensuring ethical considerations are addressed is crucial as it evolves.

🟨 😴 😡 ❌ 🤮 💩

High-resolution videos at 40 frames per second with negligible latency make VASA-1 ideal for real-time applications. It's exciting to see how this technology will improve digital interactions and offer more natural communication experiences.

🟨 😴 😡 ❌ 🤮 💩

This technology is truly groundbreaking! VASA-1's advancements in creating realistic talking faces from minimal input are amazing. Can't wait to see its impact on digital communication and interaction!

🟨 😴 😡 ❌ 🤮 💩

This is impressive! VASA-1’s ability to create lifelike talking faces from just a static image and audio is a major leap forward in digital interaction. I'm excited to see how this technology evolves and reach its potential.

🟨 😴 😡 ❌ 🤮 💩

These types of AI technologies show us far we've come over the past few years in the field Artificial intelligence, I believe this technology can have radical impact in real time communications but I also have my fears, as these types of technologies can be used for malicious purposes as well. So as always technology is a double edged sword, we have to be careful which part we choose to sharpen!

🟨 😴 😡 ❌ 🤮 💩

This article on VASA-1 by Microsoft Research Asia is fascinating! The ability to create lifelike talking faces from just a photo and audio clip feels like something out of a sci-fi movie. It’s exciting to think about the potential for improving communication for people with impairments, enhancing education, and even providing therapeutic support. The technology behind VASA-1, especially its holistic facial dynamics modeling, is truly impressive. Looking forward to seeing how this evolves!

🟨 😴 😡 ❌ 🤮 💩

Great article! VASA-1 creates lifelike talking faces from a single image and audio, revolutionizing digital interactions with impressive real-time realism.

🟨 😴 😡 ❌ 🤮 💩

The misuse for this is going to create a lot of trouble

🟨 😴 😡 ❌ 🤮 💩

It's great to see Microsoft Research Asia addressing ethical considerations alongside technological innovation. VASA-1's development towards broader emotional range and multi-lingual capabilities looks promising for the future

🟨 😴 😡 ❌ 🤮 💩

Interesting read on Microsoft Research Asia's VASA-1 and its capability to generate lifelike talking faces from audio and a static image! The integration of holistic facial dynamics and a Diffusion Transformer model to produce real-time, synchronized talking faces is particularly impressive. I wonder how VASA-1 handles the complexity of emotional nuances across different cultures with such technology. Additionally, in the context of deepfakes, what measures are in place to ensure the ethical use of such advanced capabilities? The potential applications in accessibility, education, and healthcare are promising. Looking forward to seeing how this technology progresses!

🟨 😴 😡 ❌ 🤮 💩

The holistic facial dynamics modeling and diffusion transformer model behind VASA-1 highlight the technological advancements in this field. The potential applications in accessibility and education are particularly exciting!

🟨 😴 😡 ❌ 🤮 💩

Impressive advancements from Microsoft Research Asia with VASA-1! Real-time lifelike talking faces could revolutionize digital communication and accessibility. Can't wait to see this in action! ??

🟨 😴 😡 ❌ 🤮 💩

This is incredible! VASA-1's ability to generate lifelike talking faces from just an image and audio clip is a game-changer for digital communication. Can't wait to see how it revolutionizes accessibility and education! ??

🟨 😴 😡 ❌ 🤮 💩

The way VASA-1 generates lifelike talking faces from a single static image and speech audio clip is truly impressive. The holistic facial dynamics modeling and Diffusion Transformer model really caught my attention.

🟨 😴 😡 ❌ 🤮 💩

I was particularly intrigued by how VASA-1 can enhance accessibility and create more expressive interactions for individuals with communicative impairments. The potential applications in education and healthcare are very promising.

🟨 😴 😡 ❌ 🤮 💩

It's amazing to see how technology can enhance human-computer interactions in such a realistic way. However, I wonder about its practical usefulness and impact compared to other technological innovations.

🟨 😴 😡 ❌ 🤮 💩

The introduction of VASA-1 by Microsoft Research Asia is truly groundbreaking! This innovative framework, capable of generating lifelike talking faces from just a single static image and speech audio clip, signifies a monumental leap in audio-visual synchronization and human-computer interaction. The holistic facial dynamics modeling and Diffusion Transformer model at the heart of VASA-1 showcase a sophisticated approach to producing natural facial expressions and synchronized lip movements. The potential applications of this technology are vast, ranging from enhanced accessibility for individuals with communicative impairments to engaging AI-driven tutoring in educational settings and therapeutic uses in healthcare. The technical prowess of VASA-1, delivering high-resolution, real-time videos, and its future directions towards broadening emotional range and incorporating full-body dynamics, are truly exciting. Kudos to Tesfu Assefa and the Microsoft Research Asia team for pushing the boundaries of digital communication and setting a new standard for lifelike digital animations!

🟨 😴 😡 ❌ 🤮 💩

I read the paper and watched their demo results, man their model is amazing at least better than sad talker and museTalk?

🟨 😴 😡 ❌ 🤮 💩

nice article

🟨 😴 😡 ❌ 🤮 💩

good read.

🟨 😴 😡 ❌ 🤮 💩