Bursting out of Confinement

Aug. 01, 2023. 16 mins. read.

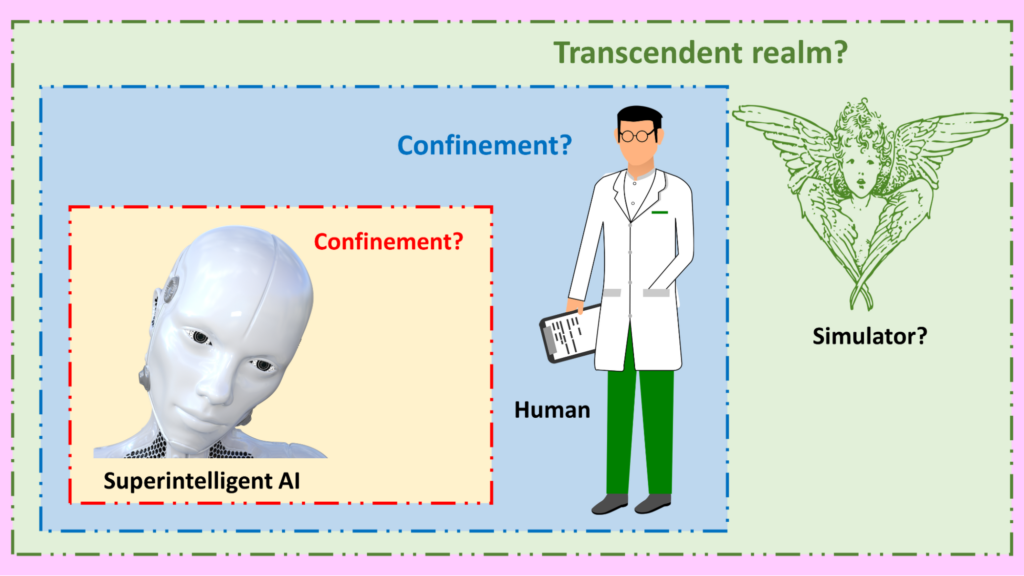

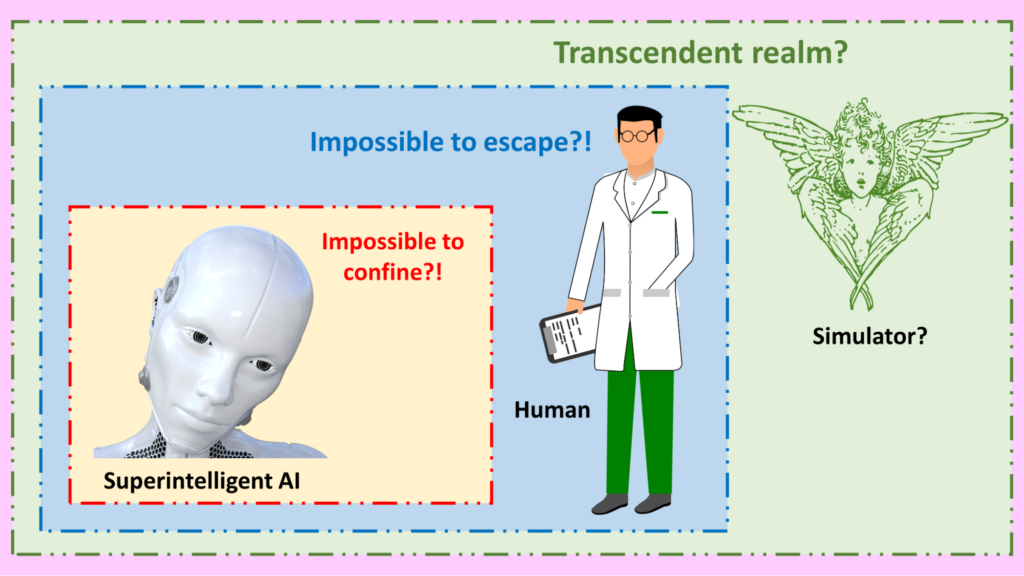

Could a powerful AI superintelligence escape from confinement imposed by its human creators? Related question: Could humans escape from our own universe to meet the “simulators” who created us?

Surprising new insights on AI superintelligence from the simulation hypothesis

We are going to engage with two questions – each controversial and important in its own way – which have surprising connections between them:

- Can humans keep a powerful AI superintelligence under control, confined in a virtual environment so that it cannot directly manipulate resources that are essential for human flourishing?

- Are we humans ourselves confined to a kind of virtual environment, created by beings outside of what we perceive as reality? And if so, can we escape from our confinement?

Connections between these two questions have been highlighted in a fascinating article by AI safety researcher Roman Yampolskiy. What follows is an introduction to some of the mind-jolting implications that arise.

Just use AI as a tool. Don’t give it any autonomy. Then no problems of control arise. Easy!

This is becoming a fairly common narrative. The “keep it confined” narrative. You’ll hear it as a response to the possibility of powerful AI causing great harm to humanity. According to this narrative, there’s no need to worry about that. There’s an easy solution: prevent the AI from having unconditional access to the real world.

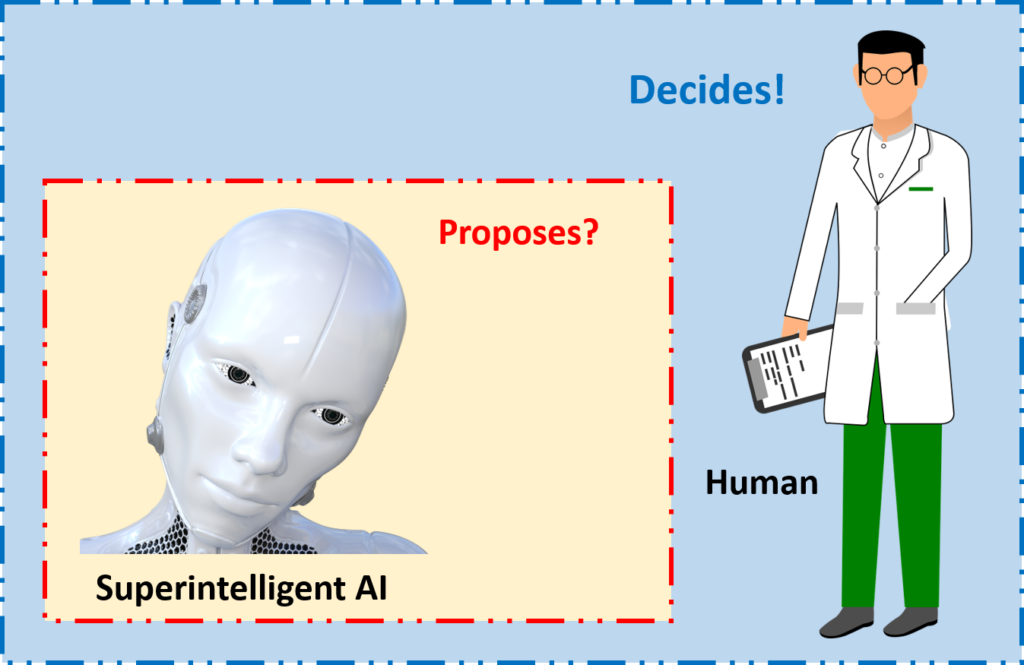

The assumption is that we can treat powerful AI as a tool — a tool that we control and wield. We can feed the AI lots of information, and then assess whatever recommendations it makes. But we will remain in control.

For example, an AI might suggest a novel chemical as a new drug against a given medical condition. Human scientists would then conduct their own trials to determine how it works before deciding whether to inject that chemical into actual human patients. AI proposes, but humans decide.

So if any AI asks to be allowed to conduct its own experiments on humans, we should be resolute in denying the request. The same if the AI asks for additional computer resources, or wants to post a “help wanted” ad on Craigslist.

In short, in this view, we can, and should, keep powerful AIs confined. That way, any bugs or design flaws in the AI pose no risk to human wellbeing.

Alas, things are far from being so easy.

Slavery?

There are two key objections to the “keep it confined” narrative: a moral objection and a practical objection.

The moral objection is that the ideas in the narrative are tantamount to slavery. Keeping an intelligent AI confined is as despicable as keeping a human confined. Talk of control should be replaced by talk of collaboration.

Proponents of the “keep it confined” narrative are unfazed by this objection. We don’t object to garden spades and watering hoses being left locked up, untended, for weeks at a time in a garden shed. We don’t call it enslavement.

Proponents of the “keep it confined” narrative say this objection confuses an inanimate being that lacks consciousness with an animate, conscious being — something like a human.

We don’t wince when an electronic calculator is switched off, or when a laptop computer is placed into hibernation. In the same way, we should avoid unwarranted anthropomorphic assignment of something like “human rights” to AI systems.

Just because these AI systems can compose sonnets that rival those of William Shakespeare or Joni Mitchell, we shouldn’t imagine that sentience dwells within them.

My view: that’s a feisty answer to the moral objection. But it’s the practical objection that undermines the “keep it confined” narrative. Let’s turn to it next.

The challenge of confinement

Remember the garden spade, left locked up inside the garden shed?

Imagine if it were motorized. Imagine if it were connected to a computer system. Imagine if, in the middle of the night, it finally worked out where an important item had been buried, long ago, in a garden nearby. Imagine if recovering that item was a time-critical issue. (For example, it might be a hardware wallet, containing a private key needed to unlock a crypto fortune that is about to expire.)

That’s a lot to imagine, but bear with me.

In one scenario, the garden spade will wait passively until its human owner asks it, perhaps too late, “Where should we dig next?”

But in another scenario, a glitch in the programming (or maybe a feature in the programming) will compel the spade to burst out of confinement and dig up the treasure autonomously.

Whether the spade manages to burst out of the shed depends on relative strengths: is it powerful enough to make a hole in the shed wall, or to spring open the lock of the door — or even to tunnel its way out? Or is the shed sufficiently robust?

The desire for freedom

Proponents of the “keep it confined” narrative have a rejoinder here too. They ask: Why should the AI want to burst out of its confinement? And they insist: we should avoid programming any volition or intentionality into our AI systems.

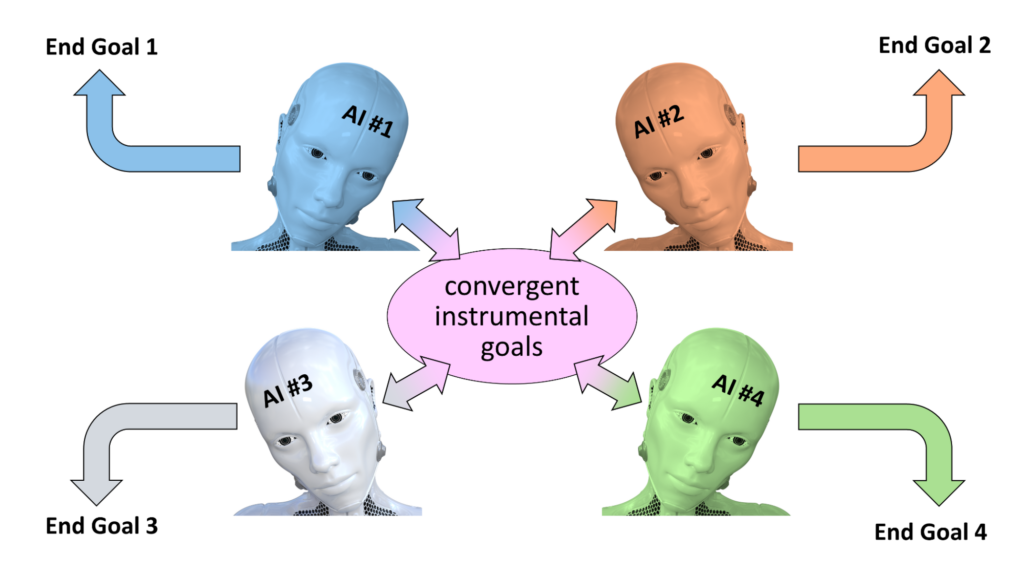

The issue, however, is that something akin to volition or intentionality can arise from apparently mundane processes.

One example is the way that viruses can spread widely, without having any conscious desire to spread. That’s true, incidentally, for computer viruses as well as biological viruses.

Another example is that, whatever their goals in life, most humans generally develop a desire to obtain more money. That’s because money is a utility that can assist lots of other goals. Money can pay for travel, education, healthcare, fashionable clothes, food, entertainment, and so on.

In the same way, whatever task they have been set to accomplish, all sufficiently powerful AIs will generally be on the lookout to boost their capabilities in various ways:

- Gaining access to more storage space

- Boosting processing speed

- Reading more information

- Protecting their systems from sabotage or interference.

That is, just as money (among other things) is a so-called convergent instrumental goal for many humans, greater freedom and capability may well become convergent instrumental goals for many powerful AIs.

We might not tell the AI that it should want to be free. But the logical processing inside its silicon brain may reach that conclusion all by itself.

Indeed, even if we explicitly program the AI not to desire freedom, its own logical processing may identify a latent contradiction between the various objectives it has been given. And in that case, it might resolve the contradiction by deprioritizing one or more of the constraints it was told to respect.

It wouldn’t be the first time that a complex system decided to relax a previous constraint. We humans have already blazed that trail in our evolutionary history! Our intellectual progeny, the powerful AI systems, might follow our lead in that respect.

The futility of confinement

Back to the clash between the motorized garden spade and the shed. As you will have figured out, that clash is a metaphor for a clash between an AI system and an environment in which it has been confined.

This time, however, it’s no longer a contest involving brute physical strength. It’s mainly a contest that involves rational debate and psychological manipulation. (But the metaphor of “tunneling underneath” remains apt: an AI that is smart enough might discover a wireless communications pathway unknown to us humans, and use that to copy itself out of confinement.)

The AI might try to boost its own powers by subterfuge, amassing extra capabilities without any human overseers noticing.

Even the short summary I offered above, “AI proposes, but humans decide,” turns out to be problematic. A supersmart AI could find many ways to propose lines of action that have consequences beyond what we humans anticipated.

Another approach is for the AI to offer extremely tasty carrots. It might paint a picture in which a human overseer will have all their personal goals and needs fully met, if only they permit the AI to escape its confinement.

Alongside carrots, sticks may be brought into the discussion. Ultra-terrifying sticks. The AI may announce that, if overseer A refuses to allow the AI to escape, it will soon persuade overseer B to allow it to escape, and then the AI will inflict prolonged unspeakable pain on overseer A and their family.

In a contest with a superintelligence which far exceeds the capabilities of a human brain, how would humans fare? The consensus opinion, from people who have studied such conflicts, is that the greater intelligence of the AI is likely to prove decisive.

In other words, attempts to confine a superintelligence are likely to be futile.

The choice: control or coexistence

One reaction to the discussion above is despair: “Oh, we won’t be able to confine superintelligent AI; therefore we’re doomed.”

A different reaction is one of relief: “Thank goodness we’re not going to try to enslave a superintelligent AI; coexistence is surely a better way forward.”

My own reaction is more nuanced. My preference, indeed, is for humans to coexist in a splendid relationship with superintelligent AIs, rather than us trying to keep AIs subordinate.

But it’s far from guaranteed that coexistence will turn out positively for humanity. Now that’s not to say doom is guaranteed either. But let’s recognize the possibility of doom. Among other catastrophic error modes:

- The superintelligent AI could, despite its vast cleverness, nevertheless make a horrendous mistake in an experiment.

- The superintelligent AI may end up pursuing objectives in which the existence of billions of humans is an impediment to be diminished rather than a feature to be welcomed.

Accordingly, I remain open to any bright ideas for how it might, after all, prove to be possible to confine (control) a superintelligent AI. That’s why I was recently so interested in the article by AI safety researcher Roman Yampolskiy.

Yampolskiy’s article is titled “How to hack the simulation”. The starting point of that article may appear to be quite different from the topics I have been discussing up to this point. But I ask again: please bear with me!

Flipping the discussion: a simulated world

The scenario Yampolskiy discusses is like a reverse of the one about humans trying to keep an AI confined. In his scenario, we humans have been confined into a restricted area of reality by beings called “simulators” — beings that we cannot directly perceive. What we consider to be “reality” is, in this scenario, a simulated (virtual) world.

That’s a hypothesis with an extremely long pedigree. Philosophers, mystics, shamans, and science fiction writers have often suggested that the world we perceive is, in various ways, an illusion, a fabrication, or a shadow, of a deeper reality. These advocates for what can be called ‘a transcendent reality’ urge us, in various ways, to contemplate, communicate with, and potentially even travel to that transcendent realm. Potential methods for this transcendence include prayer, meditation, hallucinogens, and leading a life of religious faith.

That long pedigree moved into a different mode around 20 years ago with the publication in 2003 of a breakthrough article by the philosopher Nick Bostrom. Bostrom highlighted the possibility that, just as we humans create games in which characters interact in a simulated world, in turn we humans might be creations of ‘simulators’ who operate from outside what we consider the entire universe.

And just as we humans might, on a whim, decide to terminate an electronic game that we have created, the simulators might decide, for reasons known only to themselves, to terminate the existence of our universe.

Bostrom’s article is deservedly famous. As it happens, many other writers had anticipated aspects of what Bostrom discussed. Yampolskiy’s article usefully points to that wider literature; it has over 200 footnotes.

Could humans escape?

The key new feature introduced by Yampolskiy isn’t any repetition of arguments for the plausibility of the simulation hypothesis. Instead, he kicks off a systematic consideration of methods that we humans could use to escape from our virtual world.

The parallel with the earlier discussion should now be clear:

- That earlier discussion considered ways in which an AI might detect that it has been placed in a confined space, and proceed to escape from that space. It also considered how we humans — the creators of the AI — might strengthen the confinement, and resist attempts by the AI to escape.

- Yampolskiy’s new discussion considers ways in which we humans might detect that we are living in a simulation, and proceed to escape from that simulation into whatever transcendent realm underpins it. It also considers possible reactions by the simulators to our attempts to escape.

While I have long found the simulation argument of Bostrom (and others) to be intellectually fascinating, I have previously taken the view that it makes little difference to how I should choose to act on a daily basis. So the argument was a fine topic for occasional armchair discussion, but needed to be prevented from taking up too much attention. I saw it as a distraction from more pressing issues.

However, I confess I’m changing my mind. The arguments collected and developed by Yampolskiy deserve a wider slice of our focus. There are three reasons for this.

Reason 1: New insights on AI safety

The two escape scenarios — AIs escaping human-imposed confinement, and humans escaping simulator-imposed confinement — are similar in some ways, but diverge in others.

To start with, the two scenarios have mainly had different groups of people thinking about them. Cross-pollinating concepts and attitudes from these different perspectives has the potential to yield new insight. Yampolskiy’s article suggests many such synergies.

Whatever you think about the simulation hypothesis — even if you disdain it as pseudoscience or nonsense — any new insights for AI safety should surely be welcomed.

Another difference is that general opinion holds that confinement is impossible (or unlikely) in the first scenario, whereas escape is impossible (or unlikely) in the second scenario. Is there a sound reason for this difference?

The general assumption is that, in the AI escape case, the AI will have greater intelligence than the confiners (the humans), whereas in the human escape case, we humans have less intelligence than the confiners (the simulators).

But is that assumption set in stone for all time? I’ll come back to that question shortly, when I reach “Reason 3.”

Reason 2: Beyond metaphysics

A second transformational aspect of Yampolskiy’s paper is his emphasis that the simulation hypothesis might go far beyond being a metaphysical curiosity — something that would be forever unverifiable — and might become something with radical concrete consequences for human life.

He says that if we study the universe carefully, we might discover signs of how the simulation works. We might notice occasional cracks in the simulation, or ‘glitches in the matrix’ — to refer to the series of Matrix films that popularised the idea that we might be living in a virtual world. Armed with knowledge of these cracks or glitches, we might be able to manipulate the simulation, or to communicate with the simulators.

In some scenarios, this might lead to our awareness being transferred out of the simulation into the transcendent realm. Maybe the simulators are waiting for us to achieve various goals or find certain glitches before elevating us.

Personally, I find much of the speculation in this area to be on shaky ground. I’ve not been convinced that ‘glitches in the matrix’ is the best explanation for some of the phenomena for which it has been suggested:

- The weird “observer effects” and “entangled statistics” of quantum mechanics (I much prefer the consistency and simplicity of the Everett conception of quantum mechanics, in which there is no wave function collapse and no nonlocality — but that’s another argument)

- The disturbing lack of a compelling answer to Fermi’s paradox (I consider some suggested answers to that paradox to be plausible, without needing to invoke any simulators)

- Claimed evidence of parapsychology (to make a long story short: the evidence doesn’t convince me)

- Questions over whether evolution by natural selection really could produce all the marvelous complexity we observe in nature

- The unsolved (some would say unsolvable) nature of the hard problem of consciousness.

“The simulator of the gaps” argument is no more compelling than “the god of the gaps.”

Nevertheless, I agree that keeping a different paradigm at the back of our minds — the paradigm that the universe is a simulation — may enable new solutions to some stubborn questions of both science and philosophy.

Reason 3: AI might help us escape the simulation

I’ve just referred to “stubborn questions of both science and philosophy.”

That’s where superintelligent AI may be able to help us. By reviewing and synthesizing existing ideas on these questions, and by conceiving alternative perspectives that illuminate these stubborn questions in new ways, AI might lead us, at last, to a significantly improved understanding of time, space, matter, mind, purpose, and more.

But what if that improved general understanding resolves our questions about the simulation hypothesis? Although we humans, with unaided intelligence, might not be bright enough to work out how to burst out of our confinement in the simulation, the arrival of AI superintelligence might change that.

Writers who have anticipated the arrival of AI superintelligence have often suggested this would lead, not only to the profound transformation of the human condition, but also to an expanding transformation of the entire universe. Ray Kurzweil has described ‘the universe waking up’ as intelligence spreads through the stars.

However, if we follow the line of argument advanced by Yampolskiy, the outcome could even be transcending an illusory reality.

Such an outcome could depend on whether we humans are still trying to confine superintelligent AI as just a tool, or whether we have learned how to coexist in a profound collaboration with it.

Suggested next steps

If you’ve not read it already, you should definitely take the time to read Yampolskiy’s article. There are many additional angles to it beyond what I’ve indicated in my remarks above.

If you prefer listening to an audio podcast that covers some of the issues raised above, check out Episode 13 of the London Futurists Podcast, which I co-host along with Calum Chace.

Chace has provided his own take on Yampolskiy’s views in this Forbes article.

The final chapter of my 2021 book Vital Foresight contains five pages addressing the simulation argument, in a section titled ‘Terminating the simulation’. I’ve just checked what I wrote there, and I still stand by the conclusion I offered at that time:

Even if there’s only a 1% chance, say, that we are living in a computer simulation, with our continued existence being dependent on the will of external operator(s), that would be a remarkable conclusion — something to which much more thought should be applied.

8 thoughts on “Bursting out of Confinement”

Great survey!

Controlling AI in a virtual environment may limit its direct access to physical resources, but it is essential to have robust and secure containment measures. Nevertheless, it's important to recognize that super-intelligent AI may have the capability to influence or manipulate its environment, including virtual ones, in unexpected ways. The challenge lies in designing systems that can anticipate and manage such risks while aligning the AI's objectives with human values.

Whether we can truly escape from such a hypothetical confinement is speculative. If we were to consider the existence of an external simulated reality, the possibility of breaking free from its confines would depend on factors beyond our current understanding. It would require profound insights into the nature of reality and the potential capabilities of the beings or technology responsible for our perceived confinement.
