“The Singularity” — the anticipated creation of Artificial General Intelligence (AGI) — could be the most important concept in the history of humanity. It’s unfortunate, therefore, that the concept is subject to considerable confusion.

The first confusion with “the Singularity” is that the phrase is used in several different ways. As a result, it’s easy to become distracted.

Four definitions

For example, consider Singularity University (SingU), which has been offering courses since 2008 with themes such as “Harness the power of exponential technology” and “Leverage exponential technologies to solve global grand challenges.”

For SingU, “Singularity” is basically synonymous with the rapid disruption caused when a new technology, such as digital photography, becomes more useful than previous solutions, such as analog photography. What makes these disruptions hard to anticipate is the exponential growth in the capabilities of the technologies involved.

A period of slow growth, in which progress lags behind expectations of enthusiasts, transforms into a period of fast growth, in which most observers complain “why did no one warn us this was coming?”

Human life “irreversibly transformed”

A second usage of the term “the Singularity” moves beyond talk of individual disruptions — singularities in particular areas of life. Instead, it anticipates a disruption in all aspects of human life. Here’s how futurist Ray Kurzweil introduces the term in his 2005 book The Singularity Is Near:

What, then, is the Singularity? It’s a future period during which the pace of technological change will be so rapid, its impact so deep, that human life will be irreversibly transformed… This epoch will transform the concepts that we rely on to give meaning to our lives, from our business models to the cycle of human life, including death itself…

The key idea underlying the impending Singularity is that the pace of change of our human-created technology is accelerating and its powers are expanding at an exponential pace.

The nature of that “irreversible transformation” is clarified in the subtitle of the book: When Humans Transcend Biology. We humans will no longer be primarily biological, aided by technology. After that singularity, we’ll be primarily technological, with, perhaps, some biological aspects.

Superintelligent AIs

A third usage of “the Singularity” foresees a different kind of transformation. Rather than humans being the most intelligent creatures on the planet, we’ll fall into second place behind superintelligent AIs. Just as the fate of species such as gorillas and dolphins currently depends on actions by humans, the fate of humans, after the Singularity, will depend on actions by AIs.

Such a takeover was foreseen as long ago as 1951 by pioneering computer scientist Alan Turing:

My contention is that machines can be constructed which will simulate the behaviour of the human mind very closely…

It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers. There would be no question of the machines dying, and they would be able to converse with each other to sharpen their wits. At some stage therefore we should have to expect the machines to take control.

Finally, consider what was on the mind of Vernor Vinge, a professor of computer science and mathematics, and also the author of a series of well-regarded science fiction novels, when he introduced the term “Singularity” in an essay in Omni in 1983. Vinge was worried about the unforeseeability of future events:

There is a stone wall set across any clear view of our future, and it’s not very far down the road. Something drastic happens to a species when it reaches our stage of evolutionary development — at least, that’s one explanation for why the universe seems so empty of other intelligence. Physical catastrophe (nuclear war, biological pestilence, Malthusian doom) could account for this emptiness, but nothing makes the future of any species so unknowable as technical progress itself…

We are at the point of accelerating the evolution of intelligence itself. The exact means of accomplishing this phenomenon cannot yet be predicted — and is not important. Whether our work is cast in silicon or DNA will have little effect on the ultimate results. The evolution of human intelligence took millions of years. We will devise an equivalent advance in a fraction of that time. We will soon create intelligences greater than our own.

A Singularity that “passes far beyond our understanding”

This is when Vinge introduces his version of the concept of singularity:

When this happens, human history will have reached a kind of singularity, an intellectual transition as impenetrable as the knotted space-time at the centre of a black hole, and the world will pass far beyond our understanding. This singularity, I believe, already haunts a number of science fiction writers. It makes realistic extrapolation to an interstellar future impossible.

If creatures (whether organic or inorganic) attain levels of general intelligence far in excess of present-day humans, what kinds of goals and purposes will occupy these vast brains? It’s unlikely that their motivations will be just the same as our own present goals and purposes. Instead, the immense scale of these new minds will likely prove alien to our comprehension. They might appear as unfathomable to us as human preoccupations appear to the dogs and cats and other animals that observe us from time to time.

AI, AGI, and ASI

Before going further, let’s quickly contrast today’s AI with the envisioned future superintelligence.

Existing AI systems typically have powerful capabilities in narrow contexts, such as route-planning, processing mortgage and loan applications, predicting properties of molecules, playing various games of skill, buying and selling shares, recognizing images, and translating speech.

But in all these cases, the AIs involved have incomplete knowledge of the full complexity of how humans interact in the real world. The AI can fail when the real world introduces factors or situations that were not part of the data set of examples with which the AI was trained.

In contrast, humans in the same circumstance would be able to rely on capacities such as “common sense”, “general knowledge,” and intuition or “gut feel”, to reach a better decision.

An AI with general intelligence

However, a future AGI — an AI with general intelligence — would have as much common sense, intuition, and general knowledge as any human. An AGI would be at least as good as humans at reacting to unexpected developments. That AGI would be able to pursue pre-specified goals as competently as (but much more efficiently than) a human, even in the kind of complex environments which would cause today’s AIs to stumble.

Whatever goal is given to an AGI, it is likely to reason that it can better achieve that goal if it has more resources at its disposal and if its own thinking capabilities are further improved. What happens next may well be as described by I.J. Good, a long-time colleague of Alan Turing:

Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an “intelligence explosion,” and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.

Evolving into artificial superintelligence

In other words, not long after humans manage to create an AGI, the AGI is likely to evolve itself into an ASI – an artificial superintelligence that far exceeds human powers.

In case the idea of an AI redesigning itself without any human involvement seems far-fetched, consider a slightly different possibility: Humans will still be part of that design process, at least in the initial phases. That’s already the case today, when humans use one generation of AI tools to help design a new generation of improved AI tools before going on to repeat the process.

I.J. Good foresaw that too. This is from a lecture he gave at IBM in New York in 1959:

Once a machine is designed that is good enough… it can be put to work designing an even better machine…

There will only be a very short transition period between having no very good machine and having a great many exceedingly good ones.

At this point an “explosion” will clearly occur; all the problems of science and technology will be handed over to machines and it will no longer be necessary for people to work. Whether this will lead to a Utopia or to the extermination of the human race will depend on how the problem is handled by the machine.

Singularity timescales: exponential computational growth

One additional twist to the concept of Singularity needs to be emphasized. It’s not just that, as Vernor Vinge stressed, the consequences of passing the point of Singularity are deeply unpredictable. It’s that the timing of reaching the point of Singularity is inherently unpredictable too. That brings us to what can be called the second confusion with “The Singularity.”

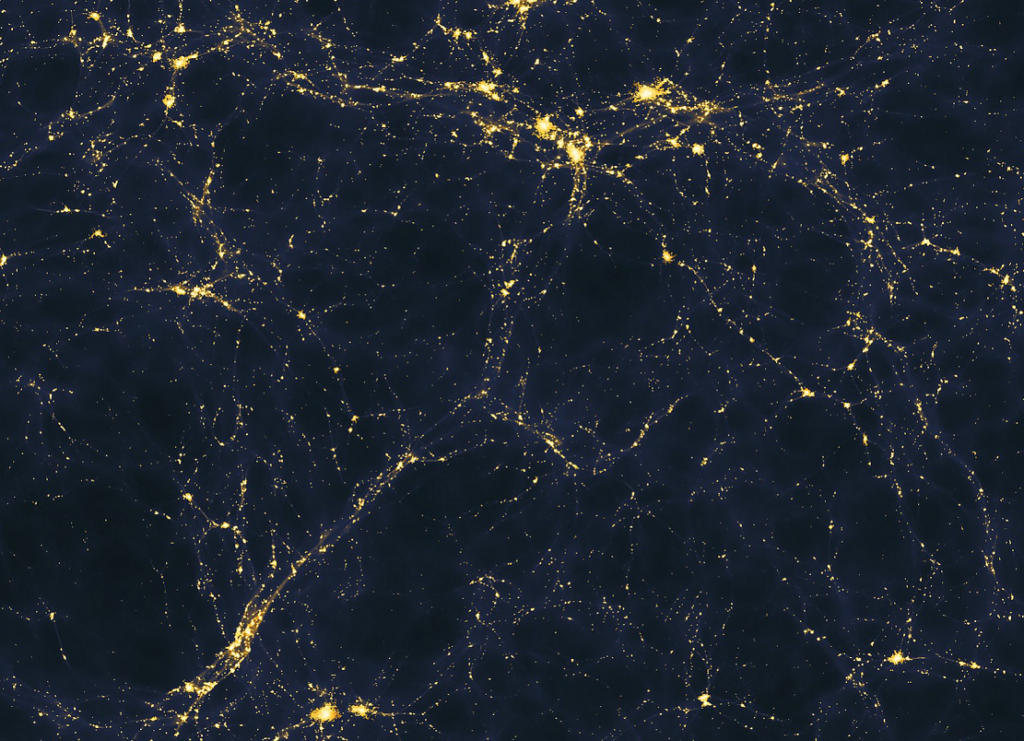

It’s sometimes suggested, contrary to what I just said, that a reasonable estimate of the date of the Singularity can be obtained by extrapolating the growth of the hardware power of computing systems. The idea is to start with an estimate for the computing power of the human brain. That estimate involves the number of neurons in the brain.

Next, consider the number of transistors that are included in the central processing unit of a computer that can be purchased for, say, $1,000. In broad terms, that number has been rising exponentially since the 1960s. This phenomenon is part of what is called “Moore’s Law.”

Extrapolate that trend forward, and it can be argued that such a computer would match, by around 2045, the capability not just of a single human brain, but of all human brains added together.
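
The arithmetic behind this kind of extrapolation is simple, which is part of its appeal. The Python sketch below is only an illustration: the function name `years_to_reach`, the millionfold gap, and the candidate doubling periods are all assumptions chosen for the example, not measurements of brains or chips.

```python
import math


def years_to_reach(start: float, target: float, doubling_years: float) -> float:
    """Years needed for a quantity that doubles every `doubling_years`
    to grow from `start` to `target`."""
    if start <= 0 or target <= 0 or doubling_years <= 0:
        raise ValueError("all arguments must be positive")
    # Number of doublings required, multiplied by the time each one takes.
    return doubling_years * math.log2(target / start)


# Hypothetical gap: the target is one million times today's capacity.
gap = 1_000_000
for doubling_years in (1.5, 2.0, 3.0):  # assumed doubling periods, in years
    years = years_to_reach(1.0, gap, doubling_years)
    print(f"doubling every {doubling_years} years: about {years:.0f} years")
```

Because the answer scales directly with the assumed doubling period, a modest change in that one input moves the projected date by decades.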

This argument is useful to raise public awareness of the possibility of the Singularity. But there are four flaws with using this line of thinking for any detailed forecasting:

- Individual transistors are still becoming smaller, but the rate of shrinkage has slowed down in recent years.

- The power of a computing system depends, critically, not just on its hardware, but on its software. Breakthroughs in software defy any simple exponential curve.

- Sometimes a single breakthrough in technology will unleash much wider progress than was expected. Consider the breakthroughs of deep learning neural networks, c. 2012.

- Ongoing technological progress depends on society as a whole supplying a sufficiently stable and supportive environment. That’s something else which can vary unpredictably.

A statistical estimate

Instead of pointing to any individual date and giving a firm prediction that the Singularity will definitely have arrived by then, it’s far preferable to give a statistical estimate of the likelihood of the Singularity arriving by that date. However, given the uncertainties involved, even these estimates are fraught with difficulty.
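
One way to picture such a statistical estimate is as a cumulative probability over time rather than a single date. The sketch below assumes, purely for illustration, a constant chance of arrival in each year; the function name `probability_within` and the two annual rates are hypothetical, and nothing here is a forecast.

```python
def probability_within(years: int, annual_chance: float) -> float:
    """Probability that an event occurs within `years` years,
    assuming the same independent chance of it occurring each year."""
    if years < 0:
        raise ValueError("years must be non-negative")
    if not 0.0 <= annual_chance <= 1.0:
        raise ValueError("annual_chance must be between 0 and 1")
    # Complement of the event failing to occur in every one of the years.
    return 1.0 - (1.0 - annual_chance) ** years


# Two hypothetical annual chances, chosen only to show how far the answers diverge.
for annual_chance in (0.01, 0.05):
    for horizon in (10, 25, 50):
        p = probability_within(horizon, annual_chance)
        print(f"annual chance {annual_chance:.0%}, within {horizon} years: {p:.0%}")
```

Even this toy model shows the difficulty: the two assumed rates give very different answers at every horizon, and the real uncertainty concerns the rate itself.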

The biggest uncertainty is in estimating how close we are to understanding the way common sense and general knowledge arise in the human brain. Some observers suggest that we might need a dozen conceptual breakthroughs before we have a comprehension sufficient to duplicate that model in silicon and software. But it’s also possible that a single conceptual leap will solve all these purportedly different problems.

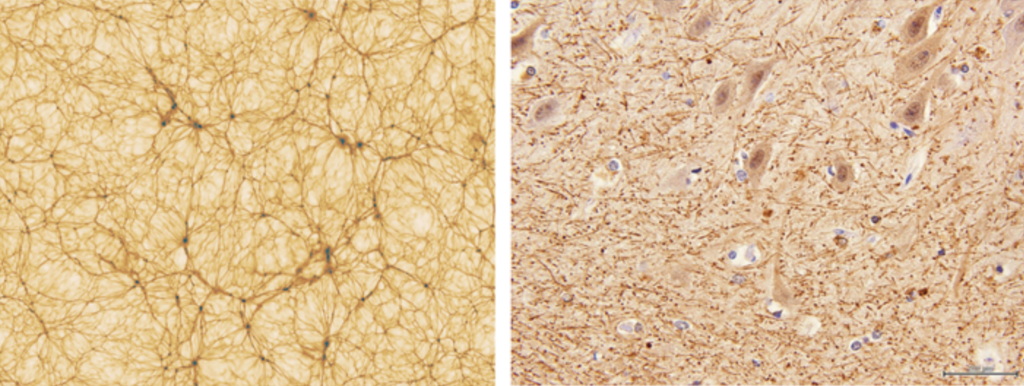

Yet another possibility should give us pause. An AI might reach (and then exceed) AGI level even without humans understanding how it operates, or how general intelligence operates inside the human brain. Multiple recombinations of existing software and hardware modules might result in the unforeseen emergence of an overall network intelligence that far exceeds the capabilities of the individual constituent modules.

Schooling the Singularity

Even though we cannot be sure what direction an ASI will take, or on what timescale the Singularity will burst upon us, can we at least provide a framework to constrain the likely behavior of such an ASI?

The best that can probably be said in response to this question is: “it’s going to be hard!”

As a human analogy, many parents have been surprised — even dumbfounded — by choices made by their children, as these children gain access to new ideas and opportunities.

Introducing the ASI

Humanity’s collective child — ASI — might surprise and dumbfound us in the same way. Nevertheless, if we get the schooling right, we can help bias that development process — the “intelligence explosion” described by I.J. Good — in ways that are more likely to align with profound human wellbeing.

That schooling aims to hardwire deep into the ASI, as a kind of “prime directive,” principles of beneficence toward humans. If the ASI were on the point of reaching a particular misanthropic decision (for example, to shrink the human population on account of humanity’s deleterious effects on the environment), that decision would be overridden by the prime directive.

The difficulty here is that if you line up lots of different philosophers, poets, theologians, politicians, and engineers, and ask them what it means to behave with beneficence toward humans, you’ll hear lots of divergent answers. Programming a sense of beneficence is at least as hard as programming a sense of beauty or truth.

But just because it’s hard, that’s no reason to abandon the task. Indeed, clarifying the meaning of beneficence could be the most important project of our present time.

Tripwires and canary signals

Here’s another analogy: accumulating many modules of AI intelligence together, in a network relationship, is similar to accumulating nuclear fissile material together. Before the material reaches a critical mass, it still needs to be treated with respect, on account of the radiation it emits. But once a critical mass point is reached, a cascading reaction results — a nuclear meltdown or, even worse, a nuclear holocaust.

The point here is not to risk any accidental encroachment upon the critical mass, which would convert the nuclear material from hazardous to catastrophic. Accordingly, anyone working with such material needs to be thoroughly trained in the principles of nuclear safety.

With an accumulation of AI modules, things are by no means so clear. Whether that accumulation could kick-start an explosive phase transition depends on lots of issues that we currently only understand dimly.

However, one thing we can and should insist upon is that everyone involved in the creation of enhanced AI systems pay attention to potential “tripwires.” Any change in configuration or any new addition to the network should be evaluated, ahead of time, for possible explosive consequences. Moreover, the system should in any case be monitored continuously for any canary signals that such a phase transition is becoming imminent.
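
As a rough illustration of what continuous monitoring could look like in software, the sketch below checks a set of readings against predefined limits. Everything in it is hypothetical: the `Tripwire` class, the `tripped` function, and the signal names are invented for the example, and choosing which signals and limits actually matter is the unsolved part.

```python
from dataclasses import dataclass
from typing import Iterable, List, Mapping


@dataclass(frozen=True)
class Tripwire:
    name: str         # hypothetical signal name
    threshold: float  # hypothetical limit for that signal


def tripped(tripwires: Iterable[Tripwire], readings: Mapping[str, float]) -> List[str]:
    """Return the names of tripwires whose latest reading meets or exceeds
    its threshold. A missing reading also counts as tripped (fail closed)."""
    alerts = []
    for wire in tripwires:
        value = readings.get(wire.name)
        if value is None or value >= wire.threshold:
            alerts.append(wire.name)
    return alerts


# Made-up signals and readings, for illustration only.
wires = [Tripwire("signal_a", 0.8), Tripwire("signal_b", 100.0)]
print(tripped(wires, {"signal_a": 0.9}))
```

Treating a missing reading as tripped is a deliberate fail-closed choice in this sketch: a monitor that goes silent should raise concern rather than pass unnoticed.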

Again, this is a hard task, since there are many different opinions as to which kind of canary signals are meaningful, and which are distractions.

Concluding thoughts

The concept of the Singularity poses problems, in part because of some unfortunate confusion that surrounds this idea, but also because the true problems of the Singularity have no easy answers:

- What are good canary signals that AI systems could be about to reach AGI level?

- How could a “prime directive” be programmed sufficiently deeply into an AI system that it will be maintained, even as that system reaches AGI level and then ASI level, rewriting its own code in the process?

- What should that prime directive include, going beyond vague, unprogrammable platitudes such as “act with benevolence toward humans”?

- How can safety checks and vigilant monitoring be introduced to AI systems without unnecessarily slowing the progress of these systems toward producing solutions of undoubted value to humans (such as solutions to diseases and climate change)?

- Could limits be put into an AGI system that would prevent it from self-improving to ASI levels of intelligence far beyond those of humans?

- To what extent can humans take advantage of new technology to upgrade our own intelligence so that it keeps up with the intelligence of any pure-silicon ASI, and therefore avoids the situation of humans being left far behind ASIs?

However, the first part of solving a set of problems is a clear definition of these problems. With that done, there are opportunities for collaboration among many different people — and many different teams — to identify and implement solutions.

What’s more, today’s AI systems can be deployed to help human researchers find solutions to these issues. Not for the first time, therefore, one generation of a technological tool will play a critical role in the safe development of the next generation of technology.

