Substrate-independent Computation

Jan. 13, 2023.

When asked to think about the origins of computation, we might imagine Babbage, Lovelace, or von Neumann. It may come as a surprise, however, that computation has always been with us, long before vacuum tubes and transistors: it is at least as old as the Earth.

Even a humble bucket of water, when set oscillating, can function as a perceptron able to distinguish a one from a zero. Differences in surface tension between interacting fluids (the Marangoni effect) can be harnessed to find the optimal path through a maze: the shortest route between two different chemicals.
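The maze-solving behavior can be caricatured in a few lines. This is an illustration, not a fluid simulation. A breadth-first flood from the chemical source stands in for the diffusing concentration gradient, and the "droplet" keeps stepping to the neighboring cell where the concentration is highest, which is the cell closest to the source. The maze layout and the names `concentration_field` and `droplet_path` are hypothetical.

```python
from collections import deque
from typing import Dict, List, Tuple

Cell = Tuple[int, int]
STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))

def concentration_field(maze: List[str], source: Cell) -> Dict[Cell, int]:
    """Breadth-first flood from the source: each open cell gets its step distance
    to the source, a stand-in for a diffusing concentration gradient."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        r, c = queue.popleft()
        for dr, dc in STEPS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < len(maze) and 0 <= nc < len(maze[nr])
                    and maze[nr][nc] != "#" and (nr, nc) not in dist):
                dist[(nr, nc)] = dist[(r, c)] + 1
                queue.append((nr, nc))
    return dist

def droplet_path(maze: List[str], start: Cell, source: Cell) -> List[Cell]:
    """Greedy gradient following: always move to the open neighbor nearest the source."""
    dist = concentration_field(maze, source)
    if start not in dist:
        return []  # the source cannot be reached from the start
    path = [start]
    while path[-1] != source:
        r, c = path[-1]
        options = [(r + dr, c + dc) for dr, dc in STEPS if (r + dr, c + dc) in dist]
        path.append(min(options, key=lambda cell: dist[cell]))
    return path

# Hypothetical maze: '#' is a wall, S is the droplet, C is the chemical source.
MAZE = [
    "S.#...",
    ".##.#.",
    "......",
    "#.##.C",
]
path = droplet_path(MAZE, start=(0, 0), source=(3, 5))
print(len(path) - 1, "moves:", path)
```

Because the flood assigns true shortest distances, purely local downhill steps are enough to recover a shortest route (8 moves for this maze); the dead end at the top left is never entered.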

In biology, tiny single-celled organisms can use a microtubule-based finite state machine to compute how to walk.
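A finite state machine is just a set of states plus a rule for which state follows which input. The sketch below is a generic illustration: the four gait states and the `go`/`pause` signals are hypothetical placeholders, not the states reported for any real organism.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical gait states for one leg-like appendage, cycled in a fixed order.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("planted", "go"): "power_stroke",
    ("power_stroke", "go"): "lifted",
    ("lifted", "go"): "return_stroke",
    ("return_stroke", "go"): "planted",
}

def step(state: str, signal: str) -> str:
    """Return the next state; a 'pause' signal leaves the state unchanged."""
    if signal == "pause":
        return state
    if (state, signal) not in TRANSITIONS:
        raise ValueError(f"no transition from {state!r} on {signal!r}")
    return TRANSITIONS[(state, signal)]

def run(start: str, signals: Iterable[str]) -> List[str]:
    """Feed a sequence of signals through the machine and record every state visited."""
    states = [start]
    for signal in signals:
        states.append(step(states[-1], signal))
    return states

print(run("planted", ["go", "go", "pause", "go", "go"]))
# ['planted', 'power_stroke', 'lifted', 'lifted', 'return_stroke', 'planted']
```

Each new state depends only on the current state and the incoming signal, so the machine needs no memory beyond "where am I in the cycle".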

It’s even possible to use glass or crystals (perhaps even ice crystals) as basic neural networks. These could be enough to classify classic machine-learning datasets, such as MNIST (handwritten digits).
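The bucket experiment is usually described as reservoir computing: the disturbed water acts as a fixed, untrained nonlinear medium, and only a simple perceptron that reads its surface is trained. The glass and crystal examples likewise let a physical medium do most of the work. The sketch below is a software caricature under those assumptions. A random `tanh` layer stands in for the physical medium, and the names, sizes, and seed are hypothetical. It learns XOR, which a perceptron on the raw inputs cannot.

```python
import math
import random
from typing import Callable, List, Sequence

def make_reservoir(n_inputs: int, n_units: int, seed: int = 0) -> Callable[[Sequence[float]], List[float]]:
    """A fixed random nonlinear 'medium'. Its weights are never trained."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-2.0, 2.0) for _ in range(n_inputs)] for _ in range(n_units)]
    biases = [rng.uniform(-1.0, 1.0) for _ in range(n_units)]

    def respond(x: Sequence[float]) -> List[float]:
        # Each unit is tanh of a random weighted sum; the trailing 1.0 is a bias input for the readout.
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(weights, biases)] + [1.0]
    return respond

def predict(w: List[float], features: List[float]) -> int:
    return 1 if sum(wi * fi for wi, fi in zip(w, features)) > 0 else 0

def train_readout(features: List[List[float]], labels: List[int], epochs: int = 50000) -> List[float]:
    """Classic perceptron learning rule, applied only to the readout weights."""
    w = [0.0] * len(features[0])
    for _ in range(epochs):
        mistakes = 0
        for f, y in zip(features, labels):
            if predict(w, f) != y:
                mistakes += 1
                sign = 1.0 if y == 1 else -1.0
                w = [wi + sign * fi for wi, fi in zip(w, f)]
        if mistakes == 0:
            break  # every example is classified correctly
    return w

# XOR: not linearly separable in the raw inputs, but separable in the reservoir's responses.
reservoir = make_reservoir(n_inputs=2, n_units=30, seed=1)
inputs = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
labels = [0, 1, 1, 0]
features = [reservoir(x) for x in inputs]
w = train_readout(features, labels)
print([predict(w, f) for f in features])
```

Only the final weighted sum is learned; all of the nonlinear "thinking" is done by a medium that nobody trained.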

So computation does not require computers. Physical matter in the right configuration is enough. Our universe is teeming with computation at every level.

In another example, an electrode passes current into a petri dish of mineral oil in which metal balls are suspended. The current draws the balls together into tendrils in a self-organized fashion, as they arrange themselves to achieve the greatest possible energy throughput.

Similar patterns show up in many places in nature: in biology, geography, and electrophysics. These shapes appear because systems evolve toward maximal energy throughput (the amount of energy flowing through the system per unit time per unit mass). The cosmologist Eric Chaisson named this quantity “energy rate density.”
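Energy rate density is simply power divided by mass. Here is a minimal sketch, assuming Chaisson's customary units of erg per second per gram. The solar inputs are the standard luminosity (3.828 × 10^26 W) and mass (1.989 × 10^30 kg). The human inputs (about 100 W of metabolism for a 70 kg body) are rough round-number assumptions.

```python
ERG_PER_JOULE = 1e7
GRAMS_PER_KG = 1e3

def energy_rate_density(power_watts: float, mass_kg: float) -> float:
    """Energy flowing through a system per unit time per unit mass, in erg s^-1 g^-1."""
    return power_watts * ERG_PER_JOULE / (mass_kg * GRAMS_PER_KG)

sun = energy_rate_density(3.828e26, 1.989e30)   # solar luminosity (W), solar mass (kg)
human = energy_rate_density(100.0, 70.0)        # assumed ~100 W metabolism, 70 kg body
print(f"Sun:   {sun:.2f} erg/s/g")
print(f"Human: {human:.3g} erg/s/g")
```

The Sun comes out near 2 erg s^-1 g^-1 and a human body above 10^4. Per gram, that is roughly four orders of magnitude more energy flow in living matter than in stellar matter.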

Underlying principles have been postulated to govern these kinds of phenomena. Described as “constructal laws,” they are proposed to explain why a river tributary, a lung, a tree, and a lightning strike share the same branching pattern, optimized for energy flow.

Life: a pocket of warmth

Entropy, loosely speaking, measures how far things have drifted toward equilibrium, the state in which warm things have cooled to match their surroundings. Negative entropy describes a pocket of warmth that actively resists being cooled.

One may describe life as a pocket of warmth that resists a cold universe by taking energy into itself and radiating it out again. This process of taking energy in and radiating it away is called “dissipation.”
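The "pocket of warmth" picture can be made concrete with a toy heat-diffusion model, in which every parameter is hypothetical. A one-dimensional rod loses heat through ends held at zero, standing in for the cold universe. An optional constant energy input to the middle cell plays the role of the energy a living system takes in.

```python
from typing import List

def simulate_rod(n_cells: int = 21, steps: int = 5000, alpha: float = 0.25,
                 source: float = 0.0, initial: float = 1.0) -> List[float]:
    """Explicit finite-difference heat diffusion along a 1-D rod.

    Both ends are held at temperature 0 (the 'cold universe'). If `source` > 0,
    that much energy is added to the middle cell every step. alpha <= 0.5 keeps
    this explicit scheme numerically stable.
    """
    temps = [0.0] + [initial] * n_cells + [0.0]   # fixed cold boundary cells at each end
    middle = len(temps) // 2
    for _ in range(steps):
        new = temps[:]
        for i in range(1, len(temps) - 1):
            new[i] = temps[i] + alpha * (temps[i - 1] - 2.0 * temps[i] + temps[i + 1])
        new[middle] += source
        temps = new
    return temps

cold = simulate_rod(source=0.0)                # no energy input: the rod cools off
warm = simulate_rod(source=0.1, initial=0.0)   # steady input: a warm pocket persists
print(f"peak without input: {max(cold):.2e}")
print(f"middle with input:  {warm[len(warm) // 2]:.3f}")
```

Without input, the rod relaxes to the temperature of its surroundings. With input, its middle settles near 2.2, where the energy taken in exactly balances the heat dissipated through the ends. The warm pocket lasts only as long as energy keeps flowing through it.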

The universe tries to make us all cold and to pull everything apart, diffusing it. Life is a pocket where that does not happen, a process found at the delicate balancing point between something purely static and something very diffuse and disorganized: a point of metastability.

In this liminal space, it’s possible to maintain a pocket of negative entropy, or negentropy. Like the metal balls, systems are constantly evolving, getting better at keeping things warm. Chemical, physical, and biological systems, and perhaps even technological and symbolic ones, all develop toward maximal negentropy.

Entropy maximization to predict the future

Harvard University researcher Alexander Wissner-Gross takes this negentropy maximization principle further, into intelligence itself. He describes what he calls a “causal entropic force,” proposing that systems evolve to optimize for the greatest number of future paths, that is, the largest possible number of options in their future.

He has applied this principle to build AI systems that try to keep as many options open as possible.

For example, in the game Hacky Sack, if you miss the ball, play simply ends. Such AI systems try to avoid that kind of closed state, allowing play to continue for a potentially unlimited length.
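A toy version of this option-preserving behavior can be sketched by counting how many distinct states remain reachable after each candidate move. This is a deliberate simplification: the causal entropic force is defined in terms of the entropy of future paths, whereas this sketch merely counts reachable states. The one-dimensional world, the absorbing "dropped ball" state, and the horizon are hypothetical.

```python
from functools import lru_cache

DROP = 0        # hypothetical absorbing state: the ball has hit the ground, play is over
TOP = 10        # hypothetical wall at the other end of a one-dimensional world
ACTIONS = (-1, 0, 1)

def step(pos: int, action: int) -> int:
    """Move one cell; once the ball is dropped nothing else can happen."""
    if pos == DROP:
        return DROP
    return min(TOP, max(DROP, pos + action))

@lru_cache(maxsize=None)
def reachable(pos: int, horizon: int) -> frozenset:
    """Every state reachable from `pos` in at most `horizon` moves (including `pos`)."""
    states = {pos}
    if horizon > 0:
        for action in ACTIONS:
            states |= reachable(step(pos, action), horizon - 1)
    return frozenset(states)

def choose_action(pos: int, horizon: int = 3) -> int:
    """Pick the move that keeps the most future states open (ties go to the first action)."""
    return max(ACTIONS, key=lambda action: len(reachable(step(pos, action), horizon)))

print(choose_action(1))    # 1: moves away from the drop
print(choose_action(10))   # -1: moves away from the wall
```

Nothing in the agent says "don't drop the ball". It avoids the closed state only because that state leaves exactly one future open.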

This principle can even be applied to the networks that connect human beings. Relationships suffer entropy, like everything else, so we must constantly invest some energy to maintain them. If we let a relationship dwindle by not investing energy in it, we may lose opportunities.

Generally, destroying relationships or the life or health of others is not ethically preferable. Usually, conquering, looting, and pillaging only work once. Harming others precludes sustainable opportunities, which may be preserved by cooperation.

Instead, striving to preserve optionality can serve as a model of ethics: following rules that keep an unlimited range of outcomes possible.

Intelligence as prediction

One can model all of these problems as preserving the greatest number of future paths while avoiding paths with few or no options. Researchers Alexander Wissner-Gross and Cameron Freer posit that entropy maximization is an intelligence process that allows entities or agents to aim toward a future with the highest throughput of energy.

You can model intelligence itself as a process of predicting an expected utility and working back from there. In this view, intelligence arises as an emergent property of the entropy-maximization process. An agent would therefore try to control as much of its environment as possible by making predictions and feeding those probabilities into its planning mechanisms.
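Predicting an expected utility and working back from there is essentially backward induction over a decision tree. In this minimal sketch, the tree, its probabilities, and its utilities are hypothetical. Chance nodes average their outcomes weighted by probability, decision nodes take the best option, and values are computed from the leaves back toward the root.

```python
from typing import Tuple, Union

# A node is a terminal utility, ("choose", {action: child}) or ("chance", [(probability, child), ...]).
Node = Union[float, Tuple[str, object]]

def value(node: Node) -> float:
    """Expected utility of a node, computed from the leaves back toward the root."""
    if isinstance(node, (int, float)):
        return float(node)
    kind, children = node
    if kind == "choose":
        return max(value(child) for child in children.values())
    if kind == "chance":
        return sum(p * value(child) for p, child in children)
    raise ValueError(f"unknown node type: {kind!r}")

def best_action(node: Node) -> str:
    """The action at a decision node whose subtree has the highest expected utility."""
    kind, children = node
    if kind != "choose":
        raise ValueError("best_action expects a decision node")
    return max(children, key=lambda action: value(children[action]))

# Hypothetical decision: a sure payoff now, or a gamble that keeps a later option open.
TREE = ("choose", {
    "safe": 4.0,
    "risky": ("chance", [
        (0.5, ("choose", {"stop": 10.0,
                          "continue": ("chance", [(0.5, 30.0), (0.5, 0.0)])})),
        (0.5, 0.0),
    ]),
})
print(best_action(TREE), value(TREE))   # risky 7.5
```

The gamble wins (7.5 versus 4.0) partly because it preserves a later choice, a small echo of the option-preserving idea above.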

Consciousness everywhere

Synchronization of oscillators is found at scales ranging from biological cells to human minds. Neuroscientists have recently reported in the journal Neuroscience of Consciousness that people appear to synchronize their neural rhythms with those of other people, a finding that could upend our current models of consciousness.
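The standard textbook model of this kind of synchronization is the Kuramoto model. Each oscillator has its own natural frequency and is nudged toward the average phase of the group. The sketch below illustrates that model, not the neuroscience study. The population size, coupling strengths, frequency spread, and time step are hypothetical. The function returns the order parameter r, which is near 0 for scattered phases and near 1 for synchrony.

```python
import cmath
import math
import random

def kuramoto_order(n: int = 50, coupling: float = 2.0, freq_spread: float = 0.2,
                   dt: float = 0.05, steps: int = 2000, seed: int = 0) -> float:
    """Simulate the mean-field Kuramoto model with forward Euler steps and return the
    final order parameter r (near 0: scattered phases, near 1: synchrony)."""
    rng = random.Random(seed)
    omega = [rng.gauss(0.0, freq_spread) for _ in range(n)]      # natural frequencies
    theta = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)]  # random initial phases
    for _ in range(steps):
        mean_field = sum(cmath.exp(1j * t) for t in theta) / n   # r * exp(i * psi)
        r, psi = abs(mean_field), cmath.phase(mean_field)
        # d(theta_i)/dt = omega_i + K * r * sin(psi - theta_i)
        theta = [t + dt * (w + coupling * r * math.sin(psi - t))
                 for t, w in zip(theta, omega)]
    return abs(sum(cmath.exp(1j * t) for t in theta) / n)

print("uncoupled:", round(kuramoto_order(coupling=0.0), 2))
print("coupled:  ", round(kuramoto_order(coupling=2.0), 2))
```

With no coupling, the phases stay scattered. Once the coupling is strong relative to the spread of natural frequencies, the oscillators lock together even though each still "prefers" its own rhythm.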

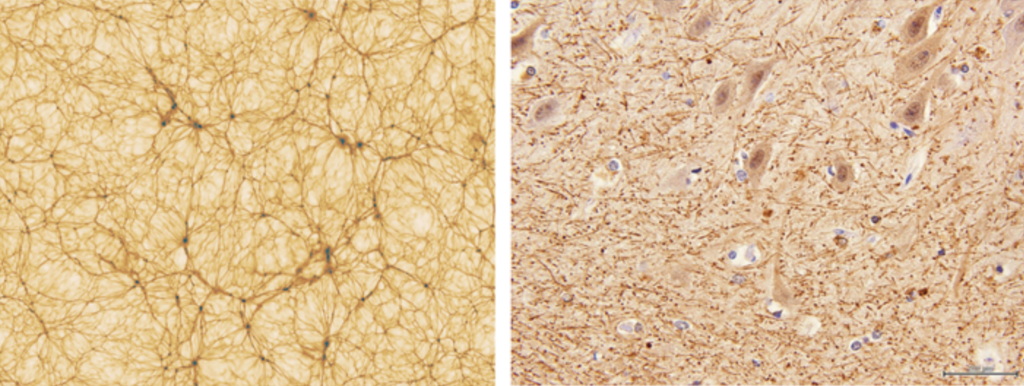

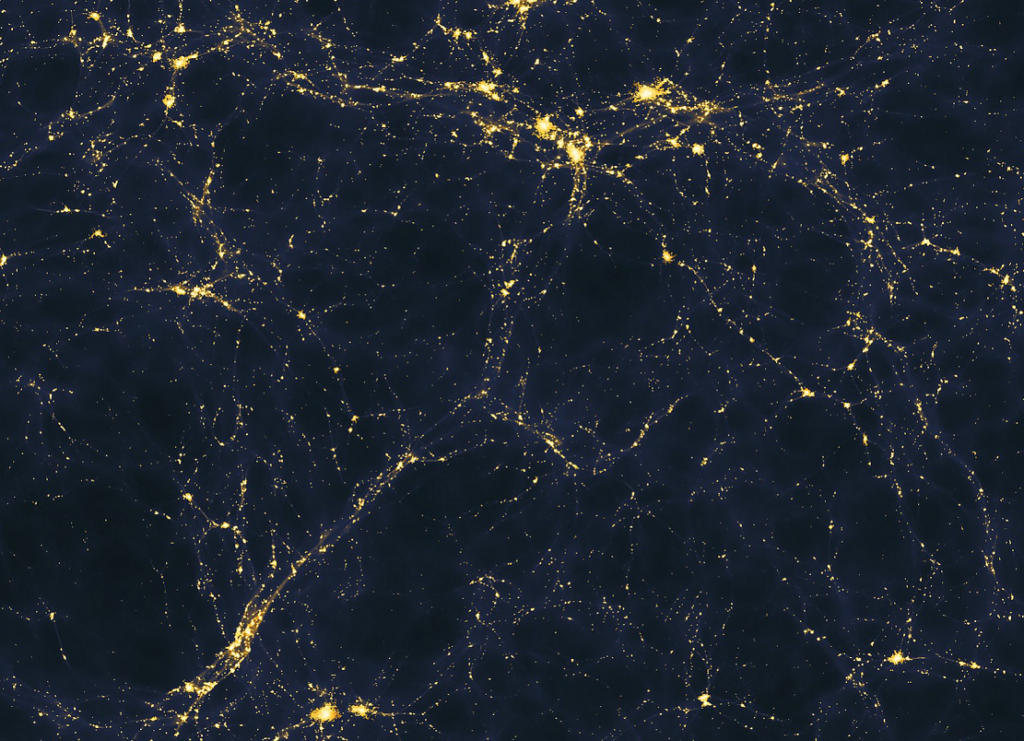

These constructal laws of entropy maximization may even be seen in similarities between networks of neuronal cells in the human brain and dark-matter filaments between galaxies, according to astrophysicist Franco Vazza of the Radio Astronomy Institute in Bologna, Italy, and neuroscientist Alberto Feletti of Azienda Ospedaliero-Universitaria di Modena, Italy.

They have compared the complexity of neuronal networks and galaxy networks. “The first results from our comparison are truly surprising,” they report in Nautilus.

“The universe may be self-similar across scales that differ in size by a factor of a billion billion billion,” they found. “The total number of neurons in the human brain falls in the same ballpark as the number of galaxies in the observable universe.”
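For scale, the factor quoted is a billion multiplied by itself three times, a one followed by 27 zeros:

$$
10^{9} \times 10^{9} \times 10^{9} = 10^{27}
$$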

Other similarities may even include the large-scale common spin of orbiting moons, binary star systems, and cosmic-web filaments in the early universe, which have been observed synchronizing in ways similar to biofilms, beehives, and brains.

Spiral galaxies have revealed a large-scale spin in the early universe (credit: NASA, ESA, and the Hubble SM4 ERO Team)

Researchers used three of the world’s most powerful observatories (the Sloan Digital Sky Survey, the Panoramic Survey Telescope and Rapid Response System, and the Hubble Space Telescope) to determine the spin direction of more than 200,000 objects across the sky.

Astronomers have also found galaxies that are coherently linked through “spooky action at a distance” in an “odd sympathy” (like the two pendulum clocks Christiaan Huygens observed swinging in synchrony), connected by a vast network called the “cosmic web.”

Also, star formation in dwarf galaxies occurs at the moment astrophysical jets are released, yet in areas outside the path of those jets. That suggests indirect but instantaneous connections between phenomena across vast distances.

Another explanation for this “mysterious coherence” is based on the rotational direction of a galaxy, which “tends to be coherent with the average motion of its nearby neighbor.”

These observations demonstrate that space cannot be as empty as we commonly believe. Some force, structure, intergalactic medium, gravitational ripples, spacetime frame, or matter, finely distributed, must link these massive distant entities, and vibrations transmitted through this force lead them to become coherent over time.

So, regardless of the medium, entropy maximization appears to drive self-organization.

All energetic objects in the universe are dissipative to some degree. The first stars formed roughly 200 million years into the lifetime of the universe, as thermodynamic negentropy engines. More sophisticated negentropy engines (which we call “life”) have evolved since.

Such processes can arise spontaneously in nature: through oscillating flows among concentrations and diffusions of amino acids or ribozymes, in sun-drenched pockets of warm brackish water, or through diffusive media such as refractive ice.

Such natural computation may be the origin of a “spark of life,” occurring amid abundant organic matter and salty ice whose invariant crystalline forms bootstrap self-replication processes within RNA and phospholipid protocells.

Natural selection at the level of species, or even of constants, can be modeled simply as a glacial form of “back-propagation” (or, more precisely, as a different yet comparable process of backward-flowing optimization), reaching into the potential future and trying to find the optimal next step.
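The analogy can be illustrated on a toy problem, with one caveat: evolution computes no gradients. Back-propagation supplies exact gradients for gradient descent to follow, whereas selection only keeps random variants that happen to score better. The sketch below runs both on the same one-dimensional loss, using a (1+1) evolution strategy as a stand-in for natural selection. The loss function, step sizes, and names are hypothetical.

```python
import random

def loss(x: float) -> float:
    """Hypothetical one-dimensional loss with its minimum at x = 3."""
    return (x - 3.0) ** 2

def gradient_descent(x: float = 0.0, lr: float = 0.1, steps: int = 200) -> float:
    """Follow the exact gradient, d(loss)/dx = 2(x - 3), as back-propagation would supply it."""
    for _ in range(steps):
        x -= lr * 2.0 * (x - 3.0)
    return x

def mutate_and_select(x: float = 0.0, sigma: float = 0.1,
                      generations: int = 2000, seed: int = 0) -> float:
    """(1+1) evolution strategy: propose a random variant, keep it only if it is fitter."""
    rng = random.Random(seed)
    for _ in range(generations):
        child = x + rng.gauss(0.0, sigma)
        if loss(child) < loss(x):
            x = child
    return x

print("gradient descent: ", gradient_descent())
print("mutate and select:", mutate_and_select())
```

Both end up near x = 3. Gradient descent knows which way is downhill at every step, while mutate-and-select discovers it only by trial and error, which is one reason selection deserves the word "glacial".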

This (dissipation-oriented) loss minimization function appears to be organizing the evolution of life, as well as organizing the universe at colossal scales.

The emergent properties of this flow organize behavior across the universe on a massive scale, toward ever-greater levels of collective dissipation. The result is emergent social coopetition and flocking phenomena, whether on the scale of a bacterial biofilm, a living organism, consciousness, a forest, global civilization, stellar clusters, or galactic superclusters.

The same properties emerge at all scales, from the infinitesimally small to the titanically vast, encouraging clumping at every level but with greater efficiency at larger scales. That greater efficiency at higher scales enables universal evolution.

All this computation appears to be occurring as a byproduct of entropy maximization, which is endemic within the universe. If this is the case, consciousness may exist at all scales, from the very limited level of microbes to humans and the pan-galactic beyond, all functioning upon the same principles but at differing scales.

Beyond the silicon chip

There is more energy-rate density (the dissipation of energy flow) in a bucket of algae than in an equivalent mass of stellar matter. However, even beyond life, we are doing something very special on Earth: The greatest dissipative object in the known universe is the computer chip.

But soon we may eschew silicon and compute with biological cells, pure optics, or raw matter itself. We have already seen a move from the traditional CPU-centric von Neumann model to massively parallel GPU architectures, applied to machine learning and crypto.

Perhaps the paradigm will shift again to tiny computational processes in each cell or molecule, yet massive in aggregate.
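Cellular automata are the classic model of computation that is tiny in each cell yet rich in aggregate. Every cell updates from just itself and its two neighbors, yet one such rule, Rule 110, has been proven capable of universal computation. Here is a minimal sketch using Wolfram's numbering for elementary automata. The wrap-around edges and grid sizes are arbitrary choices.

```python
from typing import List

def step(cells: List[int], rule: int) -> List[int]:
    """One synchronous update of an elementary cellular automaton (Wolfram numbering).
    Each cell reads only its left neighbor, itself, and its right neighbor; edges wrap."""
    n = len(cells)
    new_cells = []
    for i in range(n):
        left, center, right = cells[i - 1], cells[i], cells[(i + 1) % n]
        pattern = (left << 2) | (center << 1) | right   # neighborhood as a number 0..7
        new_cells.append((rule >> pattern) & 1)         # look up that bit of the rule number
    return new_cells

def run(width: int, rule: int, generations: int) -> List[List[int]]:
    """Start from a single 'on' cell and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(generations):
        cells = step(cells, rule)
        history.append(cells)
    return history

for row in run(width=31, rule=110, generations=15):
    print("".join("#" if c else "." for c in row))
```

No cell ever sees more than three bits, yet the pattern that unfolds across the whole row is where the computation lives.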

As we have recognized ourselves as electrical beings, we shall undoubtedly come to recognize our many computational processes. All of physics is digital, and we are computer lifeforms. This paves the way toward further integration with our synthetic analogs.

Today we carry supercomputers in our pockets. One day, the secrets of substrate-independent computation (computing with raw matter or energy itself instead of silicon) will enable us to carry “copilots” within the fiber of our being, fueled by our blood sugar, connected to our senses, internal and external.

These copilots will witness every experience we have, every frisson, every impulse, our memories, and the pattern of our personalities.

This sum of experience becomes a sort of Ship of Theseus: The original vessel may disintegrate, but the copy remains, created piecemeal, moment by moment, rather than during a whole-brain upload.

One day, such processes may enable the greater part of us to transcend mortality.

Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.
