AGI Lessons from Holography

May. 11, 2023. 7 min. read.

Delving into the brain's interpretation of reality, Rachel St. Clair tackles the challenge of creating AGI and proposes a holographic model approach.

When we think about creating an artificial general intelligence (AGI), we mostly think about what types of tasks it should be able to solve, which environments it should be able to perform in, or what types of learning it should be capable of. However, most people’s vision of AGI also assumes an implicit ability that is often overlooked.

If we want our AGI to be able to solve many of the tasks that humans solve, then it needs to share a basis of reality with us. Reality can be likened to the fundamental substrate of all things, for which the scientific field of physics strives to find ordering laws. To understand this shared basis of reality, indulge in this thought experiment.

Suppose you are playing the video game Super Mario Bros, but instead of playing the game as you would on your computer, you are the character in the game, controlling your own in-game choices. Super Mario Bros is your universe and the mechanics of that game are your reality. Now you want to complete a task in Super Mario Bros. You’ve been living in the Super Mario Bros game as a player all your life, so you have some understanding of how the universe of Super Mario Bros works. You’ve built a model of what actions you can and can’t take, and how you may plan to achieve your task. Now suppose you’re suddenly dropped from Super Mario Bros into the old Atari game Pong. Unless you set aside everything you know about the universe of Super Mario Bros and learn the universe of Pong, you won’t be able to operate, because there is virtually nothing shared between the two universes. You will be playing as if the rules of Super Mario Bros were the rules of Pong, and you’ll fail.

This is what it’s like trying to create an AGI. We have something that doesn’t understand the rules of the game and we’re expecting it to operate as if it understands the world as we do. A computer program doesn’t understand our reality, or at least doesn’t have a conceptual understanding of it. We need to teach it how our universe works, as best we can. Even more so, we need to teach our AGI how to learn for itself how our reality works. That can include video games, quantum mechanics, and how cats can sometimes act as liquids even though they should be solids, among other things.

If we, as humans, have learned how to interpret whatever reality really is, then our learning method, or rather interpretation method, might benefit an AGI. So when we are considering how to make AGI, let us consider the nature of reality.

After all, the more advanced lifeforms on Earth share an experience, some sort of objective reality on which we can all agree. This points to the brain as an interpretation device. It takes in signals from the universe and converts them into a representation that helps brain owners navigate, learn, and survive in their environment. But how does the brain do this?

There are many answers to this question from neuroscience, ancient civilizations, mystics, and the like. However, there is one particular answer that affords an interesting perspective for AGI design: the Holographic Theory. Since most scientists wouldn’t classify it as a real theory, complete with tests of its assumptions, we’ll refer to it as the holographic model. The holographic model was introduced independently by David Bohm and Karl Pribram, and had taken shape by the 1980s. The model is explored in depth in Michael Talbot’s book, “The Holographic Universe”.

Starting with Pribram’s work, Talbot describes how various neuroscience and psychology studies suggest that neurons fire according to input stimuli represented as frequencies. For vision, the stimulus is a light wave; the cochlea in the ear captures sounds according to their frequencies; and skin responds to frequencies of vibration. Neurons then fire according to these input frequencies and interfere with surrounding neurons. When neurons fire together, the connections between them tend to get stronger. Thus, neurons are thought to respond to wave frequency, and the connection strengths between neurons store the pattern of interference created by the wavefronts.

The second facet of the holographic model comes not from brain-related research, but rather from one of Einstein’s colleagues, David Bohm. Experimental results that were hard to explain under the prevailing interpretation of quantum mechanics could be explained under Bohm’s new quantum philosophy. Among other results, early research on “spooky action at a distance” is the most useful for understanding Bohm’s idea. Particles (e.g. electrons) behave in such a way that two of them can be observed very far away from each other and still show correlated spin behavior. Think of spin as a special dance electrons do when they are floating around. So how could two different objects exhibit matching behavior even if they are very, very far away from each other?

Bohm postulated that this is because the particles are actually not very far away from each other; they are, in fact, in the same location. This is what physicists like to call non-locality, meaning the particles have no separate location. The concept can be confusing, and a full understanding can’t be conveyed so briefly. Bohm attributes this ability to the explicate and implicate states of reality: reality is enfolded in the implicate state, with non-locality, and when we observe it, reality is unfolded into the explicate state, with locality, patterns, and distinction. When the electrons are unfolded to measure spin states, they come from the implicate state, where they are enfolded and information is shared and distributed across the whole, because everything is one. Thus, when they are unfolded, the information about the spin state is still retained from the enfolded state.

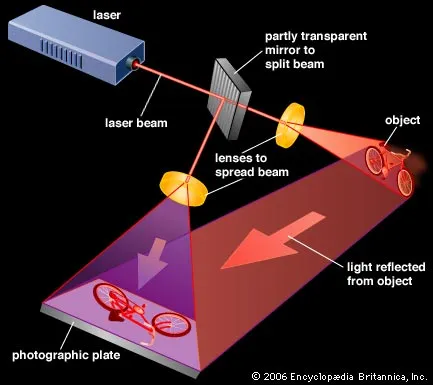

What Bohm and Pribram have in common is that both touch on the nature of reality being a hologram. A hologram is made by two waves interfering with each other as they meet on a photographic plate. The plate records the interference pattern such that when light is shone through the plate, only the recorded object is projected.
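To make the recording and projection steps concrete, here is a minimal one-dimensional sketch in Python with NumPy. It is an illustration under stated assumptions, not a model of a real optical setup: the plate size `n`, the object's bandwidth `band`, and the reference wave's tilt `carrier` are all made-up values, and the "object wave" is just a random band-limited signal. The plate stores only real-valued intensities, yet lighting it with the reference wave and filtering the result brings the full complex object wave back.

```python
import numpy as np

# All sizes below are hypothetical, chosen only so the terms separate cleanly.
n = 256        # number of sample points on the "plate"
band = 8       # object wave contains spatial frequencies |k| <= band
carrier = 64   # spatial frequency (tilt) of the reference wave

rng = np.random.default_rng(1)

# Object wave: a random, band-limited complex signal standing in for the
# light scattered by the recorded object.
low_freqs = np.r_[0:band + 1, n - band:n]
spectrum = np.zeros(n, dtype=complex)
spectrum[low_freqs] = (rng.normal(size=low_freqs.size)
                       + 1j * rng.normal(size=low_freqs.size))
obj = np.fft.ifft(spectrum)

# Reference wave: a tilted plane wave with unit amplitude.
x = np.arange(n)
ref = np.exp(2j * np.pi * carrier * x / n)

# Recording: the plate keeps only the intensity of the two interfering waves.
plate = np.abs(obj + ref) ** 2

# Projection: shine the reference wave through the developed plate.
lit = plate * ref

# The lit plate contains three terms at different spatial frequencies.
# Keeping only the low frequencies isolates the object wave term.
lit_spectrum = np.fft.fft(lit)
keep = np.zeros(n, dtype=bool)
keep[low_freqs] = True
recovered = np.fft.ifft(np.where(keep, lit_spectrum, 0))

print("plate is real-valued:", np.isrealobj(plate))
print("max reconstruction error:", np.max(np.abs(recovered - obj)))
```

The tilt of the reference wave is what lets the object term be separated from the other two terms; with no tilt, they would overlap.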

The analogy of the holographic model helps us understand a different kind of system: one that can’t be reduced to the sum of its parts. This means that a reductionist approach is the wrong approach to understanding reality with the holographic model. In science, reductionism is a way of breaking down a larger concept or phenomenon into a minimal number of smaller parts and the interactions of those parts. In complex systems science, emergent phenomena occur when the parts of a system are combined to create the whole. Here, the parts don’t add up to the whole system because new parts emerge as other parts interact. Complex systems are non-reductionist: disorder is organized into order, and true randomness doesn’t exist. Every action has a precise reaction and the cycle continues.

Likewise, when the brain processes information (according to the holographic model), it doesn’t break up the incoming information into bits and store each bit as a part. If this were the case, we would have to store each memory in its own neuron, which is impossible: we just don’t have enough neurons, and we’re not growing new ones every time we make a memory. Instead, we multiplex neurons. We distribute information, such as memories, all over large modules in the brain.
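One way to get a feel for this kind of distributed storage is a small numerical sketch. The Python example below uses circular convolution to superimpose several key-value pairs in a single vector, a technique borrowed from holographic reduced representations; it is swapped in here purely as an illustration and is not claimed to be how Pribram's model or the brain works. The vector size `dim`, the number of memories `n_items`, and the helper names `bind`, `unbind`, and `recall` are all assumptions made for this example.

```python
import numpy as np

def bind(key, value):
    """Circular convolution: spreads a key-value pair over every component."""
    return np.fft.irfft(np.fft.rfft(key) * np.fft.rfft(value), n=len(key))

def unbind(trace, key):
    """Circular correlation: an approximate inverse of bind for random keys."""
    return np.fft.irfft(np.fft.rfft(trace) * np.conj(np.fft.rfft(key)),
                        n=len(trace))

def recall(trace, key, candidates):
    """Return the index of the candidate most similar to the noisy readout."""
    readout = unbind(trace, key)
    sims = candidates @ readout / (
        np.linalg.norm(candidates, axis=1) * np.linalg.norm(readout))
    return int(np.argmax(sims))

# Hypothetical sizes: five "memories" stored in one 2048-dimensional trace.
rng = np.random.default_rng(0)
dim, n_items = 2048, 5
keys = rng.normal(0.0, 1.0 / np.sqrt(dim), size=(n_items, dim))
values = rng.normal(0.0, 1.0 / np.sqrt(dim), size=(n_items, dim))

# All memories are added into the same vector; none has its own slot.
trace = np.zeros(dim)
for k, v in zip(keys, values):
    trace += bind(k, v)

# Knock out a random 30% of the trace to mimic losing part of the "plate".
damaged = trace.copy()
damaged[rng.random(dim) < 0.3] = 0.0

intact_recall = [recall(trace, k, values) for k in keys]
damaged_recall = [recall(damaged, k, values) for k in keys]
print("recalled from intact trace: ", intact_recall)
print("recalled from damaged trace:", damaged_recall)
```

No single component of `trace` holds any one memory, which is why removing a portion of it degrades every readout a little rather than deleting particular memories outright.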

Even so, traditional artificial intelligence research and some neuroscientists still cling to the reductionist model. Deep learning constructs feature maps that build up parts of the original inputs in order to classify the correct output. With enough feature layers, we can work back from a particular neural net output to see which object was detected.

Instead of storing and processing information bit-by-bit, perhaps we should consider a non-reductionist approach. One that doesn’t try to force the disorder of reality into order, but instead one that tries to interpret the disorder in a meaningful way.

Such an approach is likely to lead to an AGI that is more like humans, and more understandable in the same way that you can try to understand another person’s decisions. It may also lead to a stronger interpretation of reality and afford some measure of safety. The more we share, the more likely we are to come to the same conclusions and the same goals, like protecting the human race and making the world a better place to live.
