It’s the time of the year for sharing an intimate evening with that special person. Can AI help? I put it to the test, helping make cocktails themed around Valentine’s Day.

I learned some lessons from the Christmas edition of this story, and made some little changes –

- Gemini has been eliminated. This seems harsh but Gemini deserves it. I tried three Gemini cocktails last time and all were undrinkable.

- I adjusted the prompt to exclude obscure ingredients.

- I adjusted the prompt to use units I am used to.

- I adjusted the prompt to exclude American ingredients such as eggnog.

The prompt was:

Invent some new Valentines-Day-themed cocktails. Avoid excessively obscure ingredients such as chestnut liqueur or lavender bitters; somewhat hard-to-get ones like triple sec of elderflower liqueur are fine. Avoid ingredients (such as 'apple cider' in the American sense) that are only available in North America. As units use either shots or millilitres – not fluid ounces.

This produced five recipes each from ChatGPT, Mistral, and Llama, plus four from Claude. (Deepseek hadn’t yet come out when I started.) The full outputs are here, archived here.

The theme drove the LLMs to strawberries, raspberries, champagne, and passionfruit, and to pink and red drinks.

Rose-Tinted Love (ChatGPT)

A light and floral cocktail with a hint of sweetness.

- 50ml gin

- 25ml elderflower liqueur

- 20ml freshly squeezed lemon juice

- 10ml grenadine

- Top with soda water

- Garnish: Fresh rose petals or a lemon twist

**Instructions:**

Shake the gin, elderflower liqueur, lemon juice, and grenadine with ice. Strain into a tall glass filled with ice and top with soda water. Stir gently and garnish.

This was delicious and fruity. You can taste the ‘fruitiness’, which I suppose is lemon plus grenadine plus elderflower. It is better without the soda water, in my opinion. This falls in the middle of the bell curve, in the ‘Decent’ category with most of them.

Love Potion No. 9 (Llama)

* 2 shots Gin

* 1 shot Peach Schnapps

* 1 shot Cranberry juice

* 1/2 shot Grenadine

* Splash of lemon juice

* Edible gold dust (optional, for garnish)

Combine gin, peach schnapps, cranberry juice, and grenadine in a shaker filled with ice. Shake until chilled and strain into a chilled glass. Add a splash of lemon juice and garnish with a lemon wheel or a sprinkle of edible gold dust.

There is peach, cranberry, and raspberry (i.e. grenadine), and it tastes like that: mild fruits, not sour fruits. It is not very boozy, and I like it. ‘Decent’ category again, like most will be.

Be Mine (Llama)

* 2 shots Gin

* 1 shot Elderflower liqueur

* 1 shot Grapefruit juice

* 1/2 shot Honey syrup (1:1 honey and water, dissolved)

* Splash of soda water

* Edible flower or grapefruit twist (for garnish)

Combine gin, elderflower liqueur, grapefruit juice, and honey syrup in a shaker filled with ice. Shake until chilled and strain into a chilled glass filled with ice. Top with a splash of soda water and garnish with an edible flower or a grapefruit twist.

This is really quite interesting and well-conceived: the juniper (gin) is complementary to the elderflower, and the honey’s floral notes also work with the elderflower, but in a different way. The matchmaking is really pretty good, but having said that, the cocktail isn’t really my thing: it is too floral. Some people might like it better than I did. The lower end of the ‘Decent’ category.

Love Potion (Mistral)

- **Ingredients:**

- 45 ml (1.5 shots) vodka

- 30 ml (1 shot) cranberry juice

- 15 ml (0.5 shot) elderflower liqueur

- 15 ml (0.5 shot) fresh lime juice

- Splash of grenadine

- Ice cubes

- Garnish: Fresh raspberries and a lime wheel

- **Instructions:**

1. Fill a cocktail shaker with ice.

2. Add vodka, cranberry juice, elderflower liqueur, and lime juice.

3. Shake well and strain into a chilled martini glass.

4. Add a splash of grenadine for color.

5. Garnish with fresh raspberries and a lime wheel.

Nothing stands out strongly: it’s vodka (the most flavourless spirit in my opinion) with lime and cranberry – you can’t go wrong mixing those. It tastes kind of like 7up/Sprite. ‘Decent’ category.

Velvet Valentine Spritz (ChatGPT)

A bubbly cocktail with a subtle bittersweet balance.

- 50ml Aperol

- 50ml sparkling rosé

- 20ml grapefruit juice

- Splash of soda water

- Garnish: Grapefruit slice or a sprig of thyme

**Instructions:**

Fill a wine glass with ice. Add Aperol, sparkling rosé, and grapefruit juice. Stir gently and top with soda water. Garnish with a grapefruit slice or thyme.

Aha! Now in the 5th drink we have something good! Straight to the ‘Honourable Mention’ category with it. It smells great, and fills my mouth and nose with good sensations.

It is a weak drink, less than 8% alcohol-by-volume, and in my opinion that’s a selling point: it makes it light and refreshing.

Sweetheart Sangria (Mistral)

- **Ingredients:**

- 120 ml (4 shots) red wine

- 60 ml (2 shots) orange juice

- 30 ml (1 shot) brandy

- 15 ml (0.5 shot) triple sec

- 15 ml (0.5 shot) simple syrup

- Slices of orange, apple, and strawberries

- Ice cubes

- Garnish: Cinnamon stick

- **Instructions:**

1. In a large pitcher, combine red wine, orange juice, brandy, triple sec, and simple syrup.

2. Add slices of orange, apple, and strawberries.

3. Stir well and let it sit in the refrigerator for at least 30 minutes to allow the flavors to meld.

4. Serve over ice and garnish with a cinnamon stick.

This is better than most sangrias I have had! Sweet, fruity, orangey. Everything is there for a reason: the simple syrup brings sweetness, the orange juice brings fruitiness, the brandy bites, and their powers combine, like when a team of cartoon characters shoot rays that combine into one better ray.

Passionfruit crush (ChatGPT)

Tropical and tangy, ideal for a romantic evening.

- 50ml white rum

- 25ml passionfruit purée or juice

- 15ml lime juice

- 15ml simple syrup

- Garnish: Half a passionfruit or lime wheel

**Instructions:**

Shake all ingredients with ice and strain into a rocks glass over crushed ice. Garnish with half a passionfruit or a lime wheel.

This is the best of the seven so far: first you taste the tang of lime, then a fruity taste, and finally the passionfruit. It tastes fruity and tropical; it tastes like the Caribbean.

I tried the variation with passionfruit syrup and thought it would be better but it isn’t. Juice is the way to go.

Cupid’s Arrow with tequila (Llama)

* 2 shots Tequila

* 1 shot Cranberry juice

* 1 shot Lime juice

* 1/2 shot Agave syrup

* Splash of ginger beer

* Lime wheel and sprig of rosemary (for garnish)

Combine tequila, cranberry juice, lime juice, and agave syrup in a shaker filled with ice. Shake until chilled and strain into a chilled glass filled with ice. Top with a splash of ginger beer and garnish with a lime wheel and a sprig of rosemary.

Not very good; the worst one so far. I don’t really like tequila in the first place (I was raised on whiskey 9000 miles from the nearest agave plant). And cranberry is too unassertive to add anything. The ginger and agave do complement each other slightly. Lower end of the ‘Decent’ category.

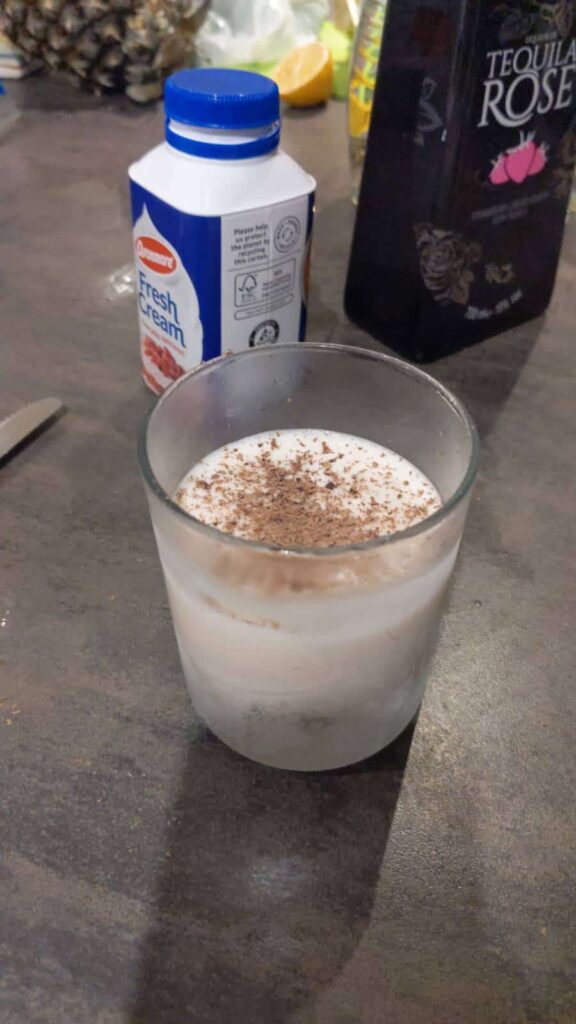

Chocolate-Covered Cherry (ChatGPT)

Rich and decadent, perfect for dessert lovers.

- 30ml vodka

- 30ml cherry liqueur (or cherry brandy)

- 20ml crème de cacao (dark or white)

- 50ml milk or cream

- Garnish: Chocolate shavings or a cherry

**Instructions:**

Shake all ingredients with ice and strain into a chilled coupe glass. Garnish with chocolate shavings or a cherry.

This is a very good dessert cocktail, worth an Honourable Mention. It tastes of cherry + chocolate, with just the right amount of booze. It clearly belongs after dinner, maybe accompanying ice-cream or cake. It would be hard to notice the difference between the versions with white and dark crème de cacao.

Sweet Heart (Claude)

- 50ml vanilla vodka

- 25ml white crème de cacao

- 25ml strawberry liqueur

- 30ml cream

- Pink food coloring (optional)

Shake all ingredients with ice, strain into a chilled coupe glass. Optional: add a drop of pink food coloring for a pale pink hue. Garnish with grated dark chocolate.

Not great. It is too strong (22%) and is like strawberry milk or strawberry milkshake. Nothing more interesting is happening than milky flavours with an alcoholic bite that does not work well, putting it at the lower end of the ‘Decent’ category.

I couldn’t find pink food colouring anywhere, and I’m not sure it exists. I feel the LLM missed a trick by not recommending a strawberry garnish on a strawberry cocktail named ‘Sweet Heart’.

Pink Passion (Claude)

- 2 shots gin

- 1 shot pink grapefruit juice

- 1/2 shot raspberry liqueur

- 1/2 shot triple sec

- Dash of simple syrup

- Pink tonic water to top

Build in a large wine glass filled with ice, stir gently to combine, top with pink tonic water. Garnish with a grapefruit slice and fresh raspberries.

My first attempt at this tasted bad, but the trick is you’ve got to be generous with the tonic and the simple syrup. Otherwise it is too strong and too bitter-sour.

I am not sure pink tonic exists (does it?) so this was the only time in the whole project I deviated from a recipe; I used normal tonic. But my grapefruit juice was pink so that compensates.

With plenty of tonic and simple syrup, it can be fruity (the sugar helps the orange and grapefruit along), and also sour (grapefruit), and also bitter (gin and tonic) in an admirably complex way.

I advise at least one shot of tonic and more than ½ shot of simple syrup. 1½ shots of tonic is too much.

Cupid’s Kiss (Claude)

- 45ml white rum

- 30ml triple sec

- 20ml pomegranate juice

- 15ml lime juice

- 15ml grenadine

- Sparkling wine to top

Shake all ingredients except sparkling wine with ice. Strain into a tall glass filled with ice, top with sparkling wine. Garnish with a lime wheel and pomegranate seeds.

No strong flavours; I am tasting sparkling wine. That puts it in the middle of the pack.

A tiny note: pomegranate seeds sink, and therefore are not a great garnish… see the pic; they are halfway down the glass and I expect a garnish to float on top.

Love Letter (Claude)

- 45ml gin

- 30ml elderflower liqueur

- 20ml fresh lemon juice

- Prosecco to top

- 2-3 fresh raspberries

- Optional: 1 dash rose water

Muddle raspberries in a shaker, add gin, elderflower liqueur, and lemon juice. Shake with ice, double strain into a champagne flute, top with prosecco. Garnish with a floating raspberry.

I don’t know if it’s good or not but I’ll say it’s very interesting, maybe the most interesting yet. I recommend 25ml of prosecco.

Strawberry Sweetheart Martini (ChatGPT)

Bright and fruity with a lovely pink hue.

- 50ml vodka

- 25ml triple sec

- 50ml strawberry purée (or muddled fresh strawberries)

- 10ml lime juice

- Garnish: Sliced strawberry

**Instructions:**

Shake all ingredients with ice and strain into a martini glass. Garnish with a sliced strawberry on the rim.

Everybody has to like strawberry + orange + lime. Those are three good flavours, and this recipe balances them perfectly, earning it an Honourable Mention.

Chocolate Cherry Bomb (Llama)

* 2 shots Dark Rum

* 1 shot Cherry liqueur

* 1 shot Chocolate syrup (or 1/2 shot simple syrup with a few drops of chocolate extract)

* 1/2 shot Coconut cream

* Splash of pineapple juice

* Cherry and chocolate shavings (for garnish)

Combine dark rum, cherry liqueur, chocolate syrup, and coconut cream in a blender filled with ice. Blend until smooth and creamy. Pour into a chilled glass and add a splash of pineapple juice. Garnish with a cherry and chocolate shavings.

I think this qualifies for the ‘Interesting’ category. It has a lot of darkness, both from the chocolate and the rum. The cherry adds a tiny note to this. It is fruity up front, then dark after, especially if you add plenty of pineapple. I advise being generous with the pineapple. It’s a strong cocktail (I estimate it’s 22% alcohol-by-volume before the pineapple juice is added) and the taste of the alcohol doesn’t hide, as it would in a sangria.

Passionate Punch (Mistral)

- **Ingredients:**

- 60 ml (2 shots) rum

- 30 ml (1 shot) pineapple juice

- 30 ml (1 shot) passion fruit juice

- 15 ml (0.5 shot) fresh lime juice

- 15 ml (0.5 shot) grenadine

- Ice cubes

- Garnish: Pineapple wedge and a cherry

- **Instructions:**

1. Fill a cocktail shaker with ice.

2. Add rum, pineapple juice, passion fruit juice, lime juice, and grenadine.

3. Shake well and strain into a highball glass filled with ice.

4. Garnish with a pineapple wedge and a cherry.

There are three notes: fruity, tangy, and rum. The lime bites and rum bites in a different way and they bite together. They balance nicely and it is a good cocktail, above average. It is about 16% alcohol-by-volume, which is the correct strength for this kind of thing.
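The strength estimate is simple arithmetic: divide the volume of pure alcohol by the total volume of the drink. Here is a minimal Python sketch of that sum for the Passionate Punch. It assumes the rum is 40% alcohol-by-volume (the recipe doesn’t say) and ignores dilution from melting ice; the function name `estimate_abv` is made up for illustration.

```python
def estimate_abv(ingredients):
    """Estimate the alcohol-by-volume (in percent) of a mixed drink.

    `ingredients` is a list of (volume_ml, abv_percent) pairs.
    Dilution from melting ice is ignored.
    """
    total_volume = sum(volume for volume, _ in ingredients)
    pure_alcohol = sum(volume * abv / 100 for volume, abv in ingredients)
    return 100 * pure_alcohol / total_volume


# Passionate Punch (Mistral), with volumes taken from the recipe.
passionate_punch = [
    (60, 40),  # rum; 40% ABV is an assumption, not stated in the recipe
    (30, 0),   # pineapple juice
    (30, 0),   # passion fruit juice
    (15, 0),   # fresh lime juice
    (15, 0),   # grenadine
]

print(f"{estimate_abv(passionate_punch):.0f}% ABV")
```

Under those assumptions this works out to 24 ml of alcohol in 150 ml of drink, i.e. 16%. The same function can be pointed at the other recipes, but the result depends on the strengths you assume for the liqueurs, which vary a lot between brands.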

Cupid’s Arrow with prosecco (Mistral)

- **Ingredients:**

- 45 ml (1.5 shots) gin

- 30 ml (1 shot) strawberry puree

- 15 ml (0.5 shot) fresh lemon juice

- 15 ml (0.5 shot) simple syrup

- Prosecco to top off

- Ice cubes

- Garnish: Fresh strawberry

- **Instructions:**

1. Fill a cocktail shaker with ice.

2. Add gin, strawberry puree, lemon juice, and simple syrup.

3. Shake well and strain into a champagne flute.

4. Top off with Prosecco.

5. Garnish with a fresh strawberry.

A very good drink. It reminded our group of a ladies’ brunch cocktail. It’s almost a sort of strawberry mimosa. One of our reviewers suggested trying it without the simple syrup (an experiment that has not yet been conducted), as there are plenty of sweet ingredients, although the lemon juice helps balance them.

Rose Romance (Mistral)

- **Ingredients:**

- 45 ml (1.5 shots) rose wine

- 30 ml (1 shot) vodka

- 15 ml (0.5 shot) triple sec

- 15 ml (0.5 shot) fresh lemon juice

- 15 ml (0.5 shot) simple syrup

- Ice cubes

- Garnish: Edible rose petals

- **Instructions:**

1. Fill a cocktail shaker with ice.

2. Add rose wine, vodka, triple sec, lemon juice, and simple syrup.

3. Shake well and strain into a chilled coupe glass.

4. Garnish with edible rose petals.

I am mostly tasting citrus, because orange (i.e. triple sec) and lemon complement each other, doubling up on the citrus. It drew comments for being strong (19-20%), and was not among the best. ‘Decent’ category.

Rose Petal Kiss (Llama)

* 2 shots Vodka

* 1 shot Rose-flavored syrup (or 1/2 shot simple syrup with a few drops of rose extract)

* 1 shot Lemon juice

* 1/2 shot Triple sec

* Splash of soda water

* Fresh rose petals (for garnish)

Combine vodka, rose-flavored syrup, lemon juice, and triple sec in a shaker filled with ice. Shake until chilled and strain into a chilled glass filled with ice. Top with a splash of soda water and garnish with fresh rose petals.

I taste the rose syrup and smell it. It’s interesting because I hadn’t tasted a lot of rose syrup in my life.

If your goal was an alcoholic drink that tastes like rose syrup (like Turkish delight), it’s well executed, but is that a good goal?

‘Interesting’ category. One reviewer didn’t like it at all and the other thought it was a curiosity; nobody thought it was great.

Results

Interesting:

- Be Mine (Llama)

- Chocolate Cherry Bomb (Llama)

- Rose Petal Kiss (Llama)

- Love Letter (Claude)

Honourable mention:

- Velvet Valentine Spritz (ChatGPT)

- Passionfruit crush with juice (ChatGPT)

- Chocolate-Covered Cherry (ChatGPT)

- Strawberry Sweetheart Martini (ChatGPT)

- Passionate Punch (Mistral)

- Cupid’s Arrow with prosecco (Mistral)

Comparing the models

Looking at those results, Mistral and ChatGPT are pulling ahead, but you can’t base confident conclusions on numbers this small. The prompt engineering seemed to work well: at least all the ingredients were real (pink tonic water is debatable) and I could get them all (some with a little difficulty).
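For anyone who wants the per-model tally behind that comparison, here is a small Python sketch that counts the entries in the two Results lists by model. The list contents are copied from the Results section; the variable and function names are my own, for illustration only.

```python
from collections import Counter

# (cocktail, model) pairs copied from the two Results lists.
interesting = [
    ("Be Mine", "Llama"),
    ("Chocolate Cherry Bomb", "Llama"),
    ("Rose Petal Kiss", "Llama"),
    ("Love Letter", "Claude"),
]
honourable_mentions = [
    ("Velvet Valentine Spritz", "ChatGPT"),
    ("Passionfruit crush with juice", "ChatGPT"),
    ("Chocolate-Covered Cherry", "ChatGPT"),
    ("Strawberry Sweetheart Martini", "ChatGPT"),
    ("Passionate Punch", "Mistral"),
    ("Cupid's Arrow with prosecco", "Mistral"),
]


def count_by_model(entries):
    """Count how many cocktails each model has in a results list."""
    return Counter(model for _, model in entries)


print("Honourable mentions:", dict(count_by_model(honourable_mentions)))
print("Interesting:", dict(count_by_model(interesting)))
```

That gives ChatGPT four honourable mentions and Mistral two, with Llama and Claude appearing only in the ‘Interesting’ list (three and one respectively). With about five recipes per model, these counts are far too small to rank the models with any confidence.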
