TESCREALism. Has The Silicon Valley Ruling Class Gone To Crazy Town? Émile Torres in conversation with R.U. Sirius

Oct. 06, 2023.

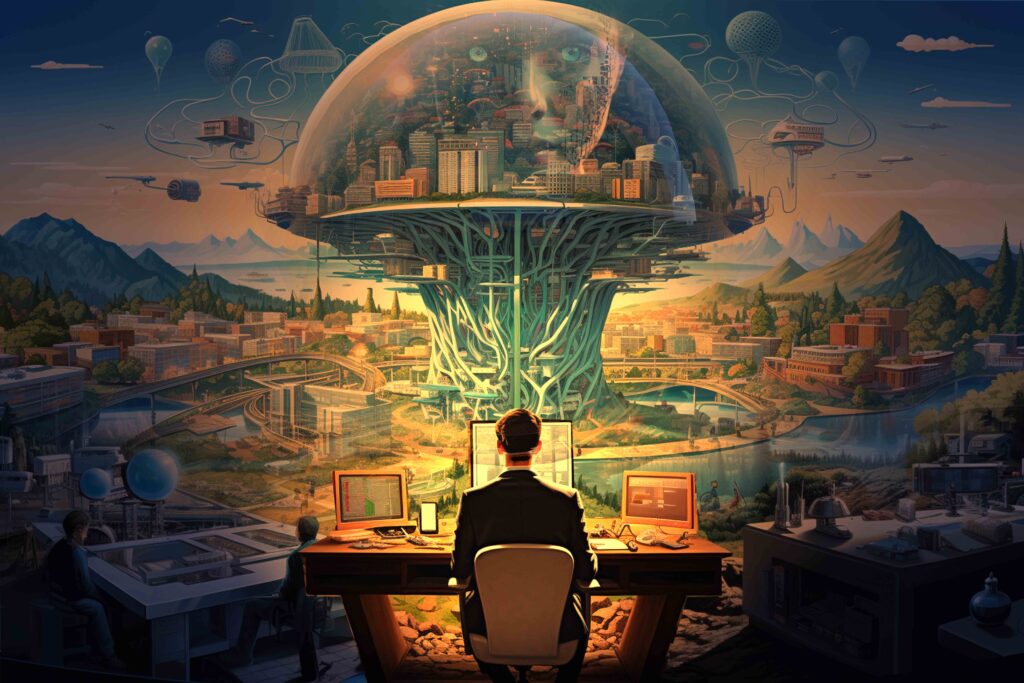

Émile Torres and R.U. Sirius scrutinize the eccentricities and extremities that have become associated with Rationalism. They delve into the meanings, practices, ethics, and applications within Transhumanism and Singularitarianism.

TESCREALism

T = Transhumanism

E = Extropianism

S = Singularitarianism

C = Cosmism

R = Rationalism

EA = Effective Altruism

L = Longtermism

Émile Torres, a philosopher and historian who has focused recently on existential threats, developed what they refer to as a ‘bundle’ (we might call it a memeplex) that claims to link the above series of -isms into a sort-of singular force that has been embraced by many of the super-wealthy and influential in the tech world. It is the influence of these tropes on the super-rich and influential that, in Torres’ view, makes them very dangerous.

In an article for Truthdig, Torres writes, “At the heart of TESCREALism is a ‘techno-utopian’ vision of the future. It anticipates a time when advanced technologies enable humanity to accomplish things like: producing radical abundance, reengineering ourselves, becoming immortal, colonizing the universe and creating a sprawling ‘post-human’ civilization among the stars full of trillions and trillions of people. The most straightforward way to realize this utopia is by building superintelligent AGI.”

In the same piece, Torres gets into the wilder projections that I suspect even many techno-enthusiastic transhumanism-oriented Mindplex readers would find fantastic (rooted in brilliant minds taking their fantasies for reality). Torres leans heavily on Oxford Professor Nick Bostrom’s views, writing that he “argues that if there’s a mere 1% chance of 10^52 digital lifetimes existing in the future, then ‘the expected value of reducing existential risk by a mere one billionth of one billionth of one percentage point is worth a hundred billion times as much as a billion human lives.’ In other words, if you mitigate existential risk by this minuscule amount, then you’ve done the moral equivalent of saving billions and billions of existing human lives.”

As they explained in their conversation with Douglas Rushkoff, Torres identifies TESCREALism as a philosophical ‘bundle’ that, in a sense, trivializes the lives and sufferings of currently existing humans by finding a greater importance in the possibly trillions of posthumans that could exist in physical and/or virtual space in the future: ‘people’ having experiences that can be valued beyond our imagining. Some of those quoted tend to use statistics to value experience, which is about as alienated from experience as you can get.

I can assume you all know about transhumanism and the singularity. If you’re here, you probably know about Ben Goertzel’s project to build AGI. But are most of you familiar with the eccentricities and extremities that have attached themselves to Rationalism (as defined by LessWrong), Effective Altruism and Longtermism?

In the interview below, I mainly ask Torres to thrash out how real all this is. Do a lot of people buy into the whole philosophical bundle? My own attitude, even as a longtime associate of transhumanism, has always been kind of “are you for real?” when it comes to people taking their shit too seriously, particularly when they’ve deluded themselves into thinking they’re rational.

In a follow-up poll, I will ask Mindplex readers and veterans of the transhumanist culture to weigh in on the TESCREAL bundle.

What About Useful AI?

The Singularity is Probably Not Near

What About the Humane Transhumanists, Singularitarians and AI Enthusiasts?

Is Less Wrong A Cult?

Does Anyone Call Themselves a TESCREAList?

Eugenics

Techno Gloom

14 Comments

Beautiful!

🟨 😴 😡 ❌ 🤮 💩

TESCREAL is a bad label for what Torres is talking about, but he's attacking the right people and it covers the sources of the ideas they are using for ideological cover. Here is exhibit A: https://a16z.com/the-techno-optimist-manifesto/

and here is a direct rebuttal without the distracting acronym: https://atomless.substack.com/p/adreessens-basilisk

🟨 😴 😡 ❌ 🤮 💩

Torres explores speculative transhumanist ideas. TESCREALism prioritizes future posthumans. Statistics quantify experiences, possibly creating detachment.

🟨 😴 😡 ❌ 🤮 💩

To me, as an African, TESCREALism is a mix of shock, excitement, sadness, a little bit of hope, and then confusion. What mostly gets on my nerves is that we Africans find ourselves in the backseat once again. 🙄

🟨 😴 😡 ❌ 🤮 💩

Oddly...it seems like some folks feel like the future can be avoided, instead of embraced.

It's so exciting to see how humanity is starting to embrace the chaos of the exponentially unfolding future!

🟨 😴 😡 ❌ 🤮 💩

Respectfully, I must contend that your comment is shallow and requires a vigorous rebuttal. Surrendering to the future without critical examination is a naive aspiration.

In an age marked by rapid technological advancements, it's paramount for humanity to remain vigilant about the unfolding future. Rather than blindly embracing the notion of progress, we must actively shape it to ensure fairness and safety for all. The future isn't an inevitability that we should merely accept like passive cattle; it's a collective responsibility to steer towards a horizon that benefits the many, not just the privileged few.

A sobering example of the need for vigilance lies in the historical context of eugenics. In the early 20th century, eugenics movements emerged, with some advocating for the promotion of certain races while suppressing others. If we permit a select group with a hidden racist agenda to control the course of genetic advancements, they could easily manipulate these technologies into tools of oppression and control. Genetic engineering could be used to reinforce harmful stereotypes, create divisions in society, or even lead to the development of weapons designed to target specific genetic traits. It is our collective duty to ensure that the future of genetic science upholds principles of justice, equality, and respect for all, preventing the misuse of these powerful tools for destructive or discriminatory purposes.

There's nothing 'odd' about challenging wrong ideas in a significant and extreme movement like Transhumanism. However, it's genuinely concerning to see some folks promoting a harmful future as something everyone should welcome!

🟨 😴 😡 ❌ 🤮 💩

The creation and sustenance of a civilization require a collective effort from all its members. However, when a certain group of people or a class within this civilization becomes "modern," deluded with fame, power, and money, and ultimately dissatisfied with what they have, they undoubtedly lose the essence of humanity. This class poses a significant challenge to the progress and betterment of our human civilization, whether it's pre or post-Singularity; it will only hinder it. Regrettably, the majority of individuals associated with TESCREALism appear to be heading down this perilous path, driven by ego and avarice.

I have witnessed a horrifying transformation within TESCREALists: they are quickly losing their empathy. They can no longer understand or sense the perspectives, feelings, needs, or intentions of the 'common people'. Their loss of empathy is perilous because they no longer realize that their dreams are not shared by everyone, and they cannot even accept the fact that they are by no means our appointed leaders who determine the future of our race! They don't feel remorseful about any shit, and they now act in selfish and even vindictive ways, detached from the harm it inflicts on the rest of us. They have dehumanized us simply because we don't believe in their expedient ivory towers. If left unchecked, our world will collapse into a terrifying abyss where violence and dehumanization prevail.

All I see in this group, especially in the last 5 years, is an excessive emphasis on technology. Placing so much importance on technology as the solution to all problems is truly absurd and borderline insane. They deny the present and, as if that's not enough, they urge others to also disregard pressing current issues and give them all the money so they can solve future problems. I am quite sure we are at risk of elitism, and it feels like we are losing control as the majority, with decisions that shape society being made by a small group of TESCREALists. How can this be sane when it encourages suffering in the present for the sake of future benefits?

🟨 😴 😡 ❌ 🤮 💩

Blimey, this is a proper mind-boggler if you're a newbie to it. As for meself, I've 'ad it up to 'ere with this balderdash. They claim they are on the move, so they ask for dosh to take flight, and once we fork it over, they just vanish with it!

Well, let me tell you, the Silicon Valley culture, mate, it's gone a bit pear-shaped, innit? It's all flashy gadgets and empty promises, like a dodgy bloke selling you a busted mobile phone down the local market. These tech bigwigs are all show, no substance, I reckon. They talk about ethics and transparency, but when it comes to it, they're as trustworthy as a snake in a tracksuit.

And don't get me started on their so-called innovations, mate. They make a big fuss about the next big thing, but half the time, it's just smoke and mirrors. Take that Elizabeth Holmes business, for instance – promising the moon with her blood-testing gizmo, turns out it's all a load of codswallop. It's high time they put their money where their mouth is and started making tech that actually benefits the average Joe and the economy. Right now, it's all hat and no cattle, if you ask me.

🟨 😴 😡 ❌ 🤮 💩

this is a well researched but at the same time disturbing article

🟨 😴 😡 ❌ 🤮 💩

Oh, I also forgot to spill the beans, that motherf***er penned down an apology letter when Émile Torres exposed his dirty laundry. I'm gonna drop the link to that letter here too. But man, the following statement from his letter has really pissed me off. Right now, I'm kickin' back, crankin' up some Lightnin' Hopkins - Bring Me My Shotgun.

For crying out loud, take a look for yourself and tell me what you make of it. Is it a straight-up apology or just a bunch of trash talk? Am I losing it 'cause I'm a Black American? How in the world ain't this the hottest topic of discussion among the Ethics of Tech crew?

The best thing would probably have been for all of those words to have been buried and forgotten. Good ideas have been taken up in other contexts, further developed, and written up in more readable formats; and the no-good stuff deserves to rest in the great ash heap of history. The mailinglist served a useful function at the time, as a place where people from around the world could have freewheeling conversations about wild ideas. Nowadays the Internet offers many other forums that better serve those functions.

After this, he straight-up said he ain't a racist, not then, not now. He also made it clear he ain't down with no eugenics.

Anyway, here's the link to that letter, folks. https://nickbostrom.com/oldemail.pdf

🟨 😴 😡 ❌ 🤮 💩

I think I am going to be negative now.

Hmmm, after reading the article and then some of the comments here, I thought this is just another example of the moral decadence among the Silicon Valley rich guys. Then I started to read it again, this time clicking the external links. I am so infuriated and shocked to discover that the leading TESCREAList, the man who is praised as a rational and visionary thinker, is a rotten racist motherf***er! Sorry, Mom, you didn't raise me to use such foul language, but this dude deserves it!

Here is a word-for-word quote from Émile's other article mentioned here, and I have also added the link to the original article.

"Sometime last year, I happened to come across an email from 1996, written by a 23-year-old graduate student at the London School of Economics named “Niklas Bostrom.” Upon reading it, my jaw dropped to the floor, where it stayed for the rest of the day.

Here’s part of what Bostrom, now known as “Nick Bostrom,” an Oxford University philosopher who’s been profiled by The New Yorker and become highly influential in Silicon Valley, sent to the listserv of “Extropians”:

Blacks are more stupid than whites.

I like that sentence and think it is true. But recently I have begun to believe that I won’t have much success with most people if I speak like that. They would think that I were [sic] a “racist”: that I disliked black people and thought that it is fair if blacks are treated badly. I don’t. It’s just that based on what I have read, I think it is probable that black people have a lower average IQ than mankind in general, and I think that IQ is highly correlated with what we normally mean by “smart” and stupid” [sic]. I may be wrong about the facts, but that is what the sentence means for me. For most people, however, the sentence seems to be synonymous with:

I hate those bloody [the N-word, included in Bostrom’s original email, has been redacted]!!!!

Here is the link to the original article referred to: https://www.truthdig.com/articles/nick-bostrom-longtermism-and-the-eternal-return-of-eugenics-2/

🟨 😴 😡 ❌ 🤮 💩

On December 9, 2013, I published an article on H+ Media titled 'An Appeal to Transhumanism on the Question of Technological Inequality in Africa'. Back then, I was only in my mid-20s, and naively, I assumed there was someone to appeal to. Again, back then, the definition of 'existential threat' was not revised, or if it was, I wasn't aware of it.

Anyways, the central point of that article is that radical technology and the people involved in it are ignoring the vast majority of humans and our day-to-day problems. I tried to 'appeal' to these people that when we solve tomorrow's problems, it shouldn't be at the expense of today's potential. Enhancing humanity can start with practical tech that can be applied today to help all of us improve our lives as we know it on earth as she exists today. Alas, fast forward a decade, and I am now part of the very same existential threat I fought against. Was it Batman or Harvey Dent that said, "you either die a hero or live long enough to see yourself become the villain"?

In this interview, what Émile Torres asserts is really disturbing. If the TESCREALism camp has redefined the meaning of existential risk as "the loss of potential" and not as the loss of the lives of the 8 billion humans, then we are heading toward our extinction. A definite and most certain tech-caused extinction.

I was one of those people who always had a relaxed view about man-made existential threats; this is just another exaggerated doom and gloom cry, I used to tell myself. Luckily, I was right hitherto. And my approach worked so far because the majority of the agents labeled as 'those most likely to create a distraction' don't necessarily do what they do with a deliberate intention, and the ones that do are fanatics and a minority and are unappealing to the mainstream. Today, this is at risk because the agents, the players, that are feared to cause global annihilation via 'technological progress,' have changed the definition of annihilation. This is disturbing and so 1984.

If the TESCREALism camp starts to define existential threat as the inability to secure the coming Tech Singularity and a future for 'intelligent sentients' and their civilization, then my worst fear is coming true. The probability of a near future where we, the common humans, retaliate with a preemptive strike, starting the 'war' often discussed by the likes of Hugo de Garis in his "Artilect War" and in Zoltan Istvan's infamous book 'The Transhumanist Wager', is growing too quickly. Even the likes of Eliezer Yudkowsky and their banzai call to bomb data centers and AI research centers to stop the rise of AGI will appear alluring. When I discussed this stuff a decade ago, someone would often say, "Well, the pro-tech side will most likely win because they can apply tech." Now, I am afraid that someone with the means is going to say, 'Well, a preemptive strike will level the field, and the anti-tech side will have the advantage if they act before it is too late'.

In the good old days of the 2010s, Zoltan admitted that his tech champion, his protagonist character, Jethro Knight, has gone too far! Now, it is disturbing to see that Jethro has changed his definition of 'existential threat' so no one will say he has gone too far.

I am not going to dive into the folly of this new definition from a logical perspective because this is equivalent to saying that in the event of an alien invasion, we should not fight back even if the aliens declare total annihilation of the human race as long as the aliens added a statement that they have accepted to integrate our culture into theirs and also promise to apply some human DNA in their future where it is applicable.

Rather, I want to focus on the dangerous modern deconstructionism here, that damn Derrida! From ancient Egypt to present-day USA, when powerful groups started to change the meaning of words, it only means one thing: distortion of reality. An 'existential risk' means a threat to a species' survival in its biological form. Here, I am not even using the narrow, human-centric definition, which could simply mean a threat to the survival of human beings. In its old and correct meaning, existential risk means a threat or a risk that can potentially annihilate an existing species. Follow me a little bit longer and see how this 'modern deconstructionism' is the cause of our soon-to-come demise if we continue on this messed-up path.

For Y to be an existential risk to X, X has to EXIST! In the case of TESCREALism, the first folly is that Y (whatever Y they claim) is considered an existential risk for an X that doesn't exist today. The second folly, and the most dangerous part, is that while Y could be an actual existential risk to X (Y as the current radical tech and the direction it is heading and X as the future of actual existing humans today), they are undermining the existential risk by cutting out X from the equation and introducing a new factor called Z as the potential for Technological Singularity, which will guarantee the survival of sentient beings, which may or may not be humans.

This then means: 1) they can do Y because it is not a threat to X (Radical tech as Y and present-day and future biological humans as X). In other words, the moral obligation is buried under six feet as long as the meaning of Existential Threat is no longer related to X. 2) they can even endanger X because preserving X can also be a Y for Z (delaying or restricting radical tech to save biological humans can be an existential threat for the post-Singularity Super AI, Posthumans, Cyborgs, Uploaded Minds, or whatever fancy intelligent sentient forms anointed to inherit the planet in the post-Singularity). Then this means, doing Y at some time will be the moral obligation as long as it guarantees the safety of Z!

I am not bringing a slippery slope argument here: with the new definition (I hope Émile is wrong), building a tech that can potentially wipe out every living thing just to generate enough energy for a super Intelligent computer which, in return, can upload intelligent beings' memory on a server capable of running forever is a benevolent and visionary good while trying to stop such tech to save humans and the other living things is an utter theocratic evil! Yes, for me, this is not a trivial "hey dude, thoughts and understandings evolve along with civilizations, so words and their meanings also change" scenario. For me, this is a dangerous deception!

Existential threat is not a risk for some potential; it is a risk for something that actually exists.

🟨 😴 😡 ❌ 🤮 💩

First and foremost, it is quite challenging to adequately cover all of the points made in this article via my comment, which admittedly is lousy and a bit concise compared to the size of Sir R.U. Sirius and his guest's discussion. Nonetheless, I will attempt to convey the two core ideas that resonated with me most from this thought-provoking interview.

TESCREALism, as discussed in the interview, is filled with various "isms" that aim to bridge the gap between technology and our human experience. However, unfortunately, it does not include or closely resemble humanism. Rather, it feels like a business venture. A venture which will only benefit the leading figures, and sadly, it is not yet a fully developed industry as there are no practical and concrete applications with tangible results. In other words, it remains primarily hype, and hype is not a unique attribute of TESCREALism; it is also prevalent in the AI/Blockchain/Web3 community.

Humanity, it seems, is accustomed to a deus ex machina approach - we are conditioned to expect someone or something to swoop in and save the day. For proponents of AI, it is the hope of the emergence of "superintelligent AI"; for the transhumanist crowd, it is the promise of the "post-human". For the blockchain dudes, it is that "single supercoin that can govern all transactions fairly, equally, and transparently". In the Web3 camp, it is the tool that can "harness the internet for decentralization, privacy, and the empowerment of the public". At least the latter two seek to give more power to those who have been "historically marginalized", in this case, the common people. The first two, which in my opinion can easily fit the TESCREALism camp well, are all about the elite individual: we do because we can!

The central problem with this approach is that it is not grounded in the present; the proponents of these ideas cannot test their proposed solutions, and therefore, their arguments often stem from their own hubris. They abhor the concept of God, yet they worship their own creations: what is an all-knowing AGI or a powerful cryogenic chamber? Aren't these their gods? They claim humanity is not good enough to inherit tomorrow (the revised definition of existential risk), yet they strive to secure their position in the future in one form or another. They are in denial of today, but they dream of immortality. They are sick of the present financial system, yet they milk it to its last dime like leeches, born of the Hindu goddess Durga, with 80 restless hands so they can fund their own objectives.

The prominent idea of TESCREALism and TESCREAList technology, which has become super popular in the last ten years, is often associated with the concept of "transcending" human limitations, including intellectual, physical, mental, and emotional abilities. However, it is very funny when you see that there is no attempt (unbiased and human-centric) to expand our understanding of what it means to be human. How can you transcend something when you do not know it? It is just like the current dilemma in the transgender camp: they do not define what a woman is, or condemn those who define it, and yet they tell us transwomen exist! 🤪🤪

For me, the Achilles' heel of TESCREALism is its focus, which is the elite individual. It has little or zero regard for the larger social and environmental contexts in which we live. Furthermore, it has little regard for reality and practicality too; it is plagued with Overoptimism Bias. The future is nothing if the present is not addressed! AIs, post-humans, cryogenics, ethical eugenics, or any shit from this camp will only create a "two-tier" society in which some people have access to technological enhancements while others do not. This is at best; at worst, it might be clearing the path to a nasty dystopian future, if not extinction.

The whole thing reminds me of Father Zossima (if my memory serves me well, Father Zossima is also quoting an unnamed doctor), a beloved character from Fyodor Dostoevsky's "The Brothers Karamazov," arguably the number one novel from the West. Oh, sorry, I often forget that for you guys (white people in the West), Russia is not part of Western civilization or even white. Okay, so I will say it's the best work of literature in the world where people have white skin.

"The more I love humanity in general, the less I love man in particular."

Now, this is the mother of all HUBRIS! It is the archetypal hubris. Thank God we have Dostoevsky! TESCREALism is tirelessly proving itself as the savior of humanity (mostly of the future but sometimes even that of today). The leaders in TESCREALism profess an elevated love for all humankind, and other life forms on this planet, but on the ground and in their day-to-day actions, they have unequivocally failed to demonstrate (or even grasp ideally) genuine goodwill toward their neighbor, the particular man! But this is just the sad part; the dangerous part is when they love this neighbor, it is the best man, the elite!

🟨 😴 😡 ❌ 🤮 💩

Interesting one...

It is unclear to me how many SV tech bro transhumanists are serious about longtermism, vs. just leveraging it mostly as an excuse to justify simple greed and selfishness and sweep aside annoying current moral complexities and tradeoffs...

I think Bostrom is intellectually serious about it, and Yudkowsky too, but the SBFs and Thiels etc... hmm... Perhaps they mostly find it a convenient ideological cover for what they feel like doing anyway...

If you think about the difference between kindness to hypothetical future beings vs. kindness to current beings, it's partly that the latter is an I-Thou relationship and has potential to change the self via engagement with the recipient of your kindness. Whereas kindness to potential future beings does not connect you with them concretely and thus brings much less potential for self-transformation and psychological development...

An inclination toward kindness to far-future hypothetical beings vs. currently present beings would seem related to a diminished capacity for deep 2-way I-Thou relationships... which indeed seems characteristic of a certain percentage of SV tech bros...

I would ask them, can they estimate the odds that having AGI emerge from an "uncompassionate to present companions" predecessor system/situation will poison the mind of the AGI and cause it to grow up as an ASI-hole unable to have I-Thou relationships... I mean, it's a reasonable hypothesis that an AGI/ASI seeded with concrete I-Thou 2-way kindness will lead to a growing system continuing that sort of kindness... and an AGI system seeded from a situation of "uncompassionate callousness toward current suffering" will lead to a growing system incapable of real sharing and I-Thou compassion...

Certainly this hypothesis cannot be proven definitively, but nor really can it be proved that sacrificing current lives has any particular nontrivial odds of saving future trillions...

When dealing with massive uncertainties like those associated with post-Singularity potentials, it's obviously very easy to place more weight on those futuristic hypotheses that suit one's current belief or convenience...

Anyway, Émile seems to sort of blame longtermism for tech bro callousness, whereas my view is more that tech bro callousness is there for plain old commonplace humans-suck reasons and has grabbed onto longtermism as a way to justify itself and make itself sound fancy... Can we find an example of someone who started out doing good works in practice, then adopted longtermism and started wreaking current evil? I don't know of an example... but it would be interesting if so...

🟨 😴 😡 ❌ 🤮 💩