Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.

Black Monday 2024: Why Did TradFi and Crypto Markets Crash?

Introduction

The cryptocurrency sector had its usual sluggish performance during the summer, exacerbated by a catastrophic one-day collapse that swept across the entire global economy. Let’s analyze that crash and what has happened since.

The crash in August drew comparisons with 1987’s brutal Black Monday Wall Street crash, and took an especially heavy toll on tech stocks and crypto assets, often seen as high-risk, high-reward investments. Markets have recovered in the month since, but talk of a recession still lingers.

Let’s take a look at what happened on the day, what happened since, and why it was so heavy.

The Crash in Broad Strokes

TradFi Markets

S&P 500

The S&P 500 saw a sharp decline, dropping 3% on August 5: its worst single-day performance in two years. This drop extended a losing run that began weeks prior, influenced by fears of a weakening U.S. economy and overvalued tech stocks. Key factors included disappointing earnings reports from major corporations and broader economic concerns.

Nasdaq Composite

The tech-heavy Nasdaq Composite was hit particularly hard, falling more than 6% in early trading before recovering slightly to close down around 3%. This decline was driven by significant losses in major tech stocks, including Nvidia, Apple, and Microsoft. The combined market capitalization of the leading tech companies, often referred to as ‘The Magnificent Seven’, saw a massive dip, losing nearly $1 trillion intraday.

Crypto Markets

This turmoil spilled over into the crypto market, with Bitcoin plunging from around $67,000 to just above $49,000 within days, a fall of roughly 27%. On August 5, the total crypto market capitalization plummeted by $314 billion: one of the most severe sell-offs in recent history. ETH fell 18% and other cryptocurrencies, such as Solana, were even harder hit, with Solana’s price falling over 30% since late July.

Aftermath: The Bounce

The immediate aftermath of the crash saw heightened volatility across all markets. The Cboe Volatility Index (VIX), which measures market volatility, spiked dramatically, peaking above 65 before settling at 38.6 by the end of the trading day, its highest closing level since 2020.

Since the crash we have seen a significant recovery, indicative of a resilient market with real interest. Bitcoin rebounded by over 20%, returning to around $60,000 where it sits now, as investors bought the dip and market confidence slowly returned. The broader crypto market also showed signs of recovery, as recession fears subsided when Japan vowed to not increase interest rates for the remainder of the year.
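The percentages quoted for the drop and the bounce are easy to misread, because a fall and the gain needed to undo it are not symmetric. The short sketch below works through the arithmetic using the rounded Bitcoin prices mentioned in this article; the function names are illustrative and the results are only as precise as those rounded prices.

```python
def pct_change(start: float, end: float) -> float:
    """Percentage change going from start to end (negative means a fall)."""
    return (end - start) / start * 100.0


def gain_to_recover(drop_pct: float) -> float:
    """Percentage gain needed to return to the old level after falling drop_pct percent."""
    remaining = 1.0 - drop_pct / 100.0
    return (1.0 / remaining - 1.0) * 100.0


# Rounded prices quoted in the article, so treat the results as approximate.
peak, trough, rebound = 67_000.0, 49_000.0, 60_000.0

drop = pct_change(peak, trough)        # fall from peak to trough
bounce = pct_change(trough, rebound)   # recovery off the low
needed = gain_to_recover(-drop)        # gain required to get back to the peak

print(f"drop: {drop:.1f}%  bounce: {bounce:.1f}%  needed to recover: {needed:.1f}%")
```

With these inputs the fall works out to roughly 27%, the bounce to roughly 22%, and the gain needed to regain the old high to roughly 37%, which is why a 20%-plus rebound still leaves the price below where it started.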

Investor sentiment was heavily impacted, with the Crypto Fear & Greed Index dropping to “fear” territory.

What Caused The Black Monday 2024 Crash?

Rate Hike in Japan causes Stock Drop Contagion

The Bank of Japan’s unexpected decision to raise interest rates was a major catalyst. This move caused a surge in the yen’s value and forced an unwinding of the yen carry trade, in which investors borrow in yen to invest in higher-yielding assets elsewhere. The rate hike also heightened concerns about global economic stability, contributing to widespread market anxiety.

Japanese stocks declined and the sell-off spread to other markets, creating a domino effect as investors reacted to the global turmoil.
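To see why a stronger yen hurts this trade, here is a minimal sketch of the profit and loss on a yen-funded position. All rates and exchange rates below are hypothetical round numbers chosen for illustration, not market data, and the function name is our own.

```python
def carry_trade_return(jpy_borrow_rate: float, foreign_yield: float,
                       yen_per_dollar_start: float, yen_per_dollar_end: float) -> float:
    """One-year profit per yen borrowed (a fraction; negative means a loss)."""
    dollars = 1.0 / yen_per_dollar_start     # convert the borrowed yen to dollars
    dollars *= 1.0 + foreign_yield           # earn the higher foreign yield
    yen_back = dollars * yen_per_dollar_end  # convert back at the new exchange rate
    yen_owed = 1.0 + jpy_borrow_rate         # repay the loan plus interest
    return yen_back - yen_owed


# Hypothetical inputs: 0.25% yen borrowing cost, 5% yield abroad.
stable_yen = carry_trade_return(0.0025, 0.05, 160.0, 160.0)
stronger_yen = carry_trade_return(0.0025, 0.05, 160.0, 145.0)

print(f"yen unchanged: {stable_yen:+.2%}")
print(f"yen strengthens from 160 to 145 per dollar: {stronger_yen:+.2%}")
```

In this toy setup the trade earns about 4.75% while the exchange rate holds, but loses about 5% once the yen strengthens, because the loan must be repaid in a currency that has become more expensive. Leveraged investors facing that swing tend to sell their foreign assets to repay the yen, which is the unwinding described above.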

US Economy Concerns

In the USA, a few factors combined to bring market growth to an abrupt halt:

- A disappointing jobs report exacerbated fears of an impending recession.

- The Sahm Rule recession indicator (which its creator later said was open to interpretation) was triggered and amplified fears.

- Weak economic data and the Federal Reserve’s anticipated rate cuts in September created a risk-off sentiment among investors: they sold off riskier assets, including stocks and cryptocurrencies.

- The Federal Reserve had kept interest rates high in an effort to combat inflation. This, coupled with signs of an economic slowdown, heightened investor concerns about a potential recession.
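For readers unfamiliar with the Sahm Rule mentioned above, it compares the latest three-month average of the unemployment rate with the lowest three-month average over the previous 12 months, and signals a recession when the gap reaches 0.5 percentage points. The sketch below follows that published definition; the unemployment series is made up for illustration, and treating the comparison window as the 12 months before the current one is our reading of the rule.

```python
def sahm_gap(unemployment: list[float]) -> float:
    """Latest 3-month average minus the lowest 3-month average of the prior 12 months."""
    if len(unemployment) < 15:
        raise ValueError("need at least 15 monthly readings")
    # Three-month moving averages, one for each month from the third onward.
    averages = [sum(unemployment[i - 2:i + 1]) / 3.0
                for i in range(2, len(unemployment))]
    current = averages[-1]
    previous_low = min(averages[-13:-1])  # the 12 averages before the current one
    return current - previous_low


def sahm_triggered(unemployment: list[float], threshold: float = 0.5) -> bool:
    """True when the gap meets the 0.5 percentage point threshold."""
    return sahm_gap(unemployment) >= threshold


# Hypothetical monthly unemployment rates in percent, oldest first.
rates = [3.6, 3.6, 3.6, 3.6, 3.6, 3.6, 3.7, 3.7, 3.7, 3.8, 3.8, 3.9, 4.0, 4.1, 4.3]
gap = sahm_gap(rates)
print(f"gap: {gap:.2f} points, triggered: {sahm_triggered(rates)}")
```

With this invented series the gap comes out just above the threshold, which mirrors how narrow the real-world trigger can be and why its interpretation was debated.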

Global geopolitical tensions

Israel’s assassination of a senior Hamas leader in Iran was just the latest potential spark that could set off a broad regional war in the Middle East. With other conflicts, such as the war in Ukraine, still ongoing, markets are extremely jittery about geopolitics driving prices down even further.

Concerns over AI market bubbles

Investors in AI leaders like Nvidia have been on the ride of their lives over the last couple of years, as they saw absolutely mouthwatering returns on investment. There were growing fears that certain sectors, particularly those related to artificial intelligence, had become overvalued and were due for a correction. This played out as expected.

Crypto sell-offs spook markets

Jump Crypto’s significant selloff of Ethereum (ETH) played a crucial role in the market crash on August 5, 2024. This selloff was part of a broader liquidation strategy by the firm, which involved offloading hundreds of millions of dollars in assets. This large-scale liquidation exacerbated the already fragile market conditions, contributing to the sharp declines in cryptocurrency prices.

The firm, which is behind big crypto plays like Solana’s Firedancer validator client, Wormhole and Pyth Network, was slated on social media for apparently selling over the weekend prior to the crash. Speculation is that it is preparing a war chest for its legal battles with the SEC over its investment in the collapsed Terra Luna project.

The liquidation of Bitcoin holdings by Mt. Gox creditors and rumors of the U.S. government moving its Bitcoin holdings added to the selling pressure.

Portfolio insurance hedging strategies

Similar to the 1987 crash, the use of computer-based models to automatically buy or sell index futures based on market conditions may have accelerated the sell-off.

Conclusion

Crypto was invented at the end of the major 2008 recession, and since then it’s faced off against a pretty smooth TradFi market. This changed with the Covid-19 pandemic, which battered it at first, then made it soar to all-time highs when stimulus money flooded into the space.

Since then, crypto has struggled to deal with any sustained bearish macro-economic conditions, as we’ve seen with its reaction to the Fed’s interest rate hikes. You could argue that the most important dates each month are the CPI number reports and Fed FOMC meetings, where interest rate changes are announced.

Analysts believe we’re not out of the woods, so keep a close eye on markets and de-risk where you can. We have seen the effects of uncertainty on the market when investors respond to economic data and to policy responses from central banks. The potential for further rate cuts by the Federal Reserve will keep affecting market stability in the immediate future, as will ongoing geopolitical tensions.

The dramatic 5 August market crash once again drove home the interconnectedness of global financial systems and the sensitivity of markets to policy changes and economic indicators.

The immediate losses were substantial, but recovery was quick and longer-term impacts depend on subsequent economic developments and policy responses. Investors remain cautious, with many seeking safer assets amid the heightened volatility and uncertainty.

Bitcoin Nashville 2024: Trump and Others Go All In On BTC

Introduction

Bitcoin Nashville 2024, the annual flagship event for the world’s most important digital asset, took place a month back. Organized by Bitcoin Magazine, it again provided a stellar showcase for BTC, loaded with big personalities, bold predictions, and the kind of energy you only get when money and politics collide in the USA.

This year’s conference, held from July 25-27, was one for the ages, and likely the most anticipated edition to date, with good reason. With over 35,000 attendees packed into Music City, it felt like a political rally crossed with a rock concert – all centered around that digital gold we call Bitcoin.

I attended Bitcoin 2023 in Miami last year as a journalist for a well-known crypto publication, which gave me unfettered backstage access to the event. At that time, around mid-May, Bitcoin was gasping for air at around $27k, getting throttled by mean-spirited US regulators. Still, people were optimistic about the year ahead. I listened to Michael Saylor wax lyrical about BTC for four hours in the press room, and was fortunate enough to ask new Bitcoin convert Vivek Ramaswamy a question or two. Also at the conference were Robert F. Kennedy Jr. (RFK), Tulsi Gabbard, and a few lesser-known politicians.

A month after the event, BlackRock announced their Bitcoin spot ETF application – and we were off to the races. Now 12 months later, at this year’s edition in Nashville, ex-president Donald Trump has become an unlikely crypto evangelist and is speaking on the Nakamoto main stage. Coming on the back of the shocking recent attempt on his life, this was impossible to fathom a year ago, especially if you dig up anti-crypto statements Trump made during his term in office.

But there he was, the former (and possible future) president, standing on stage at Bitcoin 2024 in Nashville, promising to create a ‘strategic BTC reserve’ if he wins in November. If you ever had doubts about Bitcoin going mainstream, retire them.

Trump Steals the Show

Trump’s keynote appearance was always going to be the headline act. Love him or hate him, he has fame and knows how to work a crowd. As he took the stage, the energy in the room was electric. Bitcoiners who’d waited over an hour to hear him speak were practically buzzing with anticipation. You can watch his full keynote speech here.

And he delivered, despite some uneven moments. He came out swinging, vowing to fire SEC Chair Gary Gensler “on day one” and end what he called the Biden administration’s “anti-crypto crusade”. It was music to the ears of many in attendance who’ve felt stifled by recent regulatory crackdowns. He promised to free Silk Road martyr Ross Ulbricht: pitch perfect to his libertarian-leaning audience.

But between the familiar self-praising soundbites and vitriol aimed at his opponents, Trump showed that he had done his homework, or had the right crypto people give him the lay of the land. He wasn’t just there to bash the current administration, but ticked all the right boxes that Bitcoin maxis care about.

Trump’s pro-Bitcoin promises

The Donald laid out a surprisingly detailed pro-Bitcoin agenda:

- Establish a “strategic Bitcoin reserve” for the USA.

- Prevent the USA from selling its existing Bitcoin holdings.

- Create a “Bitcoin and crypto presidential advisory council” with members who support the industry.

- Fire SEC Chair Gary Gensler on his first day in office.

- End what he called the Biden administration’s “anti-crypto crusade”.

- Keep 100% of the Bitcoin the U.S. government currently holds or acquires in the future.

- Appoint a new SEC chairman who is more favorable to crypto.

- Shut down the controversial Biden administration-led “Operation Chokepoint 2.0,” which he claimed was aimed at choking crypto businesses out of existence.

- Work to keep Bitcoin jobs and businesses in the United States rather than seeing them flee to other countries.

- Aim to make the United States the lowest-cost energy and electricity provider of any nation on Earth, to support Bitcoin mining.

- Transform the U.S. into the “crypto capital of the world” and the “Bitcoin superpower of the world.”

- Commute the sentence of Ross Ulbricht, the founder of Silk Road.

- Create rules for the crypto industry written by “people who love your industry, not hate your industry.”

- Oppose Central Bank Digital Currencies (CBDCs).

It was surreal hearing a prominent politician shout “Never Sell Your Bitcoin” and compare it to the steel industry of the last century. Whether you’re a Trump fan or not, whether it’s mere political theater or not, it marks a shift in how politicians are approaching crypto.

Not Just a Trump Show

While Trump might have stolen the spotlight, he wasn’t the only political heavy-hitter at the conference. Robert F. Kennedy Jr., then running as a third-party candidate, proposed a reserve of a whopping 4 million Bitcoin. Senator Cynthia Lummis pitched her plan for a 1 million BTC federal stockpile. Even Senator Tim Scott got in on the action, floating the idea of Bitcoin “opportunity zones” for underserved communities.

Bitcoin was the belle of the ball, and every politician there was a-courting. What a time to be alive!

Big Money Takes Notice

It wasn’t all politics, though. The suits showed up in force too. Robert Mitchnick from BlackRock and Chris Kuiper from Fidelity talked about Bitcoin’s investment potential – a reminder of how far we’ve come from the days when crypto was just for rebels and cypherpunks.

And then there was Michael Saylor. The MicroStrategy executive chairman and Bitcoin über-bull didn’t disappoint, predicting Bitcoin could hit $13 million per coin by 2045.

Market Madness

All this bullish talk had an impact. Bitcoin’s price surged close to $70,000 during the conference, reaching levels not seen in weeks. It was a reminder of how much influence these high-profile endorsements can have on the market.

Regulation Fears Take A Backseat

Of course, it wasn’t all moonshots and lambos. The specter of regulation loomed over the proceedings, but less darkly than in previous years. Trump’s promise to fire Gary Gensler got some of the biggest cheers of the conference, reflecting the frustration many feel with the current regulatory environment.

There was also plenty of discussion about Central Bank Digital Currencies (CBDCs), with most speakers (including Trump) coming out strongly against them. The battle lines are being drawn between centralized and decentralized visions of digital money.

Looking Ahead

The Bitcoin Nashville conference could well be a turning point for crypto, thanks to such a high-profile endorsement by someone who is currently the slight favorite to be the next US president. Factor in that Kamala Harris, his Democrat opponent, apparently wanted to attend too, and is making overtures to the crypto community, and it becomes clear that the crypto vote is now strongly coveted by both US parties. Crypto is especially popular with minorities like African Americans and Latinos, meaning politicians can no longer ignore it.

In 2023 I asked several speakers whether Bitcoin and crypto would be a major political issue in the 2024 election. The overwhelming consensus was that it was still years too early for that. Things have moved faster than expected, with the approved ETFs and patronage from the world’s biggest finance firms. It’s not even a question anymore. Bitcoin adoption is accelerating in all arenas, and the intertwined worlds of finance and politics are no exception.

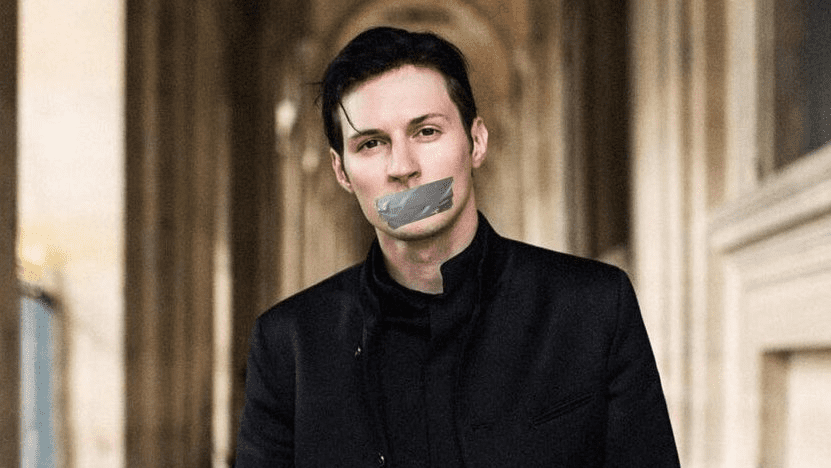

Pavel Durov’s Arrest: Shockwaves Through Crypto, TON, and Data Privacy

The authorities have struck another blow against crypto. Pavel Durov, the mysterious 39-year-old Russian-born billionaire and founder of the popular messaging app Telegram, was arrested in Paris on 24 August, 2024.

This high-profile collaring sent ripples through the tech industry, particularly affecting the cryptocurrency markets due to Durov’s relationship with The Open Network (TON) and raising significant concerns about data privacy in the age of Web3. Let’s delve into the details of this incident and its far-reaching consequences.

The Arrest: Charges and Aftermath

French authorities, specifically the Office for the Protection of Minors on the Internet (OFMIN), issued an arrest warrant for Durov over a range of serious accusations. The charges arise from activities on Telegram including:

- Organized crime

- Drug trafficking

- Fraud

- Cyberbullying

- Promotion of terrorism on Telegram

The prosecutors will need to show that Telegram failed to adequately moderate its content and curb illegal activities by users and groups on the platform.

Durov was held in police and judicial custody for four days before being released on bail. The incident immediately impacted the crypto markets, with Toncoin (TON), a cryptocurrency closely associated with Telegram, experiencing a 20% drop in value within 24 hours of the news. This wiped out approximately $2.7 billion in market value.

Telegram and TON’s Symbiosis

To understand the full implications of Durov’s arrest, it’s crucial to examine the relationship between Telegram and the TON blockchain.

The TON Blockchain

The Open Network (TON) is a blockchain project initially developed by Telegram in 2018. Telegram abandoned it when US regulators came after the company for allegedly selling an unregistered security and forced it to return $1.2 billion raised in its Initial Coin Offering (ICO).

However, the project was revived by the crypto community in 2021 (crypto folks love to use the term CTO, or ‘community takeover’) and has maintained close ties with Telegram ever since. It has become one of the hottest layer-1 chains in 2024 and has seen various Tap-to-Play games like NotCoin and Hamster Kombat attract hundreds of millions of users thanks to the ability to earn crypto from airdrops and points rewards.

Alex Thorn, Galaxy Digital’s head of research, emphasized in a report that the value of the TON blockchain and its native token, toncoin (TON), is “substantially dependent” on its ties to Telegram. The downside of being close with Telegram? When its founder Pavel Durov gets arrested, Toncoin immediately takes a tumble on the market.

Market Impact

The arrest triggered significant market activity:

- Toncoin lost $2.7 billion in market value, dropping 20% within 24 hours.

- In the 24 hours following the news, $8.02 million in liquidations occurred across the crypto market – $3.82 million from long positions and $4.20 million from short positions.

- Traders bet almost $70 million, causing TON’s open interest in future markets to jump 32%.

- As of 30 August, 2024, Toncoin’s price stood at $5.35, showing signs of resistance.

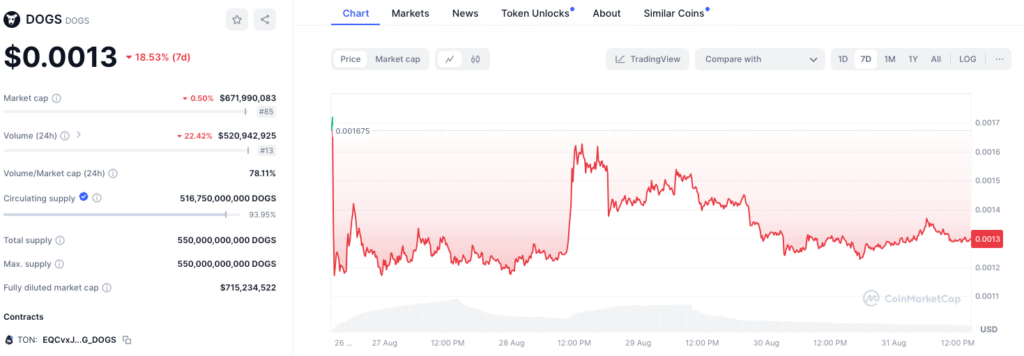
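The figures above can be cross-checked with a little arithmetic. The sketch below assumes the $2.7 billion loss corresponds exactly to the 20% drop, which is only approximately true since both numbers are rounded; the variable and function names are illustrative.

```python
def implied_value_before(loss: float, drop_fraction: float) -> float:
    """Starting value implied by an absolute loss and the fractional drop it represents."""
    return loss / drop_fraction


loss_usd = 2.7e9   # market value wiped out, as quoted above
drop = 0.20        # 20% fall within 24 hours

cap_before = implied_value_before(loss_usd, drop)
cap_after = cap_before - loss_usd

# Long and short liquidations quoted above, summed.
liquidations_usd = 3.82e6 + 4.20e6

print(f"implied market cap before: ${cap_before / 1e9:.1f}B")
print(f"implied market cap after:  ${cap_after / 1e9:.1f}B")
print(f"total liquidations:        ${liquidations_usd / 1e6:.2f}M")
```

On these rounded inputs, Toncoin’s market cap would have been about $13.5 billion before the news and about $10.8 billion afterwards.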

Interestingly, not all TON-related tokens suffered. The DOGS token, a memecoin launched on the TON blockchain with much fanfare and a big airdrop, saw a 23% surge, reaching a market cap of $789 million. This demonstrates the complex and sometimes counterintuitive nature of crypto markets in response to major events. It was also the perfect vehicle for crypto natives to show solidarity with the #FreePavel campaign.

Web3 and Telegram’s Data Privacy Concerns

Durov’s arrest shines a light on the struggle between privacy-focused platforms and government efforts to combat illegal activities online. This tension is particularly relevant in the context of Web3, where data privacy is a basic principle.

Telegram’s Privacy Features

Telegram, with its estimated 800 million users, has long been criticized for its perceived lack of moderation. However, its privacy features, including optional end-to-end encrypted Secret Chats, and its commitment to user privacy have made it a popular choice for those seeking to avoid surveillance, including both legitimate users and malicious actors. Durov has been a fierce advocate for censorship resistance going all the way back to his VKontakte days, and has repeatedly appeared willing to die on that hill, including when Russian authorities put pressure on him during the 2012 Russian protests.

The Balancing Act

Privacy-focused platforms must strike a balance between protecting user privacy and complying with local laws in 2024, especially if they’re non-US. Pavel’s arrest shows what happens when you misjudge this delicate legal balancing act.

It’s increasingly challenging to operate a global service under varying national regulations, particularly in light of the European Union’s Digital Services Act (DSA) and similar legislation worldwide.

Potential Shift to Decentralized Alternatives?

With Telegram’s network distribution and TON’s blockchain capabilities, can we expect to see more interest in truly decentralized alternatives after Durov’s arrest? Very likely. These alternatives can better withstand legal and regulatory pressures.

The crypto community, which relies heavily on privacy-preserving technologies and censorship-resistant communication channels, views this encroachment on freedom of speech and freedom of finances as very dystopian. Its members may turn to blockchain-based messaging solutions that are inherently more resistant to censorship and surveillance.

The TON Blockchain Outage

In a case of bad timing, the TON blockchain went down for nearly six hours on 29 August, 2024. This disruption was caused by a surge in network traffic, possibly linked to the recent airdrop of the DOGS memecoin.

The TON blockchain team explained on Twitter: “Several validators are unable to clean the database of old transactions, which has led to losing the consensus.” They assured users that “no cryptocurrency assets will be lost due to the issue”.

This outage, coming on the heels of Durov’s arrest, raises questions about the resilience of blockchain networks closely associated with centralized entities like Telegram. Maybe they’re not decentralized enough? On the other hand, outages are almost a rite of passage for most blockchains as they scale, and top chains like Solana are notorious for it.

The Future of Privacy Tech and Crypto

The outcome of Durov’s case could set important precedents for how aggressively authorities pursue tech leaders over content moderation issues, and the responsibilities of platforms under regulatory frameworks like the European Union’s Digital Services Act (DSA). It may also influence debates about encryption, backdoors, and the role of technology companies in moderating content.

Potential Outcomes

- Increased scrutiny on how cryptocurrency and related technologies are discussed and promoted on messaging platforms.

- New compliance challenges for crypto projects and crypto exchanges.

- Innovation in decentralized communication tools built on blockchain technology.

- A shift in how encrypted communication platforms are understood and regulated.

Conclusion: A Web3 Watershed?

Pavel Durov’s arrest is an important beat in the ongoing story between privacy-preserving technologies, blockchain ecosystems, and regulators. As the case unfolds, it will spark intense debates about the proper balance between law enforcement, user privacy, and the role of technology companies in moderating content.

Durov’s release on bail provides some comfort, but he’s still a regulatory target. And if the 120th richest person in the world can get in trouble for going too far with speech that offends the powers that be, anyone can.

Sui: Crypto’s New Object of Affection

Introduction

In bearish market conditions, one blockchain has stood out for its recent upward price action and news flow. It is finally beginning to live up to its promise as a unique and powerful decentralized network that can compete with older chains like Ethereum, Solana and Cardano.

Sui is a next-generation layer-1 blockchain platform that’s turning Web3 heads with its object-based design that enables a promise of more speed, scalability, and user-friendliness.

Sui is headquartered in San Francisco and developed by the bright minds at Mysten Labs, a team founded by former employees of Facebook and its doomed Diem project. It aims to make Web3 technology more accessible and user-friendly.

What is Sui?

In essence, Sui is a layer-1 blockchain with a novel approach to transaction processing. Launched in May 2023, it ultimately aims to be as easy to use as your favorite social media app.

Its name is derived from the Japanese word for ‘water’, symbolizing its adaptability and ease of use in the Web3 space.

Key Challenges Sui Aims to Solve

Sui is addressing several critical issues in blockchain:

- Scalability: Sui’s architecture is designed to scale horizontally, maintaining performance as network demand grows.

- Transaction fees: Sui’s efficient processing aims to keep fees low and predictable, even during peak usage.

- User experience: Sui focuses on simplifying the user interface to make blockchain technology more accessible.

- Programmability: Through the Move language, Sui offers enhanced flexibility for developers.

- Efficiency of data handling: Sui’s object-centric model allows unrelated transactions to be processed in parallel, significantly improving efficiency.

Key Features

Sui boasts several innovative features:

- Horizontal scalability: Network capacity can expand by adding more nodes.

- Low-latency transactions: Processes transactions with minimal delay.

- Object-centric data model: Enables parallel processing of independent transactions.

- Move programming language: Enhances security and simplifies digital asset management.

- Byzantine fault-tolerant proof-of-stake consensus: Ensures network security and efficiency.

The Move Programming Language

Move, Sui’s programming language, is designed specifically for secure and flexible smart contract development in blockchain environments, with a focus on safely managing resources. It’s also used by Aptos.

Key features of Move include:

- Resource-oriented programming

- Static type system

- Formal verification capabilities

- Efficient module system

By treating assets as first-class citizens, Move allows developers to implement complex payment logic with a high degree of safety and efficiency. This approach significantly reduces the risk of common vulnerabilities found in other blockchain systems.

The combination of these features makes Move particularly well-suited for blockchain environments:

- Security: The resource-oriented approach, and the static typing, help prevent common vulnerabilities like reëntrancy attacks or double-spending.

- Efficiency: Move’s design allows for efficient execution, crucial for blockchain systems where computational resources are limited.

- Flexibility: Despite its focus on safety, Move remains flexible enough to implement complex smart contract logic.

In the context of Sui, developers can create complex, interrelated objects that mirror real-world assets and relationships, all while benefiting from Move’s strong safety guarantees.

Developers building a decentralized finance (DeFi) application on Sui could define custom tokens using Move, create complex financial instruments, and implement sophisticated trading logic, all with a high degree of safety and efficiency. The ability to mathematically verify the correctness of critical functions provides extra peace of mind.

How SUI Works

Sui’s key innovation is its object-centric model. Instead of the usual account-based system, Sui treats everything as objects. Your coins, NFTs, and even the programs running on Sui are all objects.

In Sui’s object model, there are three types of object ownership:

- Owned by an address: Objects like coins or NFTs owned by a specific user address.

- Owned by another object: For example, an NFT that is part of a larger collection.

- Shared: Objects that can be accessed and modified by multiple users.

This model enables Sui to process independent transactions concurrently, increasing throughput and reducing latency. By executing unrelated transactions in parallel, Sui achieves higher transaction processing speeds than traditional blockchain architectures.
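The idea that transactions touching different objects can run side by side can be sketched in a few lines. The code below is a toy illustration only, not Sui’s actual scheduler (which, among other things, treats owned and shared objects differently): it greedily groups transactions into batches so that no two transactions in a batch touch the same object, while conflicting transactions keep their original order. The `Tx` class, the object IDs and the function name are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    name: str
    objects: frozenset  # IDs of the objects this transaction reads or writes


def schedule_batches(txs: list) -> list:
    """Group transactions into batches whose members touch disjoint objects.

    Transactions inside one batch could run in parallel; batches run in sequence.
    """
    batches = []  # each entry: (set of object IDs touched so far, list of Tx)
    for tx in txs:
        # Find the last batch that conflicts with this transaction.
        last_conflict = -1
        for i, (touched, _) in enumerate(batches):
            if touched & tx.objects:
                last_conflict = i
        # Place it right after that batch so conflicting transactions stay ordered.
        target = last_conflict + 1
        if target == len(batches):
            batches.append((set(), []))
        batches[target][0].update(tx.objects)
        batches[target][1].append(tx)
    return [members for _, members in batches]


txs = [
    Tx("alice_pays_bob", frozenset({"coin_A"})),
    Tx("carol_mints_nft", frozenset({"nft_C"})),
    Tx("alice_pays_dave", frozenset({"coin_A"})),
    Tx("swap_on_dex", frozenset({"coin_E", "pool"})),
    Tx("another_swap", frozenset({"coin_F", "pool"})),
]

plan = [[tx.name for tx in batch] for batch in schedule_batches(txs)]
for number, names in enumerate(plan, start=1):
    print(f"batch {number}: {names}")
```

Here the first payment, the NFT mint and the first swap share no objects, so they land in the same batch. The second payment reuses `coin_A` and the second swap reuses `pool`, so both wait for the next batch. An account-based chain that orders every transaction against one global state cannot exploit this independence as directly.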

The object-centric model also simplifies the development of complex applications, particularly those involving digital assets. Developers can create and manage assets as distinct objects with their own properties and behaviors, leading to more intuitive and efficient smart contract design.

SUI Tokenomics

The native token of the Sui network, SUI, serves several key functions:

- Gas fees: Used to pay for transaction fees on the network.

- Staking: Validators and delegators can stake SUI to participate in network security and earn rewards.

- Governance: SUI token holders can participate in on-chain governance decisions.

The total supply of SUI tokens is capped at 10 billion. This fixed supply model is designed to create scarcity and potentially drive value as network usage increases. SUI tokens have a distribution and vesting schedule designed to incentivize long-term participation in the network and align the interests of developers, users, and investors.

What Drove the Recent SUI Price Surge?

After suffering with all other coins during Black Monday’s market crash, Sui recently experienced significant growth, driven by two key events:

- Mysticeti Upgrade: On August 6, Sui’s mainnet was upgraded to Mysticeti, increasing its theoretical transaction processing capacity to 297,000 TPS. This upgrade demonstrated Sui’s commitment to continuous improvement and its potential to handle large-scale adoption.

- Grayscale Trust: On August 7, Grayscale introduced its SUI Trust for accredited investors, potentially increasing institutional interest in the token. This development signaled growing recognition of Sui in the traditional finance sector.

Bridging the Web2 to Web3 Gap

Sui is on a mission to make blockchain technology more accessible to the average person. Here’s how:

- Easy wallet creation: Use your Gmail or Face ID – no need to remember another password.

- No more seed phrases: This addresses a pain point for crypto users.

- QR code transactions: As easy as scanning your boarding pass.

- User-friendly interface: If you can use Facebook, you can use Sui.

SUI Ecosystem Overview

Despite its relative novelty, Sui is building a diverse ecosystem. Notable projects include:

- Ocean DEX: A hybrid central limit order book and automated market maker decentralized exchange.

- Ethos Wallet: A web3 wallet for Sui with simple email registration.

- SuiNS: The Sui Name Service, providing human-readable addresses for Sui wallets.

- Navi Protocol: A money market protocol contributing to Sui’s recent increase in TVL (total value locked).

- Newer protocols like Atoma (artificial intelligence-focused) and Walrus (decentralized storage).

Cross-chain bridges like Axelar Network and Wormhole interoperate with other major blockchains. Users can transfer assets across these bridges that link Sui to other blockchain networks. This brings more liquidity to Sui-based tokens, and expands the set of use-cases and market size for Sui-based applications.

The growth of Sui’s ecosystem is crucial for its long-term success. It currently has a total value locked (TVL) of just under $600 million according to DeFiLlama. Its top tokens command comparatively small market caps: the biggest sits at $70 million and the 20th has a fully diluted cap of under $1 million, if CoinMarketCap’s Sui Ecosystem data is accurate.

A diverse range of applications and services built on Sui is therefore crucial if it wants to grow and attract the best developers and investors.

Sui vs Solana: A Concise Comparison

Sui and Solana are both high-performance blockchain platforms, but they differ significantly in their approach:

| | Sui | Solana |
| --- | --- | --- |
| Data model | Object-centric | Account-based |
| Language | Move | Rust |
| Execution and consensus | Parallel processing of independent transactions and native sharding | Global state and Proof of History consensus |

While Sui’s architecture potentially allows for greater scalability through horizontal expansion, Solana focuses on optimizing single-node performance. Sui offers sub-second finality for single-owner transactions, whereas Solana provides this for all transactions.

Is Sui a ‘Solana killer’?

It’s premature to make such a claim. While Sui’s innovative approach shows promise, Solana has an established ecosystem, massive user numbers (thanks in no small part to memecoins) and a proven track record. Rather than displacing Solana, Sui may carve out its own niche, particularly in use-cases that benefit from its object-centric model and Move language capabilities. The blockchain space is vast, with room for multiple successful platforms serving different needs.

Conclusion

Sui is working hard to solve massive challenges in the blockchain industry. Its innovative features, growing ecosystem, and focus on user experience position it as a noteworthy player in the layer-1 blockchain space.

As Sui continues to develop and attract projects, it has the potential to significantly impact the future of decentralized applications and Web3 technologies.

However, like any new technology, Sui faces challenges and competition. Its success will depend on its ability to deliver on its promises of high performance and scalability, attract and retain developers, and build a robust and diverse ecosystem of applications. This is a big task, but it’s one that all major blockchain networks have had to undertake.


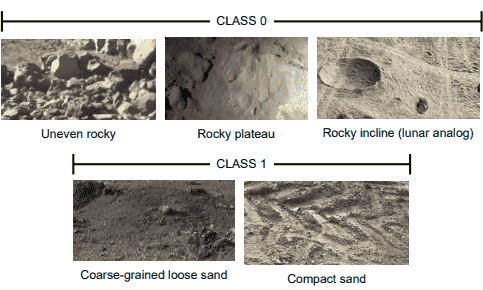

New Age of Terrain Classification: Proprioceptive Sensors and Uncertainty Quantification

The challenges of Terrain Classification

Terrain classification (TC) in rover exploration has traditionally relied on computer vision. However, the dynamic conditions of space—such as fluctuating illumination and atmospheric changes—can compromise visual sensors, affecting classification reliability. To address this, researchers are exploring the use of proprioceptive sensors, like inertial measurement units (IMUs) and motor joint states, to classify terrain. This approach aims to enhance the robustness and accuracy of rovers during extra-planetary missions by training Neural Networks (NNs) with proprioceptive data and integrating Uncertainty Quantification (UQ) techniques.

Innovations in Sensor Technology

Traditional TC research has focused on visual sensors like cameras and LADAR, but these are vulnerable to environmental conditions. Proprioceptive sensors such as IMUs and torque sensors, which measure the rover’s internal state, offer a more robust alternative. Recent studies have used these sensors with machine learning methods, showing promising results. However, they lacked quantifiable confidence in their predictions. To address this, probabilistic UQ methods such as Monte Carlo Dropout, DropConnect, and Flipout have been integrated into deep learning (DL) models, enhancing their reliability for critical tasks.

Experimental Platform: The AsguardIV Rover

The AsguardIV rover, a hybrid leg-wheel rover designed for unstructured environments, was used to collect data. It features rimless wheels for better obstacle traversal and energy efficiency. Data was collected from various terrains mimicking lunar surfaces, including compact and loose sand, and rocky areas.

Figure: Data logs were collected from trials executed across various locations (Credit: De Lucas Álvarez et al., “Terrain Classification Enhanced With Uncertainty for Space Exploration Robots From Proprioceptive Data.”)

Data Collection and Processing

- Sensors: IMU (6-feature vector), Joint data (12-feature vector), and fused IMU-joint data (18-feature vector).

- Data Rate: 100 Hz, approximately six hours of data.

- Splits: 70% training, 30% testing, with further validation splits.

- Labelling: Terrains labelled for binary classification: rocky terrains as Class 0 (uneven and undeformable) and sand terrains as Class 1 (even and deformable).
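The figures in this list can be turned into a rough picture of the dataset's size. The sketch below is back-of-the-envelope arithmetic, not the authors' pipeline. It treats "approximately six hours" as exactly six, applies the 70/30 split at the level of individual time steps (the paper may split by sequence or trial instead), and the `fuse` helper and `TERRAIN_LABELS` mapping are illustrative names.

```python
SAMPLE_RATE_HZ = 100        # from the article
HOURS = 6                   # "approximately six hours", taken as exact here
IMU_FEATURES = 6
JOINT_FEATURES = 12

# Total number of time steps recorded at 100 Hz over six hours.
total_steps = SAMPLE_RATE_HZ * HOURS * 3600

# The fused vector is the IMU and joint vectors placed side by side.
fused_features = IMU_FEATURES + JOINT_FEATURES

# 70/30 split, done in integer arithmetic to avoid float rounding.
train_steps = total_steps * 70 // 100
test_steps = total_steps - train_steps

# Binary labels as described: rocky = Class 0, sand = Class 1.
TERRAIN_LABELS = {"rocky": 0, "sand": 1}

def fuse(imu_row, joint_row):
    """Concatenate one IMU reading and one joint reading into a fused row."""
    return list(imu_row) + list(joint_row)

print(total_steps, fused_features, train_steps, test_steps)
```

Under these assumptions the recording amounts to roughly 2.16 million time steps, each described by up to 18 features.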

Advanced Sequence Generation

The researchers have used the following two methods:

- Sliding Window: Extracting subsequences with predefined widths and steps.

- Sequence Subsampling: Selecting every f-th time step to balance sample number and length.
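Both methods are simple to express in code. The sketch below is a minimal illustration on a plain Python list. The function names and the example values for `width`, `step`, and `f` are assumptions for demonstration, not the settings used in the paper.

```python
from typing import List, Sequence

def sliding_windows(series: Sequence, width: int, step: int) -> List[list]:
    """Extract subsequences of a fixed width, moving forward by `step` each time."""
    return [
        list(series[start:start + width])
        for start in range(0, len(series) - width + 1, step)
    ]

def subsample(series: Sequence, f: int) -> list:
    """Keep every f-th time step, starting from the first."""
    return list(series[::f])

signal = list(range(10))          # stand-in for 10 sensor readings
windows = sliding_windows(signal, width=4, step=3)
thinned = subsample(signal, f=2)

print(windows)   # [[0, 1, 2, 3], [3, 4, 5, 6], [6, 7, 8, 9]]
print(thinned)   # [0, 2, 4, 6, 8]
```

A smaller step yields more (overlapping) training samples, while a larger `f` shortens each sequence, so the two parameters trade sample count against sequence length.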

High-Performance Neural Networks

Three NN architectures—CNN, LSTM, and CNN-LSTM—were optimized using Bayesian Optimization with Hyperband (BOHB).

Uncertainty Quantification Techniques

The researchers integrated three UQ techniques to enhance reliability:

- Monte Carlo Dropout (MC Dropout): Drops activations at inference time.

- DropConnect: Drops weights at inference time.

- Flipout: Uses variational inference for weight approximation.
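As an illustration of the first technique, the sketch below applies Monte Carlo Dropout to a tiny hand-written network. It is not the authors' CNN or LSTM model: the weights, the input, the dropout rate, and the function names are all made up for demonstration. The point is the procedure: dropout stays switched on at inference, the same input is passed through the network many times, and the spread of the results is summarized as predictive entropy.

```python
import math
import random

def forward(x, w_hidden, w_out, drop_p, rng):
    """One stochastic forward pass of a toy one-hidden-layer binary classifier."""
    # Hidden layer with ReLU activation.
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    # Dropout stays active at inference (inverted-dropout scaling).
    hidden = [0.0 if rng.random() < drop_p else h / (1.0 - drop_p) for h in hidden]
    logit = sum(w * h for w, h in zip(w_out, hidden))
    return 1.0 / (1.0 + math.exp(-logit))   # probability of Class 1 (sand)

def mc_dropout_predict(x, w_hidden, w_out, drop_p=0.5, passes=100, seed=0):
    """Average many stochastic passes and report binary predictive entropy."""
    rng = random.Random(seed)
    probs = [forward(x, w_hidden, w_out, drop_p, rng) for _ in range(passes)]
    mean_p = sum(probs) / passes
    eps = 1e-12  # guards against log(0)
    entropy = -(mean_p * math.log(mean_p + eps)
                + (1.0 - mean_p) * math.log(1.0 - mean_p + eps))
    return mean_p, entropy

# Hypothetical weights and input, chosen only so the example runs.
W_HIDDEN = [[0.8, -0.4], [-0.3, 0.9], [0.5, 0.5]]
W_OUT = [1.2, -0.7, 0.6]
x = [1.0, -0.5]

mean_p, entropy = mc_dropout_predict(x, W_HIDDEN, W_OUT)
print(f"P(sand) = {mean_p:.3f}, entropy = {entropy:.3f}")
```

An entropy near zero indicates a confident prediction, while a value near ln 2 (about 0.69) means the model is close to undecided between the two classes. DropConnect follows the same sampling loop but zeroes individual weights rather than activations.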

Compelling Results

All networks were trained using Nvidia GeForce RTX 3070 and RTX 2070 graphics cards, employing the Adam optimization algorithm. The Bayesian optimizer had a maximum budget of 50 epochs. 216 BOHB studies were conducted, resulting in 6,480 full-budget candidates.

The results demonstrate the advantage of integrating UQ techniques into TC models for exploration rovers: they ensure high-confidence outputs with low uncertainty, which is crucial for navigation safety in space missions. UQ-enhanced models, especially those using Monte Carlo Dropout, show superior performance and trustworthiness.

Future work will involve online testing in analogous scenarios and extending classification to more terrain types. The authors also aim to use multi-objective optimization techniques, incorporating entropy to generate robust and balanced models that optimise both performance and uncertainty.

Reference

Mariela De Lucas Álvarez et al., “Terrain Classification Enhanced With Uncertainty for Space Exploration Robots From Proprioceptive Data,” July 3, 2024, https://export.arxiv.org/abs/2407.03241.


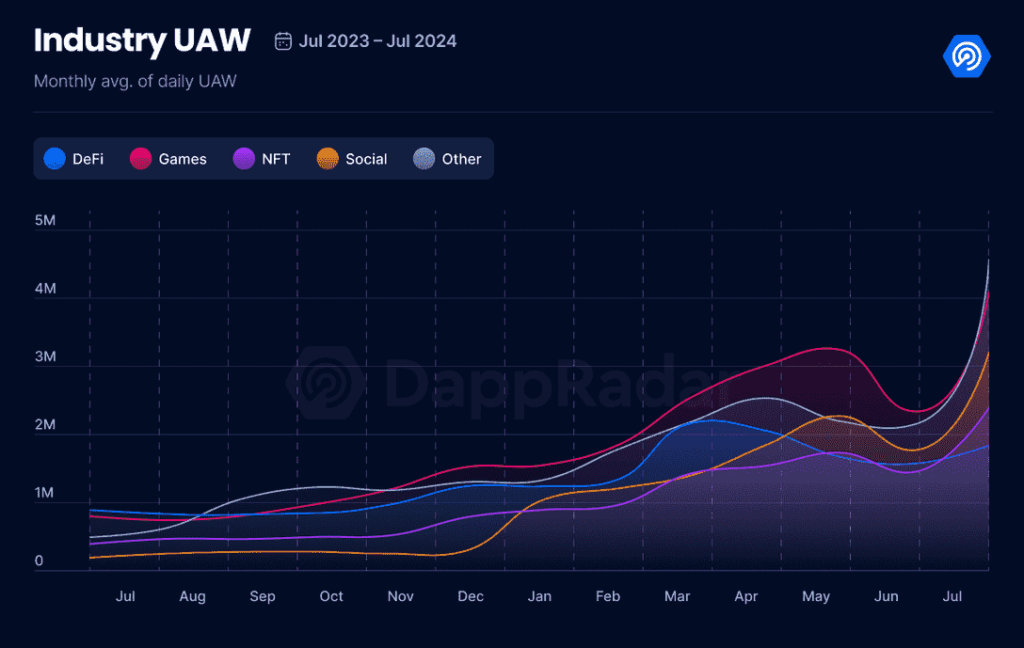

Web3 Wars: GameFi Dapps Overtaken By Crypto AI Growth, What’s Next?

TL;DR

- AI Dapps now account for 28% of blockchain activity, surpassing GameFi Dapps at 26%.

- GameFi is still growing: 4 million daily active wallets, up 79% month-over-month.

- Q2 2024 saw $1.1 billion investment in blockchain gaming; July dropped significantly.

Introduction

Blockchain’s decentralized application (Dapp) industry has witnessed a significant shift in recent months, with artificial intelligence (AI) Dapps surpassing crypto gaming (GameFi) Dapps as the leading category for the very first time.

This development, highlighted in the July 2024 DappRadar Games Report, shows that while gaming remains a robust and growing sector, AI-powered Dapps are the hotter tech trend and hold a bit more mindshare in the space right now.

AI Dapps Take the Lead

- In July 2024, the Dapp industry maintained its impressive milestone of over 15 million daily unique active wallets (dUAW) interacting with blockchain applications.

- However, the most striking development was the rise of DappRadar’s ‘Other’ category, which primarily consists of AI-based Dapps, to the top position with a 28% share of user activity.

- Gaming Dapps still demonstrated significant growth. The sector now represents 26% of Dapp activity, engaging 4 million dUAW, a remarkable 79% increase from the previous month.
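The percentages and wallet counts above can be cross-checked with some simple arithmetic. The sketch below uses only the figures quoted in this list. It treats "over 15 million" as exactly 15 million, so the results are lower bounds and rough estimates rather than figures from the report.

```python
total_duaw = 15_000_000        # "over 15 million" dUAW, taken as a floor
ai_share = 0.28
gaming_share = 0.26
gaming_duaw_reported = 4_000_000
month_over_month_growth = 0.79

# Wallet counts implied by each category's share of total activity.
ai_duaw_estimate = round(total_duaw * ai_share)
gaming_duaw_estimate = round(total_duaw * gaming_share)

# A 79% increase means this month = last month * 1.79.
gaming_duaw_previous = gaming_duaw_reported / (1 + month_over_month_growth)

print(f"AI Dapps: at least ~{ai_duaw_estimate / 1e6:.1f}M dUAW")
print(f"Gaming from share: at least ~{gaming_duaw_estimate / 1e6:.1f}M dUAW")
print(f"Gaming, previous month (implied): ~{gaming_duaw_previous / 1e6:.2f}M dUAW")
```

The 26% share works out to about 3.9 million wallets on a 15 million base, consistent with the reported 4 million. On the same assumptions, the 79% growth figure implies roughly 2.2 million gaming dUAW in the previous month.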

The rise of AI Dapps reflects a broader trend in the tech industry, where artificial intelligence and machine learning are being integrated into sectors from finance to entertainment. In the blockchain space, AI applications use decentralized networks to offer services ranging from decentralized AI computation, by projects like Render, to AI-driven data analysis and prediction markets.

Gaming Sector Plays On

Despite being overtaken by AI Dapps, the blockchain gaming sector continues to show strength and innovation. The report highlights several key developments and trends:

1. Blockchain Diversity: Ronin remains the leading blockchain for gaming activity, driven by popular titles like Pixels and Lumiterra. Other networks like opBNB, Oasys, NEAR, and Immutable zkEVM are also seeing significant engagement, showcasing the diverse ecosystem of blockchain gaming platforms.

2. Emerging Titles: New games like SERAPH: In the Darkness, which launched in mid-July, have quickly gained traction, indicating ongoing innovation and user interest in fresh gaming experiences.

3. NFT Trading: Despite a general decline in metaverse-based NFT collections, gaming NFTs continue to see active trading. Gods Unchained and Axie Infinity remain the most traded gaming NFT collections, while newer entries like Guild of Guardians are gaining popularity.

4. Cross-Platform Integration: The success of games published on major platforms like the App Store and Epic Games Store shows the growing acceptance of blockchain and NFT elements in mainstream gaming channels.

Investment Landscape

The investment climate for blockchain gaming and metaverse projects is a mixed picture. July 2024 saw the lowest investment level since Q3 2020 – just $23 million across three deals – but the preceding quarter (Q2 2024) was notably strong.

Q2 marked the best quarter for blockchain gaming investments since Q3 2022, with $1.1 billion raised – a 314% increase from the previous quarter.

Key investments in Q2 included:

1. a16z Gaming Fund: Raised $600 million for game studios, infrastructure, and the Games x Consumer ecosystem.

2. Bitkraft Venture Fund: Secured $275 million for early-stage investments in gaming and interactive media companies.

3. Metaverse Projects: Significant investments in Baby Shark Universe ($34 million) and The Sandbox ($20 million) demonstrate ongoing interest in metaverse development.

These investments, focused on infrastructure and foundational development, suggest a strategic approach to enriching the Web3 gaming ecosystem. The contrast between the robust Q2 and the subdued July may indicate a temporary summer lull rather than a long-term trend.
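A few quick calculations put these investment figures in proportion. The sketch below uses only the numbers quoted in this section, in millions of dollars. It assumes that a "314% increase" means Q2 was 4.14 times Q1, and that the four named raises are all counted inside the $1.1 billion total. Neither assumption is confirmed by the report.

```python
q2_total = 1100            # $1.1 billion, in millions
q2_increase = 3.14         # "314% increase" over the previous quarter
july_total = 23            # in millions, across three deals

# Named Q2 raises from the list above, in millions of dollars.
named_deals = {
    "a16z Gaming Fund": 600,
    "Bitkraft Venture Fund": 275,
    "Baby Shark Universe": 34,
    "The Sandbox": 20,
}

# If Q2 = Q1 * (1 + 3.14), the implied Q1 total follows directly.
implied_q1 = q2_total / (1 + q2_increase)

named_total = sum(named_deals.values())
named_share = named_total / q2_total

# Compare July with an average Q2 month.
q2_monthly_average = q2_total / 3
july_vs_q2_month = july_total / q2_monthly_average

print(f"Implied Q1 total: ~${implied_q1:.0f}M")
print(f"Named deals: ${named_total}M ({named_share:.0%} of Q2)")
print(f"July vs. average Q2 month: {july_vs_q2_month:.1%}")
```

On these assumptions, Q1 comes out at roughly $266 million, the four named raises make up about 84% of the Q2 total, and July's $23 million is around 6% of an average Q2 month. That concentration in two large funds helps explain why a single quiet month can look so stark by comparison.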

GameFi Q2 Industry Snapshot and Analysis

1. User Engagement: Blockchain games remain strong in the Web3 industry, accounting for 26% of all Dapp activity and attracting 2.8 million active wallets daily. This persistent engagement suggests that gaming remains a key driver for Web3 adoption.

2. Blockchain Performance: Ronin has reclaimed the top spot among gaming blockchains, with a 100% increase to 1.9 million dUAW. This indicates the Ronin platform has strong user appeal. Newer platforms like Immutable zkEVM and opBNB are growing rapidly.

3. Game Performance: Pixels leads the gaming landscape with 48 million unique wallets this quarter, demonstrating the enduring appeal of well-established titles. The success of newer entries like Guild of Guardians, especially following their mobile launch, shows the potential for growth through strategic platform expansions.

4. Metaverse Developments: Metaverse-based NFT collections saw a 29% decline in trading volume and a 21% drop in sales. Projects like Animoca Brands’ Mocaverse continue to dominate, capturing half of the trading volume. This suggests that while the metaverse concept may be experiencing reduced hype, established projects are maintaining their market positions.

5. Technological Advancements: The industry continues to focus on seamless gameplay experiences, investing in infrastructure and cross-chain compatibility. This focus on user experience is crucial to acquire and retain users.

6. Friend or Foe? The rise of AI Dapps presents both a challenge and an opportunity to GameFi. Gaming developers may need to integrate AI elements to stay competitive, potentially leading to more sophisticated and engaging gameplay experiences.

Looking Ahead

Despite the challenges, the blockchain gaming industry shows promising signs for future growth, especially if the 2024/2025 crypto bull run gets back on track.

New game launches are succeeding, and many blockchain platforms are gaining traction. These trends show continuing innovation in tech, gameplay, tokenomics, and user engagement strategies. There is potential crossover with traditional gaming platforms (as we’ve seen with Gunzilla and PS5), and interest from established tech companies. Broader industry collaborations will help the GameFi industry scale beyond what we’ve seen.

Significant investments in gaming funds and infrastructure projects continue to lay the groundwork for more sophisticated and scalable blockchain gaming experiences. Also, the rise of AI Dapps may lead to innovative hybrid models, combining elements of AI and gaming to create new, engaging experiences for users.

The rise of AI Dapps presents both a challenge and an opportunity for the GameFi sector to evolve and integrate new technologies. The capital is there. Substantial investments in Web3 gaming, infrastructure, emerging platforms, and cross-industry collaborations are laying the foundations for an exciting next phase of blockchain gaming evolution.
