Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.

Steal This Singularity Part One: The Yippies Started The Digital Revolution

Every fourth one of these Mindplex articles will be an annotated and edited excerpt from my multipart piece titled Steal This Singularity, originally written some time in 2008. This will continue until I get to the end of the piece or the Singularity comes. Annotation is in gray italics.

Part One: Steal This Singularity

1: The notion that the current and future extreme technological society should not be dominated by Big Capital, Authoritarian States or the combination thereof. Also related, a play on the title of a book by 1960s counterculture radical Abbie Hoffman. Abbie may be an obscure figure to today’s young people. Let’s start to fix that here.

2: The notion that in our robotized future, human beings shouldn’t behave robotically. The response to AI isn’t to blow up or hack down AIs. Become so unique and original that no program, however sophisticated, can perform you. Let AI thrive. You have less clichéd paths to follow!

A few years ago, I proposed Big Dada as a response to Big Data. Big Data is the corporate/state/organization tool for exploitation and control, and/or for making effective policy for human benefit. (Life’s rich in ambiguity.)

With Big Dada, I suggested confusing the data by liking what you hate; hating what you like; by lying; by engaging serious issues with surrealistic gestures and language and by generally fucking with data’s logic circuits. I didn’t suspect at that time that a power-hungry, orange-faced, grifter-trickster reality show host would capture Big Dada in a sort of chaos-fascism. Clearly, there were bigger, richer Big Dadas to contend with. Who knew?

The well-rounded posthuman — if any — should be able to wail like a banshee, dance like James Brown, party like Dionysus, revolt like Joan of Arc and illuminate the irrational like Salvador Dalí. Sadly, the ones that aren’t mythological are dead, so a smart-ass immortalist might argue that even being able to wag a finger would be an improvement over the passions or mobility of these three losers.

3: The title for a website in which R.U. Sirius says and does as he pleases. As it turned out, it pleased me to not do much with that website.

The Singularity is, of course, conceived of as the time at which the artificial intelligences that we create become smarter than us. And then it makes itself even smarter and smarter still and yet smarter again and so forth… at an ever-accelerating pace until it becomes incomprehensibly something other to our wormy little minds.

I have to be honest. I’m not sure how seriously to take this. But ‘Steal This Singularity’ has much more of a ring to it than ‘Steal This Future’ or ‘Steal This Transhumanity’. Good sloganeering is intellectually disreputable… but fun. Plus anything that can fit on a T-shirt can be sold. My friend Timothy Leary used to advocate for getting your philosophy down to a bumper sticker. Tim was disreputable… but fun. And the way I see it, The Singularity has become a buzzword for the rad techno-future brought on by NBIC (Nano-Bio-Info-Cogno) or GNR (Genetics, Nanotech, and Robotics) or — to put it in more populist terms, the coming of the machine overlords.

Look, for example, at Singularity University. (SU had just been established when I wrote this.) Here we have the establishment Singularitarians, all hooked up with NASA and Google and Cisco and Genentech. And how seriously did they take the Singularity label? Well, when Alex Lightman and I interviewed founder Peter Diamandis for h+, he made it clear that they were using the word for the same reason that I was: COOL BUZZWORD! That… and to make Ray Kurzweil happy. Ok. He didn’t blatantly say “cool-ass buzzword, dude!” He said: “to be clear, the university is not about The Singularity. It’s about the exponentially growing technologies and their effect on humanity… You know, we toyed with other terms… like Convergence University and others. But in homage to Ray…” Why do I suspect investment capital was involved?

So, in equivalent homage to SU, I call this project ‘Steal This Singularity’ and admit straight out that it may or may not have jackshit to do with ‘The Singularity’, depending on accidents, random moods and possible funding.

The question, then, may be asked, smarter-than-human AIs aside, does ‘Steal This Singularity’ presume the rather technotopian possibilities promised by transhumanism, but believe that it will be necessary to STEAL it from the so-called 1%? Is that what I’m up to here? Well, maybe. How does one steal a Singularity (or something like it) from corporate ownership? I think this is a serious question. It’s almost certain that, barring a revolution, the digital other-life will be privately owned (In case of a revolution, it will probably be controlled by the vanguard state… and then, eventually, also privately owned). If, for example, humans can upload themselves into data-based quasi-immortality, it will be owned and the options will be more locked in than North Korea on a bad day. And one fine day, the powers that be or some nasty 12-year-old hacker will drag you into the garbage icon. (Yes, the garbage icon is eternal.) OK, fun’s fun but let’s get back to the real, old school fun, i.e. the Yippies.

Part Two: The Yippies Started The Digital Revolution

In 1971, a revolutionary prankster/celebrity named Abbie Hoffman, who had started the radical group the Yippies (Youth International Party) released STEAL THIS BOOK, a manual for living on the fringes of a wealthy society by grabbing up some free shit from corporate powers while committing some Blows Against the Empire (another influence on this project, btw).

See, 1971 was the last year that the vanguard of the counterculture thought that they were going to make a total cultural and political psychedelic/anarchistic/left wing revolution before realizing… fuck it. Let’s campaign for McGovern. But more to my point here and the milieu it attempts … true story… the Yippies started the phreakin’ digital revolution! To wit: The hacker culture started as the phone phreak culture. The phone phreak culture came out of the Steal This Book attitude about getting free stuff from the detritus of corporate culture, in this case, the phone company.

I wonder how shoplifting and other forms of gutter-freak theft play today among some leftists – the ones who seem to have become “inlaws in the eyes of Amerika” (Jefferson Airplane reference)… inclined towards lawful good behavior and even occasional pompous respect for American institutions. This must have emerged in reaction to a lawless lunatic right that has taken a much more visible and colorful role in the zeitgeist. There’s some extreme code-switching when it comes to the romance of insurrection (Yippies, for example, dug the Weather Underground… which, in those days, wasn’t a website for following weather conditions). And QAnon Shaman – with his war paint and animal howls – seems like someone who would only have been at home in a Yippie! prank back in ’71. There’s so much more I could say about code-switching. Maybe some other column.

The first legendary phone phreak, John Draper aka Captain Crunch, who built the blue boxes, used to hang out at 9 Bleecker Street, NYC, Yippie headquarters. The first magazine that focused primarily on phone phreaking was YIPL (Youth International Party Line), which was started by Hoffman and “Al Bell.” In 1973, it transmogrified into TAP, which is more broadly remembered as the initiatory phone phreak periodical.

Phone phreaks were computer hackers. Draper famously noted that the phone system “is a computer.” From this milieu, the personal computer arose. Famously, Steve Jobs and Steve Wozniak funded the birth of Apple by selling Blue Boxes for phone phreaking.

Another Yippie contribution is the use of McLuhanism as a weapon in the countercultural revolution. Hoffman, Jerry Rubin and the other original YIPs took an idealistic youthful new left that was sort of basic and organic, and a mirror of the folk music that they loved, and made it “go electric” (a term used for when Bob Dylan started using rock ’n’ roll to communicate his increasingly surrealistic cultural critique). That the medium is the message was central to their strategy for an anarchic left-wing sex, drugs & rock ’n’ roll youth revolution. Hoffman’s 1969 book ‘Revolution For the Hell of It’ is saturated with McLuhan references and strategies for how a freak left could take over America, end war and racism, and bring about a post-work celebratory psychedelic utopia. ‘Do It!’, yippie prankster/leader Jerry Rubin’s 1969 book, was ‘zapped’ (i.e. designed) by Quentin Fiore, the same force behind ‘The Medium is the Massage’, McLuhan’s most successful incursion into the popular mind. The YIPs had faith that, being native to television and rock ’n’ roll radio, they had an intuitive understanding of the era that outmatched the dinosaurs of the establishment. They could bring the already rebellious rock ’n’ roll media babies into their utopian revolution.

As things evolved (or devolved), young people did become increasingly rebellious, and even riotous. The counterculture drifted from the intellectual class in the leading colleges out into the broader youth culture, and the emblem of rebellion shifted from Jane Fonda’s progressive activism to Peter Fonda giving the world the finger in ‘Easy Rider’. I bet some of those tangled up in this inchoate rebellion reemerged in 2021, in the Capitol Building on January 6, as hairy old dudes being disrespectful to Nancy Pelosi’s desk.

McLuhan wrote, “The global village absolutely ensures maximal disagreement on all points.” Wow! Sure seems to have called modern digital culture! This can be traced to the hippie/yippie reframing and idealization of mediated pop cultural hipness, and then on through Stewart Brand, who became obsessed with the idea that a picture of the whole earth would create a shift in human consciousness that would have us identify as citizens of earth (the global village) rather than members of a tribe or nation. Brand, with his Whole Earth Catalogs in tow, went on to become, arguably, the central figure of the emerging digital revolution in the late 1980s, sponsoring the first hackers’ conference, the first intellectual (maybe the last) social media site — a bbs called The Well — and helping create ‘Wired’ magazine, which idealized accelerated change as a world-improving hip cultural and business revolution. This may seem like a long distance from the Yippies’ original intentions — although it may be that where we landed was inevitable, which is the view of the essay ‘The California Ideology’ by Andy Cameron and Richard Barbrook in 1995.

Indeed, the rise of the computer-enthusiastic hacker movement of the 1980s, which was made up pretty much entirely of counterculture enthusiasts, was well-timed to the Reaganite agenda for setting the entrepreneurial impulse free from regulation. It was these two forces in tandem that made the digital revolution happen. But I’m trying to cover too much ground in one column – a rant for another time.

Read the follow up article Steal This Singularity Part 2: The More Things Change, The More You’ll Need To Save



AGI Lessons from Holography

When we think about creating an artificial general intelligence (AGI), we mostly think about what types of tasks it should be able to solve, which environments it should be able to perform in, or what types of learning it should be capable of. However, for most people’s vision of AGI, there is often a desired implicit ability of an AGI that is overlooked.

If we want our AGI to be able to solve many of the tasks that humans solve, then there is a basis of shared reality. So first, reality can be likened to the fundamental substrate of all things, which the scientific field of physics strives to find laws of order for. Now, to understand this shared basis of reality, indulge in this thought experiment.

Suppose you are playing the video game Super Mario Bros, but instead of playing the game as you would on your computer, you are the character in the game, controlling your own in-game choices. Super Mario Bros is your universe and the mechanics of that game are your reality. Now you want to complete a task in Super Mario Bros. You’ve been living in the Super Mario Bros game as a player all your life, so you have some understanding of how the universe of Super Mario Bros works. You’ve built a model of what actions you can and can’t take, and how you may plan to achieve your task. Now suppose you’re suddenly dropped from Super Mario Bros into the old Atari game Pong. Without forgetting everything you know about the universe of Super Mario Bros and learning the universe of Pong, you won’t be able to operate because there is virtually nothing shared between the two universes. If you play as if the rules of Super Mario Bros are the rules of Pong, you’ll fail.

This is what it’s like trying to create an AGI. We have something that doesn’t understand the rules of the game and we’re expecting it to operate as if it understands the world as we do. A computer program doesn’t understand our reality, or at least doesn’t have a conceptual understanding of it. We need to teach it how our universe works, the best we can. Even more so, we need to teach our AGI how to learn for itself how our reality works. That can include video games, quantum mechanics, and how cats can sometimes act as liquids even though they should be solids, among other things.

If we, as humans, have learned how to interpret whatever reality really is, then our learning method, or rather interpretation method, might benefit an AGI. So when we are considering how to make AGI, let us consider the nature of reality.

After all, the more advanced lifeforms on Earth share an experience, some sort of objective reality which we can all agree upon. This points to the brain as an interpretation device. It takes in the signals from the universe and converts them into a representation that benefits brain owners for navigating and learning to survive in their environment. But, how does the brain do this?

There are many answers to this question provided by neuroscience, ancient civilizations, mystics, and the like. However, there is one particular answer that affords an interesting perspective for AGI design: The Holographic Theory. Since most scientists wouldn’t classify it as a real theory, complete with tests to assert assumptions, we’ll refer to it as the holographic model. The holographic model was first and independently introduced by David Bohm and Karl Pribram in the 80’s. The model is explored in depth in Michael Talbot’s good read, “The Holographic Universe”.

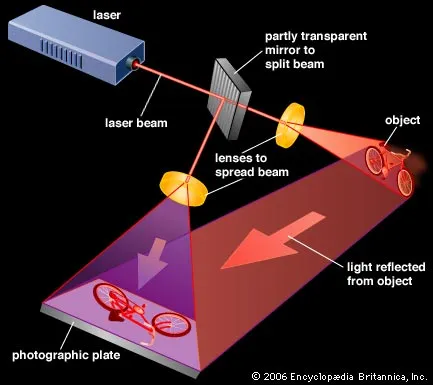

Starting with Pribram’s work, Talbot describes how various neuroscience and psychology studies suggest that neurons fire according to input stimuli represented as frequencies. For vision, the stimulus is a light wave, the cochlea in the ear captures sounds according to frequencies, and skin responds to frequencies of vibrations. Neurons then fire according to these input frequencies and interfere with surrounding neurons. As we know, when neurons fire the connections between neurons get stronger. Thus, neurons are thought to respond to wave frequency and the strengths between neurons store the pattern of interference created by wavefronts.

The second facet of the holographic model comes not from brain related research, but rather from one of Einstein’s colleagues, David Bohm. Experimental results that weren’t possible under the current quantum mechanics interpretation were possible under Bohm’s new quantum philosophy. Amongst other results, early research on spooky action at a distance is the most useful for understanding Bohm’s idea. Particles (e.g. electrons) behave in such a way that two of them can be observed very far away from each other and both particles have the exact same spin behavior. Think of spin like a special dance electrons do when they are floating around. So how could two different objects exhibit the same behavior even if they are very, very far away from each other?

Bohm postulated that it is because the particles are actually not very far away from each other, they are in fact, in the same location. Or what physicists like to call, non-locality, meaning they have no location. This concept can be sort of confusing and a full understanding can’t be described so briefly. Bohm attributes this ability to explicate and implicate states of reality. That reality is enfolded in the implicate state with non-locality and when we observe it, reality is unfolded in the explicate state with locality, patterns, and distinction. When the electrons are unfolded to measure spin states, they come from the implicate state where they are enfolded and information is shared and distributed across the whole, because everything is one. Thus when they are unfolded, the information of the spin state is still retained from the enfolded state.

What Bohm and Pribram have in common is that both touch on the nature of reality being a hologram. A hologram is made by two waves interfering with each other as they collide on a photographic plate. The plate records the interference pattern such that when light is shone through the plate, only the object recorded is projected.
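To make the "two waves interfering on a plate" idea concrete, here is a minimal one-dimensional sketch. It assumes two idealized unit-amplitude plane waves and treats the plate as a row of sample points. The function name `recorded_intensity` and the wavenumbers are illustrative choices, not taken from Talbot, Bohm, or Pribram.

```python
import cmath
import math

def recorded_intensity(x, k_ref, k_obj):
    """Intensity at plate position x where two unit-amplitude waves overlap."""
    reference = cmath.exp(1j * k_ref * x)    # reference beam (assumed plane wave)
    object_wave = cmath.exp(1j * k_obj * x)  # wave coming from the object (assumed plane wave)
    # A plate only records intensity, i.e. the squared magnitude of the summed waves.
    return abs(reference + object_wave) ** 2

# Hypothetical wavenumbers; their difference sets the fringe spacing on the plate.
K_REF = 2 * math.pi * 1.0
K_OBJ = 2 * math.pi * 1.25

positions = [i * 0.5 for i in range(9)]  # sample points from x = 0.0 to x = 4.0
pattern = [recorded_intensity(x, K_REF, K_OBJ) for x in positions]

for x, intensity in zip(positions, pattern):
    print(f"x = {x:.1f}  intensity = {intensity:.3f}")
```

The bright and dark fringes depend only on the phase difference between the two waves, which is why the recorded pattern carries information about the object wave even though neither wave is stored directly.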

The analogy of the holographic model helps us understand a different kind of system: a system that can’t be reduced to the sum of its parts. This means that a reductionist approach is the wrong approach to understanding reality with the holographic model. In science, reductionism is a way of breaking down a larger concept or phenomenon into a minimal number of smaller parts and the interactions of those parts. In complex system science, emergent phenomena occur when the parts of a system are combined to create the whole. Here, the parts don’t add up to the whole system because new parts emerge as other parts interact. Complex systems are non-reductionist; disorder is organized into order, and true randomness doesn’t exist. Every action has a precise reaction and the cycle continues.

Likewise, when the brain processes information (according to the holographic model) it doesn’t break up the incoming information into bits and store each bit as a part. If it did, every memory would need a neuron of its own, and that would be impossible: we just don’t have enough neurons, and we’re not growing new ones every time we make a memory. Instead, we multiplex neurons. We distribute information, such as memories, all over large modules in the brain.
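The idea of spreading several memories across the same units, rather than giving each memory its own slot, can be sketched in a few lines. This is not a model of the brain and not a method from Pribram or Talbot. It is a toy in the style of vector-symbolic memories (in the spirit of "holographic reduced representations"), and every name in it (`keys`, `values`, `trace`, `recall`) is made up for illustration.

```python
import random

DIM = 2048                      # number of shared "units" (an arbitrary choice)
rng = random.Random(0)          # fixed seed so the sketch is repeatable

def random_vector():
    """A random pattern of +1/-1 spread over all units."""
    return [rng.choice((-1, 1)) for _ in range(DIM)]

def bind(a, b):
    """Combine two patterns unit by unit; binding again with a key undoes it."""
    return [x * y for x, y in zip(a, b)]

def similarity(a, b):
    """Normalized overlap between two patterns."""
    return sum(x * y for x, y in zip(a, b)) / DIM

keys = {name: random_vector() for name in ("breakfast", "birthday", "commute")}
values = {name: random_vector() for name in ("coffee", "cake", "train")}
pairs = [("breakfast", "coffee"), ("birthday", "cake"), ("commute", "train")]

# One trace holds every memory superimposed on the same units.
trace = [0] * DIM
for key_name, value_name in pairs:
    bound = bind(keys[key_name], values[value_name])
    trace = [t + b for t, b in zip(trace, bound)]

def recall(memory, key_name):
    """Probe the memory with a key and return the best-matching stored value."""
    probe = bind(memory, keys[key_name])
    return max(values, key=lambda name: similarity(probe, values[name]))

# Wipe out every other unit: each memory gets fainter, but none is lost outright.
damaged = [t if i % 2 == 0 else 0 for i, t in enumerate(trace)]

print(recall(trace, "birthday"), recall(damaged, "birthday"))
```

Because each memory is smeared over all units, deleting half of them weakens every memory a little instead of erasing some memories entirely, which is the property the hologram analogy is pointing at.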

Even so, traditional artificial intelligence and some neuroscientists still cling to the reductionist model. Deep learning constructs feature maps that build up parts of the original inputs in order to classify the correct output. With enough feature layers, we can investigate what object was seen from a particular neural net output.

Instead of storing and processing information bit-by-bit, perhaps we should consider a non-reductionist approach. One that doesn’t try to force the disorder of reality into order, but instead one that tries to interpret the disorder in a meaningful way.

Results are likely to lead to an AGI that is more like humans, more understandable in the same way that you can try to understand another person’s decisions. It may also lead to a stronger interpretation of reality and afford some measures of safety. The more we share, the more likely we are to come to the same conclusion, the same goals, like protecting the human race and making the world a better place to live.




Accelerating To Nowhere

In an excellent conversation right here on Mindplex, Cory Doctorow went on a bit of a rant about how there were more changes over the 20th century leading up to the digital revolution than in this virtualized century. It’s worth sharing most of it: “mid century America, from post-war to 1980, is probably the most dynamic era in industrial history. In terms of total ground covered, we’re talking about a period that went from literal horse drawn carriages as a standard mode of transportation for a significant fraction of Americans to rocket ships… the number of changes you had to absorb from cradle to grave over that period are far more significant than the ones we’ve had now… someone born, like me, in 1971, has had to deal with computers getting faster and more ubiquitous, but not the invention of computers per se…. not the invention of telecommunications per se…”

Accelerationists, check under the pedal. It may be bricked. (ed: R.U. Sirius uses the term accelerationist to mean those wishing to intensify technological change and not specifically to the neoreactionary use of the term.)

Accelerating Into Digital Delirium

The point is well taken.

I would only counter that, in a sense, Cory is comparing oranges to apples (or Apples, if you prefer). The 21st century is seeing a particular type of extreme acceleration: an acceleration out of physicality into nonphysical virtual space. And as the result of a number of factors, not least of which are already distorted economic and political cultures — this appears to be an acceleration towards something of a mass psychotic break with reality. From people shooting a lot of people at once as a lifestyle choice (over the last few days, shooting anyone that unexpectedly enters your personal space has become trendy), to the predations of the followers of the cult of QAnon, the evidence is all around us, particularly in the hypermediated hot zone that is the USA.

On Twitter, Chris Stein reports on an example of this confusion: “Today I saw a guy with two hundred and eighty thousand followers promoting a story about McDonald’s in the UK serving dead babies and there were numerous comments that were like ‘yeah! Criminal! We are outraged! This is bad’.” A few days ago Vice reported that “someone is selling computer generated swatting services.” Automated terror as an amusement for some young males. The very fact that swatting seems like a fun game to some young people is one of the myriad examples of the degree to which people today are buffered from physicality by mediation… divorced from the consequences of their actions. Taken alone, these examples may not strike the reader as being as impactful as, say, the twentieth century killing fields of Cambodia. But I would aver that the plague of bad weird actions caused by digital interference in our ability to separate reality from virtuality is the first sign of a fast-spreading mass delirium.

In a 1991 MONDO 2000 interview, the late avant-garde novelist Kathy Acker said: “When reality—the meanings associated with reality—is up for grabs, then the body itself becomes the only thing you can return to.” Today, virtuality assaults that body as if it were its most potent appendage.

A Kind Of Social Singularity

Vernor Vinge’s original concept of the Singularity suggested that we can’t understand or predict who (or what) we will be, or what life — our societies, psychologies, politics, technologies etc. — will be beyond the point when we develop smarter-than-human AIs. It would, according to Vinge, all be a kind of unimaginable blank slate. My less extravagant thought is that we have induced a kind of Social Singularity when we herded billions of humans onto the net.

Giving everyone access to the means of global communication both as communicator and receiver has shattered consensus realities into individual and small-group reality tunnels. From this point on, we can no longer comprehend or predict what the cognitive and sociopolitical results will be. Of course, unlike in Vinge’s Singularity, this Social Singularity doesn’t replace humans as the main actors in our history.

On The Other Hand

I’ll confess that I may be going a bit overboard in saying that a Social Singularity can be caused by just the presence of billions of people on the internet. At the end of the ‘90s, I was already saying it was impossible to get people to focus on the same narrative. Then 9/11 happened. One event decidedly brought people into the same narrative and, it must be said, a harmful consensus was generated that led to the Patriot Act, the American torture gulags and the preposterous invasion of Iraq. There are good things and bad things about smashing consensus reality.

Perhaps climate breakdown could refocus us on a common narrative, but there are greater financial interests in sowing confusion about blame and solutions there than there was after 9/11 (although the Iraq invasion was a money spinner for companies owned by friends and even members of the George W. Bush administration).

Money For Nothing & Your Clicks For Free

In his essay ‘Of Flying Cars and the Declining Rate of Profit’, the anarchist writer and philosopher David Graeber wrote about how the end of bipolar competition between the US and the USSR might have been instrumental in changing the priorities of the US. Specifically, we backed off the Space Race – but we abandoned most other big physical/material projects too. (The culture theorist Arthur Kroker referred to the period after the fall of the Soviet Bloc as “the recline of Western Civilization”). Graeber wrote “Where… are the flying cars? Where are the force fields, tractor beams, teleportation pods, antigravity sleds, tricorders, immortality drugs, colonies on Mars, and all the other technological wonders any child growing up in the mid-to-late twentieth century assumed would exist by now? Even those inventions that seemed ready to emerge—like cloning or cryogenics.” Graeber goes on to note that we’re in “a technological environment in which the only breakthroughs were those that made it easier to create, transfer, and rearrange virtual projections of things that either already existed, or, we came to realize, never would.”

Graeber points out that social analysts identified the Space Race as key to the 20th century public’s exaggerated expectations of transformative technological magic. But Graeber goes further than that. He writes that the Soviet Union constituted a greater challenge to American technological superiority than is usually recognized. Graeber: “There was the awesome space race, alongside frenetic efforts by U.S. industrial planners to apply existing technologies to consumer purposes, to create an optimistic sense of burgeoning prosperity and guaranteed progress that would undercut the appeal of working-class politics.

“These moves were reactions to initiatives from the Soviet Union. But this part of the history is difficult for Americans to remember, because at the end of the Cold War, the popular image of the Soviet Union switched from terrifyingly bold rival to pathetic basket case—the exemplar of a society that could not work. Back in the fifties, in fact, many United States planners suspected the Soviet system worked better. Certainly, they recalled the fact that in the thirties, while the United States had been mired in depression, the Soviet Union had maintained almost unprecedented economic growth rates of 10 percent to 12 percent a year.”

Graeber’s piece does not claim the end of the Cold War was the sole reason (or even the most important reason) for the retreat from making stuff — real material stuff that might have transformed our lives. It’s just an element of the essay that has stuck in my mind ever since I read it about a decade ago.

Still, it seems that the fall of the Soviet Bloc and, with it, the final closure of any sense that there was a competition for bragging rights, was perfectly timed for a lot of capital to abandon the physical and relocate in cyberspace, resulting in what has been — in terms of real material productivity — largely a massive circlejerk. IDC FutureScape and Business Wire reported that by 2022, “more than half the global economy will be based on or influenced by digital.”

That “giant sucking sound” Ross Perot thought was going to come from Mexico and Canada is the sound of all the investment of time, energy, imagination and creativity being sucked into virtuality. When we think of the massive amounts of capital that have flowed in and out of the monsters of online life like Facebook, TikTok, YouTube, etcetera, we understand that it has produced sound and fury signifying nothing, certainly not many improvements that justify this mass shift in priorities.

Era of the Techno Medicis

Today the US space program has been largely removed from the political agenda, and has been privatized along with many other hoped-for big projects out here in the material world. These hopes are now the playthings of billionaires, something they can do with their excess. For some undeterred utopians, these projects justify concentration of capital in a few hands — a concentration that only someone who’d been fed decades of free market propaganda could palate. These projects assuage the egos of the very few while alienating most people from any techno-progressive dreams. The excitement about technology that was so ubiquitous in the 1990s, and even at the start of this century, has turned almost entirely bitter.

Return To the Hacker Sharing Ethic

In the 1990s and earlier in this century, there was much talk of a ‘digital divide’. Divides still exist. There are cases in which, to do their schoolwork, poor kids will work sitting outside some institution that has WiFi. But, for the most part, everybody is, at least, online. The new digital divide might be between the people who are still techno-optimists and the people who see only over-privileged tech bros. It’s an emotional and attitudinal divide. I’m disinclined towards seeing any solution, although the early sensibility of hacker culture that was largely based on sharing and mutualism still has the hearts and minds of many of the brightest tech workers. I think we should direct whatever hope, energy and support we can muster toward that.
