Scientists have identified 14 “evolutionary traps” for human societies at large, ranging from global climate tipping points to misaligned artificial intelligence, chemical pollution, and accelerating infectious diseases.

The Anthropocene, the geological epoch shaped by us humans, is showing more and more cracks, says Peter Søgaard Jørgensen, a researcher at the Stockholm Resilience Centre at Stockholm University and the Royal Swedish Academy of Sciences.

Multiple global crises, such as the Covid-19 pandemic, climate change, food insecurity, financial crises, and conflicts, have started to occur simultaneously in a “polycrisis.”

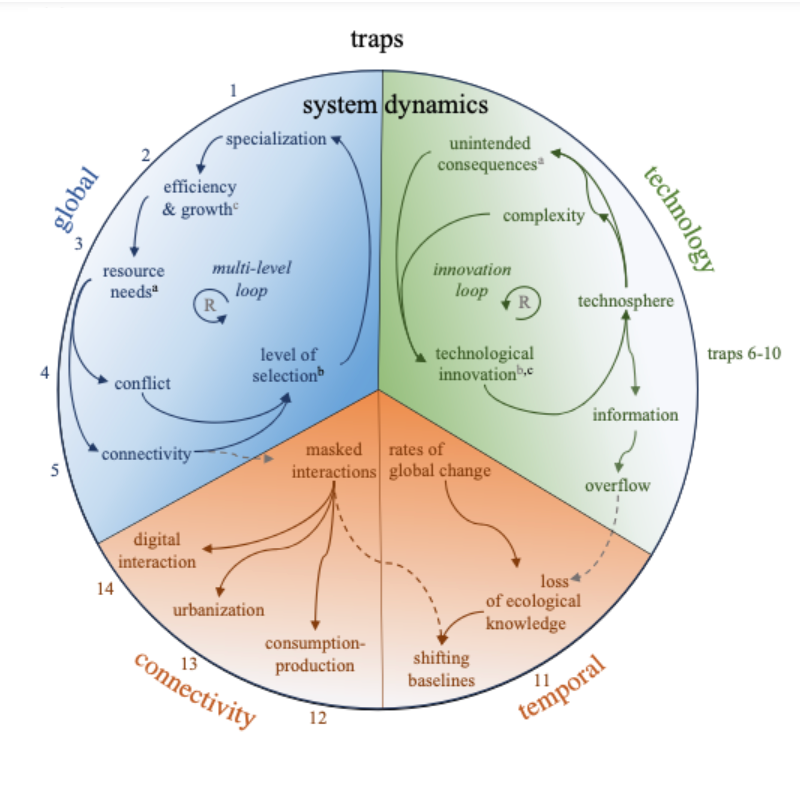

Evolutionary traps

“The human species has been too successful and, in some ways, too smart for its own future good,” says Jørgensen, lead author of a new landmark study published today in the open-access journal Philosophical Transactions of the Royal Society B. The study draws on insights from a wide range of disciplines across the natural sciences, social sciences, and humanities.

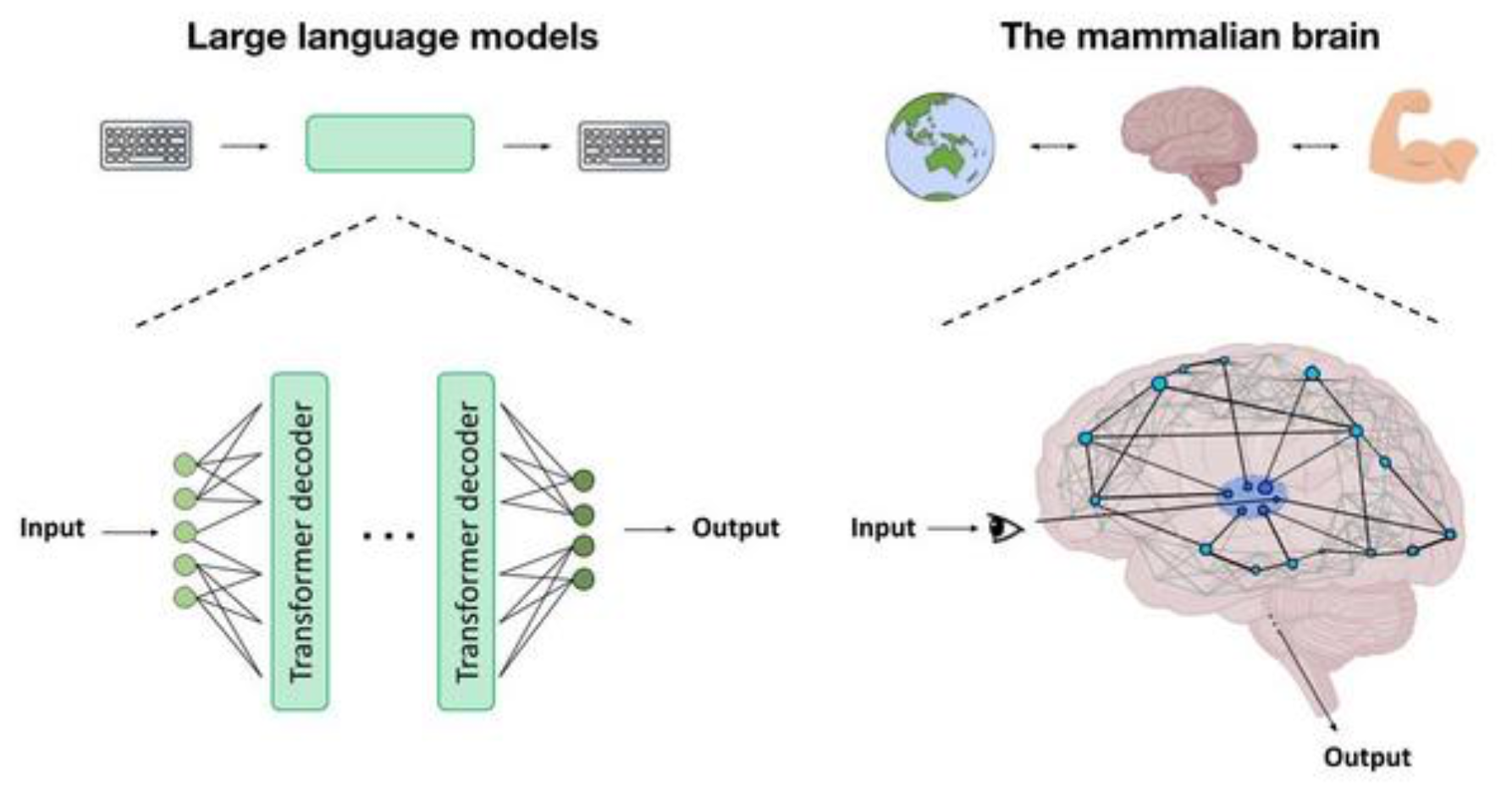

The new study shows how humanity could get stuck in “evolutionary traps”: dead ends that arise from initially successful innovations. The study identifies 14 of these, including the simplification of agriculture, economic growth that does not deliver benefits for humans or the environment, the instability of global cooperation, climate tipping points, and artificial intelligence.

The simplification of agricultural systems is one example of such a trap. Relying on a few highly productive crops, such as wheat, rice, maize, and soya, has made the number of calories produced skyrocket over the past century. But it has also left the food system very vulnerable to environmental change, such as weather extremes or new diseases.

On the verge of getting stuck

Of the 14 evolutionary traps, 12 are in an advanced state, meaning that humankind is on the verge of getting stuck to a degree where it becomes very difficult to get out. These evolutionary traps also tend to reinforce one another.

The researchers suggest that the two dead ends that are currently less advanced are the autonomy of technology (AI/robotics) and the loss of social capital through digitalization.

Evading the traps

The new assessment also looks into why societies struggle so hard to move out of these traps. “The evolutionary forces that created the Anthropocene do not work well in today’s global systems, where social and environmental problems grow in places that seem distant to the societies that could prevent them. Also, addressing them often requires global collaboration on a scale that many evolutionary forces often do not align well with,” says co-author Lan Wang-Erlandsson, researcher at the Stockholm Resilience Centre at Stockholm University and the Royal Swedish Academy of Sciences’ Anthropocene laboratory.

Transforming societies

This doesn’t mean that humanity is doomed to fail, the researchers argue. But, they say, we must start actively transforming our societies.

“It’s time for humans to become aware of the new reality and to collectively move where we want to as a species,” explains Jørgensen. “Our creativity, and our power to innovate and collaborate equip us with the perfect tools to actively design our future. We can break out of dead ends and business-as-usual, but for that, we must nurture the capacity for collective human agency and design settings where it can flourish.”

Citation: Peter Søgaard Jørgensen et al. Evolution of the polycrisis: Anthropocene traps that challenge global sustainability. Philosophical Transactions of the Royal Society B: Biological Sciences. Vol. 379, Issue 1893. https://doi.org/10.1098/rstb.2022.0261 (open-access)

