Introduction

While the traditional crypto wisdom is to sell in May and go away, Ethereum had other plans. On 23 May 2024, the U.S. Securities and Exchange Commission (SEC) unexpectedly greenlit spot Ethereum (ETH) exchange-traded funds (ETFs) in principle. The prospect of approval sent ETH’s price sharply higher and brightened its outlook. Most crypto investors thought this approval would either never come, given the United States’ regulatory onslaught on cryptocurrency, or would require a multi-year political struggle.

It marked a pivotal and triumphant moment for the cryptocurrency market, one that should boost its development and bolster a beleaguered US crypto sector dragged down by heavy-handed regulation over the last few years. It also showed once again that politics and finance are always intertwined: the decision came soon after Donald Trump’s embrace of crypto essentially forced the Democratic Party into a dramatic U-turn on the sector, in what will be a tough election year for keeping Joe Biden in power.

In this article, we’ll explore the implications of this approval, the impact it has had on Ethereum and the broader crypto market, and the potential benefits for retail investors.

What is an Ethereum ETF?

An Ethereum ETF is a financial product that allows investors to buy shares representing a stake in Ethereum, without needing to directly purchase or manage the cryptocurrency itself. ETFs are traded on traditional stock exchanges, making them accessible to a wider range of investors. The approval of these ETFs means institutional and retail investors can now gain exposure to Ethereum through regulated financial products.

The Road to Approval

The journey to the approval of Ethereum ETFs has been long and fraught with regulatory hurdles ever since Vitalik Buterin – with co-founders including Cardano’s Charles Hoskinson and Polkadot’s Gavin Wood – launched Ethereum in 2015 as a novel application of blockchain technology.

The SEC’s approval of spot Bitcoin ETFs earlier in the year laid a path for Ethereum ETFs to follow. That path involved rigorous analysis and a public comment period, during which stakeholders provided feedback on various aspects of the proposed ETFs, including custodianship, sponsor fees, and creation and redemption models.

Market Reactions and Expectations

Impact on Ethereum’s Price

A tweet from influential analysts Eric Balchunas and James Seyffart raised the estimated odds of an Ethereum ETF approval to 75%. The result: the price of ETH spiked within hours to nearly $4,000, before retracing.

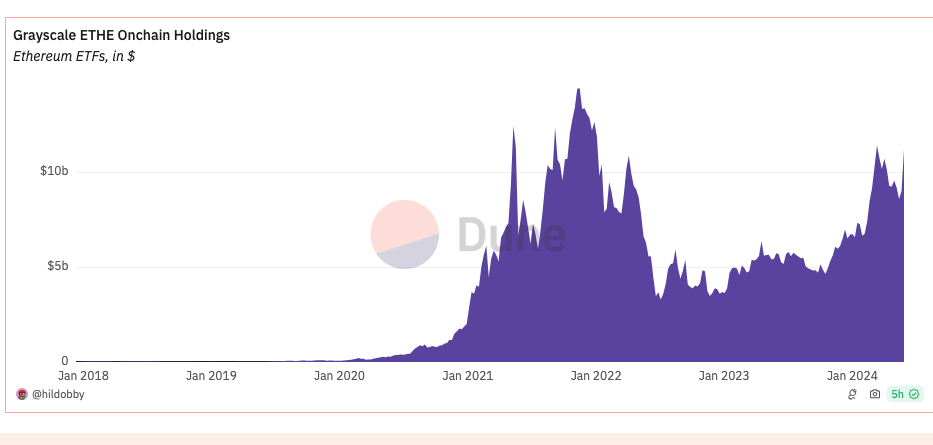

Following the announcement itself, the price of Ethereum barely moved at first, then dropped to as low as $3,500 in typical “sell the news” behavior. A number of other factors also weighed on the ETH price. Notably, additional hurdles must be cleared before an Ethereum ETF can actually launch, such as the final filing of amended S-1 registration statements by each issuer (BlackRock is pushing for a July 4th launch). Another round of Mt. Gox FUD also hit the market. And of course, there’s the question of whether we’ll see another huge sell-off from Grayscale holders.

This price movement is reminiscent of the initial reactions seen with Bitcoin ETFs, suggesting a pattern of bullish sentiment following regulatory endorsements. However, Bitcoin investors had more time to ladder into their BTC buys, knowing that approval was almost certainly coming, whereas Ethereum went into the ETF battle as an underdog.

What does an Ethereum ETF mean long term for crypto?

Regulatory Greenlight

The SEC’s decision brings Ethereum closer to being classified as a commodity, like Bitcoin. The threat of being labeled a security has hung over it like a sword for years, despite earlier statements by both the SEC’s William Hinman in 2018 and the CFTC in 2022 that it was not a security. Classification as a commodity would simplify regulation for Ethereum, providing clearer guidelines for future developments and investments in the ecosystem. Additionally, the recent crypto-friendly legislative movements in Congress have created a more supportive environment for digital assets.

This regulatory clarity helps to clear the way for the network to scale and become the world’s computer. Ethereum’s ongoing technological upgrades, such as the implementation of zero-knowledge rollup technology and sharding, are poised to increase the network’s transaction capacity and efficiency. That will help Ethereum stay competitive against rivals such as Solana, Sui and Cardano, and support its growing ecosystem of layer-2 networks such as Arbitrum, Optimism, Base, Linea, Starknet and zkSync. These networks in turn host hundreds of thousands of decentralized applications (dApps).

Web3 Stays Free

Classifying Ethereum as a security would significantly impact the Web3 industry, affecting the dApps, DeFi protocols, and NFTs that rely on Ethereum and its layer-2 solutions. Ethereum leads the DeFi space, holding over 60% market share and $100 billion in total value locked. If it were treated as a security, the resulting regulatory challenges could also kill other crypto layer-1 and layer-2 networks, especially proof-of-stake ones.

Benefits for Retail Investors

One of the primary benefits of Ethereum ETFs for retail investors is the ease of access. Investing in ETFs does not require the digital know-how or cybersecurity measures needed for direct cryptocurrency investments. There’s no private key management, and far less exposure to the hacks and scams that cost crypto investors billions each year. This accessibility can encourage more widespread participation in the crypto market.

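ETFs in general can bundle a range of assets within a single product, although a spot Ethereum ETF holds only ETH. For retail investors, the benefit is that ETH exposure can sit alongside other assets in a regular brokerage account, which makes it easier to build a balanced portfolio and potentially reduces the volatility risk of holding individual cryptocurrencies directly. It also means the underlying ETH is held by a regulated custodian rather than by the investor.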

On the downside, ETF investors will not earn any ETH staking rewards (at least not initially). This yield is around 5%. Ethereum’s Shanghai upgrade last year kicked off a liquid staking token (LST) and EigenLayer restaking frenzy that has rejuvenated its struggling DeFi sector.
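To put the forgone staking yield in perspective, here is a minimal Python sketch. It assumes the roughly 5% figure quoted above stays constant and compounds once a year, and it ignores ETF sponsor fees, validator costs, and price changes. The function name and the 10 ETH position are hypothetical, chosen only for illustration.

```python
def forgone_staking_yield(eth_held: float, annual_rate: float = 0.05, years: int = 1) -> float:
    """Return the ETH a staker would have accrued but an ETF holder misses.

    Assumes a constant annual_rate compounded once per year (a simplification;
    real staking yields vary over time).
    """
    return eth_held * ((1 + annual_rate) ** years - 1)


if __name__ == "__main__":
    position = 10.0  # hypothetical position size in ETH
    for years in (1, 3, 5):
        missed = forgone_staking_yield(position, years=years)
        print(f"{years} year(s): about {missed:.3f} ETH in staking rewards not earned")
```

Under these assumptions the gap grows every year the position is held, which is why the lack of staking matters more to long-term holders than to short-term traders.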

Trading Strategies Around Ethereum ETFs

With the launch of several Ethereum ETFs now just over the horizon, traders can adopt various strategies to navigate the evolving market landscape. We won’t speculate on the future price of Ethereum, as there are too many variables at play, both on an industry and a macroeconomic level. However, market sentiment is quite bullish for the next year, especially if the bull market resumes and ETH can turn deflationary again thanks to its EIP-1559 upgrade, which burns a portion of transaction fees.
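Whether ETH is deflationary comes down to simple arithmetic: the supply shrinks when the base fees burned under EIP-1559 exceed the new ETH issued to validators over the same period. The sketch below illustrates that comparison. The daily issuance and gas usage figures are hypothetical round numbers, not network data, and the function names are made up for this example.

```python
GWEI_PER_ETH = 1_000_000_000


def burned_eth(base_fee_gwei: float, gas_used: int) -> float:
    """ETH burned when gas_used units of gas pay base_fee_gwei each."""
    return base_fee_gwei * gas_used / GWEI_PER_ETH


def net_supply_change(issued_eth: float, base_fee_gwei: float, gas_used: int) -> float:
    """Issuance minus burn; a negative result means the supply shrank."""
    return issued_eth - burned_eth(base_fee_gwei, gas_used)


def is_deflationary(issued_eth: float, base_fee_gwei: float, gas_used: int) -> bool:
    return net_supply_change(issued_eth, base_fee_gwei, gas_used) < 0


if __name__ == "__main__":
    daily_issuance = 2_500.0        # hypothetical ETH issued per day
    daily_gas = 108_000_000_000     # hypothetical gas consumed per day
    for base_fee in (10, 30):       # hypothetical average base fees in gwei
        change = net_supply_change(daily_issuance, base_fee, daily_gas)
        label = "deflationary" if change < 0 else "inflationary"
        print(f"base fee {base_fee} gwei: net change {change:+.0f} ETH/day ({label})")
```

With these made-up inputs, a quiet network at 10 gwei adds to the supply, while a busy one at 30 gwei burns more than it issues. That is why a resumed bull market, with higher on-chain activity, is tied to ETH turning deflationary.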

Conclusion

The approval of Ethereum ETFs is a transformative event for the cryptocurrency market that could unlock a new era of mainstream crypto adoption. This regulatory milestone validates Ethereum’s role in the financial ecosystem, and opens the door for increased institutional investment and greater market liquidity. It also boosts the development of Web3 technology and infrastructure, particularly in the USA.

For retail investors, Ethereum ETFs offer a convenient and regulated way to participate in the growth of the crypto market, providing opportunities for diversification and risk management. As Ethereum continues to innovate and evolve, ETFs will shape its future trajectory and solidify its position as a leading digital asset.

Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.
