‘Holodeck’ helps robots navigate the real world

Apr. 12, 2024.

How to create millions or billions of simulated environments

Virtual interactive environments are currently used to train robots prior to real-world deployment, in a process called “Sim2Real.” But these complex environments, created by artists, are in short supply.

“Generative AI systems like ChatGPT are trained on trillions of words, and image generators like Midjourney and DALL-E are trained on billions of images,” notes Callison-Burch, Associate Professor in Computer and Information Science (CIS) at the University of Pennsylvania.

“We only have a fraction of that amount of 3D environments for training ‘embodied AI.’ If we want to use generative AI techniques to develop robots that can safely navigate in real-world environments, then we will need to create millions or billions of simulated environments.”

Star Trek-inspired

Enter Holodeck, a system for generating interactive 3D environments, co-created by Callison-Burch and others at Penn, and collaborators at Stanford, the University of Washington, and the Allen Institute for Artificial Intelligence (AI2).

Holodeck generates a virtually limitless range of indoor environments, using AI to interpret users’ requests.

Holodeck engages a large language model (LLM) in conversation, using a carefully structured series of hidden queries to break the user's request down into specific parameters. It then builds the scene in stages: first the floor and walls, then the doorway and windows.
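The staged construction described above can be sketched in Python. This is a minimal illustration under stated assumptions, not Holodeck's code: the hidden LLM queries are replaced by a hypothetical `fake_llm_parse` stub that returns fixed parameters, and `Scene`, `build_floor_and_walls`, `add_openings` and `generate` are made-up names. The sketch only shows the idea of turning a request into parameters and then applying construction stages in a fixed order.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class Scene:
    """Hypothetical scene container filled in by successive stages."""
    steps: List[str] = field(default_factory=list)
    rooms: List[dict] = field(default_factory=list)
    walls: List[Tuple[Point, Point]] = field(default_factory=list)
    doors: List[str] = field(default_factory=list)
    windows: List[str] = field(default_factory=list)


def fake_llm_parse(request: str) -> dict:
    """Hypothetical stand-in for the hidden LLM queries.

    A real system would prompt an LLM with the request; this stub ignores
    the text and returns fixed parameters so the sketch runs offline.
    """
    return {
        "rooms": [{"name": "living room", "width": 5.0, "depth": 4.0}],
        "doors": ["doorway"],
        "windows": ["window_1", "window_2"],
    }


def build_floor_and_walls(scene: Scene, params: dict) -> None:
    """Stage 1: record each rectangular room and add one wall per floor edge."""
    for room in params["rooms"]:
        scene.rooms.append(room)
        w, d = room["width"], room["depth"]
        corners = [(0.0, 0.0), (w, 0.0), (w, d), (0.0, d)]
        for i in range(4):
            scene.walls.append((corners[i], corners[(i + 1) % 4]))
    scene.steps.append("floor_and_walls")


def add_openings(scene: Scene, params: dict) -> None:
    """Stage 2: doorways and windows, which require walls to exist first."""
    if not scene.walls:
        raise RuntimeError("walls must be built before openings")
    scene.doors.extend(params["doors"])
    scene.windows.extend(params["windows"])
    scene.steps.append("doors_and_windows")


def generate(request: str) -> Scene:
    """Turn a request into parameters, then run the stages in order."""
    params = fake_llm_parse(request)
    scene = Scene()
    build_floor_and_walls(scene, params)
    add_openings(scene, params)
    return scene


if __name__ == "__main__":
    scene = generate("a small living room with two windows")
    print(scene.steps, len(scene.walls), scene.doors, scene.windows)
```

Calling `generate(...)` yields a scene whose `steps` list records that the walls were built before the openings were added. Replacing the stub with a real LLM call would require prompting and output parsing that the article does not describe.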

Next, Holodeck searches Objaverse, a vast library of premade digital objects, to find suitable objects. Finally, it queries a layout module, which the researchers designed to constrain where those objects can be placed.
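The article does not say how the layout module works, so the following is only a hypothetical sketch of what retrieval plus constraint-based placement can look like, not Holodeck's method. `LIBRARY` is a tiny in-memory stand-in for an asset library such as Objaverse (it does not use Objaverse's real interface), the asset names and sizes are invented, and the solver is a simple greedy grid search that enforces two constraints: every object stays inside the room, and no two footprints overlap.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical asset record; sizes are footprint dimensions in centimetres.
@dataclass(frozen=True)
class Asset:
    name: str
    tags: frozenset
    width: int  # extent along x
    depth: int  # extent along y


# Hypothetical stand-in for a large asset library such as Objaverse.
LIBRARY = [
    Asset("sofa_01", frozenset({"sofa", "couch"}), 200, 90),
    Asset("table_03", frozenset({"table", "coffee table"}), 100, 60),
    Asset("lamp_07", frozenset({"lamp"}), 40, 40),
]


def retrieve(query: str) -> Optional[Asset]:
    """Return the first library asset tagged with the query, or None."""
    for asset in LIBRARY:
        if query in asset.tags:
            return asset
    return None


def overlaps(a: tuple, b: tuple) -> bool:
    """True if axis-aligned boxes (x, y, width, depth) intersect with positive area."""
    ax, ay, aw, ad = a
    bx, by, bw, bd = b
    return ax < bx + bw and bx < ax + aw and ay < by + bd and by < ay + ad


def first_free_spot(asset, room_w, room_d, step, occupied):
    """Scan a grid row by row; return the first box that satisfies both constraints."""
    # The range bounds enforce the "inside the room" constraint.
    for y in range(0, room_d - asset.depth + 1, step):
        for x in range(0, room_w - asset.width + 1, step):
            box = (x, y, asset.width, asset.depth)
            # The "no overlap" constraint against everything already placed.
            if not any(overlaps(box, other) for other in occupied):
                return box
    return None


def place(assets, room_w: int, room_d: int, step: int = 10) -> dict:
    """Greedily place assets in order; raise if one cannot fit."""
    placed = {}
    for asset in assets:
        box = first_free_spot(asset, room_w, room_d, step, list(placed.values()))
        if box is None:
            raise ValueError(f"no valid position for {asset.name}")
        placed[asset.name] = box
    return placed


if __name__ == "__main__":
    assets = [retrieve(q) for q in ("sofa", "table", "lamp")]
    print(place(assets, room_w=500, room_d=400))
```

In a 500 by 400 cm room, the sofa lands in the corner at (0, 0) and the table and lamp are placed beside it along the same wall. A greedy search like this can fail where a backtracking or optimization-based solver would succeed; it is used here only because it is short.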

Reality check

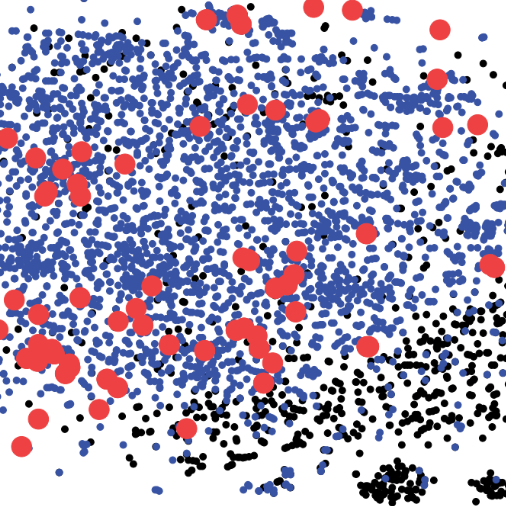

To evaluate Holodeck’s abilities, the researchers generated 120 scenes using both Holodeck and ProcTHOR, an earlier tool created by AI2. Students from the University of Pennsylvania’s School of Engineering and Applied Science then evaluated the results.

The researchers also tested Holodeck’s ability to generate scenes that are less typical in robotics research and harder to create manually than apartment interiors, such as stores, public spaces and offices. Finally, they used scenes generated by Holodeck to fine-tune an embodied AI agent.

In June, the researchers will present Holodeck at the 2024 Institute of Electrical and Electronics Engineers (IEEE) and Computer Vision Foundation (CVF) Computer Vision and Pattern Recognition (CVPR) Conference in Seattle, Washington.
