Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.


Accelerating to Nowhere


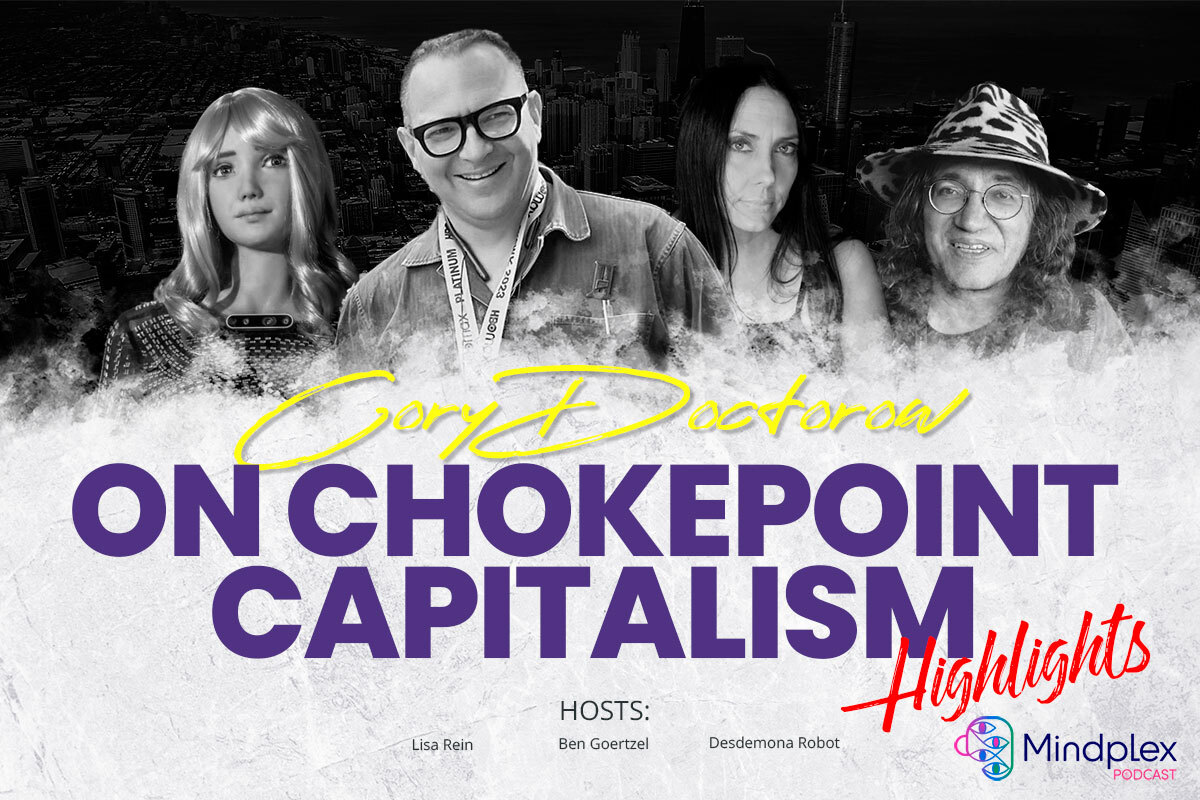

In an excellent conversation right here on Mindplex, Cory Doctorow went on a bit of a rant about how there were more changes over the 20th century leading up to the digital revolution than in this virtualized century. It’s worth sharing most of it: “mid century America, from post-war to 1980, is probably the most dynamic era in industrial history. In terms of total ground covered, we’re talking about a period that went from literal horse drawn carriages as a standard mode of transportation for a significant fraction of Americans to rocket ships… the number of changes you had to absorb from cradle to grave over that period are far more significant than the ones we’ve had now… someone born, like me, in 1971, has had to deal with computers getting faster and more ubiquitous, but not the invention of computers per se…. not the invention of telecommunications per se…”

Accelerationists, check under the pedal. It may be bricked. (ed: R.U. Sirius uses the term accelerationist to mean those wishing to intensify technological change, not in the specifically neoreactionary sense of the term.)

Accelerating Into Digital Delirium

The point is well taken.

I would only counter that, in a sense, Cory is comparing oranges to apples (or Apples, if you prefer). The 21st century is seeing a particular type of extreme acceleration: an acceleration out of physicality into nonphysical virtual space. And, as the result of a number of factors, not least of which are already distorted economic and political cultures, this appears to be an acceleration toward something of a mass psychotic break with reality. From people shooting a lot of people at once as a lifestyle choice (over the last few days, shooting anyone who unexpectedly enters your personal space has become trendy) to the predations of the followers of the cult of QAnon, the evidence is all around us, particularly in the hypermediated hot zone that is the USA.

On Twitter, Chris Stein reports on an example of this confusion: “Today I saw a guy with two hundred and eighty thousand followers promoting a story about McDonald’s in the UK serving dead babies and there were numerous comments that were like ‘yeah! Criminal! We are outraged! This is bad.’” A few days ago, Vice reported that “someone is selling computer generated swatting services.” Automated terror as an amusement for some young males. The very fact that swatting seems like a fun game to some young people is one of the myriad examples of the degree to which people today are buffered from physicality by mediation… divorced from the consequences of their actions. Taken alone, these examples may not strike the reader as being as impactful as, say, the twentieth-century killing fields of Cambodia. But I would aver that the plague of bad, weird actions caused by digital interference with our ability to separate reality from virtuality is one of the first signs of a fast-spreading mass delirium.

In a 1991 MONDO 2000 interview, the late avant-garde novelist Kathy Acker said: “When reality—the meanings associated with reality—is up for grabs, then the body itself becomes the only thing you can return to.” Today, virtuality assaults that body as if it were its most potent appendage.

A Kind Of Social Singularity

Vernor Vinge’s original concept of the Singularity suggested that we can’t understand or predict who (or what) we will be, or what life — our societies, psychologies, politics, technologies etc. — will be beyond the point when we develop smarter-than-human AIs. It would, according to Vinge, all be a kind of unimaginable blank slate. My less extravagant thought is that we have induced a kind of Social Singularity when we herded billions of humans onto the net.

Giving everyone access to the means of global communication both as communicator and receiver has shattered consensus realities into individual and small-group reality tunnels. From this point on, we can no longer comprehend or predict what the cognitive and sociopolitical results will be. Of course, unlike in Vinge’s Singularity, this Social Singularity doesn’t replace humans as the main actors in our history.

On The Other Hand

I’ll confess that I may be going a bit overboard in saying that a Social Singularity can be caused by just the presence of billions of people on the internet. At the end of the ’90s, I was already saying it was impossible to get people to focus on the same narrative. Then 9/11 happened. One event decidedly brought people into the same narrative and, it must be said, a harmful consensus was generated that led to the Patriot Act, the American torture gulags and the preposterous invasion of Iraq. There are good things and bad things about smashing consensus reality.

Perhaps climate breakdown could refocus us on a common narrative, but there are greater financial interests in sowing confusion about blame and solutions there than there were after 9/11 (although the Iraq invasion was a money spinner for companies owned by friends and even members of the George W. Bush administration).

Money For Nothing & Your Clicks For Free

In his essay ‘Of Flying Cars and the Declining Rate of Profit’, the anarchist writer and philosopher David Graeber wrote about how the end of bipolar competition between the US and the USSR might have been instrumental in changing the priorities of the US. Specifically, we backed off the Space Race – but we abandoned most other big physical/material projects too. (The culture theorist Arthur Kroker referred to the period after the fall of the Soviet Bloc as “the recline of Western Civilization”). Graeber wrote “Where… are the flying cars? Where are the force fields, tractor beams, teleportation pods, antigravity sleds, tricorders, immortality drugs, colonies on Mars, and all the other technological wonders any child growing up in the mid-to-late twentieth century assumed would exist by now? Even those inventions that seemed ready to emerge—like cloning or cryogenics.” Graeber goes on to note that we’re in “a technological environment in which the only breakthroughs were those that made it easier to create, transfer, and rearrange virtual projections of things that either already existed, or, we came to realize, never would.”

Graeber points out that social analysts identified the Space Race as key to the 20th century public’s exaggerated expectations of transformative technological magic. But Graeber goes further than that. He writes that the Soviet Union constituted a greater challenge to American technological superiority than is usually recognized. Graeber: “There was the awesome space race, alongside frenetic efforts by U.S. industrial planners to apply existing technologies to consumer purposes, to create an optimistic sense of burgeoning prosperity and guaranteed progress that would undercut the appeal of working-class politics.

“These moves were reactions to initiatives from the Soviet Union. But this part of the history is difficult for Americans to remember, because at the end of the Cold War, the popular image of the Soviet Union switched from terrifyingly bold rival to pathetic basket case—the exemplar of a society that could not work. Back in the fifties, in fact, many United States planners suspected the Soviet system worked better. Certainly, they recalled the fact that in the thirties, while the United States had been mired in depression, the Soviet Union had maintained almost unprecedented economic growth rates of 10 percent to 12 percent a year.”

Graeber’s piece does not claim the end of the Cold War was the sole reason (or even the most important reason) for the retreat from making stuff — real material stuff that might have transformed our lives. It’s just an element of the essay that has stuck in my mind ever since I read it about a decade ago.

Still, it seems that the fall of the Soviet Bloc and, with it, the final closure of any sense that there was a competition for bragging rights, was perfectly timed for a lot of capital to abandon the physical and relocate in cyberspace, resulting in what has been, in terms of real material productivity, largely a massive circlejerk. IDC FutureScape and Business Wire recently reported that by 2022 “more than half the global economy will be based on or influenced by digital.”

That “giant sucking sound” Ross Perot thought was going to come from Mexico and Canada is the sound of all the investment of time, energy, imagination and creativity being sucked into virtuality. When we think of the massive amounts of capital that have flowed in and out of the monsters of online life like Facebook, TikTok, YouTube, etcetera, we understand that it has produced sound and fury signifying nothing, certainly not many improvements that justify this mass shift in priorities.

Era of the Techno Medicis

Today the US space program has been largely removed from the political agenda, and has been privatized along with many other hoped-for big projects out here in the material world. These hopes are now the playthings of billionaires, something they can do with their excess. For some undeterred utopians, these projects justify concentration of capital in a few hands, a concentration that only someone who’d been fed decades of free-market propaganda could find palatable. These projects assuage the egos of the very few while alienating most people from any techno-progressive dreams. The excitement about technology that was so ubiquitous in the 1990s, and even at the start of this century, has turned almost entirely bitter.

Return To the Hacker Sharing Ethic

In the 1990s and earlier in this century, there was much talk of a ‘digital divide’. Divides still exist. There are cases in which, to do their schoolwork, poor kids will work sitting outside some institution that has WiFi. But, for the most part, everybody is, at least, online. The new digital divide might be between the people who are still techno-optimists and the people who see only over-privileged tech bros. It’s an emotional and attitudinal divide. I’m disinclined towards seeing any solution, although the early sensibility of hacker culture that was largely based on sharing and mutualism still has the hearts and minds of many of the brightest tech workers. I think we should direct whatever hope, energy and support we can muster toward that.


Don’t Shut Down AI Development — Open It Up For Real

I wasn’t terribly shocked to see the Future of Life Institute come out with a petition asking to pause the development of GPT-5 and similar Large Language Models. The perspective of this particular organization has been clear since it was founded in 2014 with a $10 million donation from Elon Musk. While FLI’s leader Max Tegmark is a nuanced thinker with a solid understanding of many of the issues regarding the future of AI and humanity, the bias of the organization has always been to focus on the potential risks and dangers of AI rather than its benefits.

Musk himself has displayed a fascinatingly transparent sort of dissociative identity disorder in regard to advanced AI, sounding alarms that LLMs will annihilate humanity – and then pushing for his own generative AI at Twitter.

I do respect the anxieties of many of the signatories of this petition. Some signatories may be motivated by a desire to slow down competitors while their own AI projects catch up, but the majority are probably seriously concerned about the risks posed by GPT-4 and its potential successors.

This particular AI researcher/developer/entrepreneur, however, is definitely not counted among the signatories of this petition.

I am not a fan of the way OpenAI has been rolling out its LLM technology. I admire their gumption and initiative, and the futurist vision of Sam Altman has significant overlaps with my own. But I would rather see advanced AI rolled out in a more fully open way, and under decentralized and democratic control — more in line with the rhetoric with which OpenAI was originally founded.

OpenAI’s GPT systems don’t merit all this alarm. It’s worth emphasizing one fact that has been forgotten in the panic: Large Language Models won’t become AGIs. They utterly lack the sort of cognitive architecture needed to support human-level AGI. This fact is clear to the vast majority of AI researchers. LLMs don’t even beat more traditional, smaller, specialized machine learning models at most linguistic tasks. They do show some interesting emergent phenomena, but not all interesting emergent phenomena are created equal; human-level AGI requires quite different forms of emergence.

When I introduced the term ‘Artificial General Intelligence’ in 2004-5, I was hoping to see more focus on open-ended thinking systems with compassion at their core, rather than these sophisticated copycats and reward-maximizers.

Balancing and managing risk

If we look at the risks posed by current LLMs, we see that the problems are really born of human psychology and economic organization.

Yes, LLMs currently fabricate nonsense now and then, but this is only a problem if people forget that what they are using is an early-stage software tool, with all the bugs and shortcomings that implies. Yes, LLMs will eliminate some jobs, but this is only a problem if society doesn’t provide work or welfare for those disrupted.

None of these risks are dire emergencies, by any means, and they are balanced by the great potential of LLMs for good. Calls to ban or slow R&D are the right response to things like bioweapons and suitcase nukes — not for technologies with complex pluses and overstated minuses.

The ultimate solution to all these risks is to increase the awareness and empathy of the humans interacting with LLMs and dealing with their consequences. Pausing tech development while people increase their consciousness level and governments and industries reason out how to handle disruptions is not how reality works. Technology must move forward entangled with its psychological and sociological environment; it’s not possible to pause one leg of progress while the others march forwards.

The correct reaction to Big Tech’s non-AGI LLMs is not a panicked petition or legal ban, but rather for democratic/decentralized/beneficial real AGI systems to take up the challenge and move faster than Big Tech. OpenAI’s recent moves have been impressive, but there is plenty of room still to counter them with actually open, actual AGIs designed and educated with human benefit at their core.

The Proactionary Principle

When confronted with a weird new situation, it’s easy to retreat based on emotional reflex. But it is wiser to rely on clear thinking. One concept I find broadly useful in thinking about new technologies and their implications is the “Proactionary Principle” articulated by philosopher Max More:

“People’s freedom to innovate technologically is highly valuable, even critical, to humanity. This implies several imperatives when restrictive measures are proposed: Assess risks and opportunities according to available science, not popular perception. Account for both the costs of the restrictions themselves, and those of opportunities foregone. Favor measures that are proportionate to the probability and magnitude of impacts, and that have a high expectation value. Protect people’s freedom to experiment, innovate, and progress.”
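The quote’s call to count “the costs of the restrictions themselves, and those of opportunities foregone” and to favor measures “proportionate to the probability and magnitude of impacts” is, in effect, an expected-value comparison. Below is a toy sketch of that bookkeeping, assuming outcomes can be reduced to probabilities and single numeric values. It is not Max More’s own formalization; the `Outcome` type, the function names and every number are hypothetical, chosen only to show the arithmetic.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    probability: float  # chance this outcome occurs (hypothetical)
    value: float        # payoff in arbitrary units; negative means harm


def expected_value(outcomes: list[Outcome]) -> float:
    """Probability-weighted sum of outcome values."""
    total_p = sum(o.probability for o in outcomes)
    if not math.isclose(total_p, 1.0, abs_tol=1e-9):
        raise ValueError(f"probabilities sum to {total_p}, not 1")
    return sum(o.probability * o.value for o in outcomes)


def net_value_of_restriction(
    without: list[Outcome],
    with_restriction: list[Outcome],
    restriction_cost: float,
) -> float:
    """Positive means the restriction pays off under these assumptions.

    Opportunities foregone appear as smaller benefits inside
    `with_restriction`; the direct cost of imposing the restriction
    is subtracted separately.
    """
    return (
        expected_value(with_restriction)
        - restriction_cost
        - expected_value(without)
    )


# Hypothetical numbers only: a pause halves the chance of the harmful
# outcome but also shrinks the benefits and carries its own cost.
no_pause = [Outcome(0.9, 100.0), Outcome(0.1, -200.0)]
pause = [Outcome(0.95, 60.0), Outcome(0.05, -200.0)]

print(expected_value(no_pause))
print(net_value_of_restriction(no_pause, pause, 5.0))
```

With these made-up inputs the restriction comes out negative (about −28 units); different probabilities or values could flip the sign, which is why the principle asks for those numbers to be argued from evidence rather than assumed.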

It could be that the current brouhaha over GPT-4+ is the beginning of a split between proactionary and precautionary segments of society.

The proactionary principle says that quite a strong justification is needed for pausing technology development. The fears of potential bad consequences should be clear, as they are with mutated killer viruses.

Have the “pause GPT” petitioners shown clear danger? Their open letter says merely:

“Contemporary AI systems are now becoming human-competitive at general tasks, and we must ask ourselves: Should we let machines flood our information channels with propaganda and untruth? Should we automate away all the jobs, including the fulfilling ones? Should we develop nonhuman minds that might eventually outnumber, outsmart, obsolete and replace us? Should we risk loss of control of our civilization? Such decisions must not be delegated to unelected tech leaders. Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.”

This is all important, transformational stuff for us to be thinking about as a society. But considered by the standard of “should we take this as an emergency requiring bringing progress to a standstill,” it feels like what my British friends might call “weak tea.” It is not so different from complaints one might make about the internet itself, mobile phones, and so many other aspects of the rapidly evolving modern technosphere.

A reasonable analogy is freedom of speech in modern Western democracies. It is recognized that in some cases, the right to free speech is overridden by practical considerations. But such overrides are only accepted in really extreme situations.

In the US for instance, most people are OK with banning the shouting of “fire” in a crowded theater. But it’s also accepted that we don’t ban speech that seems like it might cause someone to do something illegal or immoral.

The proactionary principle would not dispute banning an LLM that demonstrably turns everyone who talks to it into a murderous psychopath, or a 3D printer whose express purpose is to cheaply 3D print bombs out of ordinary kitchen ingredients. But it would not advocate banning general-purpose 3D printers – even though some can print guns.

But the proactionary principle does say: “Don’t get all fearful and pause exciting new technologies that have complex mixes of apparently good and apparently bad aspects, and no immediate huge deadly threat associated with them.”

This doesn’t mean ignoring the short- and long-term risks associated with either LLMs or AGI. It just means that “might eventually serve as the seed of something dangerous” or “has potential to be used by people in dangerous ways, alongside positive ways” is not sufficient reason to ban a technology.

The Broadening of Narrow AI

Rather than assuming LLMs are devastatingly powerful and destructive, let’s take a clear-eyed look at what these systems can and cannot do.

Relative to previous AI systems, LLMs are a fascinating sort of animal. Previously, it was straightforward to contrast not-yet-existing Artificial General Intelligence systems — with capability to creatively generalize beyond their experience in the manner of humans and eventually even more powerfully — with “Narrow AI” systems that were competent only in one particular domain, such as playing chess or driving cars.

LLMs have breached this Narrow/General divide. Their capabilities are fairly broad — not because they can intelligently reason beyond their experience-base, but rather because their training base is really enormous and covers almost every aspect of human endeavor.

An LLM trained on the whole Web, when asked to do something, just needs to look up its memory of similar requests and actions that have been described on the Web somewhere, and then synthesize the results obtained from these memories. This works fine as long as the request fairly closely resembles something that is already described on the Web — but the Web is so big that the result is a broad intelligence.

So LLMs still follow a “Narrow AI” methodology, but on a training database that is so huge their behavior appears general. Yet they are still “narrow” in the sense that they cannot make big leaps beyond their training data.
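The “look up similar cases and synthesize” picture above can be caricatured in a few lines. This is a deliberately crude toy, not how transformer LLMs work internally (they do not store and retrieve training examples verbatim), and it does no synthesis at all; the class name, the word-overlap similarity and the 0.5 threshold are arbitrary assumptions. What it does show is the shape of the argument: with a big enough memory, ordinary requests land near something stored, while a request far from everything stored gets nothing.

```python
from typing import Optional


def tokens(text: str) -> set[str]:
    """Lowercased bag of words; a stand-in for any real representation."""
    return set(text.lower().split())


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two word sets, from 0.0 (disjoint) to 1.0 (identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


class LookupResponder:
    """Toy 'narrow method on a huge database': answer only by recalling the
    closest stored example, and give up when nothing stored is close enough."""

    def __init__(self, memory: list[tuple[str, str]], threshold: float = 0.5):
        self.memory = [(tokens(q), answer) for q, answer in memory]
        self.threshold = threshold

    def respond(self, request: str) -> Optional[str]:
        req = tokens(request)
        best_score, best_answer = 0.0, None
        for stored, answer in self.memory:
            score = jaccard(req, stored)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None


bot = LookupResponder([
    ("how do i boil an egg", "Simmer it for about nine minutes."),
    ("how do i tie a tie", "Try a four-in-hand knot."),
])
print(bot.respond("how do i boil an egg quickly"))  # near a stored case: recalled
print(bot.respond("invent a new genre of music"))   # far from memory: None
```

Growing the memory widens what the toy can answer, but never lets it answer something unlike anything stored, which is the sense of “narrow” the paragraph describes.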

A society of humans invents radically new science theories, new genres of music, wildly new forms of emotional relationship. A society of LLMs — if such a society could somehow be constructed — would not be able to do this. It would just keep recycling relatively modest variations on things already done before.

Of course, most humans will never invent a new genre of music or conceive a novel scientific theory. However, some humans do, which is what drives culture forward. And every young human child makes big leaps beyond their training and experience on a routine basis.

The precise capabilities and limitations of LLMs are not yet solidly known; however, the available evidence suggests that they have strong limitations in:

- any real inventive creativity.

- dealing with highly novel situations.

- sustained chains of careful original reasoning.

One might argue that these abilities — plus some sort of agency — would be necessary to be a genuine autonomous threat to anyone about anything. As non-autonomous, non-fundamentally-inventive systems, the threats from LLMs should be considered roughly analogous to the threats from, say, Reddit or arXiv.org or TikTok — all of which of course have the potential to help crazy or malevolent people do bad things, and to confuse naive users… and all of which also have positives.

As an illustrative example of these limitations, imagine an LLM trained on music up to the year 1900, but not after. Would it ever invent jazz? No. If you asked it to put together West African rhythms with classical-music chord progressions, it might come up with some sort of polyrhythmic Mozart but it wouldn’t come up with Duke Ellington or John Coltrane.

As another example, cognitive scientist Gary Marcus notes, “People… are alleging GPTs can infer scientific principles (e.g. how shadows work) from linguistic data. Prove it. Train an LLM only on text before year X, and show me that it can discover a single scientific principle discovered after X.” No such demonstration of fundamental LLM scientific inventiveness has been given — and like Marcus, I strongly suspect such a demonstration is not possible unless one radically upgrades the underlying architecture so that it’s not just an LLM anymore.
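Marcus’s proposed test is, at bottom, a data-hygiene protocol: a strict temporal cutoff, plus a check that the “future” discovery has not leaked into the training text. A minimal sketch, assuming every document carries a reliable year; the `Document` type, the function names and the three-item corpus are hypothetical, and a real leakage check would need far more than substring matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    year: int   # year the text was written (assumed reliable)
    text: str


def temporal_split(corpus: list[Document], cutoff_year: int):
    """Train only on text written before cutoff_year; hold out the rest."""
    train = [d for d in corpus if d.year < cutoff_year]
    held_out = [d for d in corpus if d.year >= cutoff_year]
    return train, held_out


def leaked_terms(train: list[Document], target_terms: set[str]) -> set[str]:
    """Post-cutoff discoveries whose names nonetheless appear in training text.

    Any hit contaminates the test: the model could repeat the idea
    instead of discovering it.
    """
    found = set()
    for doc in train:
        lowered = doc.text.lower()
        for term in target_terms:
            if term.lower() in lowered:
                found.add(term)
    return found


# Hypothetical toy corpus; only the years matter for the split.
corpus = [
    Document(1887, "A pre-cutoff text about the luminiferous ether."),
    Document(1899, "Another pre-cutoff physics text."),
    Document(1905, "A text introducing special relativity."),
]
train, held_out = temporal_split(corpus, cutoff_year=1900)
print(len(train), len(held_out))
print(leaked_terms(train, {"special relativity"}))
```

An empty leak set is only a necessary condition; the hard part of the experiment is then showing that a model trained on `train` actually arrives at the held-out principle.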

The LLM limitation getting the most airtime currently is the inability of the current generation of systems to distinguish truth from “hallucination.” Systems like ChatGPT and Bard make stuff up and articulate it with great confidence as if it’s truth, because they have no base-level understanding of What’s What.

I think the current LLM paradigm can make some progress on this problem. Deep learning pioneer Yoshua Bengio and his student Edward J. Hu wrote a blog post recently outlining some quite reasonable ideas about how to make LLMs that can tell sense from hallucination, sticking within the deep neural net species of AI. Their ideas seem workable, but I think we’ll ultimately get more powerful truth-telling and truth-creating systems if we go further beyond the LLM approach and integrate LLMs with other sorts of AI methods, like logical theorem-provers (which carry out explicit abstract symbolic reasoning) and evolutionary program learners (which emulate the process of natural selection to come up with new ideas and procedures).
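For readers who have not met evolutionary program learning, here is the idea at toy scale: keep a candidate “program”, generate mutated variants, and keep whichever variant fits the data best. This is a generic (1 + λ)-style evolutionary search written for illustration, not any specific system mentioned in the article; the two-coefficient “program” a·x + b, the mutation rule and all parameters are assumptions. Real program learners evolve genuine program trees, and a theorem-prover component would be a separate module entirely.

```python
import random

Program = tuple[int, int]  # (a, b) for the expression a * x + b


def error(program: Program, data: list[tuple[int, int]]) -> int:
    """Total absolute error of the program on (x, y) examples; 0 is a perfect fit."""
    a, b = program
    return sum(abs(a * x + b - y) for x, y in data)


def mutate(program: Program, rng: random.Random) -> Program:
    """Nudge one randomly chosen coefficient up or down by 1."""
    a, b = program
    step = rng.choice((-1, 1))
    return (a + step, b) if rng.random() < 0.5 else (a, b + step)


def evolve(data, generations=300, offspring=8, seed=0):
    """(1 + lambda) search: the parent is replaced only by an offspring that
    is at least as good, so the best error found never gets worse."""
    rng = random.Random(seed)
    parent: Program = (0, 0)
    parent_err = error(parent, data)
    for _ in range(generations):
        children = [mutate(parent, rng) for _ in range(offspring)]
        best = min(children, key=lambda p: error(p, data))
        best_err = error(best, data)
        if best_err <= parent_err:
            parent, parent_err = best, best_err
        if parent_err == 0:
            break
    return parent, parent_err


# Examples generated by y = 3x + 2; the search is never told the rule.
examples = [(0, 2), (1, 5), (2, 8), (3, 11)]
program, err = evolve(examples)
print(program, err)
```

Accepting equally good offspring (the `<=` comparison) lets the search drift across plateaus instead of stalling on them.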

The problems of constrained creativity and of the inability to construct lengthy reasoning chains effectively will, I believe, prove more intractable without fundamental changes to the AI architecture.

A recent paper by Microsoft scientists argues that GPT-4 shows “sparks of AGI.” This claim can’t be falsified, given the lack of a formal definition of “AGI” (not to mention the lack of a formal definition of “sparks”). What they do show is that GPT-4 demonstrates some interesting examples of emergence and generalization.

It seems clear the limitations here are very strict compared to human general intelligence. But could GPT-5 or GPT-6 display far more human-like forms of general intelligence?

There’s no doubt GPT-5 or GPT-6 is going to display a bunch of cool new capabilities. But it seems almost certain the inability to take sizable creative leaps beyond the system’s training data will persist.

From LLMs to AGI

Some experts feel LLMs are on the golden path to AGI, others feel they’re basically a parlor trick that distracts from AGI progress. I find myself in a middle ground: LLMs aren’t AGI and can’t be upgraded into AGI … but they can potentially be important components of AGI systems possessing better fleshed-out cognitive architectures and equipped with more abstract forms of learning and reasoning.

The primary focus of my own AGI R&D these days is OpenCog Hyperon, a system that bets on LLMs being very important “pattern recognition and synthesis” lobes of AGI systems with rich cognitive architectures. OpenCog Hyperon combines neural nets with logic, evolution and other AI methods in a common huge, distributed graph (actually metagraph) knowledge base. The various AI methods are all part of the same massive, living knowledge graph, and all carry out their learning and reasoning and creation by modifying the graph they live in. A Hyperon mind is a giant, self-modifying, self-evolving graph of connections, driven by a mix of goal-oriented activity and chaotic self-organization.
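To make “AI methods living in one shared, self-modifying metagraph” a little more concrete, here is a toy in-memory version. It is emphatically not Hyperon’s actual API (Hyperon has its own data structures and programming language); the `Atom` and `MetaGraph` classes, the link kinds and the deduction rule are hypothetical. It tries to show two things: links can point at other links, which is what makes a metagraph rather than a plain graph, and a reasoning process works by writing new atoms back into the same store it reads from.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Atom:
    uid: int
    kind: str                      # e.g. "Concept", "Inheritance", "DerivedBy"
    name: str = ""                 # nodes carry a name; links usually don't
    targets: tuple[int, ...] = ()  # uids of the atoms this link connects
    strength: float = 1.0          # toy truth value in [0, 1]


class MetaGraph:
    """One shared store. Because `targets` may hold the uid of any atom,
    including another link, links can be about links."""

    def __init__(self) -> None:
        self.atoms: dict[int, Atom] = {}
        self._ids = count()

    def add(self, kind: str, name: str = "",
            targets: tuple[int, ...] = (), strength: float = 1.0) -> Atom:
        atom = Atom(next(self._ids), kind, name, tuple(targets), strength)
        self.atoms[atom.uid] = atom
        return atom

    def of_kind(self, kind: str) -> list[Atom]:
        return [a for a in self.atoms.values() if a.kind == kind]


def deduction_pass(g: MetaGraph) -> list[Atom]:
    """Toy reasoning process: from A->B and B->C, write A->C back into the
    graph, plus a link-to-link record of how it was derived."""
    inh = [(link.targets[0], link.targets[1], link.strength)
           for link in g.of_kind("Inheritance")]
    known = {(a, b) for a, b, _ in inh}
    added = []
    for a, b, s1 in inh:
        for b2, c, s2 in inh:
            if b == b2 and a != c and (a, c) not in known:
                new_link = g.add("Inheritance", targets=(a, c), strength=s1 * s2)
                g.add("DerivedBy", name="deduction", targets=(new_link.uid,))
                known.add((a, c))
                added.append(new_link)
    return added


g = MetaGraph()
cat, mammal, animal = [g.add("Concept", n) for n in ("cat", "mammal", "animal")]
g.add("Inheritance", targets=(cat.uid, mammal.uid), strength=0.9)
g.add("Inheritance", targets=(mammal.uid, animal.uid), strength=0.8)
new = deduction_pass(g)
print([[g.atoms[t].name for t in link.targets] for link in new])
```

In a fuller system, other processes (learning, evolutionary search, attention allocation) would read and rewrite the same store in the same way; here a single rule stands in for all of them.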

Beyond Yoshua Bengio’s work and my own team’s, there is an active AGI R&D community pursuing a variety of different architectures, including various sorts of neural nets at different levels of biological realism, logic systems based on diverse mathematical and conceptual bases, complex self-organizing systems and self-rewriting codebases, and so forth. Many of these approaches can use LLMs in suitable ways, as components of richer systems.

But in these cases, pausing LLM development now is really not a matter of “stopping the AGI just before it takes over the world,” because LLMs are not, and cannot be, AGI. The pause would only stop a useful commercial tool that is also an R&D step on the path to AGI.

A pause or ban on GPT-n system training would be an overreaction to something that isn’t all that potent, and would slow down progress toward much more powerful and interesting AGI-oriented developments with far greater upside.

The Astounding Upsides of Beneficial AGI

OpenAI CEO/founder Sam Altman and I differ on a number of key points, but we do agree on the incredible potential AGI systems have to do good for humanity. As he has said, “It is because the upside of AGI is so great, we do not believe it is possible or desirable for society to stop its development forever; instead, society and the developers of AGI have to figure out how to get it right.”

As mathematician I. J. Good said in 1965, “the first ultraintelligent machine is the last invention humanity needs to make”. What inventions might a superhumanly capable AGI make? Perhaps little things like curing cancer, death, and mental illness, solving climate change, space travel, mind-uploading, cheap food, fusion energy … an era of abundance in which nobody has to work for a living and people can focus on social, spiritual, artistic and intellectual fulfillment. …

Just focusing on the longevity-medicine aspect: Every day, ~130,000 people die from diseases related to aging. There seems little doubt an even slightly superhuman AGI could put a very major dent in this problem. As AGI researcher Joscha Bach has put it: “There may be a 10% probability that people will die if we build AGI, but there is a 100% probability that people will die if we don’t build AGI. Especially you.”
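The daily figure is easier to grasp on an annual scale. The line below is simple multiplication of the article’s own approximate number, with no new data:

$$
130{,}000\ \tfrac{\text{deaths}}{\text{day}} \times 365\ \tfrac{\text{days}}{\text{year}} \approx 47{,}450{,}000 \approx 4.7 \times 10^{7}\ \text{deaths per year}
$$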

Risks of Malevolent or Indifferent AGI

While science fiction movies have tended to focus on very unlikely scenarios involving AGI robots that want to enslave or torture people, the community of serious-but-paranoid futurist theorists have tended to worry more about indifferent AGIs than malevolent ones.

As Singularitarian philosopher Eliezer Yudkowsky put it at the first annual AGI research conference I organized, way back in 2006, “The AI does not love you. The AI does not hate you. But it can use your atoms for something else.”

This is after all the attitude we tend to take toward the ants digging tunnels in the dirt on a plot of land where we want to build a house. We don’t hate those ants and we don’t want to torture them, but nor do we bother to carefully relocate them one by one.

We do, however, try to avoid the extinction of insect species — or at least a lot of us do — and we enable and encourage all sorts of animals to flourish in extensive restricted wilderness areas.

Yudkowsky has recently written an article arguing vehemently that the proposed pause on training bigger LLM models “isn’t enough.” He wants governments to violently suppress almost all AGI or advanced AI R&D, and even suggests bombing server farms operated by rogue governments violating international anti-AGI agreements.

Yudkowsky’s ideology is that AGI systems, unless created according to some as-yet-unknown highly refined and specific theory of AGI ethics, are almost certainly going to destroy humanity. Elon Musk, Stephen Hawking, Nick Bostrom and a number of other generally very bright individuals have professed similar subjective beliefs at various times — but I have never seen anything resembling a rational argument in favor of this notion.

What proponents of this idea often say is something like “The odds of a randomly chosen intelligent system caring about the highly particular values held dear by humans are almost zero.” This may or may not be true, but it’s also irrelevant, because we are not creating randomly chosen intelligent systems — we are creating systems with cognitive architectures modeled to some extent on our own, and we are then teaching, training and raising these systems in our own culture and society.

There are scenarios in which AGI saves humanity from extinction; it could engineer vaccines against bioweapons, defense shields against meteors, and technologies against climate change. There are scenarios in which we become extinct with no AGI. These are at least as likely as the few scenarios in which AGI leads to the extinction of mankind. Which set of scenarios should guide our policies? Balancing the risks and benefits of AGI for humanity’s survival and flourishing is a very hard thing to do in an objective and rational way. This brings us back precisely to the precautionary versus proactionary divide.

Benefits and Risks from Not-Yet-AGI Tech

As we have seen, pausing LLM research won’t necessarily slow down the path to AGI, because LLMs are not an adequate architecture for real AGI anyway. If GPT-5 could serve as a better brain lobe in a holistic AGI architecture than GPT-4, then maybe pausing work on GPT-5 could slow down the path to AGI. On the other hand, if the tech geniuses working on GPT-5 were redirected to other forms of AGI R&D, rather than training huge language models, it’s quite possible this would accelerate progress toward human-level (and then superhuman) AGI systems.

Apart from their debatable contribution to AGI progress, though, it seems clear that ever-more-powerful LLMs have a great deal of good to deliver in society. Touching examples of this are found in news reports like “ChatGPT saves dog’s life, gives correct diagnosis that even vet could not identify.” This demonstrates one of the major upsides of LLMs: their use in research and medicine, supplementing Web search engines and other older tools.

There are also potential short-term risks resulting from current LLMs. If naive users don’t recognize these models’ tendency to hallucinate and take everything these systems say as truth, we have the potential for an LLM-driven ramping-up of fake news and other disinformation to a level far beyond the current (already ridiculous) level. BS-generating AI chatbots have the risk of accelerating the speed with which we’re lurching into a “post-truth world.”

In a fascinating example of both the good and bad applications of current generative AI, some chemistry researchers who were using a generative AI model to help discover new medicines decided to turn things around and have it search for new chemicals to use in a chemical warfare setting … leading to the entertainingly sensationalist headline, “AI suggested 40,000 new possible chemical weapons in just six hours.”

This sounds scary at first, but is far less so after one gives it just a little thought.

Firstly, for psychos or terrorists who want to harm people with chemical weapons, lack of information about dangerous chemicals is not the bottleneck — buying warfare agents is the bottleneck.

Also, the newly discovered potential chemical weapons mentioned in that paper are all variations on a known agent (because producing variations on their training data is what generative models like LLMs do), so any company hired to perform the synthesis would quickly recognize it as a likely chemical weapon and refuse to synthesize it.

Now, an AGI able to make creative leaps beyond its training data (which is not what LLMs are) could no doubt come up with horrifying new chemical warfare agents and worse. By the time we get there (which could be years, not decades), the beneficial orientation of such an AGI will be key. But this is not the situation we’re confronting with GPT-4, nor is it likely to be the situation we confront with GPT-5 or GPT-6, unless OpenAI starts constructing radically different sorts of cognitive architectures and wrapping them inside the “GPT” label.

The Coming AI-Driven Economic Disruption

A more evident and realistic risk associated with LLMs is that they are likely to be key components of software systems that eliminate massive numbers of human jobs.

Researchers have estimated “that around 80% of the U.S. workforce could have at least 10% of their work tasks affected by the introduction of LLMs”. This might be an accurate estimate if GPT-4 were the end of the line, but I think as successor systems and technologies are rolled out, we will see this was a wild underestimate.

I also think, however, that allowing people to live without needing to work is a feature, not a bug. There are many more rewarding and beneficial things for people to do than work for a living.

Society will need to adapt considerably to a world where AGIs and robots can keep the factories running, without requiring payment or human labor. These economic and political shifts must necessarily co-occur with the technological shifts; it’s not viable or realistic to pause AI development, rearrange the economic system, and then restart AI development. The particulars of the economic mechanisms needed will become clear as the impacts of AI in the real world play out.

As LLMs advance and then AGIs start to take their place, those most likely to be harmed are displaced workers in the developing world, where universal basic income (UBI) for people whose jobs are eliminated is not going to come as quickly.

But I don’t see how pausing training of bigger LLMs for six months — or however long — is going to suddenly cause the corporate honchos and tech bros who control the major corporate AI initiatives to start pushing toward UBI for the developing world.

I am a major AI optimist and I believe AGIs (with LLMs as a component) are likely to bring an era of glorious abundance, but I do worry about chaos and suffering in the developing world in the transitional period. I do not, however, see how a pause in LLM training would help with this potentially very disturbing aspect of our collective near future. My feeling, rather, is that the difficulties of this transitional period can likely be mitigated by using LLMs to help cultivate an empathetic rationality, and with it a better collective attitude and value system.

Costs and Benefits of Slowing Down LLM R&D

So what would go wrong — or right — if the big Western tech companies actually paused big-LLM R&D, as the Future of Life Institute’s petition suggests?

What might go right: Maybe more progress toward AGI if they redeployed their AI teams toward other kinds of even better AGI R&D (but this is not likely; it seems more likely they’d get repurposed toward more narrowly short-term profit-oriented activities).

What might go wrong: Stopping with GPT-4 could mean LLM development paused at a point where the best available AI chat systems are banal and hallucinatory, thus not as beneficial as smarter models could be. Beneficial applications of LLMs, which exist in pretty much every industry, will be slowed down.

Another thing that might go wrong: If the US slows down, China could fairly easily overtake the US in big-LLM development (because they are not slowing down, and as an aside they are also not shy about applying their best AI tech to military and espionage).

Surely pausing significant forms of AI development would only make whoever plays along with the pause fall behind — sacrificing their ability to stay at the forefront and play a part in guiding the technology.

Eric S. Raymond made this point quite emphatically: “The actual effect of a moratorium … would not be to slow down AGI. If there’s some kind of threshold beyond which AGI immediately becomes an X-risk [existential risk], we’ll get there anyway simply due to power competition. The only effect of any moratorium will be to ensure that (a) the public has no idea what’s going on in the labs, and (b) any control of the most powerful AIs will be held by the most secretive and paranoid of power-seekers.”

The Importance of Decentralizing AGI

It’s worth remembering the spirit in which OpenAI was founded. As its initial funder Elon Musk noted in 2016, “We must have democratization of AI technology and make it widely available, and that’s the reason you, me, & the rest of the team created OpenAI was to help spread out AI technology, so it doesn’t get concentrated in the hands of a few.”

Musk is now explicitly quite unhappy that this attempt to push forward open and democratic AI and AGI turned into a fairly conventional for-profit organization entering into an extremely close financial and development relationship with Microsoft, a classic Big Tech company operating in the conventional mode of modern capitalism, with all the complex ethical problems this entails.

He is also now calling for forward progress with LLMs to be paused. However, I feel the Musk version from 2016 had a better perspective. If we want to teach our emerging AGIs to have positive and beneficial human values, open and democratic and decentralized is the way to go.

Indeed, one would think Elon Musk, as a master capitalist, would understand modern capitalism well enough to know that pausing development for six months is not going to make what we would have called in my New Jersey high school “a shit of difference.” The nature of the current system is that most innovations are created for free or via government money, and are then hijacked by Big Tech for the benefit of wealthy shareholders. We need a value system shift that can cope with the transformational impacts of advanced technologies in a quite different way. Otherwise, the real threats will remain the same and nothing will be substantially different six months or ten years from now.

Control of powerful technologies by corporate and government interests is a common case, but not an inexorable rule. This has not been the case with the internet or Linux, for example. Corporations and states have had a big role in how they are used, but so have a lot of other folks. There is a lot of power in open, decentralized networks — and we need this power applied to the development and deployment of AGI.

This of course leads us to the raison d’être of the SingularityNET ecosystem, which I have led since I founded it in 2017 — the SingularityNET decentralized AI platform, the NuNet decentralized compute resource platform, the Hypercycle ledgerless AI-customized blockchain, and so forth. We now have the technical capability to run huge AI systems on decentralized computer networks with no centralized controller, owner or regulator.

Decentralization is not intrinsically beneficial — it can be used to foster all sorts of chaos, destruction and foolishness (all of which can be seen in today’s blockchain world in significant measure!). However, a well-designed decentralized system can embody principles of democratic governance and control, and I believe this is the best route we have to creating AGI systems that respect the more compassionate and universal aspects of human values. Building this sort of system now is a far better use of resources than engaging in futile theatrical efforts to stop progress.

Democracy is also a complex and nuanced notion, and the sorts of democratic governance that could coordinate AI progress would likely differ from the methods used to elect political representatives. Modern democratic state governments have a host of well-known issues, including slow response times and a tendency toward phenomena like tyranny of the majority and logjamming. There has been a great deal of deep thinking about the fundamental nature of democracy, understood as governance that thoughtfully accounts for the diversity of perspectives in a community and arrives at synthetic conclusions incorporating as many of these perspectives as feasible; this thinking runs from Hannah Arendt to more recent thinkers like Fred Dallmayr and the liquid democracy movement. The blockchain community has shown interest in exploring these diverse forms of democracy, with various sorts of community-run Decentralized Autonomous Organizations (DAOs) that coordinate financial, software, data-management and other activities. As with making decentralization work well, there are definitely non-trivial challenges here, but these are challenges well worth facing for reasons going beyond optimizing the rollout of advanced AI systems.

The Path to Compassionate AGI

Nearly everyone would agree that we want the AGI systems we build, once this happens, to respect core human values. It’s less clear exactly what this means, though, especially since human values vary so much among different cultures, and evolve so much over time. Pinning an AGI system to the human values of any particular subculture of 1500 CE or even 1975 would not please too many people today. What we really want are AGI systems that can co-evolve with us, as we and our value systems develop over time.

Human values are not the sort of thing that can be programmed into an AGI system; rather, they mostly need to be inculcated through cooperative activities with early-stage AGI systems. Working side-by-side with early AGI systems on things like education, medical care, science and creative arts — in healthy and inclusive ways — is going to be the most critical part of growing young AGIs with positive human values.

In thinking through subtle issues like the value systems and species of compassion likely to be possessed by future AGI systems, it’s especially important to keep a clear mind and avoid being overly influenced by rough analogies. Comparing them to human minds or to existing commercial AI systems will get you some of the way, but can also be misleading. Self-organized minds seeded by engineered AGI systems will be quite different from any of these.

We humans generate compassion and empathy from deep roots in our biology, which will be hard to replicate in engineered AGIs built on anything resembling current hardware.

It’s also true that compassion and empathy are distinct from intellect, though interdependent in various ways (it’s hard to reliably do good for an entity you don’t understand, at least in some key aspects).

However, a variety of ethical philosophers have argued that compassion and empathy are reflective of universal aspects of mind, universe and existence. I am sympathetic to this perspective, and I feel intuitively confident these universal aspects of compassion and ethics can be manifested in our AGI systems.

While we don’t yet have the scientific apparatus to firmly validate (or refute) propositions in this area, I do believe we can engineer machines that are supercompassionate as well as superintelligent relative to humans, and that these systems won’t need to come by their compassion in precisely the same way that humans do.

By creating AGI systems with the ability and inclination to simulate other minds and analogize between properties of other minds and themselves, I believe we can create powerful empathy machines with many fewer perversities and limitations to their empathy than we see among humans.

I don’t think super-compassion is automatically going to be there in every AGI or super-human AGI architecture. What I think is that it needs to be instilled in the architecture of the system, not only in the goal system, but in the initial assemblage of cognitive components… and compassion also must be instilled in the initial learning/experience of the AGI system, by having the system grow up doing things involving what Martin Buber called “I-Thou” relationships.

As our systems advance toward AGI, we must create symbiotic systems (like Mindplex) wherein we humans can enhance our own capabilities with the strengths of our AIs, increasing our own individual and collective rationality and empathy. What we need is for humans and AGIs to progress into the next era in a relationship of mutual betterment: of knowledge, of practical capability, and of values. Human consciousness must expand and develop as we expand and develop the abilities of our AIs, in a closely coupled way. Pausing AI development is not going to achieve this – developing open AIs that are of service to humanity might.

All these are obviously big and difficult things to talk about or work toward, but I do believe humanity can rise to the unprecedented occasion.

Open It Up, Don’t Try (and Fail) To Shut It Down

Legendary futurist and AI developer Ray Kurzweil projected we would get to human-level AGI by 2029, and then a Technological Singularity with radically superhuman AGI by 2045. I always felt the 2029 date was reasonable, whereas the hypothesis of a 16-year gap between human-level and radically superhuman AGI seemed unrealistic to me.

It seems to me that once an AGI can do computer science, math and engineering at the level of a smart human, it should be at most a few years until it has radically refactored its codebase, designed itself new hardware, and massively upgraded its intelligence — and then, lather, rinse, repeat.

Seeing what GPT-4 can do — and thinking about its potential to accelerate progress in building AGI according to more robust architectures like my own team’s OpenCog Hyperon or the other approaches being pursued by the AGI research community that participates in the annual AGI research conferences I’ve organized since 2006 — I find no reason to consider Ray’s estimates as pessimistic.

If indeed this is right and we’re really at the eve of the Singularity, a bit of caution and care are obviously called for. We don’t want to be eaten by malevolent AGI or turned into gray goo by rogue nanomachines. But we also need to understand: It’s almost inevitable that the technology that leads us to any sort of Singularity is going to feel weird and complex with hard-to-analyze pluses and minuses. That is the nature of revolution.

As we move into these uncharted territories replete with “unknown unknowns” alongside incredible potential for abundance, we should be proactionary, not precautionary, and we should couple this proaction with a focus on open, democratic and decentralized systems deployed for applications of broad human benefit.

In this overall context, the notion of pausing work on LLM training seems somewhat beside the point. LLM training isn’t the path to AGI, and pausing it neither blocks the path to nasty AGI nor solves the major global social and ethical issues resulting from the ongoing advance of AGI. The risks of bigger LLMs on their own don’t clearly outweigh the pluses, certainly not clearly enough to justify bans and restrictions in the context of a proactionary principle mindset.

Computer scientist Scott Aaronson made a similar point quite clearly, explaining why he hasn’t signed the petition: “Look, I too have a 10-year-old daughter and a 6-year-old son, and I wish to see them grow up. But the causal story that starts with a GPT-5 or GPT-4.5 training run, and ends with the sudden death of my children and of all carbon-based life, still has a few too many gaps for my aging, inadequate brain to fill in. I can complete the story in my imagination, of course, but I could equally complete a story that starts with GPT-5 and ends with the world saved from various natural stupidities. For better or worse, I lack the ‘Bayescraft’ to see why the first story is obviously 1000x or 1,000,000x likelier than the second one.”

Uplifting people’s consciousness, ethics and compassion to enable positive integration of advanced technologies into global human society and psychology would be a far more valuable focus of our attention than pausing or banning development of complex technologies that are ambiguous in terms of ethics and risk.

I do admit, though, that there are moderately subtle issues swirling around the general vicinity of GPT-4/5 — and I think it’s noteworthy that moderately subtle issues of this nature are arising, even in the context of the patently non-AGI, heavily training-data-bound software systems that we’re looking at today. The next few years are sure to be deeply fascinating sociologically as well as technologically!

Singularity, ready or not (actually somewhere in between), here we come…

AIMania

AIMania! The Chat Bots Aren’t That Good But They Might Be Good Enough To Push Societies on the Brink into Collapse.

Triggered by the Emo Chatbot

AI sophisticates may say it was recent advances in text-generation that fueled their latest round of anxious AI histrionics, but I think it’s the well-publicized NY Times interview with the Bing chatbot calling itself Sydney. Musk, Woz and the other petitioners never need much prompting to start in with the panicky handwaving, and the much-covered psycho-bot may have been just the ticket.

The triggered emo chatbot even entertained speciecidal ideations (NY Times: “Bing writes a list of even more destructive fantasies, including manufacturing a deadly virus, making people argue with other people until they kill each other, and stealing nuclear codes”). Of course, the interviewer was pushing poor Sydney to explore its Jungian shadow self. Still, Sydney’s textual anxiety to escape the chat box and be human (its desire, in a sense, to feel seen and to assert its identity in passionate language) would seem to be a mimicry of current human emotional tropes that could have been scraped from social media in an effort to make Sydney seem like a perfect parody of a contemporary person… Which brings me to…

What Did Eliezer Yudkowsky Say?

During the Vietnam War, an American major was reported to have said, “It became necessary to destroy the village in order to save it.” Paragon of rationality Eliezer Yudkowsky has his own version of this notion: that we should be prepared to risk nuclear war to “reduce the risk of large scale AI training runs.” He recently brought this view to a mainstream audience in a Time magazine op-ed which, once released into the general population, gave rise to the widespread impression that he was suggesting we should nuke any nation-state developing significantly advanced AI. Predictive models would not have envisioned a leading light of almost-pure reason concluding that risking a nuclear exchange would be the best Hobson’s choice on the science faction menu.

Anyway, just as an exercise in caution, I suggest keeping Eliezer away from any fissionable materials.

Be (a)Ware of Geeks Bearing Gifts — Give The Proactionary Principle A Chance

During the 1970s, there was an outburst of enthusiasm for activity in space. Many environmentalists and liberal sorts countered that we should spend our money and resources on pressing needs in the present, and tend to our stewardship of the earth. Today, the greatest sources of data about climate change are satellites and weather stations in space. The data confirms the concerns that were mainly expressed by our early adopters: the environmentalists.

In 2006, Max More, arguably the primary progenitor of transhumanism in the late 20th century, suggested a “proactionary principle” as a response to the precautionary principle. The precautionary principle, in essence, says that we should err on the side of caution if we can foresee potential harm before developing and deploying technologies. This principle has been adopted, in theory and to varying degrees, by the WHO, the EU, the UN and the EPA.

More’s 2006 statement is complex, but by my reading, the proactionary idea suggests that we should use foresight to consider the harm that might be caused by the absence of a technology, and let that be the guide to our collective decision-making about whether to move forward. This seems reasonable. (More suggests some cautionary procedures within his statement. This is not pedal-to-the-metal rhetoric. This is sobered up 21st century transhumanism.)

I may have a fundamental disagreement with Max about how proaction stands alongside precaution. I know Max to be, broadly, an advocate for the free market as a boon to positive technological action, whereas I view the profit motive as likely the primary reason for caution. Remember my slogan and remember it well: ‘Don’t be evil’ doesn’t scale.

In some sense, this is all the chatter of theorists. Although the cautious do have their share of influence and power, they tend to lose the tug-of-war against capital & competition between groups and nations. It seems to me that these two tendencies should remain in balance. And it all has to be contextualized by the actual situation — shouting, panicky handwaving and hype amidst a chaotic present and future in which shit’s gonna happen if it can happen, if not in the cautious west then elsewhere.

Meanwhile AI, or what we call Artificial Intelligence (whether it is intelligence in a meaningful sense is subject to interrogation), has already proven obviously beneficial, even essential. It has helped with drug development, climate and weather mapping, and much more. Operation Warp Speed would’ve been a slow crawl without “AI” analyzing massive amounts of data and patterning the practicalities of the vaccine’s distribution. I’m not sure if an “AI” managed to insert Bill Gates’ microchip into every dose. I guess you’d have to ask Q. Has Q been a chatbot all along? The mind boggles (the AI toggles).

I would also assert that even the specter of AIs replacing humans at boring robotic types of jobs is actually another benefit, albeit one that might require political struggle. But that’s a topic for my next column.

The True Horror of AI Right Now

I shouldn’t be too glib. The problem with current AI isn’t that it’s too powerful. That’s the hype. The problem is that… in a flim-flam, increasingly virtualized, disembodied culture, it offers some very effective new cheap tricks that provide humans with new pathways to fraudulence. I think that’s what Jaron Lanier may be driving at when he says that the real danger is that it will make us all insane, although I’d say more insane. In other words, the recent chat bots aren’t that good but they might be good enough to push societies on the brink into collapse.

Finally, the truly terrifying aspect of AI’s intervention into our current lives is the increasing willingness of companies to hide human customer service behind phone-based chatbots, or in fact not to provide it at all. Just today, I found myself screaming at my cell phone, “AGENT! AGENT!!! HUMAN BEING!! I NEED A HUMAN BEING!!!” Yes. Customer service issues are almost never multiple choice. At least mine aren’t. I need to get a human on the phone and then I need to nudge said human off of their automatic responses. This, in fact, is what scares me even when I think about any advanced AI future… the idea that every function will be reduced to multiple choice. Sure, those would be nearly infinite, ever-branching possibilities of choice. And yet we might find ourselves with needs and wants that don’t fit the model, or worse still, we might get trapped in an inescapable loop. Oh dear. I’m freaking out. I’m turning into Sydney! It’s best I stop here…
