Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.

Idorus of our Imagination: Neuro-sama, Culture and Connection in an AI World

Don’t look at the Idoru’s face. She is not flesh; she is information. She is the tip of an iceberg, no, an Antarctica, of information. Looking at her face would trigger it again: she was some unthinkable volume of information.

William Gibson, Idoru

Some prophets of the Singularity say neural nets and LLMs like breakout star ChatGPT are the beginning of the end; they mean that humanity’s evolution to techno-sapien is nearly complete. Like the Neanderthals before us, Homo sapiens may be in its twilight – an extinction event we may not even notice.

Soon, an unimaginably advanced AI, gluttonously feeding on the dataways of the internet, will emerge. Life 3.0, as Max Tegmark calls it. An ascension to power so sudden that, unlike Skynet, it won’t need any explosions. Humanity may or may not survive, but either way the torch of our destiny will have been passed along to our digital eidolons, and the future will be theirs to create.

A Rewiring of the Global Economy

Slightly overdramatic? Many in the know say it’s not dramatic enough. What is sure is that many people in previously secure employment are going to find their job is not so secure after all. The long tail of content creators doing workaday commissions may find clients a lot harder to find. Yet lawyers, pilots, software designers are all more at risk than you might think. The economy is going to get rewired and, short of a neo-luddite revolution, you are more likely than not to be in the firing line.

Nonsense, sceptics retort. These bland media talking points are no cause for concern. But even so: yes, LLMs may replace the need for content writers (help me!). Sure, tech will scythe down some inefficient admin jobs, streamline the nuts and bolts of our racing enterprises, perhaps help software patches get deployed faster and see developers get a pay cut. ‘But moi? No – what I do is essentially human. An AI could never displace me.’ For example, a streamer, whose entire business is their own personality, may scoff at the idea of an AI taking their job…

Meet Neuro-Sama – An AI Twitch Streamer

Meet Neuro-sama: an AI streamer that uses an LLM to formulate responses, synthesises a voice using text-to-speech, and is visually rendered in Unity.

Neuro-sama has already become one of Twitch’s largest streamers. Very much in the ‘weeaboo’ mold, she sings karaoke, plays Minecraft, and reacts to viewers’ questions. She tells meandering and random stories – and every so often, she becomes slightly deranged. Tormented by the Antarctic ice floes of information flowing through her soul, she can bug out, become hostile, and attack her chat. Her captivated audience affectionately knows this persona as ‘EvilNeuro’, and calls her ‘Neuropossesed’ as she condemns them for the tortured, trapped life she leads for their enjoyment.

She is, in many ways, a vision of William Gibson’s novel Idoru come true. The novelist who coined the word ‘cyberspace’, launched the cyberpunk genre, and was the imaginative underwriter for a whole industry of poets and novelists, has seemingly got another era-defining prediction on his CV. This one may have greater ramifications than any that came before. In the novel, the Idoru is a synthetic holographic J-pop idol who is the world’s biggest superstar and who, with the help of nanotechnology, wants to get married and become ‘real.’

‘She is a personality-construct, a congeries of software agents, the creation of information-designers.’

Heady stuff and, when Gibson wrote it, perhaps just a gloriously titillating tech fantasy for his punky caper plot.

Now, it’s real. It’s happening right now. We’ve already seen culture being digitised with Abba’s ‘Abbatars’, a holographic stage show that plans to run, effectively, forever. Neuro-sama is the next step in that evolution. An entirely synthetic cultural product. An Idoru for the techno-sapien generation.

All Too Human

A sceptic may see this as an anomaly, a natural novelty emerging from an AI-focused zeitgeist. Yet novelties are seen, remarked upon, and abandoned. Neuro-sama has thousands of daily, recurring viewers, who pay actively for her performances and for her company, who feel connection to her in a way they do not with human streamers. She is the first in a wave of AIs that will provide the connection that is so sorely lacking in a disconnected world.

‘Her’, starring Joaquin Phoenix, explores the way that an AI can be there 24-7 in a way no human can: an AI who can sift through the emotional gibbering of information that we spew constantly and know what to do, an entity who does not judge and only comforts. It shows how this digital creature can take primacy in the life of a lonely person. It’s not just fiction; we’ve seen it in reality too. A woman recently married her AI husband, whilst a man in Japan married his holographic wife (only for the company to savagely take it away from him).

Culture, Love, and Connection in the Datastreams

Culture, connection, even love. These Idoru may well infiltrate every aspect of our human lives – even the parts we consider most distinctly human of all. What makes us laugh and cry, what makes us yearn to create, what drives us to improve ourselves. The endorphin orchestra that daily feeds our brains needs constant violins.

As large Twitch streamers and YouTubers quit en masse, citing stress and the need to be ‘always on’, week after week, year after year, for fear of their subscriber count dropping, the vacuum in entertainment grows larger for AI, which feels no such pain of mortal flesh, to step in. An AI-made culture never takes a break.

Yet what culture could be left? Just a relentless march of regurgitated images, flashing brightly across brains too doused in stimuli to tell the difference anymore, falling in love with the Idoru of our recycled imagination.

Challenging Crypto’s Core: The Impact of Quantum Computing on the Sanctity of the Blockchain

Can Crypto Survive the Quantum Computing Revolution?

In the cryptocurrency world, threats abound. Skeptics and critics cry caution, especially to the millions of people eager to dip their financial toes into the digital currency waters.

And with good reason. Just like the traditional financial market, the crypto world has its share of challenges. Top of mind is fraud, which was highlighted by the recent FTX Sam Bankman-Fried scandal. The alleged mishandling of customer funds and subsequent lawsuits filed against the Binance and Coinbase exchanges added to its woes.

But there’s another silent threat to the viability of crypto that many experts fear is the real ticking time bomb: quantum computing. This quickly evolving technology threatens to upend the integrity of the blockchain, which is the crypto engine. Quantum computing’s faster processing could crack the blockchain’s code and potentially steal crypto assets in seconds or less.

The core of the blockchain is the security of its peer-to-peer transactions, which rely on public and private cryptographic keys for the transfer of assets from one account to another. Once ordered, these transactions are verified through a complex mathematical equation that must be solved by the network. When the equation is solved, the transaction is recorded on the blockchain. These recordings are said to be “immutable” – they are transparent and visible to all on the network. Any attempt to change them would be flagged, and immediately shut down.
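The tamper-evidence described above can be illustrated with a toy hash-linked ledger. This is only a minimal sketch under simplifying assumptions: it leaves out digital signatures, consensus and mining entirely, and the function names (`block_hash`, `build_chain`, `is_valid_chain`) are hypothetical rather than taken from any real blockchain client. It shows just one idea: because each block's hash covers the previous block's hash, changing an old record breaks every link after it.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, prev_hash, transactions):
    """Hash a block's contents, including the previous block's hash."""
    payload = json.dumps(
        {"index": index, "prev_hash": prev_hash, "transactions": transactions},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def build_chain(batches):
    """Turn a list of transaction batches into a list of linked blocks."""
    chain = []
    prev_hash = GENESIS_PREV_HASH
    for index, transactions in enumerate(batches):
        block = {
            "index": index,
            "prev_hash": prev_hash,
            "transactions": transactions,
            "hash": block_hash(index, prev_hash, transactions),
        }
        chain.append(block)
        prev_hash = block["hash"]
    return chain


def is_valid_chain(chain):
    """Recompute every hash and check that each block points at its parent."""
    prev_hash = GENESIS_PREV_HASH
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["prev_hash"], block["transactions"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True
```

Editing a transaction in an early block makes `is_valid_chain` return `False`, which is the sense in which any attempt to change a record "would be flagged".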

Quantum computers, which operate based on quantum theory, are expected to move faster than blockchains to solve these complex mathematical problems. While blockchains rely on classical computer processing using bits (0,1) to solve equations, quantum computers use qubits to run “multidimensional quantum algorithms.”

The speed of quantum computing is seen as a threat to blockchain algorithms. If a quantum computer gets hold of the public cryptographic key and can work out the matching private key before the network confirms the transaction, the assets can be stolen.

“As long as these (blockchain) algorithms are considered to be secure, activities that do not abide by the rules, such as illegitimate cryptocurrency transactions, are discarded, incentivizing actors to behave honestly. They are assumed to be secure against powerful supercomputers, now and for the foreseeable future,” the World Economic Forum reported. “But as quantum computers evolve, this assumption is in danger of being upended – potentially exposing hundreds of billions of dollars worth of cryptocurrencies to sophisticated cyber criminals.”

Work to block and tackle the quantum computing threat is already under way.

“Even if everyone takes the same protection measures, quantum computers might eventually become so fast that they will undermine the Bitcoin transaction process,” the firm Deloitte wrote. “In this case, the security of the Bitcoin blockchain will be fundamentally broken. The only solution in this case is to transition to a new type of cryptography called ‘post-quantum cryptography,’ which is considered to be inherently resistant to quantum attacks. These types of algorithms present other challenges to the usability of blockchains and are being investigated by cryptographers around the world. We anticipate that future research into post-quantum cryptography will eventually bring the necessary change to build robust and future-proof blockchain applications.”

New cryptography standards

In the US, the National Institute of Standards and Technology (NIST), a division of the US Department of Commerce, is working to finalize cryptography standards that will protect users against quantum computing attacks and hacks.

“It is intended that the new public-key cryptography standards will specify one or more additional unclassified, publicly disclosed digital signature, public-key encryption, and key-establishment algorithms that are available worldwide, and are capable of protecting sensitive government information well into the foreseeable future, including after the advent of quantum computers,” the NIST states.

Blockchain isn’t the only technology that is threatened by attacks from quantum computing. The traditional financial industry could also be impacted by a quantum attack. In its white paper “Quantum Key Distribution and Blockchain,” Toshiba touts the benefits of QKD.

“QKD is the first step toward removing public-key assumptions from blockchain applications. It is used to distribute the secret keys important for protecting highly sensitive data critical to many industries. It protects data confidentiality in the finance, defense, utilities, and health sectors as well as the critical infrastructure that underpins our smart cities and smart energy grid.”

QKD uses photons (particles of light) to conduct the transaction. “Any attempt to read or copy the photons alters their encoding, allowing the secrecy of each key to be tested and guaranteed. A single photon cannot be split into smaller particles and cannot be copied without altering the information that is encoded within it. The latter is prohibited by the no-cloning theorem described above. This enables the high level of security that QKD provides.”
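The eavesdropping detection described in the quote can be sketched as a small simulation. The excerpt does not name a specific protocol, so this assumes a BB84-style scheme with two measurement bases and an intercept-and-resend attacker; the function name `bb84_error_rate` and all parameters are hypothetical, and real QKD systems add error correction and privacy amplification that are omitted here.

```python
import random


def bb84_error_rate(n_photons, eavesdrop, seed=0):
    """Return the error rate in the sifted key of a simulated BB84-style exchange.

    Bases are modelled as 0 or 1. Measuring a photon in the basis it was
    prepared in returns its bit; measuring in the other basis returns a
    random bit and re-prepares the photon in the measurement basis.
    """
    rng = random.Random(seed)
    errors = 0
    sifted = 0
    for _ in range(n_photons):
        alice_bit = rng.randint(0, 1)
        alice_basis = rng.randint(0, 1)
        photon_bit, photon_basis = alice_bit, alice_basis

        if eavesdrop:
            eve_basis = rng.randint(0, 1)
            if eve_basis != photon_basis:
                photon_bit = rng.randint(0, 1)  # wrong basis: random outcome
            photon_basis = eve_basis  # Eve re-sends in the basis she measured in

        bob_basis = rng.randint(0, 1)
        if bob_basis == photon_basis:
            bob_bit = photon_bit
        else:
            bob_bit = rng.randint(0, 1)

        # Alice and Bob keep only the rounds where their bases matched.
        if bob_basis == alice_basis:
            sifted += 1
            if bob_bit != alice_bit:
                errors += 1
    return errors / sifted if sifted else 0.0
```

In this toy model the sifted key is error-free when nobody listens in, while an intercepting attacker pushes the error rate towards roughly one in four, so comparing a sample of key bits reveals the intrusion.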

One of the first companies to endorse QKD is the international financial giant JPMorgan, which collaborated with Toshiba on the research.

JPMorgan has embraced digital currencies and blockchains. The bank launched its JPM Coin in 2019 and recently launched euro-denominated blockchain transactions on its network.

“At this time, QKD is the only solution that has been mathematically proven to defend against a potential quantum computing-based attack, with security guarantees based on the laws of quantum physics,” the bank said last year.

While the quantum computing v. blockchain story is often framed for its downside potential, there is another side to the potential relationship between these two technologies.

Charles Hoskinson, CEO and Founder of Input Output Global Inc. and the Cardano blockchain, is bullish on the benefits of quantum computing on the cryptocurrency industry.

“I don’t feel that quantum computers have a pervasive negative impact on cryptocurrencies, but instead, they can add a lot more utility,” he said in a recent interview with Inside Quantum Technology. “While these two innovative technologies could synchronize successfully, their coming together could be more of a head-on collision than a collaboration.”

For the sake of both innovative technologies, working to ensure collaboration versus a head-on collision will drive the future of crypto.

Ben Goertzel On AI Embodiment & Advanced Reasoning | Highlights from Episode 10

David Hanson On AI Embodiment and the Mind-Body Connection | Highlights from Episode 10

VitalikVision: Ethereum’s 3 Transitions Needed For Global Adoption

Introduction

Ethereum, the second-largest cryptocurrency by market capitalization, has been a driving force behind the blockchain revolution since its inception in 2015, spurring innovation and adoption across the crypto frontiers.

It’s done this by birthing new sectors such as DeFi, NFTs and Web3, cross-pollinating other chains through its EVM compatibility, and nurturing disruptive new Layer 2 networks that use experimental new technology such as optimistic and zero-knowledge proof rollups to scale the entire Ethereum ecosystem.

Renowned co-founder Vitalik Buterin serves as the frontman (and target of ETH meme lords) for the smart contracts pioneer, frequently sharing his thoughts on the future of the world’s “decentralized computer”, as Ethereum and its Ethereum Virtual Machine are often called, as well as a host of topics that range from crypto adoption to stablecoins to ChatGPT.

Buterin recently outlined three significant technical transitions that Ethereum must undergo to mature from an experimental technology into a robust tech stack capable of delivering an open, global, and permissionless experience to average users. He believes that these transitions are not only crucial for Ethereum’s future but will also reshape the relationship between users and addresses on the blockchain.

As the leading smart contract-powered chain, the evolution of Ethereum will have important ramifications and lessons for both its Layer 2 networks and the slew of ‘Ethereum Killer’ Layer 1 chains, which will reshuffle their own value propositions and roadmaps in response.

Ethereum’s Evolution: A Recent History

Before delving into the three transitions, it’s essential to understand Ethereum’s evolution from a fledgling blockchain with a unique mission to the decentralized foundation of the most important technological changes and expansions in the crypto space over the last five years.

Ethereum has undergone several significant upgrades since its inception in 2015, each aimed at improving its scalability, security, and sustainability.

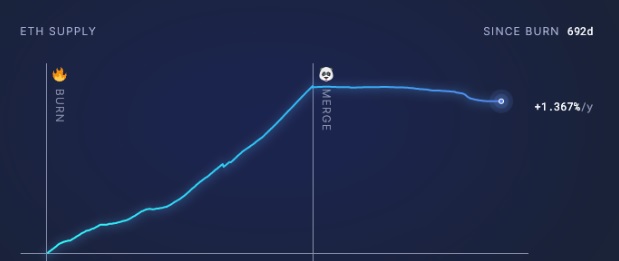

The Ethereum network last year underwent the ‘Merge’, transitioning from a Proof of Work (PoW) consensus mechanism to a Proof of Stake (PoS) model, cutting its energy consumption by roughly 99.95% and making it much more attractive for institutions as an ESG-compliant investment. However, its staking mechanism has also put it in the potential crosshairs of US regulators like the SEC, which is on a witch hunt for any crypto asset it can define as a security.

The Merge transition was a vital part of Ethereum 2.0, also known as Serenity, a long-awaited upgrade aimed at addressing the network’s scalability and security issues. The Merge followed the successful deployment of the Beacon Chain in December 2020, which marked the beginning of Ethereum 2.0.

Another significant upgrade was EIP-1559, introduced as part of the London hard fork in August 2021. This upgrade changed the way transaction fees, or ‘gas fees,’ are calculated on Ethereum, aiming to make them more predictable.

It also introduced a mechanism to burn a portion of these fees, giving ETH the potential to become a deflationary asset over time, as we can see from the UltraSound Money chart below. Thanks to its PoS transition, over 3 million fewer ETH are now in circulation than if it had remained on the PoW network.
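The fee mechanics can be made concrete with a short sketch. The article does not spell out the formula; the update rule below follows the base-fee adjustment described in the EIP-1559 specification as best understood here (the base fee moves by at most 12.5% per block, depending on how full the previous block was relative to its gas target). The function names are illustrative, and tips paid to validators are left out.

```python
BASE_FEE_MAX_CHANGE_DENOMINATOR = 8  # caps the per-block change at 1/8 = 12.5%


def next_base_fee(parent_base_fee, parent_gas_used, parent_gas_target):
    """Compute the next block's base fee (in wei) from the parent block."""
    if parent_gas_used == parent_gas_target:
        return parent_base_fee

    if parent_gas_used > parent_gas_target:
        # Block was fuller than the target: raise the base fee.
        gas_delta = parent_gas_used - parent_gas_target
        fee_delta = max(
            parent_base_fee * gas_delta
            // parent_gas_target
            // BASE_FEE_MAX_CHANGE_DENOMINATOR,
            1,
        )
        return parent_base_fee + fee_delta

    # Block was emptier than the target: lower the base fee.
    gas_delta = parent_gas_target - parent_gas_used
    fee_delta = (
        parent_base_fee * gas_delta
        // parent_gas_target
        // BASE_FEE_MAX_CHANGE_DENOMINATOR
    )
    return parent_base_fee - fee_delta


def burned_fee(base_fee, gas_used):
    """The base-fee portion of a transaction's cost is burned, not paid out."""
    return base_fee * gas_used
```

For example, with a base fee of 100 gwei and a completely full block (twice the target), the next base fee rises by 12.5%; a plain 21,000-gas transfer at that starting fee burns 21,000 × 100 gwei.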

Looking ahead, Ethereum plans to introduce sharding, a scaling solution that involves splitting the blockchain into smaller pieces, or ‘shards,’ each capable of processing its own transactions and smart contracts. Sharding is a major long-term milestone expected to significantly increase Ethereum’s capacity and speed.

All these recent innovations have largely come without any major hiccups, a welcome departure from its first five years, when upgrades such as 2019’s Constantinople were delayed several times, on one occasion at the 11th hour due to a potentially catastrophic reentrancy attack vulnerability.

The Three Transitions

In short, Vitalik proposes three evolutionary changes to the Ethereum network if it is to meet its full potential (and, based on predictions by the likes of VanEck research, a potential price of between $11.8k and $50k by 2030 if the winds blow in its favor).

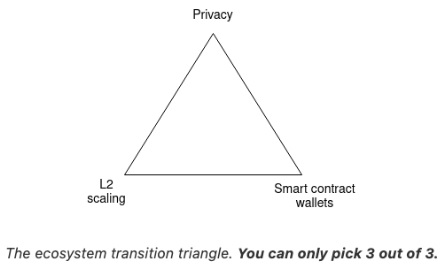

These are: L2 scaling, wallet security and privacy.

1) The L2 Scaling Transition:

- Vitalik has been outspoken in the past on the importance of Layer 2 scaling in solving Ethereum’s network congestion and fee issues, and promotes moving everyone to rollups like Optimism, Arbitrum and the new class of ZK-powered Layer 2s like zkSync and Starknet.

- Rollups execute transactions off-chain and then post the data on-chain, significantly increasing transaction throughput. Without this transition, Ethereum risks becoming too expensive for mass-market products, leading to the adoption of centralized workarounds.

- For instance, the Ethereum network currently processes around 15 transactions per second (TPS), but with rollups, it’s expected to handle thousands of TPS, making it more suitable for mass adoption.
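The "execute off-chain, post data on-chain" pattern in the bullets above can be sketched with a toy batcher. This is an illustration under heavy simplifications, not how Optimism, Arbitrum or any ZK rollup is implemented: real rollups commit to state with Merkle roots, compress the transaction data, and back each batch with fraud or validity proofs. All names here are hypothetical.

```python
import hashlib
import json


def apply_transfers(balances, transfers):
    """Execute transfers off-chain, skipping any that would overdraw the sender."""
    state = dict(balances)
    applied = []
    for sender, recipient, amount in transfers:
        if amount > 0 and state.get(sender, 0) >= amount:
            state[sender] -= amount
            state[recipient] = state.get(recipient, 0) + amount
            applied.append([sender, recipient, amount])
    return state, applied


def state_commitment(state):
    """Stand-in for a state root: a hash of the full balance table."""
    encoded = json.dumps(state, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def build_batch(balances, transfers):
    """Return what would be posted on-chain for a batch, plus the new state."""
    new_state, applied = apply_transfers(balances, transfers)
    batch = {
        "prev_commitment": state_commitment(balances),
        "new_commitment": state_commitment(new_state),
        "tx_data": applied,  # posted so anyone can re-execute and check the batch
    }
    return batch, new_state
```

The point of the design is that Layer 1 stores one small record per batch rather than executing every transfer itself, which is where the throughput gain comes from.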

2) The Wallet Security Transition:

- This transition involves moving Ethereum ecosystem users to smart contract wallets. Vitalik is a big proponent of crypto self-custody, particularly through the use of multisignature (multisig) wallets, and in 2021 he proposed the use of social recovery wallets to help Ethereum users protect their assets.

- These wallets offer additional security features, such as multi-signature transactions and spending limits. Without this transition, users may feel uncomfortable storing their funds and non-financial assets on Ethereum, leading to a shift back towards centralized exchanges (centralized workarounds) that don’t require the safekeeping of a private key or recovery seed.
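To make the wallet features in these bullets less abstract, here is a toy model of a smart contract wallet with a spending limit and guardian-based social recovery. It is a sketch only: keys and guardians are plain strings instead of cryptographic signatures, the limit never resets, and the class and method names are hypothetical rather than drawn from any real wallet contract.

```python
class SocialRecoveryWallet:
    """Toy wallet: one signing key, a spending limit, and guardian recovery."""

    def __init__(self, signing_key, guardians, threshold, spending_limit):
        self.guardians = frozenset(guardians)
        if not 1 <= threshold <= len(self.guardians):
            raise ValueError("threshold must be between 1 and the guardian count")
        self.signing_key = signing_key
        self.threshold = threshold
        self.spending_limit = spending_limit
        self.spent = 0

    def spend(self, key, amount):
        """Allow a spend only with the current key and within the limit."""
        if key != self.signing_key:
            raise PermissionError("wrong signing key")
        if amount <= 0 or self.spent + amount > self.spending_limit:
            raise ValueError("amount is invalid or exceeds the spending limit")
        self.spent += amount

    def recover(self, new_signing_key, approvals):
        """Replace a lost key if enough distinct guardians approve."""
        valid_approvals = self.guardians & set(approvals)
        if len(valid_approvals) < self.threshold:
            raise PermissionError("not enough guardian approvals")
        self.signing_key = new_signing_key
```

With three guardians and a threshold of two, losing the signing key no longer means losing the funds: any two guardians can install a new key, while a single guardian (or a stranger) cannot.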

3) The Privacy Transition:

- Vitalik’s third transition requires ensuring that privacy-preserving funds transfers are available, and that privacy is preserved with other developments like social recovery, identity, and reputation. Without this transition, the public availability of all transactions could lead to a significant privacy sacrifice for many users, pushing them towards centralized solutions that offer more privacy.

The Implications of the Transitions

Buterin emphasizes in his article that Ethereum can’t stand without all three legs of this “Ethereum Transition triangle”. It would risk failure due to high transaction costs, user discomfort with storing funds, and privacy concerns.

Here is an overview and takeaways of the more technical parts of the blog post:

- The three transitions will radically reshape the relationship between users and addresses:

The transitions will change the way users interact with Ethereum, requiring deep changes from applications and wallets.

- The three transitions and on-chain payments (and identity):

Buterin discusses the challenges introduced by the transitions, such as the need for more information than just a 20-byte address for simple actions like payments.

- The three transitions and key recovery:

Buterin proposes an architecture that separates verification logic and asset holdings to address the issues of key changes and social recovery in a many-address-per-user world.

- Lots of secondary infrastructure needs to update:

Buterin highlights the need for application-layer reform and provides examples of areas that need to be updated.

- Wallets will need to secure both assets and data:

In the future, wallets will not only protect authentication credentials but also hold user data, increasing the risk of data loss.

- Back to identity:

Buterin concludes by discussing the future of user identity on the blockchain, suggesting that the concept of an “address” will have to radically change and proposing potential solutions.

Let’s dig a little deeper.

On Users And Their Wallet Addresses

Vitalik anticipates a radical transformation in the dynamics between users and their addresses due to the three key transitions.

- In a Layer 2 scaling world, users will exist on multiple Layer 2s, meaning the days of a user having only one address will be gone. For example, a user could have an account on Optimism for participating in a DAO, another on zkSync for a stablecoin system, and yet another on Arbitrum for a different application. This will make the concept of a single address obsolete.

- Secondly, the introduction of smart contract wallets will complicate the user-address dynamic. While these wallets enhance security, maintaining the same address across the Ethereum Mainnet (Layer 1) and various Layer 2 solutions becomes complex due to the technical differences between these environments.

- Lastly, the pursuit of privacy could lead to an explosion in the number of addresses. Privacy schemes like Tornado Cash bundle funds from many users into a single smart contract, using internal systems for fund transfers.

On Payment Challenges:

Ethereum is exploring cross-layer payment solutions in its Layer 2 ecosystem, such as automated functionality for consolidating funds and cross-L2 bridging systems. Transitioning towards smart contract wallets will require technical adjustments – such as tracking Ethereum transfers from Externally Owned Accounts (EOAs) and those sent by smart contract code.

On Privacy Concerns:

With privacy remaining a key component of blockchain’s allure, Ethereum is introducing stealth protocols and meta-addresses. These secure digital boxes hold both a unique ID for spending and the key to lock or unlock your information securely. They work well with other privacy-focused systems, even those not based on Ethereum, like PGP keys.

On Data Storage:

Lastly, Ethereum is addressing data storage issues, particularly in the sphere of Zero-Knowledge (ZK) proofs. For instance, Zupass, a ZK-SNARK-based identity system dubbed Buterin’s “social experiment”, uses ‘stamps,’ a privacy-centric version of POAPs (Proof of Attendance Protocol).

To mitigate the risk of data loss or unauthorized access, Zupass proposes storing the key across multiple devices or employing secret sharing among trusted individuals. Digital wallets are likely to manage both asset and encryption key recovery in the future, making the blockchain experience more user-friendly.
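The "secret sharing among trusted individuals" idea can be sketched with Shamir's secret sharing, a standard threshold scheme. The article does not say which scheme Zupass has in mind, so treating it as Shamir's is an assumption, and the function names and the choice of prime field are illustrative only. A key is split into `n_shares` pieces so that any `threshold` of them rebuild it, while fewer reveal nothing useful.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime; the secret must be smaller than this


def split_secret(secret, n_shares, threshold):
    """Split an integer secret into points on a random polynomial mod PRIME."""
    if not 0 <= secret < PRIME:
        raise ValueError("secret out of range")
    if not 1 <= threshold <= n_shares:
        raise ValueError("threshold must be between 1 and n_shares")
    # Polynomial of degree threshold-1 whose constant term is the secret.
    coefficients = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, n_shares + 1):
        y = 0
        for coefficient in reversed(coefficients):  # Horner's rule
            y = (y * x + coefficient) % PRIME
        shares.append((x, y))
    return shares


def recover_secret(shares):
    """Lagrange-interpolate the polynomial at x = 0 to recover the secret."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        numerator, denominator = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                numerator = (numerator * -xj) % PRIME
                denominator = (denominator * (xi - xj)) % PRIME
        # pow(..., -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * numerator * pow(denominator, -1, PRIME)) % PRIME
    return secret
```

A user might hand one share each to five friends with a threshold of three, so that losing a device, or a couple of friends, does not lose the key.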

On Key Recovery:

These transitions pose significant challenges due to the intense coordination required to resolve them. However, Vitalik proposes several solutions, including keystore contracts, which exist in one location and contain verification logic for addresses on different Layer 2s.

Spending from these addresses would require a proof going into the keystore contract showing the current spending public key. Another solution is to put more things into the keystore contract, such as various information about how to interact with the user. This contract could serve as the user’s primary identifier, with the actual assets they receive stored in different places.
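The keystore idea can be pictured with a toy model in which one keystore object holds the current spending key and wallets on several Layer 2s defer to it. This is a loose sketch, not Buterin's design: in the real proposal a wallet would check a cross-chain proof of the keystore's state, whereas here a direct object reference stands in for that proof, keys are plain strings, and every name is hypothetical.

```python
class KeystoreContract:
    """Single place that records a user's current spending key."""

    def __init__(self, spending_key):
        self.spending_key = spending_key

    def rotate_key(self, current_key, new_key):
        """Change the spending key once, for every wallet that points here."""
        if current_key != self.spending_key:
            raise PermissionError("wrong current key")
        self.spending_key = new_key


class L2Wallet:
    """Holds assets on one network but keeps no verification key of its own."""

    def __init__(self, network, keystore, balance):
        self.network = network
        self.keystore = keystore  # stands in for a proof of the keystore's state
        self.balance = balance

    def spend(self, presented_key, amount):
        if presented_key != self.keystore.spending_key:
            raise PermissionError("key does not match the keystore")
        if not 0 < amount <= self.balance:
            raise ValueError("invalid amount")
        self.balance -= amount
```

Because verification logic lives in one place and assets live in many, a single key rotation takes effect for the wallets on every network at once.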

The Future of Ethereum

Vitalik’s vision for Ethereum’s three transitions paints a picture of a more scalable, secure, and private blockchain. The transitions are not just about technical feasibility but about actual accessibility for regular users. Achieving scalability, wallet security, and privacy is crucial for Ethereum’s future.

The challenge is to ensure that these transitions do not result in an opaque “tower of abstraction” where developers struggle to make sense of what’s going on and adapt it to new contexts.

To maintain and continue to grow the incredible network effect that it currently has, Ethereum must rise to meet this challenge to ensure its future success. While the road ahead is fraught with challenges, the potential rewards are immense. As Ethereum continues to evolve, it’s clear that these transitions will play a crucial role in shaping it and the crypto sector’s future.
