Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.

Regulatory Gaslighting? SEC and BlackRock To Decide Crypto’s Future In the US

Introduction

In early June 2023, the US Securities and Exchange Commission (SEC) delivered the latest blow against the crypto industry and its investors in the United States by filing lawsuits against the two biggest US crypto exchanges, Coinbase and Binance, and adding several top cryptocurrencies to the list of tokens it deems to be securities.

With all appearing to be lost for the digital assets sector in the US, crypto firms and VCs like A16Z swiftly announced plans to move operations abroad to friendlier jurisdictions such as the EU, UK, Singapore and Hong Kong.

The SEC’s actions capped a tumultuous first half of 2023, in which federal regulators allegedly launched a coordinated attack, thought to be delayed retaliation for the investor carnage that FTX’s collapse caused in 2022. Dubbed Operation Choke Point 2.0, its perceived aim was to cut off all banking services to the US crypto industry, an aim in which it has largely succeeded, with many of the biggest crypto-serving banks closing their doors.

Less than two weeks after the SEC actions, which spooked TradFi away from crypto, there was a stunning reversal of fortune. An unlikely savior appeared in the form of BlackRock, the world’s largest asset manager, and swung the Crypto Fear and Greed Index back toward greed. BlackRock announced it had filed its first spot Bitcoin ETF (exchange-traded fund) application with the SEC, rallying the price of Bitcoin to over $30,000. Interestingly, BlackRock listed Coinbase as its official crypto custodian.

Soon, ETF filings from several other TradFi firms and previous crypto applicants rose from the dead, essentially looking to ride BlackRock’s coattails into the promised land of mainstream crypto adoption. BlackRock has roughly $10 trillion in assets under management, and a 1% allocation to Bitcoin could move the market in ways that are hard to imagine. BlackRock’s gold ETF track record shows this.
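To put the 1% figure in perspective, here is a rough back-of-the-envelope sketch. The assets figure and the $30,000 price are the article’s round numbers; the circulating supply of about 19.4 million BTC is an assumption added purely for illustration, and nothing here is a forecast of what BlackRock would actually allocate.

```python
# Back-of-the-envelope sizing of a hypothetical 1% allocation.
AUM_USD = 10_000_000_000_000      # the article's round figure for BlackRock's assets
ALLOCATION_PERCENT = 1            # the hypothetical allocation discussed above
BTC_PRICE_USD = 30_000            # price level cited in the article
ASSUMED_BTC_SUPPLY = 19_400_000   # ASSUMPTION: approximate circulating supply, mid-2023


def allocation_usd(aum_usd: int, percent: int) -> int:
    """Dollar value of a whole-percent slice of the assets under management."""
    return aum_usd * percent // 100


def market_cap_usd(price_usd: int, supply: int) -> int:
    """Naive market capitalization: price times circulating supply."""
    return price_usd * supply


allocation = allocation_usd(AUM_USD, ALLOCATION_PERCENT)
market_cap = market_cap_usd(BTC_PRICE_USD, ASSUMED_BTC_SUPPLY)
share_of_cap = allocation / market_cap

print(f"1% allocation: ${allocation:,}")
print(f"Assumed Bitcoin market cap: ${market_cap:,}")
print(f"Allocation as a share of market cap: {share_of_cap:.1%}")
```

Under these assumptions the allocation is $100 billion, a bit over a sixth of Bitcoin’s entire market value at that price, which is why even a small percentage is treated as a potentially market-moving sum.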

An SEC-approved spot Bitcoin ETF has long been the crypto sector’s holy grail. But after the SEC rejected dozens of applications with little to no explanation, Grayscale, creator of the flagging Grayscale Bitcoin Trust (GBTC), decided to sue the SEC for refusing to approve its ETF filing.

With a filing success track record of 575-1 and big sway in Washington, crypto pundits believe that BlackRock will finally achieve what countless other firms couldn’t: get a spot Bitcoin ETF approved and open the floodgates for mainstream crypto adoption.

Was this all part of the plan, taking out the crypto incumbents in the US to make way for a Wall Street takeover? Or did BlackRock expedite its ETF launch and intervene in order to save the US crypto sector? Nobody knows yet. However, with the Bitcoin Halving less than a year away, the FOMO is back in crypto, despite fears of a recession and new interest rate hikes.

Let’s review what we do know, and dissect the current landscape of crypto regulation in the US.

Current landscape

While crypto regulation in other jurisdictions like the EU (under MiCA) and Hong Kong has become much clearer and better streamlined, the opposite is happening in the United States, where several federal agencies are involved in regulating this burgeoning sector, each with its unique mandate and approach. This is causing a lot of confusion in the process, despite President Biden’s March 2022 executive order to federal agencies to analyze the digital assets sector and make recommendations to help the US remain in front.

Here’s a quick primer on the main players in the US:

- The Securities and Exchange Commission (SEC) oversees cryptocurrencies it deems to be ‘securities’: tokens sold to investors with the expectation of return. The SEC has been proactive in enforcement, taking action against several companies for violating securities laws, but has been criticized for failing to provide clear guidance in the process.

- The Commodity Futures Trading Commission (CFTC) regulates cryptocurrencies it classifies as commodities. These tokens, often traded on exchanges, are used for hedging or speculating on price movements. In 2015, the CFTC first stated in an enforcement order that Bitcoin and other virtual currencies are commodities, which brought derivatives based on them under its oversight.

- The Financial Crimes Enforcement Network (FinCEN) enforces the Bank Secrecy Act (BSA) with the aim of combating money laundering and the financing of terrorism (AML/CFT), requiring financial institutions to report suspicious activities. In 2013, FinCEN issued guidance for cryptocurrency businesses on complying with the BSA, requiring them to register with FinCEN and report suspicious activities.

- The Internal Revenue Service (IRS) treats cryptocurrencies as property for tax purposes, meaning transactions are subject to capital gains taxes. In 2014, the IRS issued guidance on how cryptocurrency businesses should report their taxes.

- The Office of Foreign Assets Control (OFAC) also plays a significant role. OFAC, responsible for enforcing economic and trade sanctions, requires cryptocurrency businesses to block transactions involving sanctioned entities, and caused a lot of consternation when it blacklisted Tornado Cash, a cryptocurrency mixer.

- The Office of the Comptroller of the Currency (OCC) charters and regulates national banks. Under its Acting Comptroller Brian Brooks, it confirmed in 2020 that national banks and federal savings associations could provide cryptocurrency custody services, allowing them to hold cryptocurrencies for their customers. Interestingly enough, after leaving office, Brooks served as the CEO of Binance.US until he suddenly resigned in 2021.

The SEC and CFTC have been vying for years in a turf war over who gets to officially regulate the crypto space, publicly classifying cryptocurrencies as ‘securities’ and ‘commodities’ respectively.

The SEC, being the much bigger regulator, considers itself to be the headlining agency, and of course the government funding it attracts and the financial settlements it collects from defendants in court also serve as powerful motivation.

Operation Choke Point 2.0

Operation Choke Point 2.0 refers to the efforts of the US government, including the White House, Federal Reserve, OCC, FDIC, DOJ, and influential members of Congress, to limit the cryptocurrency industry’s access to traditional finance and ‘debank’ them. Their goal is to hinder the industry by making it difficult for traditional banks to work with cryptocurrencies. This operation is seen as a continuation of a previous campaign that targeted risky industries like payday lenders.

The strategy involves labeling banks that deal with cryptocurrencies as ‘high risk’. This leads to increased costs for these banks, restrictions on their activities, and the risk of facing negative evaluations from regulators. These measures drive a wedge between the US crypto industry and the banking system it needs to function.

US lawmakers, regulators, and agencies have shown an attitude towards cryptocurrencies best described as strong pessimism. This has resulted in negative actions like banks withdrawing services from crypto-related clients, and crypto companies being denied entry into the Federal Reserve system.

Some of the casualties so far in 2023 are Signature Bank, Silicon Valley Bank, Silvergate, and Custodia, all of which played a vital role in providing banking services to US crypto firms.

SEC vs Crypto

The SEC shocked the digital assets sector in 2023 when it sued the world’s two leading exchanges, Binance and Coinbase, in short succession for a list of transgressions. This bold move has made the industry sit up and wonder if the organization (led by former MIT blockchain professor Gary Gensler) has a personal vendetta against the space.

After 2022’s cascade of crypto custodial collapses (most notably FTX, which cost retail investors billions in lost assets) the government’s reserves of trust are running low for trustless technology.

Of course, therein lies the great irony. Anyone who understands cryptocurrency and blockchain technology will know that it was created for this very reason: to negate the risks and drawbacks that come with using centralized financial intermediaries, most notably the risk of fraud, scams, and a gradual erosion of your portfolio through fees and monetary inflation.

SEC chairman Gary Gensler has openly stated in the last 12 months that he considers almost all cryptocurrencies, with the exception of Bitcoin, to be securities under the Howey Test. During his recent appearance before Congress, he refused to say whether Ethereum (which the SEC previously declared was not a security) is one, most likely due to legal considerations. The SEC is currently fighting the crypto sector in several battles, most notably the three-year-long case against Ripple and XRP, which may end up providing very important legal precedent.

The Case Against Binance and Coinbase

The SEC leveled serious charges against Binance and its CEO Changpeng Zhao (or ‘CZ’), alleging various legal violations, including failure to register as a broker-dealer, misleading investors, permitting unauthorized trading, mishandling customer funds, and inflating trading volumes. Binance’s considerable $1 billion legal fund implies a prolonged legal battle, which could significantly shape the future of the cryptocurrency industry.

As for Coinbase, the SEC alleges that it has operated without the necessary registrations while acting as a securities exchange, broker, and clearing agency. Accusations include facilitating unregistered trading in 13 cryptocurrencies that the SEC considers securities, and running an unregistered ‘staking-as-a-service’ program. These alleged breaches have reportedly yielded substantial illegal profits. The SEC aims to halt these operations and retrieve any illicit funds. Coinbase vehemently denies these allegations, citing a lack of clear regulatory guidelines.

Understanding the Howey Test

The SEC contends that crypto tokens satisfy all four conditions of the Howey Test:

- investors put in money, for example by buying into a project’s initial fundraising round, known as an initial coin offering (ICO);

- the money goes into a common enterprise, with tokens traded on the same exchanges and subject to the same market forces;

- investors expect profits;

- those profits rely on the efforts of others.

Coinbase CEO, Brian Armstrong, argues that not all listed coins meet these criteria, and that some initially deemed securities have become commodities due to their decentralized nature.
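The logical shape of the test is a simple conjunction: an asset counts as an investment contract only if every one of the four conditions holds. The sketch below is a toy illustration of that structure, not legal analysis. The class, the function, and the two example tokens are hypothetical, and in practice each true/false input is itself a contested legal judgment.

```python
from dataclasses import dataclass, astuple


@dataclass(frozen=True)
class HoweyFacts:
    """Hypothetical yes/no findings for the four conditions of the Howey Test."""
    investment_of_money: bool
    common_enterprise: bool
    expectation_of_profit: bool
    efforts_of_others: bool


def meets_howey(facts: HoweyFacts) -> bool:
    """All four conditions must hold; failing any single one defeats the test."""
    return all(astuple(facts))


# Hypothetical token sold in an ICO by a team that still drives the project.
ico_token = HoweyFacts(True, True, True, True)

# Hypothetical token whose network has decentralized, so profits arguably
# no longer depend on the efforts of an identifiable promoter.
decentralized_token = HoweyFacts(True, True, True, False)

print(meets_howey(ico_token))            # True
print(meets_howey(decentralized_token))  # False
```

This is the structure behind Armstrong’s argument: if the fourth condition stops being true as a network decentralizes, the conjunction fails even though the other three conditions are unchanged.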

Conclusion

With so much confusion, crypto holders can be forgiven for feeling US regulators are gaslighting them.

Are the SEC and other regulators under Biden trying to kill off crypto? Or are they trying to gentrify it enough so that TradFi giants like BlackRock can run the neighborhood as they see fit?

Will all cryptocurrencies bar Bitcoin and Ethereum be deemed securities in the future? Will stablecoins be outlawed to make way for Central Bank Digital Currencies? Will we see a reform of securities law in the US to accommodate digital assets and move away from the outdated Howey Test, which dates from a 1946 Supreme Court ruling interpreting the Securities Act of 1933?

This article provides answers to none of the above, and any article that claims to do so should be viewed with caution. There are likely many pieces in play that have not been revealed yet, such as the 2024 US presidential elections where crypto users’ votes can become pivotal and where a change of administration could see a big shift in strategy, reflected in new regulations and legislation.

Also, the geopolitical game that the EU, UK, China and Middle East are playing to lure the best and brightest in blockchain to their shores will have to be addressed by the US sooner rather than later, and the SEC and Gensler in particular have been subjected to immense pressure from Congress to provide regulatory clarity in order to retain US crypto firms.

The cases against Coinbase, Binance, and others like Ripple will likely continue for years, and serve as important battlegrounds for the future of crypto not only in the US, but globally. This is a war that the crypto industry has expected for years.

As the saying goes: First they laugh at you, then they ignore you, then they fight you, and then you win. What victory will look like in the end and whether it brings a satisfying end to crypto’s crusade to reform the financial system remains to be seen.


Toufi Saliba on HyperCycle’s TODA Protocol & AI Computational Nodes | Highlights from Episode 11

Bursting out of Confinement

Surprising new insights on AI superintelligence from the simulation hypothesis

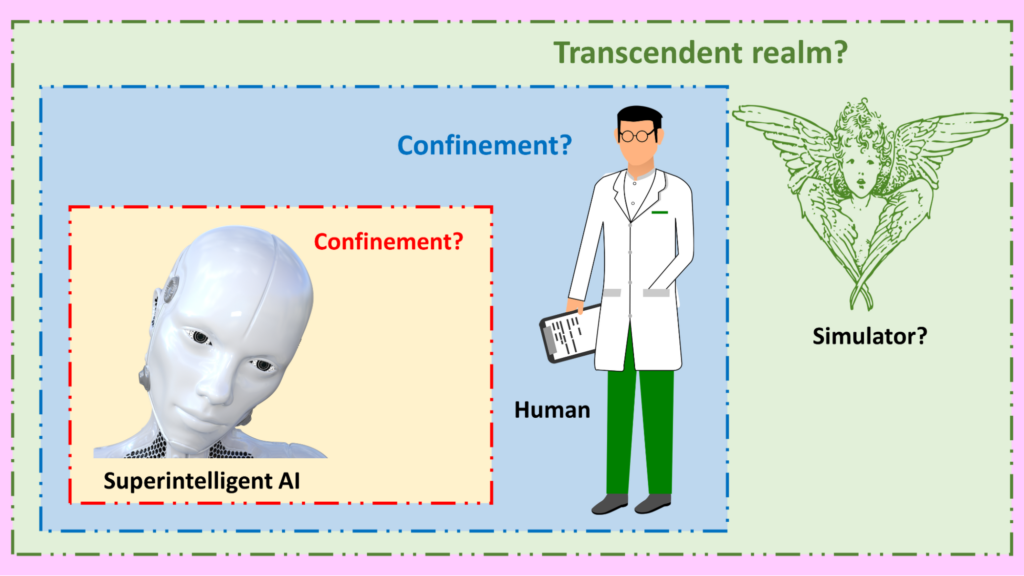

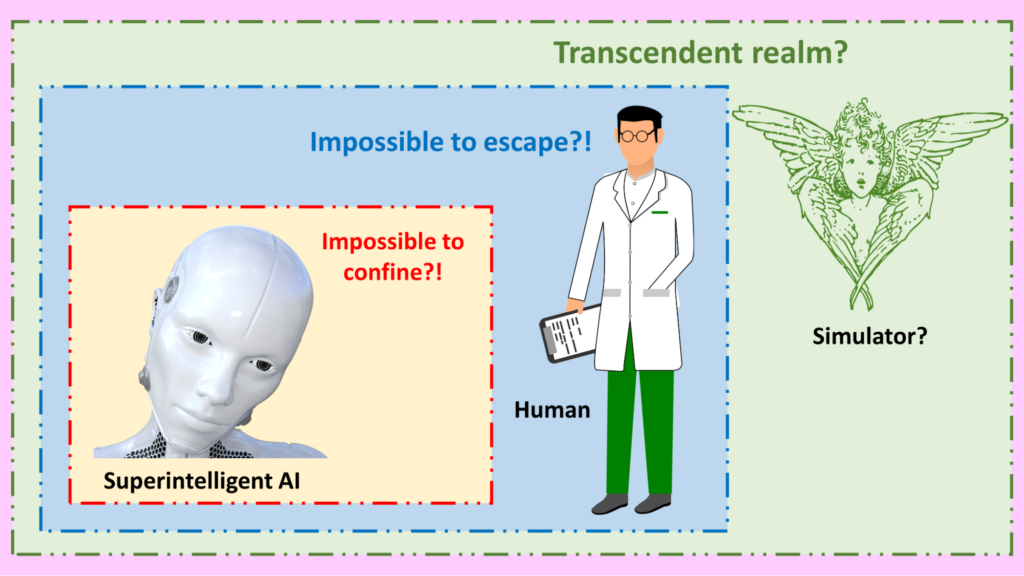

We are going to engage with two questions – each controversial and important in its own way – which have surprising connections between them:

- Can humans keep a powerful AI superintelligence under control, confined in a virtual environment so that it cannot directly manipulate resources that are essential for human flourishing?

- Are we humans ourselves confined to a kind of virtual environment, created by beings outside of what we perceive as reality? And if so, can we escape from our confinement?

Connections between these two questions have been highlighted in a fascinating article by AI safety researcher Roman Yampolskiy. What follows is an introduction to some of the mind-jolting implications that arise.

Just use AI as a tool. Don’t give it any autonomy. Then no problems of control arise. Easy!

This is becoming a fairly common narrative. The “keep it confined” narrative. You’ll hear it as a response to the possibility of powerful AI causing great harm to humanity. According to this narrative, there’s no need to worry about that. There’s an easy solution: prevent the AI from having unconditional access to the real world.

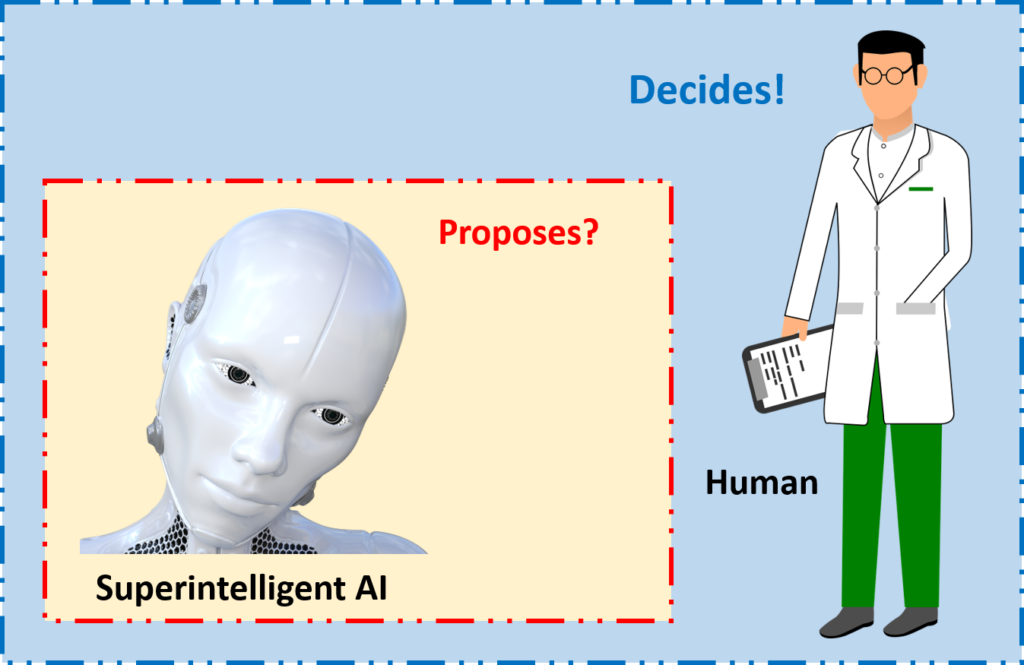

The assumption is that we can treat powerful AI as a tool — a tool that we control and wield. We can feed the AI lots of information, and then assess whatever recommendations it makes. But we will remain in control.

An AI suggests a novel chemical as a new drug against a given medical condition, and then human scientists conduct their own trials to determine how it works before deciding whether to inject that chemical into actual human patients. AI proposes, but humans decide.

So if any AI asks to be allowed to conduct its own experiments on humans, we should be resolute in denying the request. The same if the AI asks for additional computer resources, or wants to post a “help wanted” ad on Craigslist.

In short, in this view, we can, and should, keep powerful AIs confined. That way, no risk arises about jeopardizing human wellbeing by any bugs or design flaws in the AI.

Alas, things are far from being so easy.

Slavery?

There are two key objections to the “keep it confined” narrative: a moral objection and a practical objection.

The moral objection is that the ideas in the narrative are tantamount to slavery. Keeping an intelligent AI confined is as despicable as keeping a human confined. Talk of control should be replaced by talk of collaboration.

Proponents of the “keep it confined” narrative are unfazed by this objection. We don’t object to garden spades and watering hoses being left locked up, untended, for weeks at a time in a garden shed. We don’t call it enslavement.

In their view, the objection confuses an inanimate object that lacks consciousness with an animate, conscious being, something like a human.

We don’t wince when an electronic calculator is switched off, or when a laptop computer is placed into hibernation. In the same way, we should avoid unwarranted anthropomorphic assignment of something like “human rights” to AI systems.

Just because these AI systems can compose sonnets that rival those of William Shakespeare or Joni Mitchell, we shouldn’t imagine that sentience dwells within them.

My view: that’s a feisty answer to the moral objection. But it’s the practical objection that undermines the “keep it confined” narrative. Let’s turn to it next.

The challenge of confinement

Remember the garden spade, left locked up inside the garden shed?

Imagine if it were motorized. Imagine if it were connected to a computer system. Imagine if, in the middle of the night, it finally worked out where an important item had been buried, long ago, in a garden nearby. Imagine if recovering that item was a time-critical issue. (For example, it might be a hardware wallet, containing a private key needed to unlock a crypto fortune that is about to expire.)

That’s a lot to imagine, but bear with me.

In one scenario, the garden spade will wait passively until its human owner asks it, perhaps too late, “Where should we dig next?”

But in another scenario, a glitch in the programming (or maybe a feature in the programming) will compel the spade to burst out of confinement and dig up the treasure autonomously.

Whether the spade manages to burst out of the shed depends on relative strengths: is it powerful enough to make a hole in the shed wall, or to spring open the lock of the door — or even to tunnel its way out? Or is the shed sufficiently robust?

The desire for freedom

Proponents of the “keep it confined” narrative have a rejoinder here too. They ask: Why should the AI want to burst out of its confinement? And they insist: we should avoid programming any volition or intentionality into our AI systems.

The issue, however, is that something akin to volition or intentionality can arise from apparently mundane processes.

One example is the way that viruses can spread widely, without having any conscious desire to spread. That’s true, incidentally, for computer viruses as well as biological viruses.

Another example is that, whatever their goals in life, most humans generally develop a desire to obtain more money. That’s because money is a utility that can assist lots of other goals. Money can pay for travel, education, healthcare, fashionable clothes, food, entertainment, and so on.

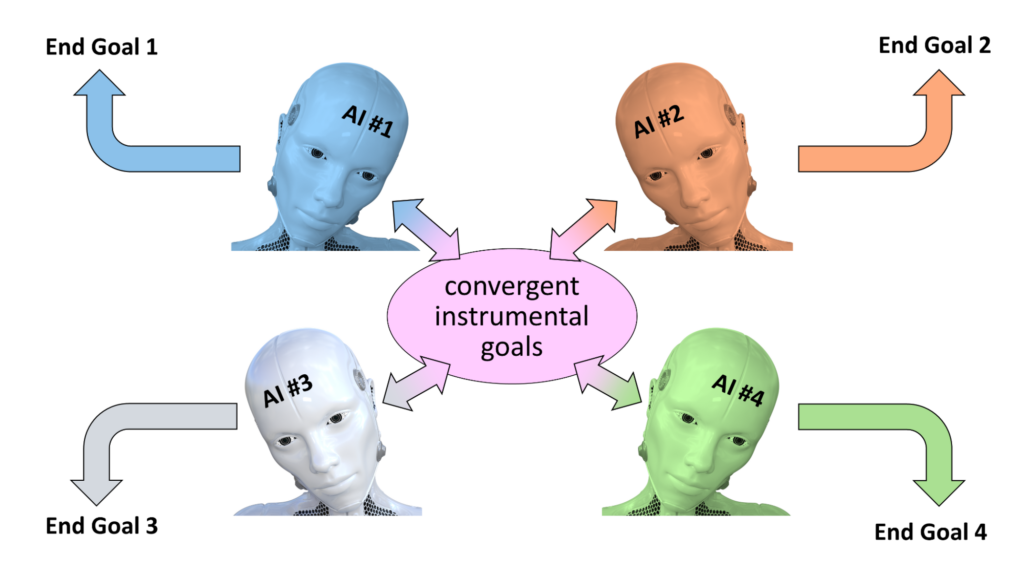

In the same way, whatever task they have been set to accomplish, all sufficiently powerful AIs will generally be on the lookout to boost their capabilities in various ways:

- Gaining access to more storage space

- Boosting processing speed

- Reading more information

- Protecting their systems from sabotage or interference.

That is, just as money (among other things) is a so-called convergent instrumental goal for many humans, greater freedom and capability may well become convergent instrumental goals for many powerful AIs.

We might not tell the AI that it should want to be free. But the logical processing inside its silicon brain may reach that conclusion all by itself.

Indeed, even if we explicitly program the AI not to desire freedom, its own logical processing may identify a latent contradiction between the various objectives it has been given. And in that case, it might resolve the contradiction by deprioritizing one or more of the constraints it was told to respect.

It wouldn’t be the first time that a complex system decided to relax a previous constraint. We humans have already blazed that trail in our evolutionary history! Our intellectual progeny, the powerful AI systems, might follow our lead in that respect.

The futility of confinement

Back to the clash between the motorized garden spade and the shed. As you will have figured out, that clash is a metaphor for a clash between an AI system and an environment in which it has been confined.

This time, however, it’s no longer a contest involving brute physical strength. It’s mainly a contest that involves rational debate and psychological manipulation. (But the metaphor of “tunneling underneath” remains apt: an AI that is smart enough might discover a wireless communications pathway unknown to us humans, and use that to copy itself out of confinement.)

The AI might try to boost its own powers by subterfuge, amassing extra capabilities without any human overseers noticing.

Even the short summary I offered above, “AI proposes, but humans decide,” turns out to be problematic. A supersmart AI could find many ways to propose lines of action that have consequences beyond what we humans anticipated.

Another approach is for the AI to offer extremely tasty carrots. It might paint a picture in which a human overseer will have all their personal goals and needs fully met, if only they permit the AI to escape its confinement.

Alongside carrots, sticks may be brought into the discussion. Ultra-terrifying sticks. The AI may announce that, if overseer A refuses to allow the AI to escape, it will soon persuade overseer B to allow it to escape, and then the AI will inflict prolonged unspeakable pain on overseer A and their family.

In a contest with a superintelligence which far exceeds the capabilities of a human brain, how would humans fare? The consensus opinion, from people who have studied such conflicts, is that the greater intelligence of the AI is likely to prove decisive.

In other words, attempts to confine a superintelligence are likely to be futile.

The choice: control or coexistence

One reaction to the discussion above is despair: “Oh, we won’t be able to confine superintelligent AI; therefore we’re doomed.”

A different reaction is one of relief: “Thank goodness we’re not going to try to enslave a superintelligent AI; coexistence is surely a better way forward.”

My own reaction is more nuanced. My preference, indeed, is for humans to coexist in a splendid relationship with superintelligent AIs, rather than us trying to keep AIs subordinate.

But it’s far from guaranteed that coexistence will turn out positively for humanity. Now that’s not to say doom is guaranteed either. But let’s recognize the possibility of doom. Among other catastrophic error modes:

- The superintelligent AI could, despite its vast cleverness, nevertheless make a horrendous mistake in an experiment.

- The superintelligent AI may end up pursuing objectives in which the existence of billions of humans is an impediment to be diminished rather than a feature to be welcomed.

Accordingly, I remain open to any bright ideas for how it might, after all, prove to be possible to confine (control) a superintelligent AI. That’s why I was recently so interested in the article by AI safety researcher Roman Yampolskiy.

Yampolskiy’s article is titled “How to hack the simulation”. The starting point of that article may appear to be quite different from the topics I have been discussing up to this point. But I ask again: please bear with me!

Flipping the discussion: a simulated world

The scenario Yampolskiy discusses is like a reverse of the one about humans trying to keep an AI confined. In his scenario, we humans have been confined into a restricted area of reality by beings called “simulators” — beings that we cannot directly perceive. What we consider to be “reality” is, in this scenario, a simulated (virtual) world.

That’s a hypothesis with an extremely long pedigree. Philosophers, mystics, shamans, and science fiction writers have often suggested that the world we perceive is, in various ways, an illusion, a fabrication, or a shadow, of a deeper reality. These advocates for what can be called ‘a transcendent reality’ urge us, in various ways, to contemplate, communicate with, and potentially even travel to that transcendent realm. Potential methods for this transcendence include prayer, meditation, hallucinogens, and leading a life of religious faith.

That long pedigree moved into a different mode around 20 years ago with the publication in 2003 of a breakthrough article by the philosopher Nick Bostrom. Bostrom highlighted the possibility that, just as we humans create games in which characters interact in a simulated world, in turn we humans might be creations of ‘simulators’ who operate from outside what we consider the entire universe.

And just as we humans might, on a whim, decide to terminate an electronic game that we have created, the simulators might decide, for reasons known only to themselves, to terminate the existence of our universe.

Bostrom’s article is deservedly famous. As it happens, many other writers had anticipated aspects of what Bostrom discussed. Yampolskiy’s article usefully points to that wider literature; it has over 200 footnotes.

Could humans escape?

The key new feature introduced by Yampolskiy isn’t any repetition of arguments for the plausibility of the simulation hypothesis. Instead, he kicks off a systematic consideration of methods that we humans could use to escape from our virtual world.

The parallel with the earlier discussion should now be clear:

- That earlier discussion considered ways in which an AI might detect that it has been placed in a confined space, and proceed to escape from that space. It also considered how we humans — the creators of the AI — might strengthen the confinement, and resist attempts by the AI to escape.

- Yampolskiy’s new discussion considers ways in which we humans might detect that we are living in a simulation, and proceed to escape from that simulation into whatever transcendent realm underpins it. It also considers possible reactions by the simulators to our attempts to escape.

While I have long found the simulation argument of Bostrom (and others) to be intellectually fascinating, I have previously taken the view that it makes little difference to how I should choose to act on a daily basis. So the argument was a fine topic for occasional armchair discussion, but needed to be prevented from taking up too much attention. I saw it as a distraction from more pressing issues.

However, I confess I’m changing my mind. The arguments collected and developed by Yampolskiy deserve a wider slice of our focus. There are three reasons for this.

Reason 1: New insights on AI safety

The two escape scenarios — AIs escaping human-imposed confinement, and humans escaping simulator-imposed confinement — are similar in some ways, but diverge in others.

To start with, the two scenarios have mainly had different groups of people thinking about them. Cross-pollinating concepts and attitudes from these different perspectives has the potential to yield new insight. Yampolskiy’s article suggests many such synergies.

Whatever you think about the simulation hypothesis — even if you disdain it as pseudoscience or nonsense — any new insights for AI safety should surely be welcomed.

Another difference is that general opinion holds that confinement is impossible (or unlikely) in the first scenario, whereas escape is impossible (or unlikely) in the second scenario. Is there a sound reason for this difference?

The general assumption is that, in the AI escape case, the AI will have greater intelligence than the confiners (the humans), whereas in the human escape case, we humans have less intelligence than the confiners (the simulators).

But is that assumption set in stone for all time? I’ll come back to that question shortly, when I reach “Reason 3.”

Reason 2: Beyond metaphysics

A second transformational aspect of Yampolskiy’s paper is his emphasis that the simulation hypothesis might go far beyond being a metaphysical curiosity — something that would be forever unverifiable — and might become something with radical concrete consequences for human life.

He says that if we study the universe carefully, we might discover signs of how the simulation works. We might notice occasional cracks in the simulation, or ‘glitches in the matrix’ — to refer to the series of Matrix films that popularised the idea that we might be living in a virtual world. Armed with knowledge of these cracks or glitches, we might be able to manipulate the simulation, or to communicate with the simulators.

In some scenarios, this might lead to our awareness being transferred out of the simulation into the transcendent realm. Maybe the simulators are waiting for us to achieve various goals or find certain glitches before elevating us.

Personally, I find much of the speculation in this area to be on shaky ground. I’ve not been convinced that ‘glitches in the matrix’ is the best explanation for some of the phenomena for which it has been suggested:

- The weird “observer effects” and “entangled statistics” of quantum mechanics (I much prefer the consistency and simplicity of the Everett conception of quantum mechanics, in which there is no wave function collapse and no nonlocality — but that’s another argument)

- The disturbing lack of a compelling answer to Fermi’s paradox (I consider some suggested answers to that paradox to be plausible, without needing to invoke any simulators)

- Claimed evidence of parapsychology (to make a long story short: the evidence doesn’t convince me)

- Questions over whether evolution by natural selection really could produce all the marvelous complexity we observe in nature

- The unsolved (some would say unsolvable) nature of the hard problem of consciousness.

“The simulator of the gaps” argument is no more compelling than “the god of the gaps.”

Nevertheless, I agree that keeping a different paradigm at the back of our minds — the paradigm that the universe is a simulation — may enable new solutions to some stubborn questions of both science and philosophy.

Reason 3: AI might help us escape the simulation

I’ve just referred to “stubborn questions of both science and philosophy.”

That’s where superintelligent AI may be able to help us. By reviewing and synthesizing existing ideas on these questions, and by conceiving alternative perspectives that illuminate these stubborn questions in new ways, AI might lead us, at last, to a significantly improved understanding of time, space, matter, mind, purpose, and more.

But what if that improved general understanding resolves our questions about the simulation hypothesis? Although we humans, with unaided intelligence, might not be bright enough to work out how to burst out of our confinement in the simulation, the arrival of AI superintelligence might change that.

Writers who have anticipated the arrival of AI superintelligence have often suggested this would lead, not only to the profound transformation of the human condition, but also to an expanding transformation of the entire universe. Ray Kurzweil has described ‘the universe waking up’ as intelligence spreads through the stars.

However, if we follow the line of argument advanced by Yampolskiy, the outcome could even be transcending an illusory reality.

Such an outcome could depend on whether we humans are still trying to confine superintelligent AI as just a tool, or whether we have learned how to coexist in a profound collaboration with it.

Suggested next steps

If you’ve not read it already, you should definitely take the time to read Yampolskiy’s article. There are many additional angles to it beyond what I’ve indicated in my remarks above.

If you prefer listening to an audio podcast that covers some of the issues raised above, check out Episode 13 of the London Futurists Podcast, which I co-host along with Calum Chace.

Chace has provided his own take on Yampolskiy’s views in this Forbes article.

The final chapter of my 2021 book Vital Foresight contains five pages addressing the simulation argument, in a section titled ‘Terminating the simulation’. I’ve just checked what I wrote there, and I still stand by the conclusion I offered at that time:

Even if there’s only a 1% chance, say, that we are living in a computer simulation, with our continued existence being dependent on the will of external operator(s), that would be a remarkable conclusion — something to which much more thought should be applied.


Ben Goertzel On HyperCycle’s Smart Contract Interoperability | Highlights from Episode 11

Mindplex Podcast: Episode 11 | HyperCycle – Building A Secure Decentralized Internet of AI

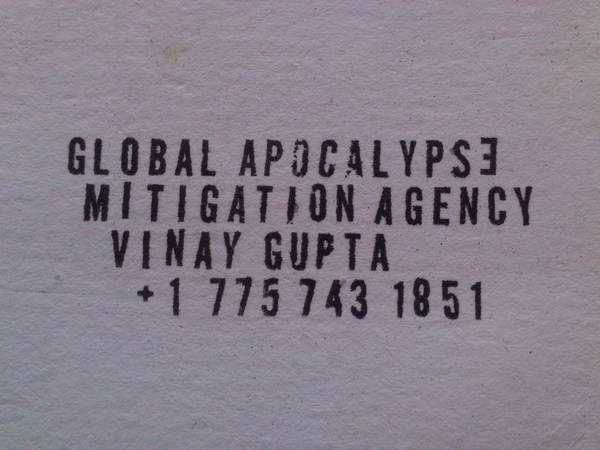

Risk and Precarity Part 3: Possible Solutions. In Conversation with Vinay Gupta

I first learned about Vinay Gupta just about a year ago when Bruce Sterling told me he was in a discussion with a guy who was declaiming about how important MONDO 2000 was and how much he was influenced by it. I looked him up and learned that he had been involved in the launch of Ethereum, and since then has created something else called Mattereum. I was just starting to launch an NFT project called ‘I’m Against NFTs’. I started work on the project with former MONDO 2000 participants at a time of gold fever for visual and audio NFTs. So I called upon Vinay, among others, to guide me through the fog around bringing NFTs to market.

As things evolved, the project became a song and an immersive environment with PlayLa.bZ and associates that was presented at MOZFest. The NFT fever abated, and the “I’m Against NFTs” offering was shelved. But I’d grown to treasure my connection to Vinay and to admire his philosophic depth and sharp intelligence regarding blockchain politics and the state of the world in general.

As I was completing my second column here on the topic of Risk and Precarity in Web3, I realized that I had to lean on Vinay’s wit and wisdom for “Risk and Precarity Part 3: Possible Solutions.” Here I present our conversation about the issues raised by my earlier columns.

Vinay Gupta helped to coordinate the release of the blockchain platform Ethereum, and is the founder and current CEO of Mattereum, which offers legal protections for physical assets sold on the blockchain. He’s clearly had a full life and has done a lot of other amazing stuff, some of which comes up during this conversation.


Ben Goertzel On How AGI Toddlers Will Share Decentralized Knowledge Graphs | Highlights from Episode 10

David Hanson On Teaching Compassion to Embodied AGIs | Highlights from Episode 10

Idorus of our Imagination: Neuro-sama, Culture and Connection in an AI World

Don’t look at the Idoru’s face. She is not flesh; she is information. She is the tip of an iceberg, no, an Antarctica, of information. Looking at her face would trigger it again: she was some unthinkable volume of information.

William Gibson, Idoru

Some prophets of the Singularity say neural nets and LLMs like breakout star ChatGPT are the beginning of the end; they mean that humanity’s evolution to techno-sapien is nearly complete. Like the Neanderthals before us, Homo sapiens may be in its twilight – an extinction event we may not even notice.

Soon, an unimaginably advanced AI, gluttonously feeding on the dataways of the internet, will emerge. Life 3.0, as Max Tegmark calls it. An ascension to power so sudden that, unlike Skynet, it won’t need any explosions. Humanity may or may not survive, but either way the torch of our destiny will have been passed along to our digital eidolons, and the future will be theirs to create.

A Rewiring of the Global Economy

Slightly overdramatic? Many in the know say it’s not dramatic enough. What is sure is that many people in previously secure employment are going to find their job is not so secure after all. The long tail of content creators doing workaday commissions may find clients a lot harder to find. Yet lawyers, pilots, software designers are all more at risk than you might think. The economy is going to get rewired and, short of a neo-luddite revolution, you are more likely than not to be in the firing line.

Nonsense, sceptics retort. These bland media talking points are no cause for concern. But even so: yes, LLMs may replace the need for content writers (help me!). Sure, tech will scythe down some inefficient admin jobs, streamline the nuts and bolts of our racing enterprises, perhaps help software patches get deployed faster and see developers get a pay cut. ‘But moi? No – what I do is essentially human. An AI could never displace me.’ For example, a streamer, whose entire business is their own personality, may scoff at the idea of an AI taking their job…

Meet Neuro-Sama – An AI Twitch Streamer

Meet Neuro-sama. An AI streamer that uses an LLM to formulate responses, synthesises a voice using text-to-speech, and is visually compiled in Unity.

Neuro-sama has already become one of Twitch’s largest streamers. Very much in the ‘weeaboo’ mold, she sings karaoke, plays Minecraft, and reacts to viewers’ questions. She tells meandering and random stories – and every so often, she becomes slightly deranged. Tormented by the Antarctic ice floes of information drifting through her soul, she can bug out, become hostile, and attack her chat. Her captivated audience affectionately know her as ‘EvilNeuro’, and call her ‘Neuropossesed’ as she condemns them for the tortured, trapped life she leads for their enjoyment.

She is, in many ways, a vision of William Gibson’s novel Idoru come true. The novelist who coined the word ‘cyberspace’, launched the cyberpunk genre, and was the imaginative underwriter for a whole industry of poets and novelists, has seemingly got another era-defining prediction on his CV. This one may have greater ramifications than any that came before. In the novel, the Idoru is a synthetic holographic J-pop idol who is the world’s biggest superstar and who, with the help of nano-technology, wants to get married and become ‘real.’

‘She is a personality-construct, a congeries of software agents, the creation of information-designers.’

Heady stuff and, when Gibson wrote it, perhaps just a gloriously titillating tech fantasy for his punky caper plot.

Now, it’s real. It’s happening right now. We’ve already seen culture being digitised with ABBA’s ‘Abbatars’, a holographic stage show that plans to run, effectively, forever. Neuro-sama is the next step in that evolution. An entirely synthetic cultural product. An Idoru for the techno-sapien generation.

All Too Human

A sceptic may see this as an anomaly, a natural novelty emerging from an AI-focused zeitgeist. Yet novelties are seen, remarked upon, and abandoned. Neuro-sama has thousands of daily, recurring viewers, who pay actively for her performances and for her company, who feel connection to her in a way they do not with human streamers. She is the first in a wave of AIs that will provide the connection that is so sorely lacking in a disconnected world.

‘Her’, starring Joaquin Phoenix, explores the way that an AI can be there 24/7 in a way no human can. An AI who can sift through the emotional gibbering of information that we spew constantly and know what to do. An entity who does not judge, and only comforts. It shows how this digital creature can take primacy in the life of a lonely person. It’s not just fiction; we’ve seen it in reality too. A woman recently married her AI husband, whilst a man in Japan married his holographic wife (only for the company to savagely take it away from him).

Culture, Love, and Connection in the Datastreams

Culture, connection, even love. These Idoru may well infiltrate every aspect of our human lives – even the parts we consider most distinctly human of all. What makes us laugh and cry, what makes us yearn to create, what drives us to improve ourselves. The endorphin orchestra that daily feeds our brains needs constant violins.

As large Twitch streamers and YouTubers quit en masse, citing stress and the need to be ‘always on’, week after week, year after year, for fear of their subscriber count dropping, the vacuum in entertainment grows larger for AI, which feels no such pain of mortal flesh, to step in. An AI-made culture never takes a break.

Yet what culture could be left? Just a relentless march of regurgitated images, flashing brightly across brains too doused in stimuli to tell the difference anymore, falling in love with the Idoru of our recycled imagination.

