A dangerous label

Imagine if I said, “Some Christians deny the reality of biological evolution, therefore all Christians deny the reality of biological evolution.”

Or that some Muslims believe that apostates should be put to death, therefore all Muslims share that belief.

Or that some atheists (Stalin and Mao, for example) caused the deaths of millions of people, therefore atheism itself is a murderous ideology.

Or, to come closer to home (for me: I grew up in Aberdeenshire, Scotland), that some Scots are mean with money, therefore all Scots are mean with money. (Within Scotland itself, that unkind stereotype exists with a twist: allegedly, all Aberdonians are mean with money.)

In all these cases, you would say that’s unwarranted labeling. Spreading such stereotypes is dangerous. It gets in the way of a fuller analysis and deeper appreciation. Real-life people are much more varied than that. Any community has its diversity.

Well, I am equally shocked by another instance of labeling. That involves the lazy concept of TESCREAL – a concept that featured in a recent article here on Mindplex Magazine, titled “TESCREALism: Has The Silicon Valley Ruling Class Gone To Crazy Town? Émile Torres In Conversation With R.U. Sirius”.

When I read the article, my first thought was: “Has Mindplex gone to Crazy Town?”

The concept of TESCREAL, which has been promoted several times in various locations in recent months, contracts a rich and diverse set of ideas down to a vastly over-simplified conclusion. It suggests that the worst aspects of anyone who holds any of the beliefs wrapped up in that supposed bundle of ideas can be attributed, with confidence, to other people who hold just some of these ideas.

Worse, it suggests that the entire “ruling class” of Silicon Valley subscribe to the worst of these beliefs.

It’s as if I picked a random atheist and insisted that they were as murderous as Stalin or Mao.

Or if I picked a random Muslim and insisted that they wished for the deaths of every person (apostate) who had grown up with Muslim faith and subsequently left that faith behind.

Instead of that kind of contraction of ideas, what the world badly needs nowadays is an open-minded exploration of a wide set of complex and subtle ideas.

Not the baying for blood which seems to motivate the proponents of the TESCREAL analysis.

Not the incitement to hatred towards the entrepreneurs and technologists who are building many remarkable products in Silicon Valley – people who, yes, do need to be held to account for some of what they’re doing, but who are by no means a uniform camp!

I’m a T but not an L

Let’s take one example: me.

I publicly identify as a transhumanist – the ‘T’ of TESCREAL.

The word ‘transhumanist’ appears on the cover of one of my books, and the related word ‘transhumanism’ appears on the cover of another one.

As it happens, I’ve also been a technology executive. I was mainly based in London, but I was responsible for overseeing staff in the Symbian office in Redwood City in Silicon Valley. Together, we envisioned how new-fangled devices called ‘smartphones’ might in due course improve many aspects of the lives of users. (And we also reflected on at least some potential downsides, including the risks of security and privacy violations, which is why I championed the new ‘platform security’ redesign of the Symbian OS kernel. But I digress.)

Since I am ‘T’, does that mean, therefore, that I am also ESCREAL?

Let’s look at that final ‘L’. Longtermism. This letter is critical to many of the arguments made by people who like the TESCREAL analysis.

‘Longtermism’ is the belief that the needs of potentially vast numbers of as-yet unborn (and unconceived) people in future generations can outweigh the needs of people currently living.

Well, I don’t subscribe to it. It doesn’t guide my decisions.

I’m motivated by the possibility of technology to vastly improve the lives of everyone around the world, living today. And by the need to anticipate and head off potential catastrophe.

By ‘catastrophe’, I mean anything that kills large numbers of people who are currently alive.

The deaths of 100% of those alive today will wipe out humanity’s future, but the deaths of ‘just’ 90% of people won’t. Longtermists are fond of pointing this out, and while it may be theoretically correct, it doesn’t provide any justification for ignoring the needs of present-day people in order to raise the probability of larger numbers of future people being born.

Some people have said they’re persuaded by the longtermist argument. But I suspect they are only a small minority of rather intellectual people. My experience with people in Silicon Valley, and with others who are envisioning and building new technologies, is that these abstract longtermist considerations do not guide their daily decisions. Far from it.

Concepts are complicated

A larger point needs to be made here. Concepts such as ‘transhumanism’ and ‘longtermism’ each embody rich variety.

It’s the same with all the other components of the supposed TESCREAL bundle: E for Extropianism, S for Singularitarianism, C for Cosmism, R for Rationalism, and EA for Effective Altruism.

In each case, we should avoid contractionism – thinking that if you have heard one person who defends that philosophy expressing one opinion, then you can deduce what they think about all other matters. In practice, people are more complicated – and ideas are more complicated.

As I see it, parts of each of the T, E, S, C, R, and EA philosophies deserve wide attention and support. But if you are hostile, and do some digging, you can easily find people, from within the communities around each of these terms, who have said something despicable or frightening. And then you can (lazily) label everyone else in that community with that same unwelcome trait. (“Seen one; seen them all!”)

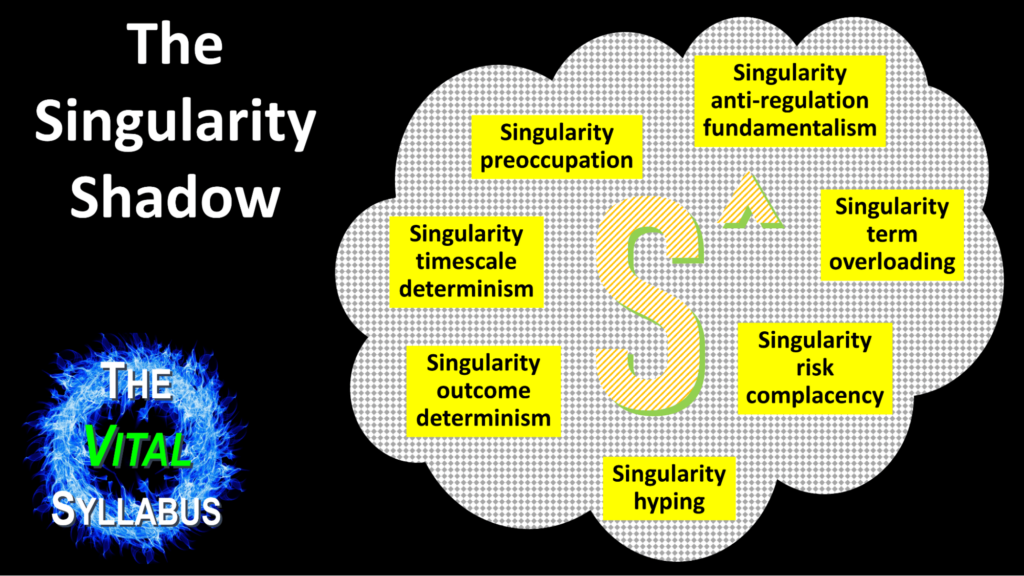

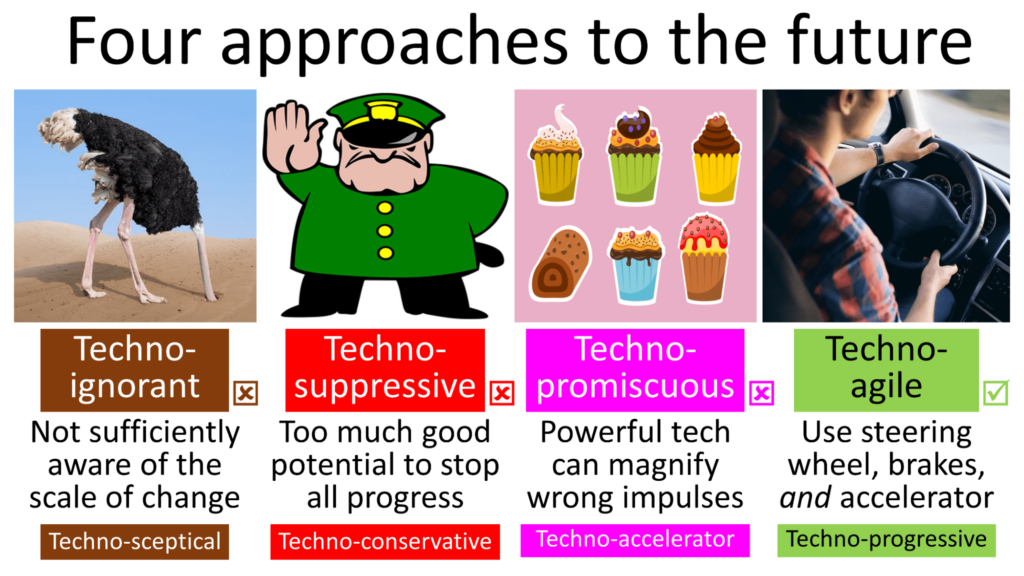

These extended communities do have some people with unwelcome traits. Indeed, each of T and S has attracted what I call a ‘shadow’ – a set of associated beliefs and attitudes that are deviations from the valuable core ideas of the philosophy. Here’s a picture I use of the Singularity shadow:

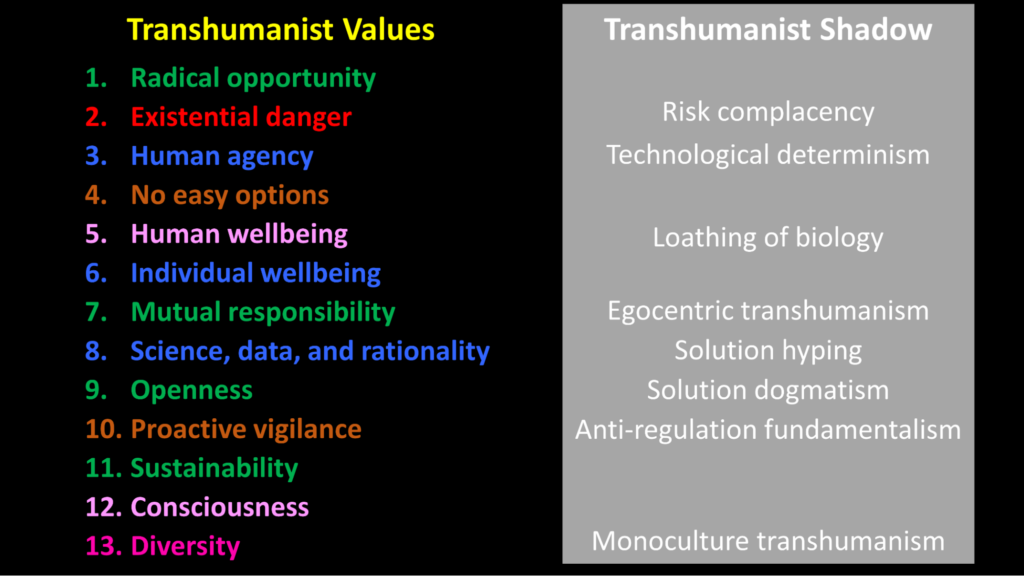

And here’s a picture of the transhumanist shadow:

(In both cases, you can click on the caption links to view a video that provides a fuller analysis.)

As you can see, the traits in the transhumanist shadow arise when people fail to uphold what I have listed as ‘transhumanist values’.

The existence of these shadows is undeniable, and unfortunate. The beliefs and attitudes in them can deter independent observers from taking the core philosophies seriously.

In that case, you might ask, why persist with the core terms ‘transhumanism’ and ‘singularity’? Because there are critically important positive messages in both these philosophies! Let’s turn to these next.

The most vital foresight

Here’s my 33-word summary of the most vital piece of foresight that I can offer:

Oncoming waves of technological change are poised to deliver either global destruction or a paradise-like sustainable superabundance, with the outcome depending on the timely elevation of transhumanist vision, transhumanist politics, and transhumanist education.

Let’s cover that again, more slowly this time.

First things first. Technological changes over the next few decades will place vast new power in billions of human hands. Rather than focusing on the implications of today’s technology – significant though they are – we need to raise our attention to the even larger implications of the technology of the near future.

Second, these technologies will magnify the risks of humanitarian disaster. If we are already worried about these risks today (as we should be), we should be even more worried about how they will develop in the near future.

Third, the same set of technologies, handled more wisely, and vigorously steered, can result in a very different outcome: a sustainable superabundance of clean energy, healthy nutrition, material goods, excellent health, all-round intelligence, dynamic creativity, and profound collaboration.

Fourth, the biggest influence on which outcome is realized is the widespread adoption of transhumanism. This in turn involves three activities:

• Advocating transhumanist philosophy as an overarching worldview that encourages and inspires everyone to join the next leap upward on life’s grand evolutionary ladder: we can and should develop to higher levels, physically, mentally, and socially, using science, technology, and rational methods.

• Extending transhumanist ideas into real-world political activities, to counter very destructive trends in that field.

• Underpinning the above initiatives: a transformation of the world of education, to provide everyone with skills suited to the very different circumstances of the near future, rather than the needs of the past.

Finally, overhanging the momentous transition that I’ve just described is the potential of an even larger change, in which technology moves ahead yet more quickly, with the advent of self-improving artificial intelligence with superhuman levels of capability in all aspects of thinking.

That brings us to the subject of the Singularity.

The Singularity is the point in time when AIs could, potentially, take over control of the world from humans. The fact that the Singularity could happen within a few short decades deserves to be shouted from the rooftops. That’s what I do, some of the time. That makes me a singularitarian.

But it doesn’t mean that I, or others who are likewise trying to raise awareness of this possibility, fall into any of the traits in the Singularity Shadow. It doesn’t mean, for example, that we’re all complacent about risks, or all think that it’s basically inevitable that the Singularity will be good for humanity.

So, Singularitarianism (S) isn’t the problem. Transhumanism (T) isn’t the problem. Nor, for that matter, does the problem lie in the core beliefs of the E, C, R, or EA parts of the supposed TESCREAL bundle. The problem lies somewhere else.

What should worry us: not TESCREAL, but CASHAP

Rather than obsessing over a supposed TESCREAL takeover of Silicon Valley, here’s what we should actually be worried about: CASHAP.

C is for contractionism – the tendency to push together ideas that don’t necessarily belong together, to overlook variations and complications in people and in ideas, and to insist that the core values of a group can be denigrated just because some peripheral members have some nasty beliefs or attitudes.

(Note: whereas the fans of the TESCREAL concept are guilty of contractionism, my alternative concept of CASHAP is different. I’m not suggesting the ideas in it always belong together. Rather, each of the individual ideas that make up CASHAP is detrimental in its own right.)

A is for accelerationism – the desire to see new technologies developed and deployed as fast as possible, under the irresponsible belief that any flaws encountered en route can always be easily fixed in the process (“move fast and break things”).

S is for successionism – the view that if superintelligent AI displaces humanity from being in control of the planet, that succession should automatically be welcomed as part of the grand evolutionary process – regardless of what happens to the humans in the process, regardless of whether the AIs have sentience and consciousness, and indeed regardless of whether these AIs go on to destroy themselves and the planet.

H is for hype – believing ideas too easily because they fit into your pre-existing view of the world, rather than using critical thinking.

AP is for anti-politics – believing that politics always makes things worse, getting in the way of innovation and creativity. In reality, good politics has been incredibly important in improving the human condition.

Conclusion

I’ll conclude this article by emphasizing the positive opposites to the undesirable CASHAP traits that I’ve just listed.

Instead of contractionism, we must be ready to expand our thinking, and have our ideas challenged. We must be ready to find important new ideas in unexpected places – including from people with whom we have many disagreements. We must be ready to put our emotional reactions on hold from time to time, since our prior instincts are by no means an infallible guide to the turbulent new times ahead.

Instead of accelerationism, we must use a more sophisticated set of tools: sometimes braking, sometimes accelerating, and doing a lot of steering too. That’s what I’ve called the technoprogressive (or techno-agile) approach to the future.

Instead of successionism, we should embrace transhumanism: we can, and should, elevate today’s humans towards higher levels of health, vitality, liberty, creativity, intelligence, awareness, happiness, collaboration, and bliss. And before we press any buttons that might lead to humanity being displaced by superintelligent AIs that might terminate our flourishing, we need to research a whole bunch of issues a lot more carefully!

Instead of hype, we must recommit to critical thinking, becoming more aware of any tendencies to reach false conclusions, or to put too much weight on conclusions that are only tentative. Indeed, that’s the central message of the R (rationalism) part of TESCREAL, which makes it all the more ‘crazy town’ that R is held in contempt by that contractionist over-simplification.

Instead of anti-politics, we must clarify and defend what has been called ‘the narrow path’ (or sometimes, simply, ‘future politics’): the path that lies between states having too little power (leaving societies hostage to destructive cancers that can grow in our midst) and states having too much power (unmatched by the counterweight of a ‘strong society’).

