Bitcoin is back in your friends’ and family’s group chats, as the world’s most valuable digital asset edged past its prior all-time high in dramatic fashion. The months of sustained growth, which also saw other crypto niches like AI cryptocurrencies (riding the artificial intelligence trend) and memecoins (easy-to-understand mainstream tokens) balloon in value, looked unstoppable once most of the Grayscale spot ETF redemptions had played out, and investors are frothing at the sky-high predictions they are starting to hear across social media and cable news.

After spending over two years trading far below its November 2021 peak, Bitcoin defied the naysayers by skyrocketing past $73,000 to set a new all-time high of $73,750 on March 14th.

This latest achievement capped an extraordinary bull run for the crypto market leader, which saw its price increase by over 50% so far in 2024 amid frenzied trading activity. On the day it reached its new record high, more than $100 billion worth of Bitcoin changed hands as investors piled in, hoping to ride the wave higher.

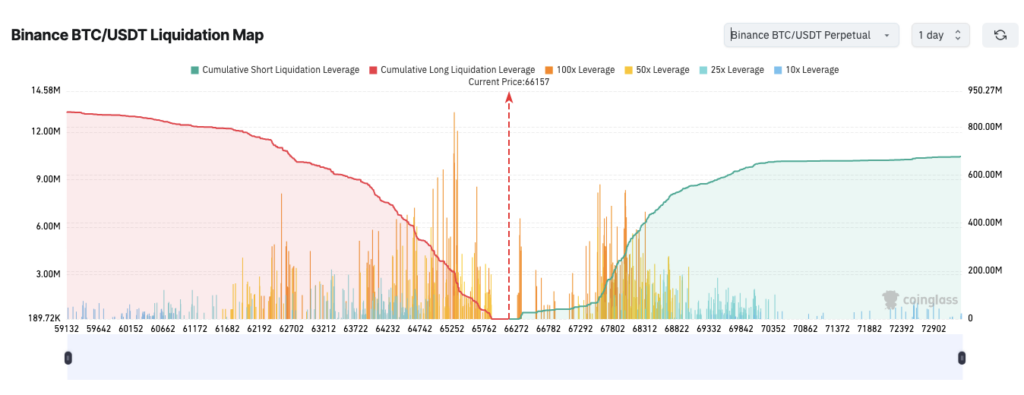

Bitcoin’s new personal best above $73,000 follows a record set earlier in the month, when it topped $69,000 on 5 March. That level proved too much to turn from resistance into support and triggered a cascade of liquidations: the price plunged by double digits, falling nearly 15% to $59,000, before staging a V-shaped recovery to the new high and then settling back to the $71,000 where it currently trades.

This whiplash-inducing price action underscores the intense tug-of-war still playing out across crypto markets, where TradFi whales have now entered the ring and brought with them some heavy bags to throw around.

So what forces converged to propel the world’s largest cryptocurrency to such lofty new heights in the first quarter of 2024? As is often the case in these bullish periods, the rally was fueled by a perfect storm of optimistic narratives and real-world regulatory and economic developments. We covered many of these in last year’s 2024 Crypto Bull Run article, which is well worth revisiting.

Bitcoin spot ETFs create insatiable BTC demand

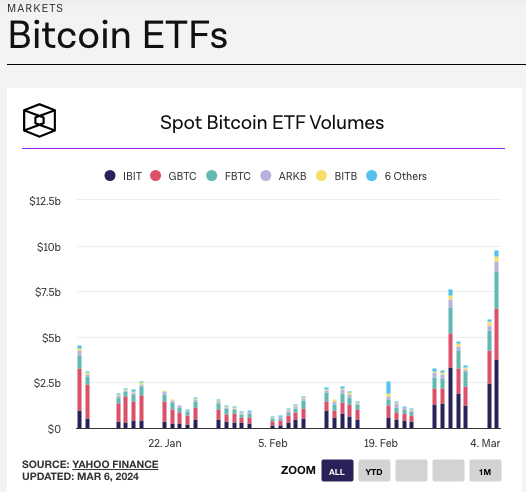

Reviving interest in Bitcoin this year were the highly anticipated launches of spot Bitcoin exchange-traded funds (ETFs) by major financial players like BlackRock, Fidelity, and Grayscale. After years of rejections by US regulators, multiple spot Bitcoin ETFs finally received approval in January 2024.

These ETFs allow institutional and retail investors to gain exposure to Bitcoin’s price movements through a regulated, familiar product that directly holds the underlying crypto assets. Huge sums of investment capital flooded into these ETFs right out of the gate, creating immense buy pressure in the spot Bitcoin markets as the funds raced to accumulate enough BTC to back their fund shares.

Just two months after launching, the spot Bitcoin ETFs had already vacuumed up a staggering combined total of over $45 billion in assets under management, representing around 684,000 BTC, or over 3% of the total circulating supply. Inflows showed no signs of stopping, with BlackRock’s fund alone reaching $10 billion in just seven weeks.
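As a quick sanity check on the “over 3%” figure, the arithmetic fits in a few lines of Python. The inputs are only the numbers quoted in this article plus Bitcoin’s 21 million hard cap; since the exact circulating supply on the day isn’t stated, this back-of-envelope sketch assumes it lies somewhere between the article’s “over 19 million” mined coins and the 21 million cap, and brackets the ETF share accordingly.

```python
# Figures quoted in the article (assumed inputs for a back-of-envelope check)
ETF_HOLDINGS_BTC = 684_000          # combined spot-ETF holdings, in BTC
ETF_AUM_USD = 45_000_000_000        # combined assets under management, in USD
MINED_BTC_FLOOR = 19_000_000        # "over 19 million Bitcoin already mined"
MAX_SUPPLY_BTC = 21_000_000         # Bitcoin's hard cap

# More coins in circulation means a smaller ETF share, so these two supply
# figures bound the true percentage from above and below.
share_high = ETF_HOLDINGS_BTC / MINED_BTC_FLOOR
share_low = ETF_HOLDINGS_BTC / MAX_SUPPLY_BTC

# Dollars held divided by coins held gives the average BTC price the AUM implies.
implied_price_usd = ETF_AUM_USD / ETF_HOLDINGS_BTC

print(f"ETF share of supply: {share_low:.2%} to {share_high:.2%}")
print(f"Implied BTC price: ${implied_price_usd:,.0f}")
```

Either way the share lands a little above 3%, and the implied price of roughly $65,800 per coin is in line with where Bitcoin was trading in early March.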

Bitcoin Halving

Another major factor driving Bitcoin’s rally has been the building anticipation of the next ‘halving’ event for the cryptocurrency, expected in April 2024. This programmatic halving of Bitcoin’s mining reward happens automatically every 210,000 blocks, or roughly every four years, cutting the flow of new BTC hitting the market by 50%. The upcoming halving will drop the block reward from 6.25 to 3.125 BTC, four halvings down from the original 50 BTC reward paid from Bitcoin’s launch in 2009 until the first halving in 2012. With over 19 million Bitcoin already mined (and possibly a quarter of it lost), there is less and less to go around. Previous halvings reliably preceded massive price increases, as the shrinking supply dynamic helped fuel further buying demand.
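The halving schedule is simple enough to express in code. The minimal Python sketch below is modeled on the block-subsidy rule in Bitcoin’s reference client (Bitcoin Core’s `GetBlockSubsidy`): the reward starts at 50 BTC, counted in satoshis (100 million per BTC), and is shifted right by one bit every 210,000 blocks. The function and constant names here are illustrative, not Bitcoin Core’s own.

```python
COIN = 100_000_000             # satoshis per bitcoin
HALVING_INTERVAL = 210_000     # blocks between reward halvings (~4 years)
INITIAL_SUBSIDY = 50 * COIN    # 50 BTC per block at launch, in satoshis


def block_subsidy(height: int) -> int:
    """Return the newly minted reward, in satoshis, for a block at `height`."""
    halvings = height // HALVING_INTERVAL
    # Shifting a 64-bit integer by 64 or more bits is undefined in C++,
    # so the reference rule returns zero explicitly; this sketch mirrors that.
    if halvings >= 64:
        return 0
    # Each halving is a one-bit right shift, i.e. integer division by 2.
    return INITIAL_SUBSIDY >> halvings


if __name__ == "__main__":
    # Print the reward at the first block of each of the first five eras.
    for era in range(5):
        start = era * HALVING_INTERVAL
        print(f"blocks from {start:,}: {block_subsidy(start) / COIN} BTC")
```

Running it prints one line per era, ending with 3.125 BTC for blocks from 840,000 onward, the fourth halving described above. Because the reward is tracked in whole satoshis, repeated halving eventually rounds it down to zero, which is why total issuance tops out just under 21 million BTC.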

Many analysts and industry experts have forecasted Bitcoin to surge well past $100,000 within 12-18 months after April’s halving, based on the historic patterns of past cycles where prices eventually climbed 10× or higher following the supply shocks. Speculators piled in early to front-run the perceived upside.

FASB Accounting

Bitcoin’s rally also got a boost in late 2023, when the Financial Accounting Standards Board (FASB) finalized long-awaited accounting rules for cryptocurrencies held by US companies. Starting in 2025 (with early adoption permitted), the new FASB guidance will require businesses to measure certain crypto assets at fair value on their balance sheets each reporting period. That marks a huge upgrade from the current treatment of Bitcoin as an ‘indefinite-lived intangible asset’, under which holdings could be written down for impairment but never written back up when prices recovered.

This rule change laid the groundwork for even broader institutional adoption of Bitcoin, removing one of the final barriers for public companies and investment funds looking to add exposure without complex workarounds.

US Elections, SEC and Interest Rate Cuts

Another important consideration is the stance of US policymakers on Bitcoin and crypto. While the SEC under Herr Gensler views most cryptos as securities, it treats Nakamoto’s coin as a commodity thanks to its proof-of-work origins. The Fed, whose FOMC meetings became horror movie nights for crypto investors in 2022 and 2023, will likely soon have to relent and start cutting its inflation-crunching high interest rates, or risk fighting a recession next. And of course, America will elect its next president this year, which should put crypto-bashing campaigns like Operation Chokepoint 2.0 under the spotlight. Even Donald Trump is warming to Bitcoin now.

What’s different this time round?

While the Bitcoin frenzy and associated wildly optimistic price forecasts provoked understandable flashbacks to the 2017 and 2021 crypto bubbles for market veterans, the 2024 rally did have some key fundamental differences.

This time, sustained upward price pressure came not from shadowy derivatives platforms like FTX and opaque stablecoin ecosystem hazards like you-know-who, but from transparent, battle-hardened regulated funds and publicly traded companies allocating directly to Bitcoin’s core layer-1. Record volumes and open interest on trusted exchanges (centralized and decentralized, spot and derivatives) reflected genuine liquidity.

And whereas the 2021 peak was fueled by hype around experimental blockchain technologies and corporate marketing gimmicks, Bitcoin’s 2024 renaissance had the more grounded narrative of finally fulfilling its long-awaited promise as a mature store of value and decentralized financial network, emboldened by the embrace of legacy institutional capital via ETFs, which may soon extend to Ethereum.

Of course, only time will tell if Bitcoin can maintain these lofty price levels or if the market mania will once again dissolve into despair. It’s easy to get caught up in the FOMO, and even easier to hit that sell button at a loss when market euphoria wears off and those 20% drops or weeks of sideways action break your resolve.

But in its latest epic price run, crypto’s top dog (sorry Doge) demonstrated its incredible ability to capture imaginations and animate markets around the world like no other asset.

Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.
