Introduction

Social networks have risen back to prominence over the course of 2023, with Elon Musk’s Twitter rebranding to X and headlines screaming about its battles with Meta’s Threads. Meta’s continued push into a VR-powered metaverse is finally picking up steam, as this astonishing new Lex Fridman podcast demonstrates.

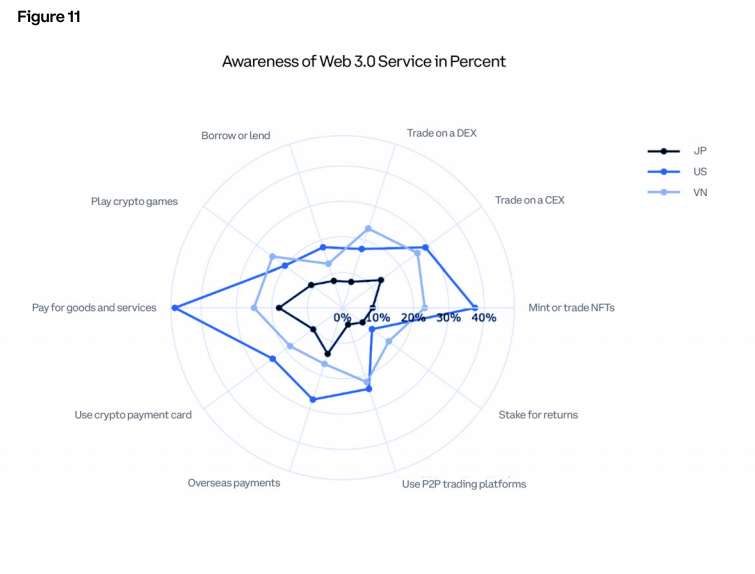

With 4.7 billion users plugged in for an average of 2.5 hours a day on traditional social media, creator economies are blossoming. It’s no surprise that their Web3 counterpart, Social Finance (SocialFi or SoFi), is high on the priority list for crypto investors and builders looking for new decentralized ways to monetize users’ social network clout.

Despite the lowest crypto VC funding since 2020, Q3 2023 saw multi-million investment rounds for SoFi projects such as RepubliK and Phaver. A lot of this can be attributed to the Base chain’s surprise hit Friend.tech, which allows social media accounts to be tokenized and traded. Its 2023 buzz is giving off some early Axie Infinity tingles, not for GameFi but this time for SocialFi.

Let’s take a closer look at what this emerging trend for crypto’s potential bull cycle of 2024/2025 is all about.

What is SocialFi?

Please note: ‘Social finance’ can also refer to another altruistic form of finance that supports real-world communities, which shouldn’t be confused with social network finance, the topic of this article.

SocialFi stands for “social finance” and combines the principles of social media and decentralized finance (DeFi) to offer a Web3 approach to social interactions through the power of blockchain technology. At its core, SocialFi is about empowering content creators, influencers, and participants who seek better control over their data and freedom of speech, aka data privacy and censorship-resistance.

These lofty ideals are admirable, but a network can only be successful if users are sufficiently incentivized to share their resources and time with it. This is where those quirky little digital assets come in, the ones we either love or hate, depending on where in the cycle we bought and sold them:

• Cryptocurrencies (e.g. ETH or a governance token) provide avenues for monetizing social media engagement and rewarding infrastructure and security providers.

• Non-fungible tokens (NFTs) establish digital ownership of assets and allow that ownership to be managed and transferred.

The Three Core Principles of SocialFi

So what makes SocialFi platforms different from their giant Web2 equivalents like Facebook, X (Twitter), YouTube, and Instagram?

Three words: Decentralization. Tokenization. Governance.

Decentralization: Distributed control

Decentralization is the backbone of SoFi, setting it apart from social media platforms like Facebook and Twitter. Unlike these centralized platforms, where a single company’s servers host all user data and content, SocialFi platforms spread data across a decentralized network. This shift in architecture gives users more control over their own data and enhances the platform’s resistance to censorship and major data breaches.

The level of decentralization depends on the underlying blockchain; Bitcoin and Ethereum are highly decentralized, making them secure and resilient, while others are ehhh, not so much. Tools like DappRadar and DeFiLlama can help you gauge the health of a Social Finance project.

Tokenization: Quantifying social influence

Tokenization is another core principle in SocialFi. It transforms the fuzzy idea of social influence into a measurable asset. Users earn tokens based on their contributions to the community, and these tokens are multifunctional. They can be used for micro-payments, trading, or even voting on platform changes. This token-based economy is made possible through smart contracts, which autonomously distribute value to users, providing a reward to make the platform more engaging.
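To make the idea of contribution-based rewards concrete, here is a minimal sketch of a pro-rata payout. It is an illustration only, not the method of any particular platform: the `distribute_rewards` function, the contribution points, and the reward pool size are all hypothetical, and a real SocialFi project would run this kind of logic in a smart contract rather than in Python.

```python
from decimal import Decimal


def distribute_rewards(contributions: dict[str, int], reward_pool: Decimal) -> dict[str, Decimal]:
    """Split reward_pool among users in proportion to their contribution points."""
    total = sum(contributions.values())
    if total == 0:
        # Nobody contributed this epoch, so nobody is paid.
        return {user: Decimal("0") for user in contributions}
    return {
        user: reward_pool * points / total
        for user, points in contributions.items()
    }


# Hypothetical contribution points for one reward epoch (posts, likes, etc.).
epoch_points = {"alice": 60, "bob": 30, "carol": 10}
payouts = distribute_rewards(epoch_points, Decimal("1000"))

for user, amount in payouts.items():
    print(f"{user}: {amount} tokens")
```

Using `Decimal` instead of floats avoids rounding drift when splitting token amounts, which matters once balances are tradable.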

Governance: Community decision-making

The third pillar of SocialFi is community governance, which puts decision-making power into the hands of the users. Unlike traditional platforms where changes are dictated by a managing company, SocialFi uses a DAO (Decentralized Autonomous Organization) to allow users to vote on significant changes or new features. This democratic approach fosters a sense of ownership and aligns the platform more closely with the needs and preferences of its community.
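One common way DAOs count votes is by token weight, where each voter’s say is proportional to the tokens they hold. The sketch below assumes that model plus a simple quorum rule; the `tally` function, the balances, and the quorum threshold are made up for illustration, and real DAOs differ widely in how they vote.

```python
from collections import defaultdict


def tally(votes: dict[str, str], balances: dict[str, int], quorum: int) -> str:
    """Return the option with the most token weight, or 'no quorum'."""
    weight: dict[str, int] = defaultdict(int)
    for voter, choice in votes.items():
        # Voters with no recorded balance carry zero weight.
        weight[choice] += balances.get(voter, 0)
    if sum(weight.values()) < quorum:
        return "no quorum"
    # Ties resolve to whichever option was recorded first.
    return max(weight, key=weight.get)


# Hypothetical governance token balances and votes on one proposal.
balances = {"alice": 500, "bob": 300, "carol": 150}
votes = {"alice": "no", "bob": "yes", "carol": "yes"}

result = tally(votes, balances, quorum=600)
print(result)
```

In this example one large holder outvotes two smaller ones, which is worth keeping in mind: token-weighted governance is only as democratic as its token distribution.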

How SocialFi democratizes social media

1. Monetization and Data Ownership

The sayings “There’s no such thing as a free lunch” and “If the product is free, then you are the product” ring especially true for social media platforms. These platforms exploited early Web2 users’ reluctance to pay for any online service or content during the 2000s and 2010s through a Faustian offering of free services. Years later, users learned their behavior had been recorded all along to build models that manipulate human online behavior for commercial and other purposes. Don’t be evil, my ass.

Traditional Web2 social media platforms have been criticized for their centralized control over user data and monetization strategies. Platforms like YouTube, Twitter and TikTok milk their users’ content and engagement to generate billions of dollars of revenue but share only a fraction of profits with the actual content creators. While this is starting to change and some Web2 platforms are onboarding their own tokens and even NFTs, it’s still too lopsided.

It was reported in 2022 that creators still rely on brand partnerships for up to 77% of their income, whereas subscriptions and tips make up around 1–3%. SocialFi platforms instead use social tokens or in-app utility tokens to manage incentives fairly. These tokens can be created by either the application or the user (a fan token), allowing creators to manage their own micro-economies.

2. Freedom of Speech

Another big bugbear with Web2 platforms, especially in these increasingly fractured and divisive political times, is the centralized decision-making process that often ends in some form of censorship.

There is sometimes a need to stop harmful content from being disseminated across the internet, but the question is who gets to do this. It can be a very bad thing to have a centralized arbiter of truth that stifles opposing views from controversial public figures (read the prescient books Animal Farm, 1984, and Brave New World). A decentralized curation process, aligned with Web3 ethos, could offer a fairer approach.

Social media initially created new communities and united old ones. Unfortunately, a weekly post or two by an average user doesn’t pay the bills for platforms. Controversy stimulates emotion and magnetizes user eyeballs, and that brings in dollars. So, biased algorithms were created to herd users into digital echo chambers and online political fight clubs. Web2 platforms are as complicit in spreading hatred across social media as any Tate or Trump.

SocialFi platforms beat censorship through decentralized curation. All publicly viewable posts can be swiftly tagged based on their topic and nature of the words used. Individual nodes can be assigned the control and responsibility over filtering.
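The tag-then-filter idea can be sketched in a few lines. This is a toy model under stated assumptions: the keyword rules in `TAG_RULES`, the `Post` and `Node` classes, and the node names are all hypothetical, and a real network would use far richer classifiers than keyword matching.

```python
from dataclasses import dataclass, field

# Hypothetical keyword-to-tag rules, for illustration only.
TAG_RULES = {
    "politics": {"election", "senate"},
    "finance": {"token", "airdrop"},
}


@dataclass
class Post:
    author: str
    text: str
    tags: set[str] = field(default_factory=set)


def tag_post(post: Post) -> Post:
    """Attach every tag whose keywords appear in the post text."""
    words = set(post.text.lower().split())
    post.tags = {tag for tag, keywords in TAG_RULES.items() if words & keywords}
    return post


@dataclass
class Node:
    name: str
    blocked_tags: set[str]

    def visible(self, posts: list[Post]) -> list[Post]:
        """Return the posts this node chooses to serve."""
        return [p for p in posts if not (p.tags & self.blocked_tags)]


posts = [
    tag_post(Post("alice", "New token airdrop today")),
    tag_post(Post("bob", "Election results are in")),
    tag_post(Post("carol", "Look at my cat")),
]

family_node = Node("family-friendly", {"politics"})
open_node = Node("open", set())

print([p.author for p in family_node.visible(posts)])
print([p.author for p in open_node.visible(posts)])
```

The point of the design is that every post still exists on the network; each node only decides what it serves, so no single party can remove content for everyone.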

Three Key Challenges for SocialFi

Scalability

One of the primary challenges for SocialFi is scalability. Traditional social media platforms like Facebook generate massive amounts of data daily. Blockchain solutions like DeSo claim to address this issue through techniques like warp sync and sharding, but these solutions are yet to be stress-tested at scale during bull market volatility.

Sustainability

Another challenge is creating sustainable economic models. While many platforms promise high incentives, these are often short-term growth hacks. The models need to be stress-tested through various market cycles to ensure long-term viability. Token emission schedules are a related and intricate problem: release tokens too quickly and rewards dilute existing holders, release them too slowly and there is little to attract new users.

Security

Unfortunately, in blockchain your network is only as strong as its weakest link. The hacking incident on the Roll platform raised concerns about the safety of SocialFi platforms. In a field where smart contracts and hot wallets play such a crucial role, the threats from malicious or faulty code and from phishing scams must be overcome before we can expect mainstream adoption. This is where the concept of account abstraction (see my article on Vitalik’s 3 Ethereum transitions) should revolutionize user safety.

Ten Trending SocialFi Tokens for 2024

Below are some SoFi tokens that are currently trending.

1. Galxe: A Web3 social community project built on Ethereum with over 10 million active users.

2. Torum: A social media platform built on Binance Smart Chain that combines DeFi and NFTs.

3. Friend.tech: A decentralized social media platform built on Base Network that allows users to tokenize their social network.

4. NestedFi: A SocialFi project that supports building, managing portfolios, copying trades of the best traders, and supporting social trading with just one click.

5. STFX: A SocialFi protocol for short-term digital asset management.

6. Hooked Protocol: A launchpad project (recently invested in by Binance) that supports SocialFi.

7. Lens Protocol: A social graph protocol running on the Polygon network, developed by the founder of Aave.

8. Safereum: A decentralized memecoin project with decent performance.

9. BitClout: A social token project that gained significant attention by allowing brands, organizations, and individuals to create their own tokens.

10. Rally: A social token project that allows creators to monetize their content and engage with their audience.

Disclaimer: I do not hold any of these tokens, and you shouldn’t invest in any of them without doing proper research. They are very risky and require an advanced grasp of crypto. You’ll need to understand their tokenomics, such as vesting and unlock schedules and fully diluted valuation, as well as the team behind them and their supposed value proposition and use cases. Many of these projects will likely not be around in 5 years’ time.
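As a quick worked example of why fully diluted valuation matters, the sketch below compares it with market cap for a made-up token. The price and supply figures are invented for illustration and do not describe any project listed above.

```python
def market_cap(price: float, circulating_supply: float) -> float:
    """Value of the tokens that are currently tradable."""
    return price * circulating_supply


def fully_diluted_valuation(price: float, max_supply: float) -> float:
    """Value if every token that will ever exist traded at today's price."""
    return price * max_supply


# Hypothetical token: $0.50 each, 20M circulating out of a 100M maximum.
price = 0.50
circulating = 20_000_000
max_supply = 100_000_000

mc = market_cap(price, circulating)
fdv = fully_diluted_valuation(price, max_supply)
locked_share = 1 - circulating / max_supply

print(f"Market cap: ${mc:,.0f}")
print(f"Fully diluted valuation: ${fdv:,.0f}")
print(f"Share of supply still to be unlocked: {locked_share:.0%}")
```

A large gap between the two numbers means most of the supply has yet to hit the market, so future unlocks can put heavy selling pressure on the price.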

SoFi So Good. What’s next?

By combining the principles of social media and decentralized finance, SoFi can reshape the social media landscape and help the normal user reclaim rightful ownership of their data, and monetize it fairly and transparently if they choose to do so.

SocialFi is an amalgamation of Web3 and social media trends, and thus perfectly geared towards boosting creator economy models. However, as we’ve seen with other trends such as Play-to-Earn (P2E), and even DeFi to some extent, early hype and traction mean nothing if they’re not built on something of substance. SoFi experiences will need to have engagement and staying power if they are to retain real users and the network effects that come with them.

SocialFi can therefore only claim to be robust once it has weathered a few market downturns. With an evolving Web2 industry and new frontiers like the metaverse and artificial intelligence on the doorstep, the opportunities are endless.

Just one last thing: Please, let’s ban infinite scroll.

Let us know your thoughts! Sign up for a Mindplex account now, join our Telegram, or follow us on Twitter.
